1. Introduction

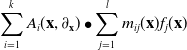

Our calculus class taught us how to solve ordinary differential equations (ODE) of the form

\begin{equation}
c_m \phi^{(m)}(z) + \cdots + c_2 \phi''(z) + c_1 \phi'(z) + c_0 \phi(z) \,=\, 0 . \tag{1.1}
\end{equation}

Here we seek functions $\phi = \phi(z)$ in one unknown z. The ODE is linear of order m, it has constant coefficients $c_i \in \mathbb{C}$, and it is homogeneous, meaning that the right-hand side is zero. The set of all solutions is a vector space of dimension m. A basis consists of m functions

\begin{equation}
z^a \cdot {\textrm{exp}}(u_i z) . \tag{1.2}
\end{equation}

Here $u_i$ is a complex zero with multiplicity larger than $a \in \mathbb{N}$ of the characteristic polynomial

\begin{equation}
p(x) \,=\, c_m x^m + \cdots + c_2 x^2 + c_1 x + c_0 . \tag{1.3}
\end{equation}

Thus solving the ODE (1.1) means finding all the zeros of (1.3) and their multiplicities.
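The basis (1.2) can be checked with exact integer arithmetic via the shift rule $p(\tfrac{d}{dz})\bigl(q(z)\,e^{uz}\bigr) = e^{uz}\,p(\tfrac{d}{dz}+u)\,q(z)$, which turns the ODE into polynomial arithmetic. The following Python sketch is only an illustration: the helper names and the sample polynomial $p(x) = x^3 - 3x + 2 = (x-1)^2(x+2)$ are our own choices. It confirms that $e^{z}$, $z e^{z}$ and $e^{-2z}$ are annihilated, while $z^2 e^{z}$ is not, because the root 1 has multiplicity only 2.

```python
from math import comb


def derivative(q, k):
    """k-th derivative of a polynomial given by its coefficients (lowest degree first)."""
    for _ in range(k):
        q = [i * q[i] for i in range(1, len(q))]
    return q


def apply_ode(c, q, u):
    """Return r with p(d/dz)(q(z) e^{uz}) = r(z) e^{uz}.

    c = [c_0, ..., c_m] are the coefficients of p and q the coefficients of q(z).
    Uses p(d/dz)(q e^{uz}) = e^{uz} p(d/dz + u) q together with the binomial
    expansion (d/dz + u)^j = sum_k C(j, k) u^(j-k) (d/dz)^k.
    """
    r = [0] * len(q)
    for j, cj in enumerate(c):
        for k in range(j + 1):
            coeff = cj * comb(j, k) * u ** (j - k)
            for i, a in enumerate(derivative(q, k)):
                r[i] += coeff * a
    return r


# p(x) = x^3 - 3x + 2 = (x - 1)^2 (x + 2): root 1 has multiplicity 2, root -2 multiplicity 1.
c = [2, -3, 0, 1]
print(apply_ode(c, [1], 1))        # e^{z}:     [0]
print(apply_ode(c, [0, 1], 1))     # z e^{z}:   [0, 0]
print(apply_ode(c, [0, 0, 1], 1))  # z^2 e^{z}: [6, 0, 0], not a solution
print(apply_ode(c, [1], -2))       # e^{-2z}:   [0]
```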

We next turn to a partial differential equation (PDE) for functions $\phi\,:\, \mathbb{R}^2 \rightarrow \mathbb{R}$ that is familiar from the undergraduate curriculum, namely the one-dimensional wave equation

\begin{equation}
\frac{\partial^2 \phi}{\partial t^2} \,=\, c^2 \cdot \frac{\partial^2 \phi}{\partial z^2} . \tag{1.4}
\end{equation}

D’Alembert found in 1747 that the general solution is the superposition of traveling waves,

\begin{equation}
\phi(z,t) \,=\, f(z+ct) + g(z-ct), \tag{1.5}
\end{equation}

where f and g are twice differentiable functions in one variable. For the special parameter value $c=0$, the PDE (1.4) becomes $\phi_{tt} = 0$, and the general solution still has two summands:

\begin{equation}
\phi(z,t) \,=\, f(z) + t \cdot h'(z). \tag{1.6}
\end{equation}

We get this from (1.5) by replacing $g(z-ct)$ with $\frac{1}{2c}(h(z{+}ct) - h(z{-}ct))$ and taking the limit $c \rightarrow 0$. Here, the role of the characteristic polynomial (1.3) is played by the quadratic form

\begin{equation}
q_c(x_1,x_2) \,=\, x_2^2 - c^2 x_1^2 \,=\, (x_2 - c\,x_1)(x_2 + c\,x_1). \tag{1.7}
\end{equation}

The solutions (1.5) and (1.6) mirror the algebraic geometry of the conic $\{q_c=0\}$ for any $c \in \mathbb{R}$: for $c \neq 0$ it is a pair of distinct lines, and for $c = 0$ it is a double line.
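D’Alembert's formula (1.5), the $c = 0$ solution $f(z) + t\,h'(z)$, and the limit that links them can be checked numerically with centered second differences. The following Python sketch is only an illustration: the profiles $f = \sin$, $g(s) = e^{-s^2}$, $h = \tanh$, the speed $c = 1.5$ and the step size are our own choices, not from the text.

```python
import math

STEP = 1e-3  # finite-difference step (illustrative choice)


def second_diff(fun, z, t, var):
    """Centered second difference of fun(z, t) in the variable 'z' or 't'."""
    if var == "z":
        return (fun(z + STEP, t) - 2 * fun(z, t) + fun(z - STEP, t)) / STEP**2
    return (fun(z, t + STEP) - 2 * fun(z, t) + fun(z, t - STEP)) / STEP**2


def wave_residual(phi, c, z, t):
    """Approximate phi_tt - c^2 * phi_zz at the point (z, t)."""
    return second_diff(phi, z, t, "t") - c**2 * second_diff(phi, z, t, "z")


def f(s):
    return math.sin(s)


def g(s):
    return math.exp(-s * s)


def h(s):
    return math.tanh(s)


def dh(s):
    return 1 - math.tanh(s) ** 2  # derivative of tanh


C = 1.5


def dalembert(z, t):
    """Formula (1.5) with the sample profiles f and g."""
    return f(z + C * t) + g(z - C * t)


def degenerate(z, t):
    """The c = 0 solution f(z) + t * h'(z)."""
    return f(z) + t * dh(z)


print(wave_residual(dalembert, C, 0.3, 0.7))     # close to 0
print(wave_residual(degenerate, 0.0, 0.3, 0.7))  # close to 0

# (h(z + ct) - h(z - ct)) / (2c) approaches t * h'(z) as c -> 0.
z0, t0 = 0.3, 0.7
for c in (1e-1, 1e-2, 1e-3):
    quotient = (h(z0 + c * t0) - h(z0 - c * t0)) / (2 * c)
    print(c, abs(quotient - t0 * dh(z0)))  # error shrinks roughly like c^2
```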

Our third example is a system of three PDE for unknown functions

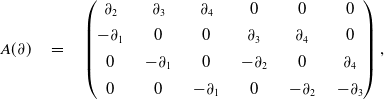

Namely, we consider the following linear PDE with constant coefficients:

The general solution to this system has nine summands, labeled

![]() $a,b, \ldots,h$

and

$a,b, \ldots,h$

and

![]() $(\tilde \alpha,\tilde \beta)$

:

$(\tilde \alpha,\tilde \beta)$

:

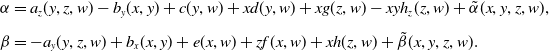

\begin{align} \alpha & = a_z(y,z,w)- b_y(x,y)+c(y,w)+xd(y,w)+xg(z,w)-xyh_z(z,w)+ \tilde \alpha(x,y,z,w), \nonumber\\[4pt] \beta & = -a_y(y,z,w)+b_x(x,y)+e(x,w)+zf(x,w) +xh(z,w)+ \tilde \beta(x,y,z,w) .\end{align}

\begin{align} \alpha & = a_z(y,z,w)- b_y(x,y)+c(y,w)+xd(y,w)+xg(z,w)-xyh_z(z,w)+ \tilde \alpha(x,y,z,w), \nonumber\\[4pt] \beta & = -a_y(y,z,w)+b_x(x,y)+e(x,w)+zf(x,w) +xh(z,w)+ \tilde \beta(x,y,z,w) .\end{align}

Here, a is any function in three variables, b, c, d, e, f, g, h are functions in two variables, and $\tilde \psi = (\tilde \alpha, \tilde \beta)$ is any function $\mathbb{R}^4 \rightarrow \mathbb{C}^2$ that satisfies the following four linear PDE of first order:

\begin{equation}
\frac{\partial \tilde\alpha}{\partial x} + \frac{\partial \tilde\beta}{\partial y} \,=\, \frac{\partial \tilde\alpha}{\partial y} + \frac{\partial \tilde\beta}{\partial z} \,=\, \frac{\partial \tilde\alpha}{\partial z} - \frac{\partial \tilde\alpha}{\partial w} \,=\, \frac{\partial \tilde\beta}{\partial z} - \frac{\partial \tilde\beta}{\partial w} \,=\, 0 . \tag{1.10}
\end{equation}

We note that all solutions to (1.10) also satisfy (1.8), and they admit the integral representation

\begin{equation}
\tilde\psi(x,y,z,w) \,=\, \int \begin{pmatrix} t \\ -s \end{pmatrix} {\textrm{exp}}\bigl(s^2 x + s t y + t^2 z + t^2 w\bigr) \, d\mu(s,t), \tag{1.11}
\end{equation}

where $\mu$ is a measure on $\mathbb{C}^2$. All functions in (1.9) are assumed to be suitably differentiable.

Our aim is to present methods for solving arbitrary systems of homogeneous linear PDE with constant coefficients. The input is a system like (1.1), (1.4), (1.8), or (1.10). We seek to compute the corresponding output (1.2), (1.5), (1.9), or (1.11), respectively. We present techniques that are based on the Fundamental Principle of Ehrenpreis and Palamodov, as discussed in the classical books [Reference Björk7, Reference Ehrenpreis17, Reference Hörmander23, Reference Palamodov32]. We utilize the theory of differential primary decomposition [Reference Cid-Ruiz and Sturmfels12]. While deriving (1.5) from (1.4) is easy by hand, getting from (1.8) to (1.9) requires a computer.

This article is primarily expository. One goal is to explain the findings in [Reference Chen, Cid-Ruiz, Härkönen, Krone and Leykin8–Reference Cid-Ruiz and Sturmfels12], such as the differential primary decompositions of minimal size, from the viewpoint of analysis and PDE. In addition to these recent advances, our development rests on a considerable body of earlier work. The articles [Reference Damiano, Sabadini and Struppa15, Reference Oberst29, Reference Oberst31] are especially important. However, there are also some new contributions in the present article, mostly in Sections 4, 5, and 6. We describe the first universally applicable algorithm for computing Noetherian operators.

This presentation is organized as follows. Section 2 explains how linear PDE are represented by polynomial modules. The Fundamental Principle (Theorem 2.2) is illustrated with concrete examples. In Section 3, we examine the support of a module and how it governs exponential solutions (Proposition 3.7) and polynomial solutions (Proposition 3.9). Theorem 3.8 characterizes PDE whose solution space is finite dimensional. Section 4 features the theory of differential primary decomposition [Reference Chen and Cid-Ruiz9, Reference Cid-Ruiz and Sturmfels12]. Theorem 4.4 shows how this theory yields the integral representations promised by Ehrenpreis–Palamodov. This result appeared implicitly in the analysis literature, but the present algebraic form is new. It is the foundation of our algorithm for computing a minimal set of Noetherian multipliers. This is presented in Section 5, along with its implementation in the command solvePDE in Macaulay2 [Reference Grayson and Stillman21].

The concepts of schemes and coherent sheaves are central to modern algebraic geometry. In Section 6, we argue that linear PDE are an excellent tool for understanding these concepts and for computing their behaviors in families. Hilbert schemes and Quot schemes make an appearance along the lines of [Reference Chen and Cid-Ruiz9, Reference Cid-Ruiz, Homs and Sturmfels11]. Section 7 is devoted to directions for further study and research in the subject area of this paper. It also features more examples and applications.

2. PDE and polynomials

Our point of departure is the observation that homogeneous linear partial differential equations with constant coefficients are the same as vectors of polynomials. The entries of the vectors are elements in the polynomial ring $R = K[\partial_{1}, \partial_{2}, \ldots,\partial_{n}]$, where K is a subfield of the complex numbers $\mathbb{C}$. In all our examples, we use the field $K = \mathbb{Q}$ of rational numbers. This has the virtue of being amenable to exact symbolic computation, e.g. in Macaulay2 [Reference Grayson and Stillman21].

For instance, in (1.1), we have

![]() $n=1$

. Writing

$n=1$

. Writing

![]() $\partial = \dfrac{\partial}{\partial z}$

for the generator of R, our ODE is given by one polynomial

$\partial = \dfrac{\partial}{\partial z}$

for the generator of R, our ODE is given by one polynomial

![]() $p(\partial) = c_0 + c_1 \partial + \cdots + c_m \partial^m$

, where

$p(\partial) = c_0 + c_1 \partial + \cdots + c_m \partial^m$

, where

![]() $c_0,c_1,\ldots,c_m \in K$

. For

$c_0,c_1,\ldots,c_m \in K$

. For

![]() $n \geq 2$

, we write

$n \geq 2$

, we write

![]() ${\bf z} = (z_1,\ldots,z_n)$

for the unknowns in the functions we seek, and the partial derivatives that act on these functions are

${\bf z} = (z_1,\ldots,z_n)$

for the unknowns in the functions we seek, and the partial derivatives that act on these functions are

![]() $\partial_i = \partial_{z_i} = \dfrac{\partial}{\partial z_i}$

. With this notation, the wave equation in (1.4) corresponds to the polynomial

$\partial_i = \partial_{z_i} = \dfrac{\partial}{\partial z_i}$

. With this notation, the wave equation in (1.4) corresponds to the polynomial

![]() $q_c(\partial) = \partial_2^2 - c^2 \partial_1^2 = (\partial_2 - c \partial_1)(\partial_2 + c \partial_1)$

with

$q_c(\partial) = \partial_2^2 - c^2 \partial_1^2 = (\partial_2 - c \partial_1)(\partial_2 + c \partial_1)$

with

![]() $n=2$

. Finally, the PDE in (1.8) has

$n=2$

. Finally, the PDE in (1.8) has

![]() $n=4$

and is encoded in three polynomial vectors

$n=4$

and is encoded in three polynomial vectors

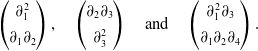

\begin{equation}\begin{pmatrix} \partial_1^2 \\[4pt] \partial_1 \partial_2 \end{pmatrix} , \quad \begin{pmatrix} \partial_2 \partial_3 \\[4pt] \partial_3^2 \end{pmatrix} \quad {\textrm{and}} \quad \begin{pmatrix} \partial_1^2 \partial_3 \\[4pt] \partial_1 \partial_2 \partial_4 \end{pmatrix} .\end{equation}

\begin{equation}\begin{pmatrix} \partial_1^2 \\[4pt] \partial_1 \partial_2 \end{pmatrix} , \quad \begin{pmatrix} \partial_2 \partial_3 \\[4pt] \partial_3^2 \end{pmatrix} \quad {\textrm{and}} \quad \begin{pmatrix} \partial_1^2 \partial_3 \\[4pt] \partial_1 \partial_2 \partial_4 \end{pmatrix} .\end{equation}

The system (1.8) corresponds to the submodule of

![]() $R^2$

that is generated by these three vectors.

$R^2$

that is generated by these three vectors.

We shall study PDE that describe vector-valued functions from n-space to k-space. To this end, we need to specify a space $\mathcal{F}$ of sufficiently differentiable functions such that $\mathcal{F}^k$ contains our solutions. The scalar-valued functions in $\mathcal{F}$ are either real-valued functions $\psi\,:\,\Omega \rightarrow \mathbb{R}$ or complex-valued functions $\psi\,:\,\Omega \rightarrow \mathbb{C}$, where $\Omega$ is a suitable subset of $\mathbb{R}^n$ or $\mathbb{C}^n$. Later we will be more specific about the choice of $\mathcal{F}$. One requirement is that the space $\mathcal{F}^k$ should contain the exponential functions

\begin{equation}
q({\bf z}) \cdot {\textrm{exp}}({\bf u}^t {\bf z}) \,=\, q(z_1,\ldots,z_n) \cdot {\textrm{exp}}(u_1 z_1 + \cdots + u_n z_n).
\end{equation}

Here ${\bf u} \in \mathbb{C}^n$ and q is any vector of length k whose entries are polynomials in n unknowns.

Remark 2.1 ($k=1$). A differential operator $p(\partial)$ in R annihilates the function ${\textrm{exp}}({\bf u}^t {\bf z})$ if and only if $p({\bf u}) = 0$. This is the content of [Reference Michałek and Sturmfels27, Lemma 3.25]. See also Lemma 3.26. If $p(\partial)$ annihilates all functions $q({\bf z}) \cdot {\textrm{exp}}( {\bf u}^t {\bf z})$, where q ranges over the polynomials of degree at most $d \geq 1$, then u is a point of multiplicity larger than d on the hypersurface $\{ p = 0 \}$. In the case $n=1$, when p is the characteristic polynomial (1.3), we have a solution basis of exponential functions (1.2).
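Remark 2.1 can be tested on small cases with exact arithmetic. The Python sketch below relies on the shift rule $p(\partial)\bigl(q\,{\textrm{exp}}({\bf u}^t{\bf z})\bigr) = {\textrm{exp}}({\bf u}^t{\bf z})\, p(\partial + {\bf u})\, q$ and stores polynomials as dictionaries that map exponent tuples to coefficients. The helper names `shift`, `apply_op` and `q_c` are our own. The example uses the wave operator $q_c(\partial) = \partial_2^2 - c^2\partial_1^2$. For $c = 0$ the conic is a double line, so each of its points is singular, and all multipliers of degree at most one survive. For $c = 2$, the smooth point ${\bf u} = (1,2)$ rejects the multiplier $z_2$.

```python
def shift(q, i, ui):
    """Apply (d/dz_i + u_i) to a polynomial q stored as {exponent tuple: coefficient}."""
    out = {}
    for e, a in q.items():
        out[e] = out.get(e, 0) + ui * a          # the u_i * q part
        if e[i] > 0:                               # the d/dz_i part
            d = e[:i] + (e[i] - 1,) + e[i + 1:]
            out[d] = out.get(d, 0) + e[i] * a
    return {e: a for e, a in out.items() if a != 0}


def apply_op(p, q, u):
    """Return r with p(d)(q(z) exp(u.z)) = r(z) exp(u.z), i.e. r = p(d + u) q."""
    total = {}
    for alpha, coeff in p.items():
        t = q
        for i, k in enumerate(alpha):
            for _ in range(k):
                t = shift(t, i, u[i])
        for e, a in t.items():
            total[e] = total.get(e, 0) + coeff * a
    return {e: a for e, a in total.items() if a != 0}


def q_c(c):
    """The wave operator d_2^2 - c^2 d_1^2 as {exponent tuple: coefficient}."""
    return {(0, 2): 1, (2, 0): -c * c}


one, z1, z2 = {(0, 0): 1}, {(1, 0): 1}, {(0, 1): 1}

# exp(u.z) is annihilated exactly when q_c(u) = 0.
print(apply_op(q_c(2), one, (1, 2)))  # {}            since 2^2 - 4 * 1^2 = 0
print(apply_op(q_c(2), one, (1, 1)))  # {(0, 0): -3}  since 1^2 - 4 * 1^2 = -3

# c = 0: every point of the double line x_2 = 0 is singular,
# and all multipliers of degree <= 1 give solutions.
print([apply_op(q_c(0), q, (7, 0)) for q in (one, z1, z2)])  # [{}, {}, {}]

# c = 2: at the smooth point u = (1, 2) the multiplier z_2 does not give a solution.
print(apply_op(q_c(2), z2, (1, 2)))  # {(0, 0): 4}
```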

Another requirement for the space $\mathcal{F}$ is that it is closed under differentiation. In other words, if $\phi = \phi(z_1,\ldots,z_n)$ lies in $\mathcal{F}$, then so does $\partial_i \bullet \phi = \dfrac{\partial \phi}{\partial z_i}$ for $i=1,2,\ldots,n$. The elements of $\mathcal{F}^k$ are vector-valued functions $\psi = \psi({\bf z})$. Their coordinates $\psi_i$ are scalar-valued functions in $\mathcal{F}$. All in all, $\mathcal{F}$ should be large, in the sense that it furnishes enough solutions. Formulated algebraically, we want $\mathcal{F}$ to be an injective R-module [Reference Lomadze25]. A more precise desideratum, formulated by Oberst [Reference Oberst28–Reference Oberst30], is that $\mathcal{F}$ should be an injective cogenerator.

Examples of injective cogenerators include the ring $\mathbb{C}[[z_1,\ldots,z_n]]$ of formal power series, the space $C^\infty(\mathbb{R}^n)$ of smooth complex-valued functions over $\mathbb{R}^n$, or more generally, the space $\mathcal{D}'(\mathbb{R}^n)$ of complex-valued distributions on $\mathbb{R}^n$. If $\Omega$ is any open convex domain in $\mathbb{R}^n$, then we can also take $\mathcal{F}$ to be $C^\infty(\Omega)$ or $\mathcal{D}'(\Omega)$. In this paper, we focus on algebraic methods. Analytic difficulties are mostly swept under the rug.

Our PDE are elements in the free R-module

![]() $R^k$

, that is, they are column vectors of length k whose entries are polynomials in

$R^k$

, that is, they are column vectors of length k whose entries are polynomials in

![]() $\partial = (\partial_1,\ldots,\partial_n)$

. Such a vector acts on

$\partial = (\partial_1,\ldots,\partial_n)$

. Such a vector acts on

![]() $\mathcal{F}^k$

by coordinate-wise application of the differential operator and then adding up the results in

$\mathcal{F}^k$

by coordinate-wise application of the differential operator and then adding up the results in

![]() $\mathcal{F}$

. In this manner, each element in

$\mathcal{F}$

. In this manner, each element in

![]() $R^k$

defines an R-linear map

$R^k$

defines an R-linear map

![]() $\mathcal{F}^k \rightarrow \mathcal{F}$

. For instance, the third vector in (2.1) is an element in

$\mathcal{F}^k \rightarrow \mathcal{F}$

. For instance, the third vector in (2.1) is an element in

![]() $R^2$

that acts on functions

$R^2$

that acts on functions

![]() $\psi \,:\, \mathbb{R}^4 \rightarrow \mathbb{C}^2$

in

$\psi \,:\, \mathbb{R}^4 \rightarrow \mathbb{C}^2$

in

![]() $\mathcal{F}^2$

as follows:

$\mathcal{F}^2$

as follows:

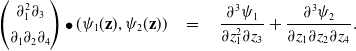

\begin{equation}\begin{pmatrix} \partial_1^2 \partial_3 \\[4pt] \partial_1 \partial_2 \partial_4 \end{pmatrix} \bullet(\psi_1 ({\bf z} ) ,\psi_2({\bf z})) \quad = \quad \frac{\partial^3 \psi_1}{ \partial z_1^2 \partial z_3} + \frac{\partial^3 \psi_2}{ \partial z_1 \partial z_2 \partial z_4}.\end{equation}

\begin{equation}\begin{pmatrix} \partial_1^2 \partial_3 \\[4pt] \partial_1 \partial_2 \partial_4 \end{pmatrix} \bullet(\psi_1 ({\bf z} ) ,\psi_2({\bf z})) \quad = \quad \frac{\partial^3 \psi_1}{ \partial z_1^2 \partial z_3} + \frac{\partial^3 \psi_2}{ \partial z_1 \partial z_2 \partial z_4}.\end{equation}

The right-hand side is a scalar-valued function

![]() $\mathbb{R}^4 \rightarrow \mathbb{C}$

, that is, it is an element of

$\mathbb{R}^4 \rightarrow \mathbb{C}$

, that is, it is an element of

![]() $\mathcal{F}$

.

$\mathcal{F}$

.
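The following Python sketch makes this action concrete. It applies a vector $m \in R^k$ to $\psi = (q_1,\ldots,q_k)\cdot{\textrm{exp}}({\bf u}^t{\bf z})$ by summing the results of $m_i(\partial)\bullet\psi_i$, and it evaluates each $m_i(\partial)$ through the shift rule $p(\partial)\bigl(q\,{\textrm{exp}}({\bf u}^t{\bf z})\bigr) = {\textrm{exp}}({\bf u}^t{\bf z})\,p(\partial+{\bf u})\,q$. The helper names are our own, and this is not the Macaulay2 implementation. As a test, the sketch substitutes exponential choices of the arbitrary functions a, b, c, d, e, f, g, h into the summands of (1.9), assuming the variable order $(x,y,z,w) = (z_1,z_2,z_3,z_4)$, and applies the three vectors in (2.1). Every result should be zero.

```python
def shift(q, i, ui):
    """Apply (d/dz_i + u_i) to a polynomial q stored as {exponent tuple: coefficient}."""
    out = {}
    for e, a in q.items():
        out[e] = out.get(e, 0) + ui * a
        if e[i] > 0:
            d = e[:i] + (e[i] - 1,) + e[i + 1:]
            out[d] = out.get(d, 0) + e[i] * a
    return {e: a for e, a in out.items() if a != 0}


def apply_op(p, q, u):
    """Return r with p(d)(q(z) exp(u.z)) = r(z) exp(u.z), i.e. r = p(d + u) q."""
    total = {}
    for alpha, coeff in p.items():
        t = q
        for i, k in enumerate(alpha):
            for _ in range(k):
                t = shift(t, i, u[i])
        for e, a in t.items():
            total[e] = total.get(e, 0) + coeff * a
    return {e: a for e, a in total.items() if a != 0}


def apply_vector(m, psi, u):
    """Apply m in R^k to psi = (q_1, ..., q_k) exp(u.z); return the polynomial factor of the sum."""
    total = {}
    for p, q in zip(m, psi):
        for e, a in apply_op(p, q, u).items():
            total[e] = total.get(e, 0) + a
    return {e: a for e, a in total.items() if a != 0}


C = (0, 0, 0, 0)  # exponent tuple of the constant monomial 1

# The three vectors of (2.1); exponents are ordered as (x, y, z, w) = (z_1, z_2, z_3, z_4).
VECTORS = [
    ({(2, 0, 0, 0): 1}, {(1, 1, 0, 0): 1}),  # (d1^2,    d1 d2)
    ({(0, 1, 1, 0): 1}, {(0, 0, 2, 0): 1}),  # (d2 d3,   d3^2)
    ({(2, 0, 1, 0): 1}, {(1, 1, 0, 1): 1}),  # (d1^2 d3, d1 d2 d4)
]

# Summands of (1.9) with exponential choices of the arbitrary functions:
# (label, (polynomial factor of alpha, polynomial factor of beta), exponent vector u).
SUMMANDS = [
    ("a = exp(2y+3z+5w)", ({C: 3}, {C: -2}), (0, 2, 3, 5)),                    # (a_z, -a_y)
    ("b = exp(2x+3y)", ({C: -3}, {C: 2}), (2, 3, 0, 0)),                       # (-b_y, b_x)
    ("c = d = exp(2y+5w)", ({C: 1, (1, 0, 0, 0): 1}, {}), (0, 2, 0, 5)),       # (c + x d, 0)
    ("e = f = exp(2x+5w)", ({}, {C: 1, (0, 0, 1, 0): 1}), (2, 0, 0, 5)),       # (0, e + z f)
    ("g = exp(3z+5w)", ({(1, 0, 0, 0): 1}, {}), (0, 0, 3, 5)),                 # (x g, 0)
    ("h = exp(3z+5w)", ({(1, 1, 0, 0): -3}, {(1, 0, 0, 0): 1}), (0, 0, 3, 5)), # (-x y h_z, x h)
]

for label, psi, u in SUMMANDS:
    print(label, [apply_vector(m, psi, u) for m in VECTORS])  # [{}, {}, {}]

# For comparison, psi = (exp(x), 0) is not a solution:
print([apply_vector(m, ({C: 1}, {}), (1, 0, 0, 0)) for m in VECTORS])  # [{(0, 0, 0, 0): 1}, {}, {}]
```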

Our systems of PDE are submodules M of the free module $R^k$. By Hilbert’s Basis Theorem, every such submodule M is finitely generated, so we can write $M = {\textrm{image}}_R(A)$, where A is a $k \times l$ matrix with entries in R. Each column of A is a generator of M, and it defines a differential operator that maps $\mathcal{F}^k$ to $\mathcal{F}$. The solution space to the PDE given by M equals

\begin{equation}
{\textrm{Sol}}(M) \,=\, \bigl\{\, \psi \in \mathcal{F}^k \,:\, m \bullet \psi = 0 \ \text{ for all } m \in M \,\bigr\}.
\end{equation}

It suffices to take the operators m from a generating set of M, such as the l columns of A. The case $k=1$ is of special interest, since we often consider PDE for scalar-valued functions. In that case, the submodule is an ideal in the polynomial ring R, and we denote this by I. The solution space ${\textrm{Sol}}(I)$ of the ideal $I \subseteq R$ is the set of functions $\phi$ in $\mathcal{F}$ such that $p(\partial) \bullet \phi = 0 $ for all $p \in I$. Thus, ideals are instances of modules, with their own notation.

The solution spaces ${\textrm{Sol}}(M)$ and ${\textrm{Sol}}(I)$ are $\mathbb{C}$-vector spaces and R-modules. Indeed, any $\mathbb{C}$-linear combination of solutions is again a solution. The R-module action means applying the same differential operator $p(\partial)$ to each coordinate, which leads to another vector in $\mathcal{F}^k$. This action takes solutions to solutions because the ring of differential operators with constant coefficients $R = \mathbb{C}[\partial_1,\ldots,\partial_n]$ is commutative: if $\psi \in {\textrm{Sol}}(M)$, then $m \bullet (p(\partial) \bullet \psi) = p(\partial) \bullet (m \bullet \psi) = 0$ for all $m \in M$.

The purpose of this paper is to present practical methods for the following task:

\begin{equation}
\text{Given a $k \times l$ matrix $A$ with entries in $R$, find a good representation of ${\textrm{Sol}}(M)$, where $M = {\textrm{image}}_R(A)$.} \tag{2.5}
\end{equation}

If $k=1$ then we consider the ideal I generated by the entries of A and we compute ${\textrm{Sol}}(I)$.

This raises the question of what a “good representation” means. The formulas in (1.2), (1.5), (1.9) and (1.11) are definitely good. They guide us to what is desirable. Our general answer stems from the following important result at the crossroads of analysis and algebra. It involves two sets of unknowns ${\bf z} = (z_1,\ldots,z_n)$ and ${\bf x}= (x_1,\ldots,x_n)$. Here x gives coordinates on certain irreducible varieties $V_i$ in $\mathbb{C}^n$ that are parameter spaces for solutions. Our solutions $\psi$ are functions in z. We take $\mathcal{F} = C^\infty(\Omega)$ where $\Omega \subset \mathbb{R}^n$ is open, convex, and bounded.

Theorem 2.2 (Ehrenpreis–Palamodov Fundamental Principle). Consider a module $M \subseteq R^k$, representing linear PDE for a function $\psi\,:\, \Omega \rightarrow \mathbb{C}^k$. There exist irreducible varieties $V_1,\ldots,V_s$ in $\mathbb{C}^n$ and finitely many vectors $B_{ij}$ of length k whose entries are polynomials in 2n unknowns $({\bf x},{\bf z})$, all independent of the set $\Omega$, such that any solution $\psi \in {\textrm{Sol}}(M) \subseteq \mathcal{F}^k$ admits an integral representation

\begin{equation}
\psi({\bf z}) \,=\, \sum_{i=1}^{s} \sum_{j=1}^{m_i} \int_{V_i} B_{ij}({\bf x},{\bf z}) \, {\textrm{exp}}({\bf x}^t {\bf z}) \, d\mu_{ij}({\bf x}). \tag{2.6}
\end{equation}

Here $m_i$ is a certain invariant of $(M,V_i)$ and each $\mu_{ij}$ is a bounded measure supported on the variety $V_i$.

Theorem 2.2 appears in different forms in the books by Björk [Reference Björk7, Theorem 8.1.3], Ehrenpreis [Reference Ehrenpreis17], Hörmander [Reference Hörmander23, Section 7.7], and Palamodov [Reference Palamodov32]. Other references with different emphases include [Reference Berndtsson and Passare5, Reference Lomadze25, Reference Oberst29]. For a perspective from commutative algebra see [Reference Cid-Ruiz, Homs and Sturmfels11, Reference Cid-Ruiz and Sturmfels12].

In the next sections, we will study the ingredients in Theorem 2.2. Given the module M, we compute each associated variety $V_i$, the arithmetic length $m_i$ of M along $V_i$, and the Noetherian multipliers $B_{i,1},B_{i,2},\ldots,B_{i,m_i}$. We shall see that not all n of the unknowns $z_1,\ldots,z_n$ appear in the polynomials $B_{i,j}$, but only unknowns from a subset of size ${\textrm{codim}}(V_i)$.

The most basic example is the ODE in (1.1), with $l=n=k=1$. Here $V_i = \{u_i\}$ is the $i$th root of the polynomial (1.3), which has multiplicity $m_i$, and $B_{i,j} = z^{j-1}$. The measure $\mu_{ij}$ is a scaled Dirac measure on $u_i$, so the integrals in (2.6) are multiples of the basis functions (1.2).

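In light of Theorem 2.2, we now refine our computational task in (2.5) as follows:

\begin{equation}
\text{Given $A$, compute the varieties $V_i$, the lengths $m_i$, and the Noetherian multipliers $B_{i,1},\ldots,B_{i,m_i}$ for $i = 1,\ldots,s$.}
\end{equation}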

In our introductory examples, we gave formulas for the general solution, namely (1.5) and (1.9). We claim that such formulas can be read off from the integrals in (2.6). For instance, for the wave equation (1.4) with $c \neq 0$, we have $s=2$, $B_{1,1} = B_{2,1} = 1$, and (1.5) is obtained by integrating ${\textrm{exp}}( {\bf x}^t {\bf z})$ against measures $d\mu_{i1}({\bf x})$ on the two lines $V_1 = \{x_2 = c\,x_1\}$ and $V_2 = \{x_2 = -c\,x_1\}$ in $\mathbb{C}^2$. For the system (1.8), we find $s=6$, with $m_1=m_2=m_3=1$ and $m_4=m_5=m_6=2$, and the nine integrals in (2.6) translate into (1.9). We shall explain such a translation in full detail for two other examples.

Example 2.3 ($n=3,k=1,l=2$). The ideal $I = \langle \partial_1^2 - \partial_2 \partial_3, \partial_3^2 \rangle$ represents the PDE

\begin{equation}
\frac{\partial^2 \phi}{\partial z_1^2} \,=\, \frac{\partial^2 \phi}{\partial z_2 \partial z_3} \quad {\textrm{and}} \quad \frac{\partial^2 \phi}{\partial z_3^2} \,=\, 0 \tag{2.8}
\end{equation}

for a scalar-valued function $\phi = \phi(z_1,z_2,z_3)$. This is [Reference Chen, Härkönen, Krone and Leykin10, Example 4.2]. A Macaulay2 computation as in Section 5 shows that $s=1$ and $m_1 =4$. It reveals the Noetherian multipliers

\begin{equation}
B_{1,1} = 1, \qquad B_{1,2} = z_1, \qquad B_{1,3} = x_2 z_1^2 + 2 z_3, \qquad B_{1,4} = x_2 z_1^3 + 6 z_1 z_3 .
\end{equation}

Arbitrary functions $f(z_2) = \int {\textrm{exp}}( t z_2 )\, d\mu(t)$ are obtained by integrating against suitable measures $\mu$ on the line $V_1= \{ (0,t,0) \,:\,t \in \mathbb{C} \} \subset \mathbb{C}^3$. Their derivatives are found by differentiating under the integral sign, namely $f'(z_2) = \int t \cdot {\textrm{exp}}( t z_2 )\, d\mu(t)$. Consider four functions a, b, c, d, each specified by a different measure. Thus, the sum of the four integrals in (2.6) evaluates to

\begin{equation}
\phi({\bf z}) \,=\, a(z_2) + z_1\, b(z_2) + z_1^2\, c'(z_2) + 2 z_3\, c(z_2) + z_1^3\, d'(z_2) + 6 z_1 z_3\, d(z_2).
\end{equation}

According to Ehrenpreis–Palamodov, this sum is the general solution of the PDE (2.8).
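The multipliers in this example can be checked directly. For a point ${\bf x} = (0,t,0)$ of $V_1$ and a candidate multiplier $B({\bf x},{\bf z})$, the function $B\cdot{\textrm{exp}}({\bf x}^t{\bf z})$ solves (2.8) exactly when both generators of I annihilate it. The Python sketch below tests the four polynomials $1$, $z_1$, $x_2 z_1^2 + 2 z_3$ and $x_2 z_1^3 + 6 z_1 z_3$, a basis we derived by hand; a Macaulay2 run may return a different but equivalent normalization. The sketch uses exact integer arithmetic and the shift rule $p(\partial)\bigl(q\,{\textrm{exp}}({\bf u}^t{\bf z})\bigr) = {\textrm{exp}}({\bf u}^t{\bf z})\,p(\partial+{\bf u})\,q$ as a plain-Python stand-in for the symbolic computation. All helper names are our own.

```python
def shift(q, i, ui):
    """Apply (d/dz_i + u_i) to a polynomial q stored as {exponent tuple: coefficient}."""
    out = {}
    for e, a in q.items():
        out[e] = out.get(e, 0) + ui * a
        if e[i] > 0:
            d = e[:i] + (e[i] - 1,) + e[i + 1:]
            out[d] = out.get(d, 0) + e[i] * a
    return {e: a for e, a in out.items() if a != 0}


def apply_op(p, q, u):
    """Return r with p(d)(q(z) exp(u.z)) = r(z) exp(u.z), i.e. r = p(d + u) q."""
    total = {}
    for alpha, coeff in p.items():
        t = q
        for i, k in enumerate(alpha):
            for _ in range(k):
                t = shift(t, i, u[i])
        for e, a in t.items():
            total[e] = total.get(e, 0) + coeff * a
    return {e: a for e, a in total.items() if a != 0}


GENERATORS = [
    {(2, 0, 0): 1, (0, 1, 1): -1},  # d1^2 - d2 d3
    {(0, 0, 2): 1},                 # d3^2
]


def multipliers(t):
    """Candidate multipliers B_{1,j}(x, z) evaluated at the point x = (0, t, 0) of V_1."""
    return [
        {(0, 0, 0): 1},                # 1
        {(1, 0, 0): 1},                # z_1
        {(2, 0, 0): t, (0, 0, 1): 2},  # x_2 z_1^2 + 2 z_3
        {(3, 0, 0): t, (1, 0, 1): 6},  # x_2 z_1^3 + 6 z_1 z_3
    ]


for t in range(-3, 4):
    u = (0, t, 0)
    ok = all(apply_op(p, B, u) == {} for B in multipliers(t) for p in GENERATORS)
    print(t, ok)  # True for every t

# Without the x_2 z_1^2 term, 2 z_3 alone fails at t = 1:
print(apply_op(GENERATORS[0], {(0, 0, 1): 2}, (0, 1, 0)))  # {(0, 0, 0): -2}
```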

Our final example uses concepts from primary decomposition, to be reviewed in Section 3.

Example 2.4 (

![]() $n=4,k=2,l=3$

). Let

$n=4,k=2,l=3$

). Let

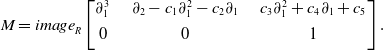

![]() $M \subset R^4$

be the module generated by the columns of

$M \subset R^4$

be the module generated by the columns of

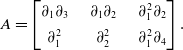

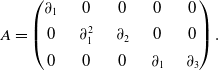

\begin{equation} A = \begin{bmatrix} \partial_{1} \partial_{3} & \quad\partial_{1} \partial_{2} & \quad\partial_{1}^2 \partial_{2} \\[4pt] \partial_{1}^2 & \quad\partial_{2}^2 & \quad\partial_{1}^2 \partial_{4} \end{bmatrix}. \end{equation}

\begin{equation} A = \begin{bmatrix} \partial_{1} \partial_{3} & \quad\partial_{1} \partial_{2} & \quad\partial_{1}^2 \partial_{2} \\[4pt] \partial_{1}^2 & \quad\partial_{2}^2 & \quad\partial_{1}^2 \partial_{4} \end{bmatrix}. \end{equation}

Computing ${\textrm{Sol}}(M)$ means solving $ \dfrac{\partial^2 \psi_1}{\partial z_1 \partial z_3} + \dfrac{\partial^2 \psi_2}{\partial z_1^2} = \dfrac{\partial^2 \psi_1}{\partial z_1 \partial z_2} + \dfrac{\partial^2 \psi_2}{\partial z_2^2} = \dfrac{\partial^3 \psi_1}{\partial z_1^2 \partial z_2} + \dfrac{\partial^3 \psi_2}{\partial z_1^2 \partial z_4} =0$. Two solutions are $\psi({\bf z}) = \bigl(\phi(z_2,z_3,z_4) , 0\bigr)$, where $\phi$ is any function of three variables, and $\psi({\bf z}) = {\textrm{exp}}(s^2 t z_1 + s t^2 z_2 + s^3 z_3 + t^3 z_4) \cdot \bigl( t , -s \bigr)$ for any $s, t \in \mathbb{C}$.

We apply Theorem 2.2 to derive the general solution to (2.10). The module M has six associated primes, namely $P_1 = \langle \partial_{1} \rangle$, $P_2 = \langle \partial_{2}, \partial_{4} \rangle$, $P_3 = \langle \partial_{2}, \partial_{3} \rangle$, $P_4 = \langle \partial_{1}, \partial_{3} \rangle$, $P_5 = \langle \partial_{1}, \partial_{2} \rangle$, and $P_6 = \langle \partial_{1}^2 - \partial_2 \partial_3, \partial_1 \partial_2 - \partial_3 \partial_4,\partial_2^2 - \partial_1 \partial_4 \rangle$. Four of them are minimal and two are embedded, namely $P_4$ and $P_5$, which both contain $P_1$. We find that $m_1 = m_2 = m_3 = m_4 = m_6 = 1$ and $m_5 = 4$. A minimal primary decomposition

\begin{equation}
M \,=\, M_1 \cap M_2 \cap M_3 \cap M_4 \cap M_5 \cap M_6
\end{equation}

is given by the following primary submodules of $R^2$, each of which contains M:

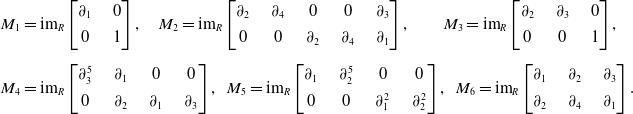

\begin{align*} M_1 & = {\textrm{im}}_R \begin{bmatrix} \partial_1 & \quad 0 \\[4pt] 0 & \quad 1 \end{bmatrix},\quad M_2 = {\textrm{im}}_R \begin{bmatrix} \partial_2 & \quad \partial_4 & \quad 0 & \quad 0 & \quad \partial_3 \\[4pt] 0 & \quad 0 & \quad \partial_2 & \quad \partial_4 & \quad \partial_1\end{bmatrix},\qquad M_3 = {\textrm{im}}_R \begin{bmatrix} \partial_2 & \quad \partial_3 & \quad 0 \\[4pt] 0 & \quad 0 & \quad 1 \end{bmatrix},\\[5pt]M_4 & = {\textrm{im}}_R \begin{bmatrix} \partial_3^5 & \quad \partial_1 & \quad 0 & \quad 0 \\[4pt] 0 & \quad \partial_2 & \quad \partial_1 & \quad \partial_3 \end{bmatrix},\ \ M_5 = {\textrm{im}}_R \begin{bmatrix} \partial_1 & \quad \partial_2^5 & \quad 0 & \quad 0 \\[4pt] 0 & \quad 0 & \quad \partial_1^2 & \quad \partial_2^2 \end{bmatrix},\ \ M_6 = {\textrm{im}}_R \begin{bmatrix}\partial_1 & \quad \partial_2 & \quad \partial_3 \\[4pt]\partial_2 & \quad \partial_4 & \quad \partial_1\end{bmatrix}. \end{align*}

\begin{align*} M_1 & = {\textrm{im}}_R \begin{bmatrix} \partial_1 & \quad 0 \\[4pt] 0 & \quad 1 \end{bmatrix},\quad M_2 = {\textrm{im}}_R \begin{bmatrix} \partial_2 & \quad \partial_4 & \quad 0 & \quad 0 & \quad \partial_3 \\[4pt] 0 & \quad 0 & \quad \partial_2 & \quad \partial_4 & \quad \partial_1\end{bmatrix},\qquad M_3 = {\textrm{im}}_R \begin{bmatrix} \partial_2 & \quad \partial_3 & \quad 0 \\[4pt] 0 & \quad 0 & \quad 1 \end{bmatrix},\\[5pt]M_4 & = {\textrm{im}}_R \begin{bmatrix} \partial_3^5 & \quad \partial_1 & \quad 0 & \quad 0 \\[4pt] 0 & \quad \partial_2 & \quad \partial_1 & \quad \partial_3 \end{bmatrix},\ \ M_5 = {\textrm{im}}_R \begin{bmatrix} \partial_1 & \quad \partial_2^5 & \quad 0 & \quad 0 \\[4pt] 0 & \quad 0 & \quad \partial_1^2 & \quad \partial_2^2 \end{bmatrix},\ \ M_6 = {\textrm{im}}_R \begin{bmatrix}\partial_1 & \quad \partial_2 & \quad \partial_3 \\[4pt]\partial_2 & \quad \partial_4 & \quad \partial_1\end{bmatrix}. \end{align*}

The number of Noetherian multipliers

![]() $B_{ij}$

is

$B_{ij}$

is

![]() $\sum_{i=1}^6 m_i = 9$

. We choose them to be

$\sum_{i=1}^6 m_i = 9$

. We choose them to be

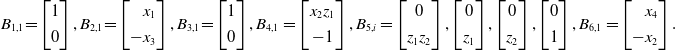

\begin{equation*} B_{1,1} {=} \begin{bmatrix} 1 \\[4pt] 0 \end{bmatrix} , B_{2,1} {=} \begin{bmatrix} \phantom{-}x_1 \\[4pt] -x_3 \end{bmatrix} , B_{3,1} {=} \begin{bmatrix} 1 \\[4pt] 0 \end{bmatrix} , B_{4,1} = \begin{bmatrix} x_2 z_1 \\[4pt] -1 \end{bmatrix} , B_{5,i} = \begin{bmatrix} 0 \\[4pt] z_1 z_2 \end{bmatrix} , \begin{bmatrix} 0 \\[4pt] z_1 \end{bmatrix} , \begin{bmatrix} 0 \\[4pt] z_2 \end{bmatrix} , \begin{bmatrix} 0 \\[4pt] 1 \end{bmatrix} , B_{6,1} = \begin{bmatrix} \phantom{-}x_4 \\[4pt] -x_2 \end{bmatrix}.\end{equation*}

\begin{equation*} B_{1,1} {=} \begin{bmatrix} 1 \\[4pt] 0 \end{bmatrix} , B_{2,1} {=} \begin{bmatrix} \phantom{-}x_1 \\[4pt] -x_3 \end{bmatrix} , B_{3,1} {=} \begin{bmatrix} 1 \\[4pt] 0 \end{bmatrix} , B_{4,1} = \begin{bmatrix} x_2 z_1 \\[4pt] -1 \end{bmatrix} , B_{5,i} = \begin{bmatrix} 0 \\[4pt] z_1 z_2 \end{bmatrix} , \begin{bmatrix} 0 \\[4pt] z_1 \end{bmatrix} , \begin{bmatrix} 0 \\[4pt] z_2 \end{bmatrix} , \begin{bmatrix} 0 \\[4pt] 1 \end{bmatrix} , B_{6,1} = \begin{bmatrix} \phantom{-}x_4 \\[4pt] -x_2 \end{bmatrix}.\end{equation*}

These nine vectors describe all solutions to our PDE. For instance, $B_{3,1}$ gives the solutions $ \Big[\begin{array}{c} \alpha(z_1,z_4) \\[4pt] 0 \end{array}\Big]$, and $B_{5,1}$ gives the solutions $ \Big[\begin{array}{c} 0 \\[4pt] z_1 z_2 \beta(z_3,z_4) \end{array}\Big]$, where $\alpha,\beta$ are bivariate functions. Furthermore, $B_{1,1}$ and $B_{6,1}$ encode the two families of solutions mentioned after (2.10).

For the latter, we note that

![]() $V_6 = V(P_6) $

is the surface in

$V_6 = V(P_6) $

is the surface in

![]() $\mathbb{C}^4$

with parametric representation

$\mathbb{C}^4$

with parametric representation

![]() $(x_1,x_2,x_3,x_4) = (s^2 t, st^2, s^3,t^3)$

for

$(x_1,x_2,x_3,x_4) = (s^2 t, st^2, s^3,t^3)$

for

![]() $s,t \in \mathbb{C}$

. This surface is the cone over the twisted cubic curve, in the same notation as in [Reference Cid-Ruiz, Homs and Sturmfels11, Section 1]. The kernel under the integral in (2.6) equals

$s,t \in \mathbb{C}$

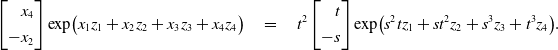

. This surface is the cone over the twisted cubic curve, in the same notation as in [Reference Cid-Ruiz, Homs and Sturmfels11, Section 1]. The kernel under the integral in (2.6) equals

\begin{equation*} \begin{bmatrix} \phantom{-}x_4 \\[4pt] -x_2 \end{bmatrix}{\textrm{exp}}\bigl(x_1 z_1 + x_2 z_2 + x_3 z_3 + x_4 z_4\bigr) \quad = \quad t^2 \begin{bmatrix} \phantom{-} t \\[4pt] - s \end{bmatrix} {\textrm{exp}}\bigl( s^2t z_1 + st^2 z_2 + s^3 z_3 + t^3 z_4 \bigr).\end{equation*}

\begin{equation*} \begin{bmatrix} \phantom{-}x_4 \\[4pt] -x_2 \end{bmatrix}{\textrm{exp}}\bigl(x_1 z_1 + x_2 z_2 + x_3 z_3 + x_4 z_4\bigr) \quad = \quad t^2 \begin{bmatrix} \phantom{-} t \\[4pt] - s \end{bmatrix} {\textrm{exp}}\bigl( s^2t z_1 + st^2 z_2 + s^3 z_3 + t^3 z_4 \bigr).\end{equation*}

This is a solution to $M_6$, and hence to M (since $M \subseteq M_6$), for any values of s and t. Integrating the left-hand side over ${\bf x} \in V_6$ amounts to integrating the right-hand side over $(s,t) \in \mathbb{C}^2$. Any such integral is also a solution. Ehrenpreis–Palamodov tells us that these are all the solutions.
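The two claims about $V_6$ can be spot-checked numerically. The Python sketch below uses only the standard library, and its helper names are ours. It samples integer parameters $(s,t)$ and forms ${\bf x} = (s^2t, st^2, s^3, t^3)$. It then confirms three things:

- the generators of $P_6$ vanish at ${\bf x}$;
- $B_{6,1}({\bf x}) = (x_4,-x_2)$ equals $t^2(t,-s)$, as in the display above;
- $(x_4,-x_2)$ is a left null vector of the matrix presenting $M_6$, with each $\partial_i$ replaced by $x_i$.

By the criterion of Lemma 3.6 in Section 3, the last condition is what makes $B_{6,1}({\bf x})\exp({\bf x}\cdot{\bf z})$ a solution to $M_6$.

```python
from itertools import product


def cone_point(s, t):
    """Point (x1, x2, x3, x4) = (s^2 t, s t^2, s^3, t^3) on the surface V_6."""
    return (s * s * t, s * t * t, s ** 3, t ** 3)


def p6_generators(x):
    """Values at x of the three generators of P_6 (each partial_i replaced by x_i)."""
    x1, x2, x3, x4 = x
    return (x1 ** 2 - x2 * x3, x1 * x2 - x3 * x4, x2 ** 2 - x1 * x4)


def a6_matrix(x):
    """The 2 x 3 matrix whose columns generate M_6, evaluated at x."""
    x1, x2, x3, x4 = x
    return [[x1, x2, x3],
            [x2, x4, x1]]


def row_times_matrix(c, a):
    """Row vector c times matrix a."""
    return [sum(c[i] * a[i][j] for i in range(len(c))) for j in range(len(a[0]))]


def check_point(s, t):
    x = cone_point(s, t)
    b61 = (x[3], -x[1])  # the multiplier B_{6,1} = (x_4, -x_2) evaluated at x
    on_surface = all(v == 0 for v in p6_generators(x))
    solves_m6 = all(v == 0 for v in row_times_matrix(b61, a6_matrix(x)))
    factor_ok = b61 == (t ** 3, -s * t * t)  # B_{6,1} = t^2 (t, -s)
    return on_surface and solves_m6 and factor_ok


print(all(check_point(s, t) for s, t in product(range(-3, 4), repeat=2)))  # True
```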

3. Modules and varieties

Our aim is to offer practical tools for solving PDE. The input is a $k \times l$ matrix A with entries in $R = K[\partial_1,\ldots,\partial_n]$, and $M = {\textrm{image}}_R(A)$ is the corresponding submodule of $R^k = \bigoplus_{j=1}^k Re_j$. The output is the description of ${\textrm{Sol}}(M)$ sought in (2.7). That description is unique up to basis change, in the sense of [Reference Cid-Ruiz and Sturmfels12, Remark 3.8], by the discussion in Section 4. Our method is implemented in a Macaulay2 command, called solvePDE and to be described in Section 5.

We now explain the ingredients of Theorem 2.2 coming from commutative algebra (cf. [Reference Eisenbud18]). For a vector $m \in R^k$, the quotient $(M\,:\,m)$ is the ideal $\{f \in R \,:\, fm \in M\}$. A prime ideal $P_i \subseteq R$ is associated to M if there exists $m \in R^k$ such that $(M\,:\,m) = P_i$. Since R is Noetherian, the list of associated primes of M is finite, say $P_1,\ldots,P_s$. If $s=1$ then the module M is called primary or $P_1$-primary. A primary decomposition of M is a list of primary submodules $M_1,\ldots,M_s \subseteq R^k$ where $M_i$ is $P_i$-primary and $ M = M_1 \cap M_2 \cap \cdots \cap M_s $.
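The definition of associated primes becomes concrete for monomial ideals. The Python sketch below treats only this special case: $k=1$, I a monomial ideal, and $m$ a monomial. In that case $(I\,:\,m)$ is generated by the monomials $g/\gcd(g,m)$, where g runs over the generators of I. Monomials are stored as exponent vectors, and the function names are ours; the sketch is not a general module algorithm. For $I = \langle \partial_1^2, \partial_1\partial_2 \rangle$, the quotients by $\partial_1$ and by $\partial_2$ are the primes $\langle \partial_1, \partial_2 \rangle$ and $\langle \partial_1 \rangle$, so both are associated to I.

```python
def divides(a, b):
    """True if the monomial with exponent vector a divides the one with exponent vector b."""
    return all(ai <= bi for ai, bi in zip(a, b))


def minimalize(gens):
    """Drop generators of a monomial ideal that are divisible by other generators."""
    result = []
    for g in sorted(set(gens), key=sum):  # smaller total degree first
        if not any(divides(h, g) for h in result):
            result.append(g)
    return sorted(result)


def colon_by_monomial(gens, m):
    """Minimal generators of (I : m) for I = <gens>, via g / gcd(g, m)."""
    quotients = [tuple(max(gi - mi, 0) for gi, mi in zip(g, m)) for g in gens]
    return minimalize(quotients)


I = [(2, 0), (1, 1)]                  # I = <d1^2, d1*d2> in K[d1, d2]
print(colon_by_monomial(I, (1, 0)))  # [(0, 1), (1, 0)], i.e. <d2, d1>
print(colon_by_monomial(I, (0, 1)))  # [(1, 0)], i.e. <d1>
print(colon_by_monomial(I, (0, 0)))  # [(1, 1), (2, 0)], i.e. I itself
```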

Primary decomposition is a standard topic in commutative algebra. It is usually presented for ideals $(k=1)$, as in [Reference Michałek and Sturmfels27, Chapter 3]. The case of modules is analogous. The latest version of Macaulay2 has an implementation of primary decomposition for modules, as described in [Reference Chen and Cid-Ruiz9, Section 2]. Given M, the primary module $M_i$ is not unique if $P_i$ is an embedded prime.

The contribution of the primary module $M_i$ to M is quantified by a positive integer $m_i$, called the arithmetic length of M along $P_i$. To define this, we consider the localization $(R_{P_i})^k/M_{P_i}$. This is a module over the local ring $R_{P_i}$. The arithmetic length is the length of the largest submodule of finite length in $(R_{P_i})^k/M_{P_i}$; in symbols, $m_i = {\textrm{length}} \bigl( H^0_{P_i} ((R_{P_i})^k/ M_{P_i})\bigr)$. The sum $m_1 + \cdots + m_s$ is an invariant of the module M, denoted ${\textrm{amult}}(M)$, and known as the arithmetic multiplicity of M. These numbers can be computed in Macaulay2 as in [Reference Cid-Ruiz and Sturmfels12, Remark 5.1]. We return to these invariants in Theorem 4.3.

To make the connection to Theorem 2.2, we set $V_i = V(P_i)$ for $i=1,2,\ldots,s$. Thus, $V_i$ is the irreducible variety in $\mathbb{C}^n$ defined by the prime ideal $P_i$ in $R = K[\partial_1,\ldots,\partial_n]$. The integer $m_i$ is an invariant of the pair $(M,V_i)$: it measures the thickness of the module M along $V_i$.

By taking the union of the irreducible varieties $V_i$ we obtain the variety

\begin{equation*} V(M) \,=\, V_1 \cup V_2 \cup \cdots \cup V_s. \end{equation*}

Algebraists refer to V(M) as the support of M, while analysts call it the characteristic variety of M. The support is generally reducible, with at most s irreducible components. For instance, the module M in Example 2.4 has six associated primes, and an explicit primary decomposition was given in (2.11). However, the support V(M) has only four irreducible components in $\mathbb{C}^4$, namely one hyperplane, two 2-dimensional planes, and one nonlinear surface (the cone over the twisted cubic).

Remark 3.1. If $k=1$ and $M=I$, then the support V(M) coincides with the variety V(I) attached as usual to an ideal I, namely the common zero set in $\mathbb{C}^n$ of all polynomials in I.

The relationship between modules and ideals mirrors the relationship between PDE for vector-valued functions and related PDE for scalar-valued functions. To pursue this a bit further, we now define two ideals that are naturally associated with a given module $M\subseteq R^k$.

The first ideal is the annihilator of the quotient module $R^k/M = {\textrm{coker}}_R(A)$, which is

\begin{equation*} I \,=\, {\textrm{Ann}}_R(R^k/M) \,=\, \{ f \in R \,:\, f R^k \subseteq M \}. \end{equation*}

The second is the zeroth Fitting ideal of $R^k/M$, which is the ideal in R generated by the $k \times k$ minors of the presentation matrix A. It is independent of the choice of A, and we write

\begin{equation*} J \,=\, {\textrm{Fitt}}_0(R^k/M). \end{equation*}

We are interested in the affine varieties in $\mathbb{C}^n$ defined by these ideals. They are denoted by V(I) and V(J), respectively. The following is a standard result in commutative algebra.

Proposition 3.2. The three varieties above are equal for every submodule M of $R^k$, that is,

\begin{equation} V(I) \,=\, V(M) \,=\, V(J). \tag{3.1} \end{equation}

Proof. This follows from [Reference Eisenbud18, Proposition 20.7].

Remark 3.3. It can happen that ${\textrm{rank}}(A) < k$, for instance when $k > l$. In that case, $I = J = \{ 0 \}$ and $V(M) = \mathbb{C}^n$. Geometrically, the module M furnishes a coherent sheaf that is supported on the entire space $\mathbb{C}^n$. For instance, let $k=n=2,l=1$, and $A = \displaystyle\binom{\phantom{-}\partial_1}{-\partial_2}$. The PDE asks for pairs $(\psi_1,\psi_2)$ such that $\partial \psi_1 /\partial z_1 = \partial \psi_2 /\partial z_2 $. We see that $\textrm{Sol}(M)$ consists of all pairs $\bigl( \partial \alpha/ \partial z_2,\partial \alpha/ \partial z_1 \bigr)$, where $\alpha= \alpha(z_1,z_2)$ runs over functions in two variables. In general, the left kernel of A furnishes differential operators for creating solutions to M.
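The last sentence can be tested on this example. The row vector $(\partial_2, \partial_1)$ lies in the left kernel of A, because $\partial_2\partial_1 - \partial_1\partial_2 = 0$. Applying its entries to a function $\alpha$ gives the pair $(\partial\alpha/\partial z_2, \partial\alpha/\partial z_1)$. The Python sketch below checks the PDE $\partial\psi_1/\partial z_1 = \partial\psi_2/\partial z_2$ for such pairs when $\alpha$ is a polynomial, stored as a dictionary from exponent pairs to coefficients. Restricting to polynomials is our assumption, made only so that the check is exact, and the helper names are ours.

```python
def diff(poly, var):
    """Partial derivative of a polynomial {(e1, e2): coeff} with respect to z_{var+1}."""
    result = {}
    for exps, coeff in poly.items():
        e = exps[var]
        if e > 0:
            lowered = tuple(a - 1 if j == var else a for j, a in enumerate(exps))
            result[lowered] = result.get(lowered, 0) + coeff * e
    return {k: v for k, v in result.items() if v != 0}


def solution_from_alpha(alpha):
    """The pair (d alpha/dz2, d alpha/dz1) produced by the left-kernel vector (d2, d1)."""
    return diff(alpha, 1), diff(alpha, 0)


def satisfies_pde(psi1, psi2):
    """Check d psi1/dz1 == d psi2/dz2, the PDE encoded by A = (d1, -d2)^T."""
    return diff(psi1, 0) == diff(psi2, 1)


# alpha = 5 z1^3 z2^2 - 2 z1 z2^4 + 7 z2 + z1^2
alpha = {(3, 2): 5, (1, 4): -2, (0, 1): 7, (2, 0): 1}
psi1, psi2 = solution_from_alpha(alpha)
print(satisfies_pde(psi1, psi2))          # True
print(satisfies_pde({(1, 0): 1}, {}))     # False: (z1, 0) is not a solution
```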

The following example shows that (3.1) is not true at the level of schemes (cf. Section 6).

Example 3.4. ($n=k=3,l=5$) Let $R = \mathbb{C}[\partial_1,\partial_2,\partial_3]$ and let M be the submodule of $R^3$ given by the image of

\begin{equation*} A = \begin{pmatrix}\partial_1 & \quad 0 & \quad 0 & \quad 0 & \quad 0 \\[4pt] 0 & \quad \partial_1^2 & \quad \partial_2 & \quad 0 & \quad 0 \\[4pt] 0 & \quad 0 & \quad 0 & \quad \partial_1 & \quad \partial_3 \end{pmatrix} . \end{equation*}

We find

![]() $I = \langle \partial_1^2, \partial_1 \partial_2 \rangle \supset J = \langle \partial_1^4, \partial_1^3 \partial_3,\partial_1^2 \partial_2 , \partial_1 \partial_2 \partial_3 \rangle$

. The sets of associated primes are

$I = \langle \partial_1^2, \partial_1 \partial_2 \rangle \supset J = \langle \partial_1^4, \partial_1^3 \partial_3,\partial_1^2 \partial_2 , \partial_1 \partial_2 \partial_3 \rangle$

. The sets of associated primes are

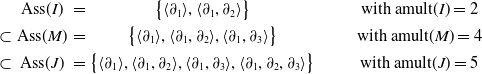

\begin{equation*} \begin{matrix}& \textrm{Ass}(I) & = & \bigl\{ \langle \partial_1 \rangle, \langle \partial_1, \partial_2 \rangle \bigr\}&\qquad & {\textrm{with}}\ {\textrm{amult}}(I) =2 \\[4pt] \subset & \textrm{Ass}(M) & = & \bigl\{ \langle \partial_1 \rangle, \langle \partial_1, \partial_2 \rangle , \langle \partial_1, \partial_3 \rangle \bigr\} & \qquad & {\textrm{with}}\ {\textrm{amult}}(M) = 4 \\[4pt] \subset & \textrm{Ass}(J) & = & \bigl\{ \langle \partial_1 \rangle, \langle \partial_1, \partial_2 \rangle , \langle \partial_1, \partial_3 \rangle, \langle \partial_1, \partial_2, \partial_3 \rangle \bigr\} & \qquad & {\textrm{with}}\ {\textrm{amult}}(J) = 5 \end{matrix} \end{equation*}

\begin{equation*} \begin{matrix}& \textrm{Ass}(I) & = & \bigl\{ \langle \partial_1 \rangle, \langle \partial_1, \partial_2 \rangle \bigr\}&\qquad & {\textrm{with}}\ {\textrm{amult}}(I) =2 \\[4pt] \subset & \textrm{Ass}(M) & = & \bigl\{ \langle \partial_1 \rangle, \langle \partial_1, \partial_2 \rangle , \langle \partial_1, \partial_3 \rangle \bigr\} & \qquad & {\textrm{with}}\ {\textrm{amult}}(M) = 4 \\[4pt] \subset & \textrm{Ass}(J) & = & \bigl\{ \langle \partial_1 \rangle, \langle \partial_1, \partial_2 \rangle , \langle \partial_1, \partial_3 \rangle, \langle \partial_1, \partial_2, \partial_3 \rangle \bigr\} & \qquad & {\textrm{with}}\ {\textrm{amult}}(J) = 5 \end{matrix} \end{equation*}
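The generators of J listed above can be reproduced from the definition of the zeroth Fitting ideal, by computing every $3\times 3$ minor of A. Each entry of A is either zero or a single monomial, so the minors can be expanded exactly with a small polynomial type. The Python sketch below stores polynomials as dictionaries of exponent vectors in $(\partial_1,\partial_2,\partial_3)$ and expands determinants by the Leibniz formula; the helper names are ours. It should recover the four monomials $\partial_1^4$, $\partial_1^3\partial_3$, $\partial_1^2\partial_2$ and $\partial_1\partial_2\partial_3$.

```python
from itertools import combinations, permutations

ONE = (0, 0, 0)  # exponent vector of the constant monomial in d1, d2, d3


def poly_mul(p, q):
    """Multiply polynomials stored as {exponent tuple: coefficient}."""
    result = {}
    for e1, c1 in p.items():
        for e2, c2 in q.items():
            key = tuple(a + b for a, b in zip(e1, e2))
            result[key] = result.get(key, 0) + c1 * c2
    return {k: v for k, v in result.items() if v != 0}


def poly_add(p, q):
    """Add two polynomials, dropping zero coefficients."""
    result = dict(p)
    for e, c in q.items():
        result[e] = result.get(e, 0) + c
    return {k: v for k, v in result.items() if v != 0}


def sign(perm):
    """Sign of a permutation, computed by counting inversions."""
    n = len(perm)
    inversions = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
    return -1 if inversions % 2 else 1


def det(mat):
    """Determinant by the Leibniz formula; adequate for small matrices."""
    total = {}
    for perm in permutations(range(len(mat))):
        term = {ONE: sign(perm)}
        for row, col in enumerate(perm):
            term = poly_mul(term, mat[row][col])
        total = poly_add(total, term)
    return total


def nonzero_minors(A):
    """All nonzero k x k minors of the k x l matrix A."""
    k = len(A)
    minors = []
    for cols in combinations(range(len(A[0])), k):
        m = det([[A[r][c] for c in cols] for r in range(k)])
        if m:
            minors.append(m)
    return minors


Z = {}
D1, D2, D3 = {(1, 0, 0): 1}, {(0, 1, 0): 1}, {(0, 0, 1): 1}
D1SQ = {(2, 0, 0): 1}
A = [[D1, Z, Z, Z, Z],
     [Z, D1SQ, D2, Z, Z],
     [Z, Z, Z, D1, D3]]

minors = nonzero_minors(A)
print(sorted(e for m in minors for e in m))
# [(1, 1, 1), (2, 1, 0), (3, 0, 1), (4, 0, 0)], i.e. d1 d2 d3, d1^2 d2, d1^3 d3, d1^4
```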

The support V(M) is a plane in 3-space, on which I and J define different scheme structures. Our module M defines a coherent sheaf on that plane that lives between these two schemes. We consider the PDE in each of the three cases, compute the Noetherian multipliers, and derive the general solution from them. To begin with, functions in $ {\textrm{Sol}}(J)$ have the form

\begin{equation*} \phi({\bf z}) \,=\, \alpha(z_2,z_3) + z_1 \beta(z_3) + z_1 \gamma(z_2) + z_1^2 \delta(z_3) + c\, z_1^3, \end{equation*}

where $\alpha$ is a bivariate function, $\beta,\gamma,\delta$ are univariate functions, and $c \in \mathbb{C}$.

The first two terms give functions in the subspace ${\textrm{Sol}}(I)$. Elements in ${\textrm{Sol}}(M)$ are vectors

\begin{equation*} \begin{pmatrix} \alpha(z_2,z_3) \\[4pt] \beta(z_3) + z_1 \gamma(z_3) \\[4pt] \delta(z_2) \end{pmatrix}, \end{equation*}

where $\alpha$ is a bivariate function and $\beta,\gamma,\delta$ are univariate functions. These represent all functions $\mathbb{C}^3 \rightarrow \mathbb{C}^3$ that satisfy the five PDE given by the matrix A.
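These general solutions can be spot-checked on polynomial instances. Reading off the five columns of A, a vector $(\psi_1,\psi_2,\psi_3)$ lies in ${\textrm{Sol}}(M)$ exactly when $\partial_1\psi_1 = 0$, $\partial_1^2\psi_2 = \partial_2\psi_2 = 0$ and $\partial_1\psi_3 = \partial_3\psi_3 = 0$. This gives $\psi = (\alpha(z_2,z_3),\, \beta(z_3)+z_1\gamma(z_3),\, \delta(z_2))$. Similarly, the scalar function $\alpha(z_2,z_3)+z_1\beta(z_3)+z_1\gamma(z_2)+z_1^2\delta(z_3)+c\,z_1^3$ should be annihilated by the four generators of J.

The Python sketch below applies the corresponding monomial operators to sample polynomials of these shapes. It also shows that adding a forbidden term breaks the equations. Polynomials are dictionaries from exponent vectors in $(z_1,z_2,z_3)$ to coefficients, and the helper names are ours. The check confirms membership only; it does not prove that every solution has this form.

```python
def diff(poly, var, times=1):
    """Differentiate a polynomial {exponent tuple: coeff} `times` times in z_{var+1}."""
    for _ in range(times):
        result = {}
        for exps, coeff in poly.items():
            if exps[var] > 0:
                lowered = tuple(e - 1 if j == var else e for j, e in enumerate(exps))
                result[lowered] = result.get(lowered, 0) + coeff * exps[var]
        poly = {k: v for k, v in result.items() if v != 0}
    return poly


def apply_monomial(op, poly):
    """Apply the operator d1^a d2^b d3^c, given as op = (a, b, c), to poly."""
    for var, times in enumerate(op):
        poly = diff(poly, var, times)
    return poly


# Each column of A has one nonzero entry: (row index, exponent vector of that monomial).
A_COLUMNS = [(0, (1, 0, 0)), (1, (2, 0, 0)), (1, (0, 1, 0)), (2, (1, 0, 0)), (2, (0, 0, 1))]
J_GENERATORS = [(4, 0, 0), (3, 0, 1), (2, 1, 0), (1, 1, 1)]


def in_sol_m(psi):
    """True if psi = (psi1, psi2, psi3) satisfies the five PDE given by A."""
    return all(apply_monomial(op, psi[row]) == {} for row, op in A_COLUMNS)


def in_sol_j(phi):
    """True if the scalar phi is annihilated by every generator of J."""
    return all(apply_monomial(op, phi) == {} for op in J_GENERATORS)


psi1 = {(0, 2, 1): 1, (0, 0, 4): 3}                  # alpha(z2, z3) = z2^2 z3 + 3 z3^4
psi2 = {(0, 0, 3): 1, (0, 0, 0): -1, (1, 0, 1): 2}   # beta(z3) + z1 gamma(z3)
psi3 = {(0, 5, 0): 1, (0, 1, 0): 1}                  # delta(z2) = z2^5 + z2
psi2_bad = {**psi2, (1, 1, 0): 1}                    # extra term z1 z2

# alpha + z1 beta(z3) + z1 gamma(z2) + z1^2 delta(z3) + c z1^3
phi = {(0, 1, 2): 1, (1, 0, 2): 1, (1, 3, 0): 4, (2, 0, 1): -1, (3, 0, 0): 2}

print(in_sol_m([psi1, psi2, psi3]))      # True
print(in_sol_m([psi1, psi2_bad, psi3]))  # False: d2 does not kill z1 z2
print(in_sol_j(phi))                     # True
print(in_sol_j({(2, 1, 0): 1}))          # False: d1^2 d2 does not kill z1^2 z2
```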

Remark 3.5. The quotient $R/I$ embeds naturally into the direct sum of k copies of $R^k/M$, via $1 \mapsto (e_1, e_2, \ldots, e_k)$; the kernel of the map $R \rightarrow (R^k/M)^k$ is exactly the annihilator I. Every associated prime of a submodule is an associated prime of the ambient module, and $(R^k/M)^k$ has the same associated primes as $R^k/M$. This implies ${\textrm{Ass}}(I) \subseteq {\textrm{Ass}}(M)$. It would be worthwhile to understand how the differential primary decompositions of I, J and M are related, and to study implications for the solution spaces ${\textrm{Sol}}(I)$, ${\textrm{Sol}}(J)$, and ${\textrm{Sol}}(M)$. What relationships hold between these?

Lemma 3.6. Fix a

![]() $k \times l$

matrix

$k \times l$

matrix

![]() $A(\partial)$

and its module

$A(\partial)$

and its module

![]() $M \subseteq R^k$

as above. A point

$M \subseteq R^k$

as above. A point

![]() ${\bf u}\in \mathbb{C}^n$

lies in V(M) if and only if there exist constants

${\bf u}\in \mathbb{C}^n$

lies in V(M) if and only if there exist constants

![]() $c_1,\ldots,c_k \in \mathbb{C}$

, not all zero, such that

$c_1,\ldots,c_k \in \mathbb{C}$

, not all zero, such that

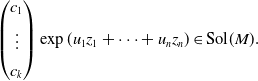

\begin{align} \begin{pmatrix} c_1 \\[4pt] \vdots \\[4pt] c_k \end{pmatrix} \exp(u_1z_1 + \dotsb + u_nz_n) \in \textrm{Sol}(M). \end{align}

\begin{align} \begin{pmatrix} c_1 \\[4pt] \vdots \\[4pt] c_k \end{pmatrix} \exp(u_1z_1 + \dotsb + u_nz_n) \in \textrm{Sol}(M). \end{align}

More precisely, (3.2) holds if and only if

![]() $ (c_1,\ldots,c_k) \cdot A({\bf u}) = 0 $

.

$ (c_1,\ldots,c_k) \cdot A({\bf u}) = 0 $

.

Proof. Let $a_{ij}(\partial)$ denote the entries of the matrix $A(\partial)$. Then (3.2) holds if and only if

\begin{equation*} \sum_{i=1}^k c_i \, a_{ij}(\partial) \bullet \exp(u_1z_1 + \dotsb + u_nz_n) \,=\, 0 \qquad {\textrm{for}}\ j = 1,\ldots,l. \end{equation*}

Since $a_{ij}(\partial) \bullet \exp(u_1z_1 + \dotsb + u_nz_n) = a_{ij}({\bf u}) \exp(u_1z_1 + \dotsb + u_nz_n)$, this is equivalent to

\begin{equation*} \Bigl( \sum_{i=1}^k c_i \, a_{ij}({\bf u}) \Bigr) \exp(u_1z_1 + \dotsb + u_nz_n) \,=\, 0 \qquad {\textrm{for}}\ j = 1,\ldots,l. \end{equation*}

Since the exponential function never vanishes, this condition holds if and only if $ (c_1,\ldots,c_k) \cdot A({\bf u}) $ is the zero vector in $\mathbb{C}^l$. We conclude that, for any given ${\bf u} \in \mathbb{C}^n$, the previous condition is satisfied for some $c \in \mathbb{C}^k \backslash \{0\}$ if and only if ${\textrm{rank}}(A({\bf u})) < k$. This happens if and only if all $k \times k$ minors of A vanish at ${\bf u}$, that is, ${\bf u} \in V(J) = V(M)$. Here we use Proposition 3.2.
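Lemma 3.6 reduces membership in V(M) to linear algebra at the point ${\bf u}$. A point ${\bf u}$ lies in V(M) if and only if ${\textrm{rank}}(A({\bf u})) < k$, and any nonzero left null vector $c$ of $A({\bf u})$ gives the solution $c\exp({\bf u}\cdot{\bf z})$. The Python sketch below applies this to the matrix A of Example 3.4. It evaluates A at rational points, computes the rank by exact Gaussian elimination with `fractions.Fraction`, and tests the condition $(c_1,\ldots,c_k)\cdot A({\bf u}) = 0$. Matrix entries are polynomials stored as dictionaries, and the helper names are ours.

```python
from fractions import Fraction


def evaluate(poly, u):
    """Evaluate a polynomial {exponent tuple: coeff} at the point u."""
    total = Fraction(0)
    for exps, coeff in poly.items():
        term = Fraction(coeff)
        for ui, e in zip(u, exps):
            term *= Fraction(ui) ** e
        total += term
    return total


def evaluate_matrix(A, u):
    return [[evaluate(entry, u) for entry in row] for row in A]


def rank(mat):
    """Rank over Q by Gaussian elimination with exact fractions."""
    rows = [list(row) for row in mat]
    r = 0
    for col in range(len(rows[0]) if rows else 0):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r


def in_support(A, u):
    """u lies in V(M) iff rank A(u) < k, the number of rows of A."""
    return rank(evaluate_matrix(A, u)) < len(A)


def is_exponential_solution(A, c, u):
    """(c_1, ..., c_k) exp(u . z) lies in Sol(M) iff (c_1, ..., c_k) . A(u) = 0."""
    Au = evaluate_matrix(A, u)
    return all(sum(Fraction(ci) * Au[i][j] for i, ci in enumerate(c)) == 0
               for j in range(len(Au[0])))


Z = {}
D1, D2, D3, D1SQ = {(1, 0, 0): 1}, {(0, 1, 0): 1}, {(0, 0, 1): 1}, {(2, 0, 0): 1}
A = [[D1, Z, Z, Z, Z], [Z, D1SQ, D2, Z, Z], [Z, Z, Z, D1, D3]]  # Example 3.4

print(in_support(A, (0, 5, 7)), in_support(A, (1, 0, 0)))  # True False
print(is_exponential_solution(A, (1, 0, 0), (0, 5, 7)))    # True: alpha = exp(5 z2 + 7 z3)
print(is_exponential_solution(A, (0, 1, 0), (0, 5, 7)))    # False
```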

Here is an alternative way to interpret the characteristic variety of a system of PDE:

Proposition 3.7. The solution space $\textrm{Sol}(M)$ contains an exponential solution $ q({\bf z}) \cdot {\textrm{exp}}( {\bf u}^t {\bf z}) $ if and only if ${\bf u} \in V(M)$. Here q is some nonzero vector of k polynomials in n unknowns, as in (2.2).

Proof. One direction is clear from Lemma 3.6. Next, suppose $ q(\mathbf{z}) \exp({\bf u}^t {\bf z}) \in \textrm{Sol}(M)$. The partial derivative of this function with respect to any unknown $z_i$ is also in ${\textrm{Sol}}(M)$. By the product rule,

\begin{equation*} \partial_i \bullet \bigl( q({\bf z}) \exp({\bf u}^t {\bf z}) \bigr) \,=\, (\partial_i \bullet q({\bf z})) \exp({\bf u}^t {\bf z}) + u_i \, q({\bf z}) \exp({\bf u}^t {\bf z}). \end{equation*}

Since ${\textrm{Sol}}(M)$ is a vector space, the exponential function $ (\partial_i \bullet q({\bf z})) \exp({\bf u}^t {\bf z})$ is in ${\textrm{Sol}}(M)$. The degree of $\partial_i \bullet q({\bf z})$ is less than that of $q({\bf z})$. Iterating, we can find a sequence $D = \partial_{i_1} \partial_{i_2} \dotsb \partial_{i_s}$ such that $D \bullet q$ is a nonzero constant vector and $(D \bullet q) \exp({\bf u}^t {\bf z}) \in \textrm{Sol}(M)$. Lemma 3.6 now implies that ${\bf u} \in V(M)$.

The solution space ${\textrm{Sol}}(M)$ to a submodule $M \subseteq R^k$ is a vector space over $\mathbb{C}$. It is infinite-dimensional whenever V(M) is a variety of positive dimension. This follows from Lemma 3.6: each of the infinitely many points u in V(M) contributes an exponential solution, and exponential functions with distinct exponents u are linearly independent. However, if V(M) is a finite subset of $\mathbb{C}^n$, then ${\textrm{Sol}}(M)$ is finite-dimensional. This is the content of the next theorem.

Theorem 3.8. Consider a module $M \subseteq R^k$, viewed as a system of linear PDE. Its solution space $\textrm{Sol}(M)$ is finite-dimensional over $\mathbb{C}$ if and only if V(M) has dimension 0. In this case, ${\textrm{dim}}_\mathbb{C} \textrm{Sol}(M) = {\textrm{dim}}_K(R^k/M) = {\textrm{amult}}(M)$. There is a basis of ${\textrm{Sol}}(M)$ given by vectors $ q({\bf z}) {\textrm{exp}}({\bf u}^t {\bf z})$, where ${\bf u} \in V(M)$ and $q({\bf z})$ runs over a finite set of polynomial vectors, whose cardinality is the length of M along the maximal ideal $\langle x_1 - u_1,\ldots,x_n-u_n \rangle$. There exist polynomial solutions if and only if $\mathfrak{m} = \langle x_1,\ldots,x_n \rangle$ is an associated prime of M. The polynomial solutions are found by solving the PDE given by the $\mathfrak{m}$-primary component of M.

Proof. This is the main result in Oberst’s article [Reference Oberst30], proved in the setting of injective cogenerators $\mathcal{F}$. The same statement for $\mathcal{F} = C^\infty(\Omega)$ appears in [Reference Björk7, Ch. 8, Theorem 7.1]. The scalar case $(k=1)$ is found in [Reference Michałek and Sturmfels27, Theorem 3.27]. The proof given there uses solutions in the power series ring, which is an injective cogenerator, and it generalizes to modules.
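Theorem 3.8 can be checked by hand for monomial ideals. Assume $k=1$ and that $M=I$ is a monomial ideal containing a pure power of every variable, so that $V(I) = \{0\}$. The operator $\partial^g$ annihilates $z^a$ exactly when g does not divide a componentwise. The monomial solutions $z^a$ therefore correspond to the standard monomials $x^a \notin I$; they span ${\textrm{Sol}}(I)$, and their number is ${\textrm{dim}}_K(R/I)$. The Python sketch below enumerates them. The monomial restriction is our assumption, made to keep the example exact and short, and the function names are ours.

```python
from itertools import product


def divides(g, a):
    """True if x^g divides x^a, i.e. g <= a componentwise."""
    return all(gi <= ai for gi, ai in zip(g, a))


def standard_monomials(gens, n):
    """Exponent vectors a with x^a not in the monomial ideal <gens>.

    Assumes <gens> contains a pure power x_i^{d_i} of every variable, so every
    standard monomial satisfies a_i < d_i and the search box is finite."""
    bounds = []
    for i in range(n):
        pure = [g[i] for g in gens
                if g[i] > 0 and all(g[j] == 0 for j in range(n) if j != i)]
        if not pure:
            raise ValueError("the ideal is not primary to the maximal ideal at 0")
        bounds.append(min(pure))
    box = product(*(range(d) for d in bounds))
    return [a for a in box if not any(divides(g, a) for g in gens)]


def kills(g, a):
    """True if the operator d^g annihilates the monomial z^a."""
    return not divides(g, a)


gens = [(2, 0), (1, 1), (0, 3)]         # I = <x1^2, x1*x2, x2^3>
basis = standard_monomials(gens, 2)
print(basis)       # [(0, 0), (0, 1), (0, 2), (1, 0)], i.e. 1, z2, z2^2, z1
print(len(basis))  # 4 = dim_K R/I = dim Sol(I)
print(all(kills(g, a) for g in gens for a in basis))  # True
```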

By a polynomial solution we mean a vector $q({\bf z})$ whose coordinates are polynomials. The $\mathfrak{m}$-primary component in Theorem 3.8 is computed by a double saturation step. When $M=I$ is an ideal, this double saturation is $I\,:\,(I\,:\,\mathfrak{m}^\infty)$, as seen in [Reference Michałek and Sturmfels27, Theorem 3.27]. For submodules M of $R^k$ with $k \geq 2$, we would compute $ M \,:\, {\textrm{Ann}}(R^k / (M \,:\, \mathfrak{m}^\infty) ) $. The inner colon $(M\,:\,\mathfrak{m}^\infty)$ is the intersection of all primary components of M whose variety $V_i$ does not contain the origin 0. It is computed as $(M\,:\,f) = \{m \in R^k\,:\, fm \in M \}$, where f is a random homogeneous polynomial of large degree. The outer colon is the module $(M\,:\,g)$, where g is a general polynomial in the ideal $\textrm{Ann}(R^k/(M\,:\,f))$. See also [Reference Chen and Cid-Ruiz9, Proposition 2.2].

It is an interesting problem to identify polynomial solutions when V(M) is no longer finite and to decide whether these are dense in the infinite-dimensional space of all solutions. Here “dense” refers to the topology on $\mathcal{F}$ used by Lomadze in [Reference Lomadze26]. The following result gives an algebraic characterization of the closure in ${\textrm{Sol}}(M)$ of the subspace of polynomial solutions.

Proposition 3.9. The polynomial solutions are dense in ${\textrm{Sol}}(M)$ if and only if the origin 0 lies in every associated variety $V_i$ of the module M. If this fails, then the topological closure of the space of polynomial solutions $q({\bf z})$ to M is the solution space of $M \,:\, \textrm{Ann}(R^k/(M \,:\, \mathfrak{m}^\infty))$.

Proof. This proposition is our reinterpretation of Lomadze’s result in [Reference Lomadze26, Theorem 3.1].

The result gives rise to algebraic algorithms for answering analytic questions about a system of PDE. The property in the first sentence can be decided by running the primary decomposition algorithm in [Reference Chen and Cid-Ruiz9]. For the second sentence, we need to compute a double saturation as above. This can be carried out in Macaulay2 as well.

4. Differential primary decomposition

We now shift gears and pass to a setting that is dual to the one we have seen so far. Namely, we discuss differential primary decompositions [Reference Chen and Cid-Ruiz9, Reference Cid-Ruiz and Sturmfels12]. That duality is subtle and can be confusing at first sight. To mitigate this, we introduce new notation. We set $x_i = \partial_i = \partial_{z_i}$ for $i=1,\ldots,n$. Thus, R is now the polynomial ring $K[x_1,\ldots,x_n]$. This is the notation we are used to from algebra courses (such as [Reference Michałek and Sturmfels27]). We write $\partial_{x_1},\ldots,\partial_{x_n}$ for the differential operators corresponding to $x_1,\ldots,x_n$. Later on, we also identify $z_i = \partial_{x_i}$, and we think of the unknowns ${\bf x}$ and ${\bf z}$ in the multipliers $B_i({\bf x},{\bf z})$ as dual in the sense of the Fourier transform.

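The ring of differential operators on the polynomial ring R is the Weyl algebra

\begin{equation*} D_n \,=\, K\langle x_1,\ldots,x_n,\partial_{x_1},\ldots,\partial_{x_n} \rangle. \end{equation*}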

The 2n generators commute, except for the n relations $\partial_{x_i} x_i - x_i \partial_{x_i} = 1$, which express the Product Rule from Calculus. Elements in the Weyl algebra $D_n$ are linear differential operators with polynomial coefficients. We write $\delta \bullet p$ for the result of applying $\delta \in D_n$ to a polynomial $p = p({\bf x})$ in R. For instance, $x_i \bullet p = x_i p$ and $\partial_{x_i} \bullet p = \partial p/\partial x_i$. Let $D_n^k$ denote the set of k-tuples of differential operators in $D_n$. These operate on the free module $R^k$ as follows:

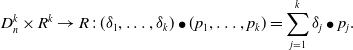

\begin{equation*} D_n^k \times R^k \rightarrow R \,:\, (\delta_1,\ldots,\delta_k) \bullet (p_1,\ldots,p_k)= \sum_{j=1}^k \delta_j \bullet p_j. \end{equation*}

\begin{equation*} D_n^k \times R^k \rightarrow R \,:\, (\delta_1,\ldots,\delta_k) \bullet (p_1,\ldots,p_k)= \sum_{j=1}^k \delta_j \bullet p_j. \end{equation*}

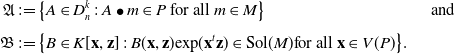
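To make the action $\delta \bullet p$ concrete, here is a minimal Python sketch that is not taken from the source. It assumes that polynomials are stored as dictionaries from exponent tuples to integer coefficients and that each element of $D_n$ is written in the normal order $\sum c_{a,b}\, {\bf x}^a \partial_{\bf x}^b$, with all multiplications to the left of all derivatives. The helper names `act`, `var`, `partial` and `pair` are hypothetical. The sketch checks the relation $\partial_{x_i} x_i - x_i \partial_{x_i} = 1$ on a sample polynomial and evaluates the pairing $D_n^k \times R^k \rightarrow R$.

```python
from typing import Dict, List, Tuple

Exp = Tuple[int, ...]
Poly = Dict[Exp, int]               # {exponent vector: coefficient}
Weyl = Dict[Tuple[Exp, Exp], int]   # {(a, b): c} stands for sum of c * x^a * d^b


def falling(e: int, k: int) -> int:
    """e (e-1) ... (e-k+1), the factor produced by differentiating x^e k times."""
    result = 1
    for t in range(k):
        result *= e - t
    return result


def clean(p: Poly) -> Poly:
    """Drop zero coefficients so that equal polynomials compare equal."""
    return {e: c for e, c in p.items() if c != 0}


def act(delta: Weyl, p: Poly) -> Poly:
    """Compute delta • p: first apply d^b, then multiply by x^a."""
    out: Poly = {}
    for (a, b), c in delta.items():
        for e, q in p.items():
            coeff = c * q
            for ei, bi in zip(e, b):
                coeff *= falling(ei, bi)  # becomes 0 when bi > ei
            if coeff == 0:
                continue
            new = tuple(ei - bi + ai for ei, bi, ai in zip(e, b, a))
            out[new] = out.get(new, 0) + coeff
    return clean(out)


def var(i: int, n: int) -> Weyl:
    """The operator x_i (multiplication by the i-th variable, 0-based index)."""
    a = tuple(1 if j == i else 0 for j in range(n))
    return {(a, (0,) * n): 1}


def partial(i: int, n: int) -> Weyl:
    """The operator d/dx_i (0-based index)."""
    b = tuple(1 if j == i else 0 for j in range(n))
    return {((0,) * n, b): 1}


def pair(deltas: List[Weyl], ps: List[Poly]) -> Poly:
    """(delta_1, ..., delta_k) • (p_1, ..., p_k) = sum_j delta_j • p_j."""
    out: Poly = {}
    for delta, p in zip(deltas, ps):
        for e, c in act(delta, p).items():
            out[e] = out.get(e, 0) + c
    return clean(out)


# p = x1^3 x2 + 5 x1 x2^2 in K[x1, x2]
p = {(3, 1): 1, (1, 2): 5}
d1, x1, x2 = partial(0, 2), var(0, 2), var(1, 2)

print(act(d1, p))  # {(2, 1): 3, (0, 2): 5}, i.e. 3 x1^2 x2 + 5 x2^2

# Product Rule: d1 • (x1 • p) - x1 • (d1 • p) equals p
lhs = act(d1, act(x1, p))
rhs = act(x1, act(d1, p))
commutator = clean({e: lhs.get(e, 0) - rhs.get(e, 0) for e in set(lhs) | set(rhs)})
print(commutator == p)  # True

# Pairing for k = 2: (d1, x2) • (x1^2, x1) = 2 x1 + x1 x2
print(pair([d1, x2], [{(2, 0): 1}, {(1, 0): 1}]))  # {(1, 0): 2, (1, 1): 1}
```

Keeping every operator in normal order means that applying it reduces to differentiating and then multiplying, so no rewriting with the commutation relations is needed inside `act`.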

Fix a submodule M of $R^k$ and let $P_1,\ldots,P_s$ be its associated primes, as in Section 3. A differential primary decomposition of M is a list $\mathcal{A}_1,\ldots,\mathcal{A}_s$ of finite subsets of $D_n^k$ such that

\begin{equation*} M \,=\, \bigl\{\, m \in R^k \,:\, \delta \bullet m \in P_i \ \textrm{for all}\ \delta \in \mathcal{A}_i \ \textrm{and}\ i = 1,2,\ldots,s \,\bigr\}. \end{equation*}

This is a membership test for the module M using differential operators. This test is geometric since the polynomial $\delta \bullet m$ lies in $P_i$ if and only if it vanishes on the variety $V_i=V(P_i)$.
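As a small illustration of this membership test, consider the following example of our own choosing (it is not taken from the source): the ideal $M = \langle x_1^2, x_1x_2\rangle = \langle x_1\rangle \cap \langle x_1^2, x_2\rangle$ in $R = K[x_1,x_2]$, so $k=1$, with associated primes $P_1 = \langle x_1\rangle$ and the embedded prime $P_2 = \langle x_1, x_2\rangle$. Taking $\mathcal{A}_1 = \{1\}$ and $\mathcal{A}_2 = \{\partial_{x_1}\}$ gives a differential primary decomposition: if $m = x_1 q$, then $\partial_{x_1} \bullet m = q + x_1 (\partial_{x_1} \bullet q)$ vanishes at the origin exactly when $q(0)=0$, that is, when $m \in x_1\langle x_1,x_2\rangle = M$. The Python sketch below, whose helper names are hypothetical, compares this test with direct membership in the monomial ideal on all polynomials of degree at most 3 with 0/1 coefficients.

```python
from itertools import product
from typing import Dict, Tuple

Poly = Dict[Tuple[int, int], int]  # {(e1, e2): c} stands for sum of c * x1^e1 * x2^e2


def d_x1(p: Poly) -> Poly:
    """Partial derivative with respect to x1."""
    return {(e1 - 1, e2): e1 * c for (e1, e2), c in p.items() if e1 > 0 and c != 0}


def in_P1(p: Poly) -> bool:
    """P1 = <x1>: p vanishes on the line x1 = 0 iff every monomial contains x1."""
    return all(e1 >= 1 for (e1, _), c in p.items() if c != 0)


def in_P2(p: Poly) -> bool:
    """P2 = <x1, x2>: p vanishes at the origin iff its constant term is zero."""
    return p.get((0, 0), 0) == 0


# Differential primary decomposition as (prime membership test, Noetherian operators)
decomposition = [
    (in_P1, [lambda p: p]),  # A_1 = {1}
    (in_P2, [d_x1]),         # A_2 = {d/dx1}
]


def in_M_via_operators(p: Poly) -> bool:
    """Apply every operator in A_i and test membership in the prime P_i."""
    return all(in_P(delta(p)) for in_P, ops in decomposition for delta in ops)


def in_M_direct(p: Poly) -> bool:
    """M = <x1^2, x1 x2> is monomial: every monomial of p must be divisible by a generator."""
    return all(e1 >= 2 or (e1 >= 1 and e2 >= 1) for (e1, e2), c in p.items() if c != 0)


monomials = [(i, j) for i in range(4) for j in range(4) if i + j <= 3]
mismatches = 0
for coeffs in product([0, 1], repeat=len(monomials)):
    p = {m: c for m, c in zip(monomials, coeffs) if c}
    if in_M_via_operators(p) != in_M_direct(p):
        mismatches += 1
print(mismatches)  # 0
```

The operator $\partial_{x_1}$ attached to the embedded prime $P_2$ is what separates $x_1 \notin M$ from the elements of M, since both lie in $P_1$.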

Theorem 4.1 Every submodule M of $R^k$ has a differential primary decomposition. We can choose the sets $\mathcal{A}_1,\ldots,\mathcal{A}_s$ such that $|\mathcal{A}_i|$ is the arithmetic length of M along the prime $P_i$.

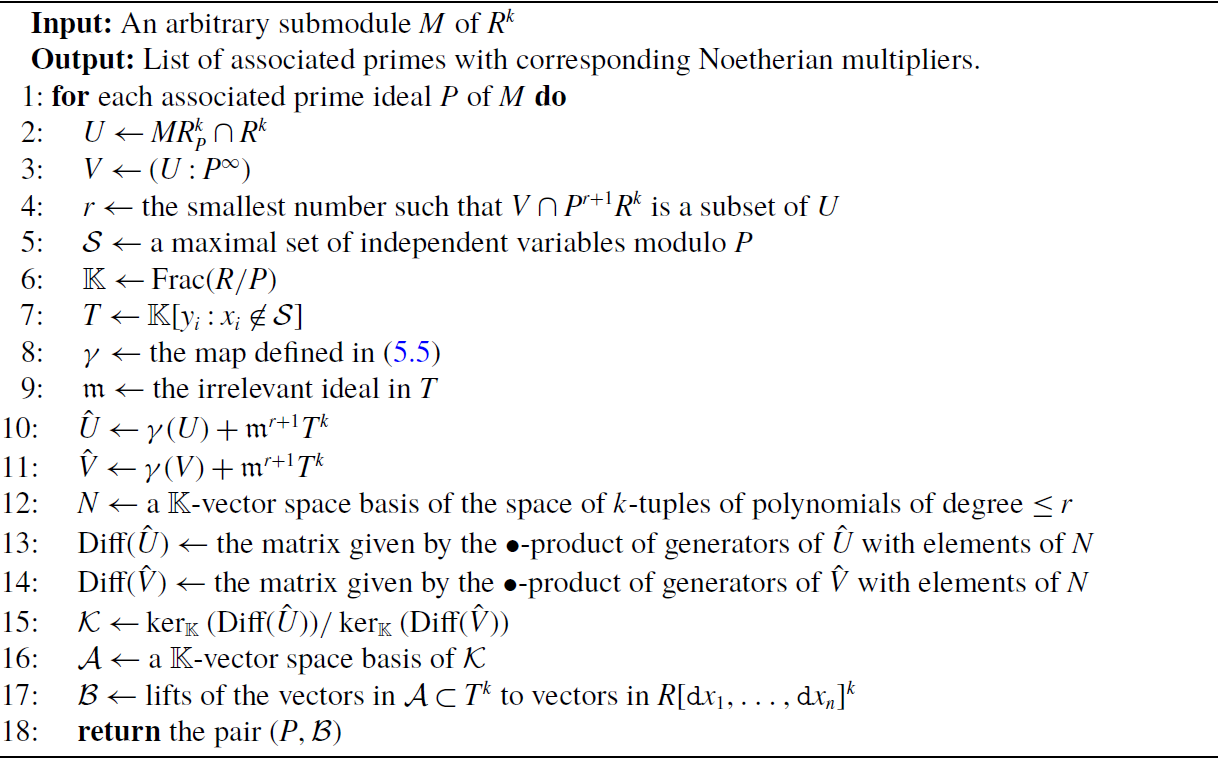

Proof and discussion. The result is proved in [Reference Cid-Ruiz and Sturmfels12] and further refined in [Reference Chen and Cid-Ruiz9]. These sources also develop an algorithm. We shall explain this in Section 5, along with a discussion of the Macaulay2 command solvePDE, which computes differential primary decompositions.


The differential operators in $\mathcal{A}_1,\ldots,\mathcal{A}_s$ are known as Noetherian operators in the literature; see [Reference Chen, Härkönen, Krone and Leykin10, Reference Cid-Ruiz, Homs and Sturmfels11, Reference Damiano, Sabadini and Struppa15, Reference Oberst31]. Theorem 4.1 says that we can find a collection of $\textrm{amult}(M) = m_1 + \cdots + m_s$ Noetherian operators in $D_n^k$ that characterize membership in the module M, where $m_i = |\mathcal{A}_i|$ is the arithmetic length of M along $P_i$.
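The count can be checked by hand in a special case, under standard facts that we assume here rather than take from the source. Let $M \subseteq R$ (so $k=1$) be a monomial ideal whose only associated prime is $\langle x_1,\ldots,x_n\rangle$. Then M contains a pure power of every variable, its arithmetic length is $\dim_K R/M$, which equals the number of standard monomials ${\bf x}^a \notin M$, and one may take the Noetherian operators $\partial_{\bf x}^a$ for those exponents a, because $(\partial_{\bf x}^a \bullet p)(0)$ is $a!$ times the coefficient of ${\bf x}^a$ in p. The hypothetical Python helpers below enumerate the standard monomials and run the resulting membership test.

```python
from itertools import product
from typing import Dict, List, Tuple

Exp = Tuple[int, ...]
Poly = Dict[Exp, int]  # {exponent vector: coefficient}


def divides(g: Exp, a: Exp) -> bool:
    """x^g divides x^a."""
    return all(gi <= ai for gi, ai in zip(g, a))


def standard_monomials(gens: List[Exp], n: int) -> List[Exp]:
    """Exponents a with x^a outside the monomial ideal <x^g : g in gens>.

    Assumes the ideal is primary to <x_1, ..., x_n>, i.e. some generator is a
    pure power of each variable, so that the list is finite.
    """
    bounds = []
    for i in range(n):
        powers = [g[i] for g in gens if g[i] > 0 and sum(g) == g[i]]
        if not powers:
            raise ValueError("ideal is not primary to the origin")
        bounds.append(min(powers))
    box = product(*(range(b) for b in bounds))
    return [a for a in box if not any(divides(g, a) for g in gens)]


def derivative(p: Poly, a: Exp) -> Poly:
    """Apply d^a = d_{x_1}^{a_1} ... d_{x_n}^{a_n} to p."""
    out: Poly = {}
    for e, c in p.items():
        if not divides(a, e):
            continue  # this monomial is killed by d^a
        coeff = c
        for ei, ai in zip(e, a):
            for t in range(ai):
                coeff *= ei - t
        new = tuple(ei - ai for ei, ai in zip(e, a))
        out[new] = out.get(new, 0) + coeff
    return out


def in_M(p: Poly, std: List[Exp], n: int) -> bool:
    """p lies in M iff (d^a p)(0) = 0 for every standard exponent a."""
    origin = (0,) * n
    return all(derivative(p, a).get(origin, 0) == 0 for a in std)


gens = [(2, 0), (1, 1), (0, 3)]  # M = <x1^2, x1 x2, x2^3>
std = standard_monomials(gens, 2)
print(std)       # [(0, 0), (0, 1), (0, 2), (1, 0)]
print(len(std))  # 4 operators: 1, d_x2, d_x2^2, d_x1
print(in_M({(1, 1): 1, (0, 3): -2}, std, 2))  # True
print(in_M({(0, 2): 1}, std, 2))              # False
```

For monomial ideals primary to the origin the operators decouple completely, one per standard monomial; in general the operators in Theorem 4.1 also carry polynomial coefficients and mix the components.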

Remark 4.2 The construction of Noetherian operators is studied in [Reference Björk7, Reference Chen, Cid-Ruiz, Härkönen, Krone and Leykin8, Reference Chen, Härkönen, Krone and Leykin10, Reference Cid-Ruiz, Homs and Sturmfels11, Reference Hörmander23, Reference Oberst31]. Some of these sources offer explicit methods, while others remain at an abstract level. All previous methods share one serious shortcoming: they yield operators separately for each primary component $M_i$ of M. They do not take into account how one primary component is embedded into another. This leads to a number of operators that can be much larger than $\textrm{amult}(M)$. We refer to [Reference Cid-Ruiz and Sturmfels12, Example 5.6] for an instance from algebraic statistics where the previous methods require 1044 Noetherian operators, while $\textrm{amult}(M) = 207$ operators suffice.

While Theorem 4.1 makes no claim of minimality, it is known that $\textrm{amult}(M)$ is the minimal number of Noetherian operators required for a differential primary decomposition of a certain desirable form. To make this precise, we begin with a few necessary definitions. For any given subset $\mathcal{S}$ of $\{x_1,\ldots,x_n\}$, the relative Weyl algebra is defined as the subring of the Weyl algebra $D_n$ that uses only the differential operators corresponding to variables not in $\mathcal{S}$:

\begin{equation*} D_n(\mathcal{S}) \,=\, R\langle \partial_{x_i} \,:\, x_i \notin \mathcal{S}\rangle \,\subseteq\, D_n. \end{equation*}

Thus, if $\mathcal{S} = \emptyset$, then $D_n(\mathcal{S}) = D_n$, and if $\mathcal{S} = \{x_1,\ldots,x_n\}$, then $D_n(\mathcal{S}) = R= K[x_1,\ldots,x_n]$.
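Membership in $D_n(\mathcal{S})$ can be read off from the normal form $\sum c_{a,b}\, {\bf x}^a \partial_{\bf x}^b$ of an operator: assuming this standard basis of $D_n$, an element lies in $D_n(\mathcal{S})$ exactly when no term involves $\partial_{x_i}$ for $x_i \in \mathcal{S}$. The following minimal Python sketch uses a hypothetical encoding in which $\mathcal{S}$ is a set of 0-based variable indices (index 0 stands for $x_1$). It checks this condition and recovers the two extreme cases above.

```python
from typing import Dict, Set, Tuple

Exp = Tuple[int, ...]
Weyl = Dict[Tuple[Exp, Exp], int]  # {(a, b): c} stands for sum of c * x^a * d^b


def in_relative_weyl(delta: Weyl, S: Set[int]) -> bool:
    """True iff no nonzero term of delta differentiates a variable x_i with i in S."""
    return all(
        b[i] == 0
        for (_, b), c in delta.items() if c != 0
        for i in S
    )


op1 = {((2, 0), (0, 1)): 1}   # x1^2 d_x2
op2 = {((0, 1), (1, 0)): 3}   # 3 x2 d_x1
poly = {((1, 1), (0, 0)): 5}  # 5 x1 x2, an element of R

print(in_relative_weyl(op1, {0}))      # True:  D_2({x1}) contains d_x2
print(in_relative_weyl(op2, {0}))      # False: d_x1 is excluded
print(in_relative_weyl(op2, set()))    # True:  S empty gives all of D_2
print(in_relative_weyl(op1, {0, 1}))   # False: S = {x1, x2} leaves only R
print(in_relative_weyl(poly, {0, 1}))  # True
```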

For any prime ideal

![]() $P_i$

in R we fix a set

$P_i$

in R we fix a set

![]() $\mathcal{S}_i \subseteq \{x_1,\ldots,x_n\}$

that satisfies

$\mathcal{S}_i \subseteq \{x_1,\ldots,x_n\}$

that satisfies

![]() $K[\mathcal{S}_i] \cap P_i = \{0\}$

and is maximal with this property. Thus,

$K[\mathcal{S}_i] \cap P_i = \{0\}$

and is maximal with this property. Thus,

![]() $\mathcal{S}_i$

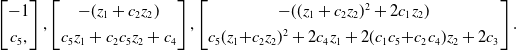

is a maximal independent set of coordinates on the irreducible variety

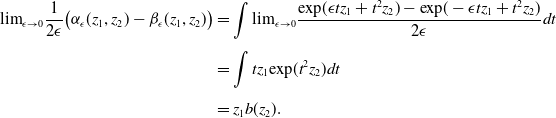

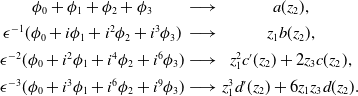

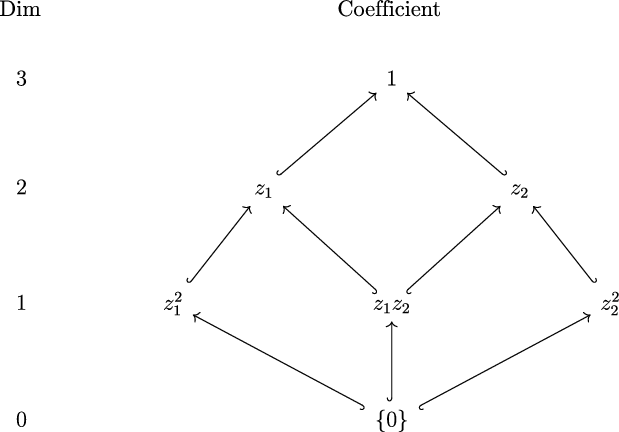

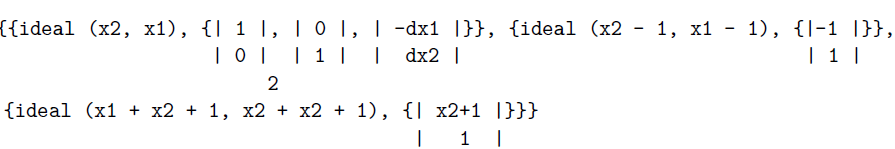

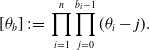

$\mathcal{S}_i$