Introduction

The classification of simple Lie algebras over algebraically closed fields is a classical subject, and the situation is, in many aspects, well understood. That is not the case at all over arbitrary fields. Typically, this situation is studied by first extending to a splitting field (e.g., the algebraic closure) and then performing Galois descent, using tools such as Galois cohomology.

This is not the only possible approach, however. Inspired by the successful applications (e.g., by Efim Zel’manov and Alekseĭ Kostrikin [ZK90]) of sandwich elements (i.e., nonzero elements x in a Lie algebra L such that $\operatorname{ad}_x^2 = 0$), various people have studied simple Lie algebras through the concept of extremal elements. A nonzero element $x \in L$ is called extremal if $[x, [x, L]] \leq kx$ (together with an additional axiom that is relevant only in characteristic $2$); notice that this implies $\operatorname{ad}_x^3 = 0$. A typical example of an extremal element is a long root element in a classical Lie algebra. In fact, each extremal element spans a one-dimensional inner ideal of the Lie algebra, a notion going back to John Faulkner [Fau73] and Georgia Benkart [Ben74]. The first paper that we are aware of that explicitly underlines the importance of one-dimensional inner ideals is Alexander Premet’s paper [Pre86] from 1986; see, in particular, its main result stating that any finite-dimensional Lie algebra over an algebraically closed field of characteristic $p>5$ contains a one-dimensional inner ideal (Theorem 1). The idea of extremal elements has also famously been used by Vladimir Chernousov in his proof of the Hasse principle for algebraic groups of type $E_8$ [Che89]. (He called the extremal elements root elements because in his situation, they belong to root groups. A detailed exposition can be found in [PR94, Chapter 6, Section 8]; see, in particular, the discussion about root elements starting on p. 387.)

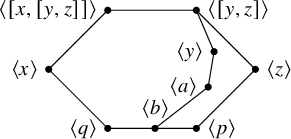

Around the turn of the century, Arjeh Cohen and his collaborators (Anja Steinbach, Rosane Ushirobira, David Wales, Gábor Ivanyos, Dan Roozemond) obtained the clever insight that the extremal elements have a geometric interpretation, and the corresponding one-dimensional inner ideals can serve as the point set of a so-called shadow space of a building [CSUW01, CI06, CI07, CIR08]. That idea led them to the notion of a root filtration space and makes it possible to visualize the different relations between extremal elements. In particular, distinct extremal points can be in four possible relations: collinear, symplectic, special or hyperbolic. These notions will play an important role in Sections 2 and 3 of our paper. In particular, a crucial ingredient will be the result by Cohen and Ivanyos that each hyperbolic pair of extremal points gives rise to a $5$-grading of the Lie algebra.

Since then, extremal elements in Lie algebras, and the corresponding extremal geometry, have been studied by various people; see, for instance, [Ditp08, itpPR09, Roo11, Coh12, CRS15, FL16, CF18, CM21, CF23] and [FL19, Chapter 6]. The origin of the terminology ‘extremal elements’ remains slightly mysterious. We have learned from Arjeh Cohen that he believes that he picked it up from Jean-Pierre Serre and consequently used it in their paper [CSUW01], but neither Cohen nor we have been able to find a written source confirming this origin, and in fact, Serre informed us that he does not think that he is responsible for this terminology, but ‘that it is possible that [he] used it once in a discussion’.

In our paper, we will adopt a different point of view on extremal elements and relate the Lie algebras to two types of exceptional algebraic structures: cubic norm structures and quadrangular algebras.

Cubic norm structures are well known: The Book of Involutions [KMRT98] devotes the whole of Chapter IX to them. In particular, §38 and the Notes at the end of Chapter IX contain a lot of additional information and (historical) background. The key players in this theory are Hans Freudenthal [Fre54], Tonny Springer [Spr62], Kevin McCrimmon [McC69], Holger Petersson and Michel Racine [PR84, PR86a, PR86b]. We will also encounter ‘twin cubic norm structures’ (or ‘cubic norm pairs’), a notion that seems to have appeared only once before in the literature, in a paper by John Faulkner [Fau01].

Quadrangular algebras are certainly not so well known. They first appeared implicitly in the work of Jacques Tits and Richard Weiss on the classification of Moufang polygons, which are geometric structures (spherical buildings of rank 2) associated to simple linear algebraic groups of relative rank 2 [TW02]. The first explicit definition of these algebras, in the anisotropic case, appeared in Weiss’ monograph [Wei06]. The general definition of arbitrary quadrangular algebras is even more recent and is due to Bernhard Mühlherr and Richard Weiss [MW19]. The definition looks rather daunting (see Definition 1.17 below), mostly because of technicalities in characteristic $2$, although the definition itself is characteristic-free.

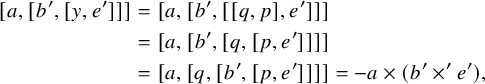

It is worth pointing out that the anisotropic quadrangular algebras are precisely the parametrizing structures for Moufang quadrangles and that the anisotropic cubic norm structures are precisely the parametrizing structures for Moufang hexagons. More generally, arbitrary quadrangular algebras and arbitrary cubic norm structures (which might or might not be anisotropic) have been used in the theory of Tits quadrangles and Tits hexagons, respectively [MW22]. See, in particular, the appendix of [MW19] for the connection between quadrangular algebras and Tits quadrangles, and [MW22, Chapter 2] for the connection between cubic norm structures and Tits hexagons. Notice that Moufang quadrangles are, in fact, buildings of type $B_2$ or $BC_2$, and that Moufang hexagons are buildings of type $G_2$. (In the language of linear algebraic groups, this is the type of the relative root system.)

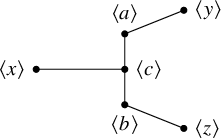

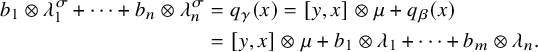

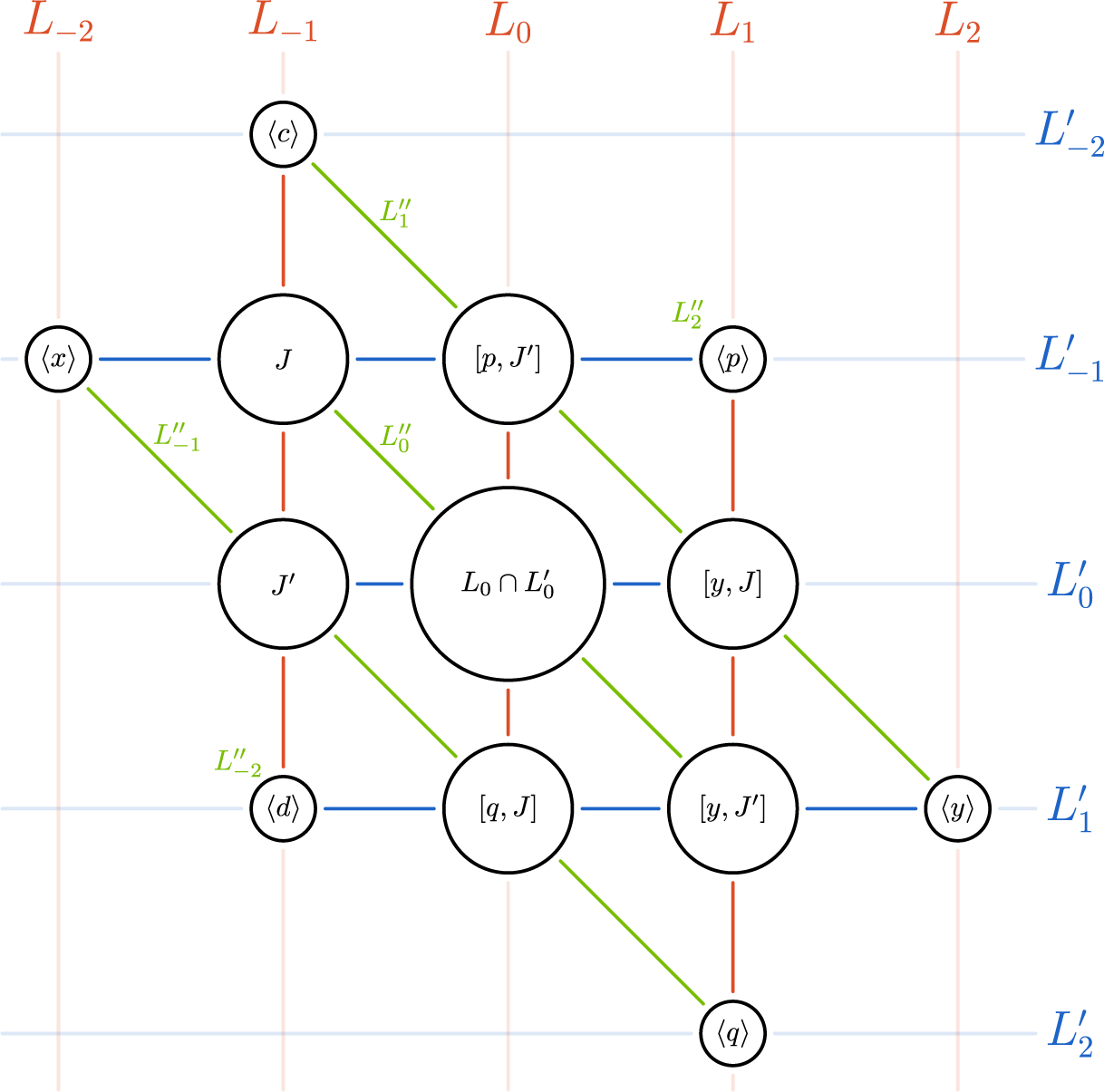

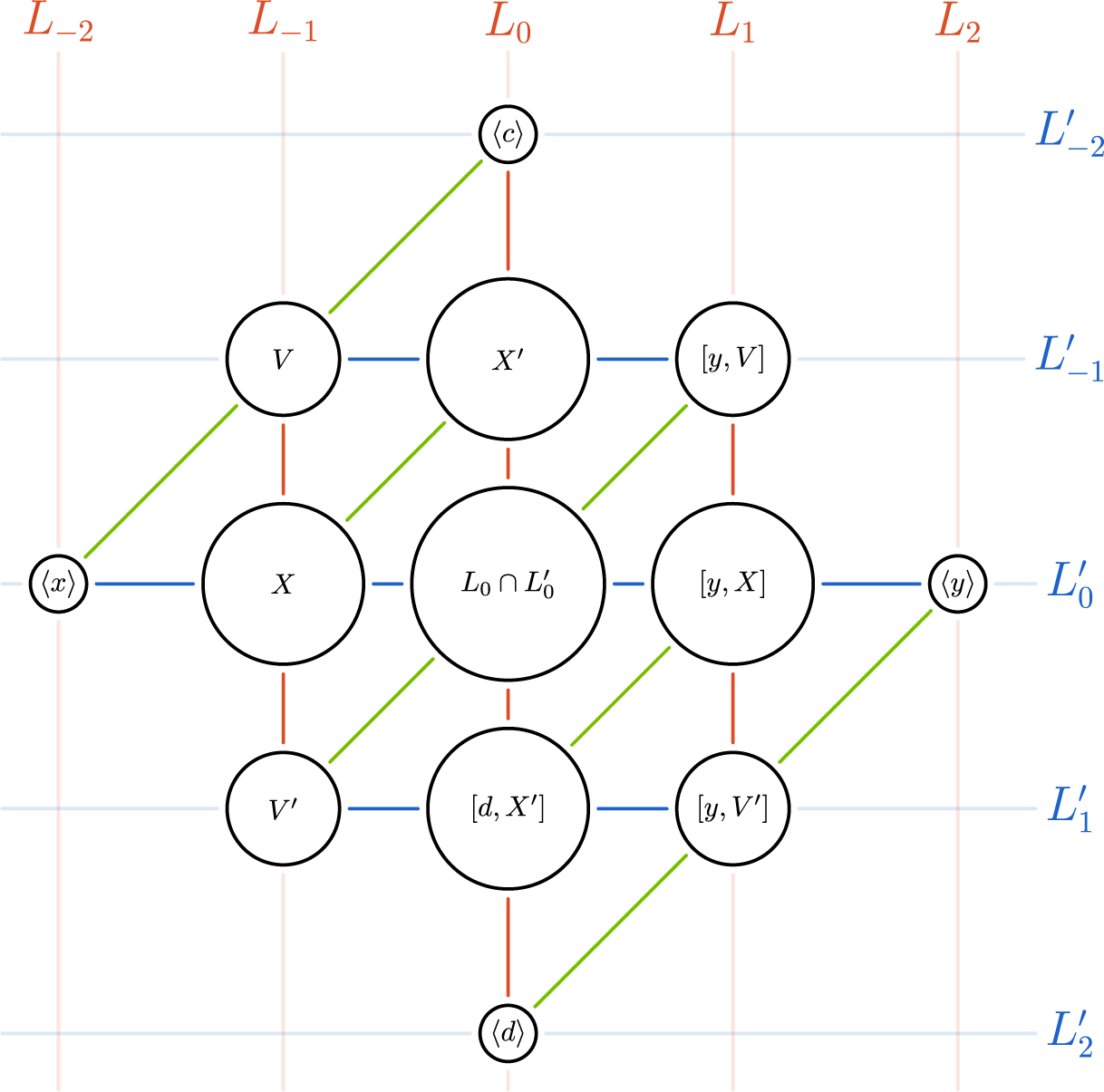

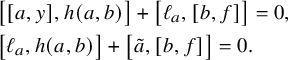

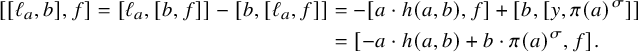

In our approach, we will start from a simple Lie algebra admitting extremal elements satisfying two possible sets of assumptions (see Theorems B and C below). In each of these two cases, we will use two different hyperbolic pairs of extremal points, giving rise to two different

![]() $5$

-gradings on the Lie algebra, so we obtain a

$5$

-gradings on the Lie algebra, so we obtain a

![]() $5 \times 5$

-graded Lie algebra. Depending on which of the two cases we are considering, this

$5 \times 5$

-graded Lie algebra. Depending on which of the two cases we are considering, this

![]() $5 \times 5$

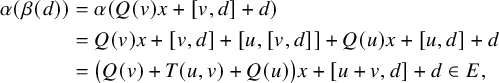

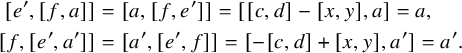

-grading takes a different shape. For the reader’s convenience, we have reproduced smaller versions of Figures 1 and 2 of these gradings below, that can be found in full size on pages 32 and 42, respectively.

$5 \times 5$

-grading takes a different shape. For the reader’s convenience, we have reproduced smaller versions of Figures 1 and 2 of these gradings below, that can be found in full size on pages 32 and 42, respectively.

A closer look at these gradings reveals that, in fact, the $5 \times 5$-grading in Figure 1 is a $G_2$-grading, and the $5 \times 5$-grading in Figure 2 is a $BC_2$-grading. We will show that in the first case, the Lie algebra is parametrized by a cubic norm pair, in the sense that each of the pieces arising in the decomposition, as well as the explicit formulas describing their Lie brackets, are determined by the structure of this cubic norm pair (Theorem B). In the second case, it turns out that the Lie algebra is parametrized by a quadrangular algebra (Theorem C).

The study of Lie algebras graded by root systems is not new, and we refer, for instance, to the work of Bruce Allison, Georgia Benkart, Yun Gao and Efim Zel’manov [ABG02, BZ96]. However, their goals have been rather different from ours. Whereas earlier research has focused on classifying all Lie algebras graded by certain root systems in terms of central extensions of TKK-constructions, we focus on simple Lie algebras, and our goal is to understand the internal structure of the Lie algebra, via the different pieces of the grading, directly in terms of an algebraic structure (see Footnote 1). In addition, we do not assume the existence of a $BC_2$- or $G_2$-grading; instead, it is a consequence of some natural conditions on the extremal elements of the Lie algebra. These conditions are satisfied very often, and in fact, there are many Lie algebras for which both sets of conditions are satisfied. Such Lie algebras admit both a $BC_2$- and a $G_2$-grading, and hence, they can be parametrized both by a cubic norm structure and by a quadrangular algebra! It is our hope that this observation will lead to a deeper understanding of connections between different forms of exceptional Lie algebras (and exceptional algebraic groups, as well as their geometric counterparts, namely the corresponding Moufang polygons and Tits polygons mentioned above). We have indicated a first impression in this direction in Remark 1.2 in the appendix.

We formulate our main results. We emphasize that k is an arbitrary field of arbitrary characteristic.

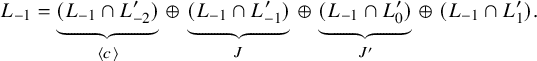

Theorem A. Let L be a simple Lie algebra. Assume that for some Galois extension $k'/k$ with $k'\neq \mathbb{F}_2$, $L_{k'} := L \otimes_k k'$ is a simple Lie algebra generated by its pure extremal elements such that the extremal geometry contains lines.

Consider a hyperbolic pair $(x,y)$ of extremal elements and let $L=L_{-2}\oplus L_{-1}\oplus L_0\oplus L_1\oplus L_2$ be the associated $5$-grading.

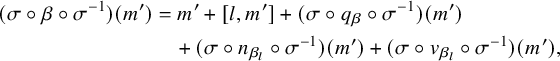

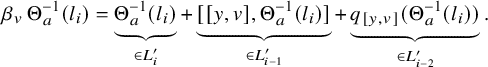

Then for each $l \in L_1$, we can define an ‘l-exponential automorphism’

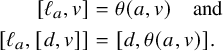

where $q_{\alpha},n_{\alpha},v_{\alpha} \colon L \to L$ are maps with

$$ \begin{align*} q_{\alpha}(L_i) \subseteq L_{i+2}, \quad n_{\alpha}(L_i) \subseteq L_{i+3}, \quad v_{\alpha}(L_i) \subseteq L_{i+4} \end{align*} $$

for all $i\in [-2,2]$.

This is shown in Theorem 3.16 below, with the essential part being obtained by Theorem 3.13, along with uniqueness results and other auxiliary results. (Notice that $\alpha$ is not unique: there is a one-parameter family of such automorphisms.)

In particular, when $\operatorname{char}(k) \neq 2$, we can always choose $q_\alpha (m) = \tfrac{1}{2}[l, [l, m]]$, and if, in addition, $\operatorname{char}(k) \neq 3$, then it is a consequence that $n_\alpha (m) = \tfrac{1}{6} [l, [l, [l, m]]]$ and that $v_\alpha (m) = \tfrac{1}{24} [l, [l, [l, [l, m]]]]$, so our l-exponential automorphisms really are a generalization of the usual exponential automorphisms.

It is worth mentioning that the condition ‘being generated by pure extremal elements’ is easily fulfilled. For instance, the main result of [CIR08] shows that as soon as $\operatorname{char}(k)> 5$, each simple Lie algebra admitting a single pure extremal element is, in fact, generated by its extremal elements. (See also [FL19, Chapter 6].) However, there exist interesting simple Lie algebras not admitting any extremal element, and in that case, studying the minimal inner ideals instead is an interesting approach. We refer, for instance, to our earlier work [DMM24] for examples of this situation.

In addition, notice that the condition that there exists a Galois extension $k'/k$ such that the extremal geometry of the Lie algebra over $k'$ contains lines is also easily fulfilled. Indeed, if $\operatorname{char}(k) \neq 2$, then it follows from [CF23, Theorem 1.1] and the paragraph following this result that unless the Lie algebra is a symplectic Lie algebra (i.e., of the form $\mathfrak{fsp}(V, f)$ for some nondegenerate symplectic space $(V,f)$), there always exists an extension $k'/k$ of degree at most $2$ fulfilling the requirement that the extremal geometry contains lines. (It is a more subtle fact that the simplicity of the Lie algebra is preserved after such a base extension, but we deal with this in Lemma 5.2.)

Our Theorems B and C are very similar in structure; they differ in their conditions. In Theorem B, the main condition is that we require the existence of lines already over the base field k. In Theorem C, the main condition is the existence of a symplectic pair of extremal elements. (Notice that by the preceding discussion, condition (i) in the statement of Theorem C essentially only excludes symplectic Lie algebras.)

Theorem B. Let L be a simple Lie algebra defined over a field k with $|k| \geq 4$. Assume that L is generated by its pure extremal elements and that the extremal geometry contains lines.

Then we can find two hyperbolic pairs $(x,y)$ and $(p,q)$ of extremal elements, with corresponding $5$-gradings

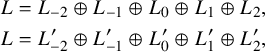

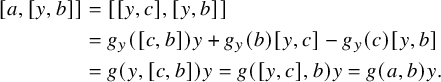

$$ \begin{align*} L &= L_{-2}\oplus L_{-1}\oplus L_0\oplus L_1\oplus L_2, \\ L &= L^{\prime}_{-2}\oplus L^{\prime}_{-1}\oplus L^{\prime}_0\oplus L^{\prime}_1\oplus L^{\prime}_2, \end{align*} $$


such that the gradings intersect as in Figure 1. Moreover, the structure of the Lie algebra induces various maps between the different components of this $5 \times 5$-grading, resulting in a ‘twin cubic norm structure’ on the pair

If the norm of this structure is not identically zero, then this results in a genuine cubic norm structure.

Theorem C. Let L be a simple Lie algebra defined over a field k with $|k| \geq 3$. Assume that L is generated by its pure extremal elements and that

- (i) there exists a Galois extension $k'/k$ of degree at most $2$ such that the extremal geometry of $L\otimes k'$ contains lines;

- (ii) there exist symplectic pairs of extremal elements.

Then we can find two hyperbolic pairs $(x,y)$ and $(p,q)$ of extremal elements, with corresponding $5$-gradings

$$ \begin{align*} L &= L_{-2}\oplus L_{-1}\oplus L_0\oplus L_1\oplus L_2, \\ L &= L^{\prime}_{-2}\oplus L^{\prime}_{-1}\oplus L^{\prime}_0\oplus L^{\prime}_1\oplus L^{\prime}_2, \end{align*} $$


such that the gradings intersect as in Figure 2. Moreover, the structure of the Lie algebra induces various maps between the different components of this $5 \times 5$-grading, resulting in a quadrangular algebra on the pair

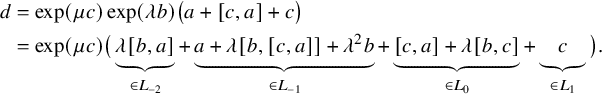

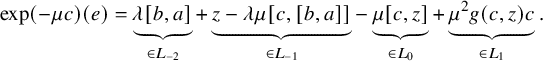

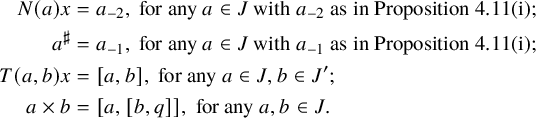

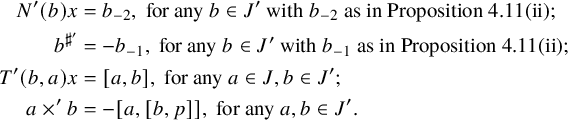

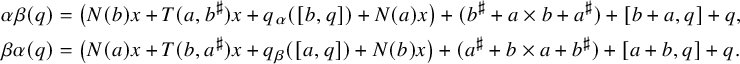

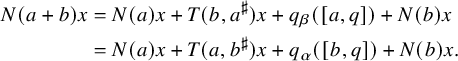

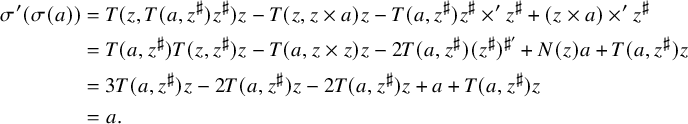

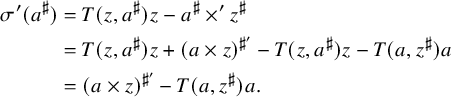

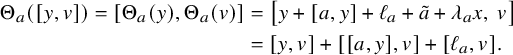

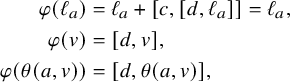

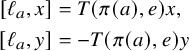

Theorem B is shown in Section 4, culminating in Theorem 4.23, and Theorem C is shown in Section 5, culminating in Theorem 5.56. For both results, we rely in a crucial way on Theorem A. For Theorem B, we use this result to define the norm N and the adjoint $\sharp$ of the cubic norm structure in Definition 4.12, relying on Proposition 4.11. For Theorem C, we use it to define the quadratic form Q on V in Definition 5.10 (relying on Lemma 5.9) and to define the map $\theta \colon X \times V \to V$ in Definition 5.29. Not surprisingly, the corresponding Theorem 3.13 is used in many places in our proof.

1 Preliminaries

Throughout, k is an arbitrary commutative field of arbitrary characteristic. All algebras occurring in this paper are assumed to be k-algebras. This preliminary section consists of three parts. Section 1.1 deals with Lie algebras and introduces the basic theory of extremal elements and the extremal geometry. Most of this material is taken from [CI06]. We then introduce cubic norm structures in Section 1.2 and quadrangular algebras in Section 1.3.

1.1 Lie algebras

Definition 1.1. A $\mathbb{Z}$-grading of a Lie algebra L is a vector space decomposition $L=\bigoplus_{i\in \mathbb{Z}} L_i$ such that $[L_i,L_j]\leq L_{i+j}$ for all $i,j\in \mathbb{Z}$. If n is a natural number such that $L_i=0$ for all $i\in \mathbb{Z}$ with $|i|>n$ while $L_{-n}\oplus L_n\neq 0$, then we call this grading a $(2n+1)$-grading. We call $L_{-n}$ and $L_n$ the ends of this grading. The i-component of $x\in L$ is the image of the projection of x onto $L_i$. We also set $L_{\leq i}=\bigoplus_{j\leq i} L_j$ and $L_{\geq i}=\bigoplus_{j\geq i} L_j$.
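As an illustration of Definition 1.1, the following minimal sketch builds a $5$-grading on $\mathfrak{sl}_3$ over the integers by assigning to each matrix unit $E_{ab}$ the degree $w_a - w_b$, where $w = (1, 0, -1)$. The example, the weights and all function names are our own assumptions for illustration and are not taken from the paper; it only checks the defining condition $[L_i,L_j]\leq L_{i+j}$ on a basis.

```python
from itertools import product

N = 3
WEIGHTS = (1, 0, -1)  # assumed grading weights: diagonal entries of diag(1, 0, -1)


def unit(a, b):
    """Matrix unit E_ab (0-based indices) as a nested list."""
    return [[1 if (i, j) == (a, b) else 0 for j in range(N)] for i in range(N)]


def sub(x, y):
    return [[x[i][j] - y[i][j] for j in range(N)] for i in range(N)]


def mul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(N)) for j in range(N)]
            for i in range(N)]


def bracket(x, y):
    """Lie bracket [x, y] = xy - yx."""
    return sub(mul(x, y), mul(y, x))


def degrees(x):
    """Degrees WEIGHTS[i] - WEIGHTS[j] of the nonzero entries of x."""
    return {WEIGHTS[i] - WEIGHTS[j]
            for i, j in product(range(N), repeat=2) if x[i][j] != 0}


# A basis of sl_3: six off-diagonal matrix units and two diagonal elements.
BASIS = [unit(a, b) for a in range(N) for b in range(N) if a != b]
BASIS += [sub(unit(0, 0), unit(1, 1)), sub(unit(1, 1), unit(2, 2))]


def degree(x):
    """Degree of a nonzero homogeneous element."""
    (d,) = degrees(x)
    return d


def component_dims():
    """Dimension of each component L_i for i = -2, ..., 2."""
    dims = {i: 0 for i in range(-2, 3)}
    for b in BASIS:
        dims[degree(b)] += 1
    return dims


def grading_respects_bracket():
    """Check [L_i, L_j] <= L_{i+j} on all pairs of basis elements."""
    return all(degrees(bracket(x, y)) <= {degree(x) + degree(y)}
               for x, y in product(BASIS, repeat=2))
```

In this sketch the ends $L_{-2}$ and $L_2$ are one-dimensional, spanned by $E_{31}$ and $E_{13}$, respectively.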

Definition 1.2 [CI06, Definition 14].

Let L be a Lie algebra over k.

- (i) A nonzero element $x\in L$ is called extremal if there is a map $g_x \colon L \to k$, called the extremal form on x, such that for all $y,z \in L$, we have

$$ \begin{align} \big[x,[x,y]\big] &= 2g_x(y)x, \tag{1} \\ \big[[x,y],[x,z]\big] &= g_x\big([y,z]\big)x+g_x(z)[x,y]-g_x(y)[x,z], \tag{2} \\ \big[x,[y,[x,z]]\big] &= g_x\big([y,z]\big)x-g_x(z)[x,y]-g_x(y)[x,z]. \tag{3} \end{align} $$

The last two identities are called the Premet identities. If the characteristic of k is not $2$, then the Premet identities (2) and (3) follow from (1); see [CI06, Definition 14]. Moreover, using the Jacobi identity, (2) and (3) are equivalent if (1) holds.

Note that the extremal form $g_x$ might not be unique if $\operatorname{char}(k) = 2$.

- (ii) We call $x\in L$ a sandwich or an absolute zero divisor if $[x,[x,y]]=0$ and $[x,[y,[x,z]]]=0$ for all $y,z\in L$. An extremal element is called pure if it is not a sandwich.

- (iii) The Lie algebra L is nondegenerate if it has no nontrivial sandwiches.

- (iv) We denote the set of extremal elements of a Lie algebra L by $E(L)$ or, if L is clear from the context, by E. Accordingly, we denote the set $\{ k x \mid x\in E(L)\}$ of extremal points in the projective space on L by $\mathcal{E}(L)$ or $\mathcal{E}$.
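To make identities (1) to (3) concrete, here is a small sketch that checks them for the standard nilpotent element $e$ of $\mathfrak{sl}_2$, using integer $2 \times 2$ matrices. The candidate extremal form `g_e` (minus the lower-left entry of the argument) is our own choice obtained by a direct computation, and all names in the block are illustrative assumptions; since (1) is linear in y and (2), (3) are bilinear in y and z, it suffices to check them on a basis.

```python
from itertools import product

# Standard basis of sl_2 as 2x2 integer matrices.
E = [[0, 1], [0, 0]]
H = [[1, 0], [0, -1]]
F = [[0, 0], [1, 0]]
BASIS = [E, H, F]


def mul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def add(x, y):
    return [[x[i][j] + y[i][j] for j in range(2)] for i in range(2)]


def scale(c, x):
    return [[c * x[i][j] for j in range(2)] for i in range(2)]


def bracket(x, y):
    """Lie bracket [x, y] = xy - yx."""
    return add(mul(x, y), scale(-1, mul(y, x)))


def g_e(y):
    """Assumed extremal form on E: minus the lower-left entry of y."""
    return -y[1][0]


def identity_1(y):
    """[x, [x, y]] = 2 g_x(y) x with x = E."""
    return bracket(E, bracket(E, y)) == scale(2 * g_e(y), E)


def identity_2(y, z):
    """First Premet identity (2) with x = E."""
    lhs = bracket(bracket(E, y), bracket(E, z))
    rhs = add(scale(g_e(bracket(y, z)), E),
              add(scale(g_e(z), bracket(E, y)), scale(-g_e(y), bracket(E, z))))
    return lhs == rhs


def identity_3(y, z):
    """Second Premet identity (3) with x = E."""
    lhs = bracket(E, bracket(y, bracket(E, z)))
    rhs = add(scale(g_e(bracket(y, z)), E),
              add(scale(-g_e(z), bracket(E, y)), scale(-g_e(y), bracket(E, z))))
    return lhs == rhs


def e_is_extremal():
    """Check (1), (2) and (3) on the basis {E, H, F}."""
    return (all(identity_1(y) for y in BASIS)
            and all(identity_2(y, z) and identity_3(y, z)
                    for y, z in product(BASIS, repeat=2)))
```

Since `g_e(F)` is nonzero, this element is not a sandwich, so in the terminology of (ii) it is a pure extremal element of this copy of $\mathfrak{sl}_2$.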

Remark 1.3.

- (i) If $x \in L$ is a sandwich, then the extremal form $g_x$ can be chosen to be identically zero; we adopt the convention from [CI06] that $g_x$ is identically zero whenever x is a sandwich in L.

- (ii) By [CI06, Lemma 16], the existence of two distinct functions $g_x$ and $g^{\prime}_x$ satisfying the identities (1) to (3) implies that $\operatorname{char}(k)=2$ and that x is a sandwich. Combined with our convention in (i), this means that $g_x$ is always uniquely determined.

Definition 1.4. Let L be a Lie algebra. An inner ideal of L is a subspace I of L satisfying $[I,[I,L]]\leq I$.

If $\operatorname{char}(k) \neq 2$, then the $1$-dimensional inner ideals of L are precisely the subspaces spanned by an extremal element. We will need inner ideals of dimension $>1$ only once, namely in the proof of Theorem 3.8.

Recall that if D is a linear map from a Lie algebra L to itself such that $D^n=0$ for some $n\in \mathbb{N}$ and $(n-1)!\in k^\times$, then $\exp (D)=\sum_{i=0}^{n-1}\frac{1}{i!}D^i$. A crucial aspect of extremal elements is that they allow for exponential maps in any characteristic.

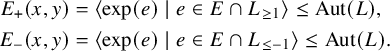

Definition 1.5 [CI06, p. 444].

Let $x \in E$ be an extremal element, with extremal form $g_x$. Then we define

$$ \begin{align*} \exp (x) \colon L \to L \colon y \mapsto y + [x,y] + g_x(y)x. \end{align*} $$

We also write $\operatorname{Exp}(x) := \{ \exp (\lambda x) \mid \lambda \in k \}$.

Lemma 1.6 [CI06, Lemma 15].

For each $x \in E$, we have $\exp (x) \in \operatorname{Aut}(L)$. Moreover, $\exp (\lambda x) \exp (\mu x) = \exp ((\lambda + \mu )x)$ for all $\lambda ,\mu \in k$, so $\operatorname{Exp}(x)$ is a subgroup of $\operatorname{Aut}(L)$.

Notice that for an extremal element $x \in E$, we always have $\operatorname{ad}_x^3 = 0$. In particular, if $\operatorname{char}(k) \neq 2$, then $g_x(y) x = \tfrac{1}{2} [x, [x, y]]$, and we recover the usual exponential map $\exp (x) = \exp (\operatorname{ad}_x)$.
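The statements of Lemma 1.6 can be tested on a small example. The sketch below assumes the formula $\exp(x) \colon y \mapsto y + [x,y] + g_x(y)x$, which agrees with $\exp(\operatorname{ad}_x)$ when $\operatorname{char}(k) \neq 2$ by the remark above, and applies it to multiples $\lambda e$ of the nilpotent element $e \in \mathfrak{sl}_2$ over the integers. The extremal form `g_e` and all function names are our own illustrative assumptions.

```python
from itertools import product

# Standard basis of sl_2 as 2x2 integer matrices.
E = [[0, 1], [0, 0]]
H = [[1, 0], [0, -1]]
F = [[0, 0], [1, 0]]
BASIS = [E, H, F]


def mul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def add(x, y):
    return [[x[i][j] + y[i][j] for j in range(2)] for i in range(2)]


def scale(c, x):
    return [[c * x[i][j] for j in range(2)] for i in range(2)]


def bracket(x, y):
    """Lie bracket [x, y] = xy - yx."""
    return add(mul(x, y), scale(-1, mul(y, x)))


def g_e(y):
    """Assumed extremal form on E: [E, [E, y]] = 2 g_e(y) E."""
    return -y[1][0]


def exp_e(lam, y):
    """exp(lam * E) applied to y, i.e. y + lam [E, y] + lam^2 g_e(y) E.

    Uses g_{lam E}(y) = lam * g_e(y), which follows from identity (1).
    """
    return add(y, add(scale(lam, bracket(E, y)), scale(lam * lam * g_e(y), E)))


def is_automorphism(lam):
    """exp(lam E) preserves the bracket on all pairs of basis elements."""
    return all(exp_e(lam, bracket(y, z)) == bracket(exp_e(lam, y), exp_e(lam, z))
               for y, z in product(BASIS, repeat=2))


def group_law(lam, mu):
    """exp(lam E) exp(mu E) = exp((lam + mu) E) on the basis."""
    return all(exp_e(lam, exp_e(mu, y)) == exp_e(lam + mu, y) for y in BASIS)
```

In this example, `exp_e(lam, y)` coincides with conjugation of y by the unipotent matrix with upper-right entry `lam`, which is one way to see why both checks succeed.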

In this paper, we will always assume that our Lie algebra L is generated by its extremal elements. This has some powerful consequences.

Proposition 1.7 [CI06, Proposition 20].

Suppose that L is generated by E. Then

- (i) L is linearly spanned by E.

- (ii) There is a unique bilinear form $g \colon L \times L \to k$ such that $g_x(y) = g(x,y)$ for all $x \in E$ and all $y \in L$. The form g is symmetric.

- (iii) The form g associates with the Lie bracket (i.e., $g([x,y],z) = g(x,[y,z])$ for all $x,y,z \in L$).

Proposition 1.8 [CI06, Lemmas 21, 24, 25 and 27].

Suppose that L is generated by E. Let $x,y \in E$ be pure extremal elements. Then exactly one of the following holds:

- (a) $kx = ky$;

- (b) $kx \neq ky$, $[x,y] = 0$, and $\lambda x + \mu y \in E \cup \{ 0 \}$ for all $\lambda ,\mu \in k$ (we write $x \sim y$);

- (c) $kx \neq ky$, $[x,y] = 0$, and $\lambda x + \mu y \in E \cup \{ 0 \}$ if and only if $\lambda =0$ or $\mu =0$;

- (d) $[x,y] \neq 0$ and $g(x,y)=0$, in which case $[x,y] \in E$ and $x \sim [x,y] \sim y$;

- (e) $[x,y] \neq 0$ and $g(x,y) \neq 0$, in which case x and y generate an $\mathfrak {sl}_2(k)$-subalgebra.

Moreover, we have $g(x,y) = 0$ in all four cases (a)–(d).

This gives rise to the following definitions.

Definition 1.9.

- (i) Let $\langle x \rangle , \langle y \rangle \in {\mathcal {E}}(L)$ be two distinct pure extremal points. Then depending on whether the corresponding elements $x,y$ are in case (b), (c), (d) or (e) of Proposition 1.8, we call the pair $\langle x \rangle , \langle y \rangle $ collinear, symplectic, special or hyperbolic, respectively. Following the notation from [CI06], we denote the five possible relations between points of ${\mathcal {E}}$ by ${\mathcal {E}}_{-2}$ (equal), ${\mathcal {E}}_{-1}$ (collinear), ${\mathcal {E}}_0$ (symplectic), ${\mathcal {E}}_1$ (special), ${\mathcal {E}}_2$ (hyperbolic), respectively. We also use $E_{-2},E_{-1},E_0,E_1,E_2$ for the corresponding relations between elements of E. Moreover, we write $E_i(x)$ for the set of elements of E in relation $E_i$ with x, and similarly for ${\mathcal {E}}_i(\langle x \rangle )$.

  We refer the reader to Proposition 2.5 for the motivation for this notation.

- (ii) The extremal geometry associated with L is the point-line geometry with point set ${\mathcal {E}}(L)$ and line set

  $$\begin{align*}\mathcal{F}(L) := \{ \langle x,y \rangle \mid \langle x \rangle \text{ and } \langle y \rangle \text{ are collinear} \}. \end{align*}$$

  It is a partial linear space (i.e., any two distinct points lie on at most one line).
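The case distinction of Proposition 1.8 and the relations of Definition 1.9 can be sketched on matrix units in $\mathfrak {sl}_n(\mathbb {Q})$. This is a hedged illustration, not part of the source: it assumes that the extremal elements of $\mathfrak {sl}_n$ are the nonzero rank-one matrices with square zero, for which $[x,[x,y]] = -2\operatorname {tr}(xy)x$ and hence $g(x,y) = -\operatorname {tr}(xy)$. All function names are hypothetical.

```python
from fractions import Fraction as F

def E(i, j, n):
    """Matrix unit E_ij (1-indexed) of size n x n."""
    return [[F(1) if (r, c) == (i, j) else F(0) for c in range(1, n + 1)]
            for r in range(1, n + 1)]

def mul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def add(a, b):
    return [[p + q for p, q in zip(r, s)] for r, s in zip(a, b)]

def scale(c, a):
    return [[c * p for p in r] for r in a]

def bracket(a, b):
    return add(mul(a, b), scale(-1, mul(b, a)))

def is_zero(a):
    return all(p == 0 for r in a for p in r)

def rank_at_most_one(a):
    # All 2x2 minors vanish.
    n = len(a)
    return all(a[i][j] * a[k][l] == a[i][l] * a[k][j]
               for i in range(n) for k in range(n)
               for j in range(n) for l in range(n))

def is_extremal(a):
    # Assumption: extremal in sl_n means nonzero, rank one, square zero.
    return (not is_zero(a)) and rank_at_most_one(a) and is_zero(mul(a, a))

def g(x, y):
    # Assumption: extremal form g(x, y) = -tr(xy) for extremal x.
    xy = mul(x, y)
    return -sum(xy[i][i] for i in range(len(xy)))

def proportional(x, y):
    fx = [p for r in x for p in r]
    fy = [p for r in y for p in r]
    return all(fx[i] * fy[j] == fx[j] * fy[i]
               for i in range(len(fx)) for j in range(len(fx)))

def relation(x, y):
    """Return i such that the extremal pair (x, y) lies in E_i."""
    if not is_zero(bracket(x, y)):
        return 2 if g(x, y) != 0 else 1      # cases (e) and (d)
    if proportional(x, y):
        return -2                            # case (a)
    # By Proposition 1.8, testing x + y separates cases (b) and (c).
    return -1 if is_extremal(add(x, y)) else 0
```

For example, `relation(E(1, 3, 3), E(1, 2, 3))` is collinear, `relation(E(1, 2, 3), E(2, 3, 3))` is special with $[E_{12},E_{23}] = E_{13}$ extremal, `relation(E(1, 3, 3), E(3, 1, 3))` is hyperbolic, and `relation(E(1, 2, 4), E(3, 4, 4))` is symplectic.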

A crucial ingredient for us will be the fact that each hyperbolic pair of extremal elements gives rise to a $5$-grading of the Lie algebra; see Proposition 2.4 below.

We finish this section with a subtle but important fact about exponential maps arising from a $5$-grading.

Definition 1.10. Assume that

![]() $\operatorname {\mathrm {char}}(k) \neq 2,3$

and let L be a 5-graded finite-dimensional Lie algebra over k. We say that L is algebraic if for any

$\operatorname {\mathrm {char}}(k) \neq 2,3$

and let L be a 5-graded finite-dimensional Lie algebra over k. We say that L is algebraic if for any

![]() $(x,s) \in L_{\sigma 1} \oplus L_{\sigma 2}$

(with

$(x,s) \in L_{\sigma 1} \oplus L_{\sigma 2}$

(with

![]() $\sigma \in \{ +,- \}$

), the linear endomorphism

$\sigma \in \{ +,- \}$

), the linear endomorphism

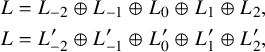

$$\begin{align*}\exp(\operatorname{\mathrm{ad}}(x+s)) = \sum_{i=0}^{4} \frac{1}{i!} \operatorname{\mathrm{ad}}(x+s)^i\end{align*}$$

$$\begin{align*}\exp(\operatorname{\mathrm{ad}}(x+s)) = \sum_{i=0}^{4} \frac{1}{i!} \operatorname{\mathrm{ad}}(x+s)^i\end{align*}$$

of L is a Lie algebra automorphism.

It is not difficult to see that when $\operatorname {\mathrm {char}}(k) \neq 2,3,5$, any $5$-graded Lie algebra is algebraic; see [BDMS19, Lemma 3.1.7]. If $\operatorname {\mathrm {char}}(k) = 5$, this is much more delicate; see [BDMS19, §4.2] and [Sta22, Theorem 2.10].

One of our key tools will be precisely an extension of the existence of such automorphisms to the case where $\operatorname {\mathrm {char}}(k)$ is arbitrary, including characteristic $2$ and $3$; see Definitions 3.12 and 3.13 and Theorems 3.15 and 3.16 below.

1.2 Cubic norm structures

Cubic norm structures were introduced by Kevin McCrimmon in [McC69]. We follow the approach from [TW02, Chapter 15], which is equivalent but more practical for our purposes.

Definition 1.11. A cubic norm structure is a tuple

$$\begin{align*}(J,k,N,\sharp ,T,\times ,1),\end{align*}$$

where k is a field, J is a vector space over k, $N \colon J\to k$ is a map called the norm, $\sharp \colon J\to J$ is a map called the adjoint, $T \colon J\times J\to k$ is a symmetric bilinear form called the trace, $\times \colon J\times J\to J$ is a symmetric bilinear map called the Freudenthal cross product, and $1$ is a nonzero element of J called the identity such that for all $\lambda \in k$ and all $a,b,c\in J$, we have

- (i) $(\lambda a)^\sharp =\lambda ^2a^\sharp $;

- (ii) $N(\lambda a)=\lambda ^3 N(a)$;

- (iii) $T(a,b\times c)=T(a\times b,c)$;

- (iv) $(a+b)^\sharp =a^\sharp +a\times b+b^\sharp $;

- (v) $N(a+b)=N(a)+T(a^\sharp ,b)+T(a,b^\sharp )+N(b)$;

- (vi) $T(a,a^\sharp )=3N(a)$;

- (vii) $(a^\sharp )^\sharp =N(a)a$;

- (viii) $a^\sharp \times (a\times b)=N(a)b+T(a^\sharp ,b)a$;

- (ix) $a^\sharp \times b^\sharp +(a\times b)^\sharp =T(a^\sharp ,b)b+T(a,b^\sharp )a$;

- (x) $1^\sharp =1$;

- (xi) $a=T(a,1)1-1\times a$.

We call this cubic norm structure nondegenerate if $\{a\in J\mid N(a)=0=T(a,J)=T(a^\sharp ,J)\} = 0$.

Definition 1.12. We call $a\in J$ invertible if $N(a)\neq 0$. We denote the set of all invertible elements of J by $J^\times $. If all nonzero elements of J are invertible, we call J anisotropic; otherwise, we call it isotropic.
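To make the axioms concrete, here is a minimal sketch that checks conditions (i) to (xi) of Definition 1.11 on sample vectors for one standard example: $J = \mathbb {Q}^3$ with componentwise structure, $N(a) = a_1a_2a_3$, $a^\sharp = (a_2a_3, a_1a_3, a_1a_2)$ and $T(a,b) = \sum a_ib_i$. The example and all names in the code are assumptions chosen for illustration and do not come from the source; the check runs over a finite sample and is not a proof.

```python
from fractions import Fraction as F
from itertools import product

ONE = (F(1), F(1), F(1))

def N(a):
    return a[0] * a[1] * a[2]

def sharp(a):
    return (a[1] * a[2], a[0] * a[2], a[0] * a[1])

def T(a, b):
    return sum(p * q for p, q in zip(a, b))

def add(a, b):
    return tuple(p + q for p, q in zip(a, b))

def scale(c, a):
    return tuple(c * p for p in a)

def cross(a, b):
    # Linearization of the adjoint: (a + b)^# - a^# - b^#.
    return (a[1] * b[2] + a[2] * b[1],
            a[0] * b[2] + a[2] * b[0],
            a[0] * b[1] + a[1] * b[0])

def axioms_hold(lam, a, b, c):
    return all([
        sharp(scale(lam, a)) == scale(lam ** 2, sharp(a)),                       # (i)
        N(scale(lam, a)) == lam ** 3 * N(a),                                     # (ii)
        T(a, cross(b, c)) == T(cross(a, b), c),                                  # (iii)
        sharp(add(a, b)) == add(add(sharp(a), cross(a, b)), sharp(b)),           # (iv)
        N(add(a, b)) == N(a) + T(sharp(a), b) + T(a, sharp(b)) + N(b),           # (v)
        T(a, sharp(a)) == 3 * N(a),                                              # (vi)
        sharp(sharp(a)) == scale(N(a), a),                                       # (vii)
        cross(sharp(a), cross(a, b))
            == add(scale(N(a), b), scale(T(sharp(a), b), a)),                    # (viii)
        add(cross(sharp(a), sharp(b)), sharp(cross(a, b)))
            == add(scale(T(sharp(a), b), b), scale(T(a, sharp(b)), a)),          # (ix)
        sharp(ONE) == ONE,                                                       # (x)
        a == add(scale(T(a, ONE), ONE), scale(-1, cross(ONE, a))),               # (xi)
    ])

samples = list(product((F(-1), F(0), F(2)), repeat=3))
all_ok = all(axioms_hold(F(3, 2), a, b, c)
             for a in samples for b in samples for c in samples[::9])

def is_invertible(a):
    return N(a) != 0
```

In this example an element is invertible exactly when all three coordinates are nonzero, so $(1,0,0)$ is a nonzero non-invertible element and the structure is isotropic in the sense of Definition 1.12.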

Lemma 1.13 [TW02, (15.18)].

In Definition 1.11, condition (iii) is a consequence of (iv) and (v). If $|k|>3$, then conditions (vi), (viii) and (ix) are consequences of (i), (ii), (iv), (v) and (vii).

Remark 1.14. Every cubic norm structure can be made into a (quadratic) Jordan algebra, where the U-operator of the Jordan algebra is given by

$$\begin{align*}U_x(y) := T(y,x)x - x^\sharp \times y\end{align*}$$

for all $x,y\in J$.

Definition 1.15.

- (i) Let $(J,k,N,\sharp ,T,\times , 1)$ and $(J',k,N',\sharp ',T',\times ', 1')$ be two cubic norm structures. A vector space isomorphism $\varphi :J\to J'$ is an isomorphism from $(J,k,N,\sharp ,T,\times , 1)$ to $(J',k,N',\sharp ',T',\times ', 1')$ if $\varphi \circ \sharp =\sharp '\circ \varphi $ and $\varphi (1)=1'$.

- (ii) Let $(J,k,N,\sharp ,T,\times ,1)$ be a cubic norm structure and let $d\in J^\times $. We define new maps $N_d$, $\sharp _d$, $T_d$, $\times _d$ and $1_d$ by

  $$ \begin{align*} N_d(a) &:= N(d)^{-1} N(a) , \\ a^{\sharp_d} &:= N(d)^{-1} U_d(a^\sharp) , \\ T_d(a,b) &:= T\big( U_{N(d)^{-1} d^\sharp}(a), b \big) , \\ a \times_d b &:= N(d)^{-1} U_d(a \times b) , \\ 1_d &:= d , \end{align*} $$

  for all $a,b \in J$. Then $(J,k,N_d,\sharp _d,T_d,\times _d,1_d)$ is again a cubic norm structure; see [TW02, (29.36)].

  Two cubic norm structures $(J,k,N,\sharp ,T,\times ,1)$ and $(J',k,N',\sharp ',T',\times ',1')$ are called isotopic if there exists an isomorphism from $(J',k,N',\sharp ',T',\times ',1')$ to $(J,k,N_d,\sharp _d,T_d,\times _d,1_d)$ for some $d\in J^\times $.

1.3 Quadrangular algebras

Quadrangular algebras were introduced by Richard Weiss in [Wei06] in the anisotropic case, and were generalized to allow isotropic quadrangular algebras in [MW19]. Our notation follows [MW19], except that we use different letters for some of our spaces and maps.

Definition 1.16. Let V be a vector space over the field k. A quadratic form Q on V is a map $Q \colon V\to k$ such that

- $Q(\lambda v)=\lambda ^2Q(v)$ for all $\lambda \in k$ and $v\in V$;

- the map $T \colon V\times V\to k$ defined by

  $$\begin{align*}T(u,v)=Q(u+v)-Q(u)-Q(v) \end{align*}$$

  for all $u,v \in V$ is bilinear.

We call Q

- anisotropic if $Q(v)=0$ implies $v=0$;

- nondegenerate if $V^\perp := \{ v\in V \mid T(v,V)=0 \} = 0$;

- non-singular if it is either nondegenerate or $\dim (V^\perp )=1$ and $Q(V^\perp )\neq 0$;

- regular if $\{ v \in V \mid Q(v)=0=T(v,V) \} = 0$.

A base point for Q is an element $e\in V$ such that $Q(e)=1$. Using this base point, we can define an involution $\sigma \colon V\to V$ by

$$\begin{align*}v^\sigma = T(v,e)e - v\end{align*}$$

for all $v\in V$.
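The four properties in Definition 1.16 differ only in characteristic $2$, which a small brute-force computation over $\mathbb {F}_2$ makes visible. The sketch below is an illustration under assumptions of our own: the four sample forms and all function names are hypothetical and are not taken from the source.

```python
from itertools import product

P = 2  # we work over the field with two elements

def vectors(n):
    return list(product(range(P), repeat=n))

def polar(Q, u, v):
    """The bilinear form T(u, v) = Q(u + v) - Q(u) - Q(v), reduced mod P."""
    w = tuple((a + b) % P for a, b in zip(u, v))
    return (Q(w) - Q(u) - Q(v)) % P

def radical(Q, n):
    """V^perp = { v : T(v, V) = 0 }."""
    return [v for v in vectors(n) if all(polar(Q, v, w) == 0 for w in vectors(n))]

def classify(Q, n):
    rad = radical(Q, n)
    nondegenerate = len(rad) == 1                      # V^perp = 0
    return {
        "anisotropic": all(Q(v) % P != 0 for v in vectors(n) if any(v)),
        "nondegenerate": nondegenerate,
        # dim V^perp = 1 means |V^perp| = P; Q(V^perp) != 0 means Q is not zero on it.
        "non-singular": nondegenerate
            or (len(rad) == P and any(Q(v) % P != 0 for v in rad)),
        "regular": all(Q(v) % P != 0 for v in rad if any(v)),
    }

# Four sample forms (assumptions for illustration).
norm_form = lambda v: (v[0] * v[0] + v[0] * v[1] + v[1] * v[1]) % P   # on F_2^2
square = lambda v: (v[0] * v[0]) % P                                  # on F_2^1
hyperbolic = lambda v: (v[0] * v[1]) % P                              # on F_2^2
sum_of_squares = lambda v: (v[0] * v[0] + v[1] * v[1]) % P            # on F_2^2

results = {
    "norm_form": classify(norm_form, 2),
    "square": classify(square, 1),
    "hyperbolic": classify(hyperbolic, 2),
    "sum_of_squares": classify(sum_of_squares, 2),
}
```

The one-dimensional form $v \mapsto v_1^2$ is degenerate (its bilinear form vanishes) yet non-singular, regular and anisotropic, whereas $v \mapsto v_1^2 + v_2^2$ fails all four properties because $(1,1)$ lies in the radical and has value $0$.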

Definition 1.17 [MW19, Definition 2.1].

A quadrangular algebra is a tuple

$$\begin{align*}(k,V,Q,T,e,X,\cdot ,h,\theta ),\end{align*}$$

where

-

○ k is a field;

-

○ V is a vector space over k;

-

○

$Q \colon V \to k$

is a regular quadratic form, with associated bilinear form T;

$Q \colon V \to k$

is a regular quadratic form, with associated bilinear form T; -

○

$e \in V$

is a base point for Q;

$e \in V$

is a base point for Q; -

○ X is a vector space over k;

-

○

$\cdot \colon X\times V \to X \colon (a,v)\mapsto a \cdot v$

is a bilinear map;

$\cdot \colon X\times V \to X \colon (a,v)\mapsto a \cdot v$

is a bilinear map; -

○

$h \colon X\times X\to V$

is a bilinear map;

$h \colon X\times X\to V$

is a bilinear map; -

○

$\theta \colon X\times V\to V$

is a map;

$\theta \colon X\times V\to V$

is a map;

such that

-

(i)

$a \cdot e=a$

for all

$a \cdot e=a$

for all

$a\in X$

;

$a\in X$

; -

(ii)

$(a\cdot v)\cdot v^\sigma = Q(v)a$

for all

$(a\cdot v)\cdot v^\sigma = Q(v)a$

for all

$a\in X$

and all

$a\in X$

and all

$v\in V$

;

$v\in V$

; -

(iii)

$h(a, b \cdot v) = h(b, a \cdot v) + T(h(a,b), e)v$

for all

$h(a, b \cdot v) = h(b, a \cdot v) + T(h(a,b), e)v$

for all

$a,b \in X$

and all

$a,b \in X$

and all

$v\in V$

;

$v\in V$

; -

(iv)

$T(h(a\cdot v, b), e) = T(h(a,b), v)$

for all

$T(h(a\cdot v, b), e) = T(h(a,b), v)$

for all

$a,b \in X$

and all

$a,b \in X$

and all

$v\in V$

;

$v\in V$

; -

(v) For each

$a \in X$

, the map

$a \in X$

, the map

$v \mapsto \theta (a,v)$

is linear;

$v \mapsto \theta (a,v)$

is linear; -

(vi)

$\theta (\lambda a, v) = \lambda ^2 \theta (a, v)$

for all

$\theta (\lambda a, v) = \lambda ^2 \theta (a, v)$

for all

$a \in X$

,

$a \in X$

,

$v \in V$

and

$v \in V$

and

$\lambda \in k$

;

$\lambda \in k$

; -

(vii) There exists a function

$\gamma \colon X\times X\to k$

such that for all

$\gamma \colon X\times X\to k$

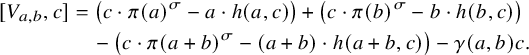

such that for all $$\begin{align*}\theta(a+b, v) = \theta(a,v) + \theta(b,v) + h(a, b\cdot v) - \gamma(a,b)v \end{align*}$$

$$\begin{align*}\theta(a+b, v) = \theta(a,v) + \theta(b,v) + h(a, b\cdot v) - \gamma(a,b)v \end{align*}$$

$a,b\in X$

and all

$a,b\in X$

and all

$v\in V$

;

$v\in V$

;

-

(viii) There exists a function

$\phi \colon X\times V \to k$

such that for all

$\phi \colon X\times V \to k$

such that for all $$ \begin{align*} \theta(a\cdot v, w) = \theta(a, w^\sigma)^\sigma Q(v) - T(w, v^\sigma) \theta(a,v)^\sigma + T(\theta(a,v), w^\sigma) v^\sigma + \phi(a,v)w \end{align*} $$

$$ \begin{align*} \theta(a\cdot v, w) = \theta(a, w^\sigma)^\sigma Q(v) - T(w, v^\sigma) \theta(a,v)^\sigma + T(\theta(a,v), w^\sigma) v^\sigma + \phi(a,v)w \end{align*} $$

$a\in X$

and all

$a\in X$

and all

$v,w \in V$

;

$v,w \in V$

;

-

(ix)

$a \cdot \theta (a,v) = (a \cdot \theta (a,e)) \cdot v$

for all

$a \cdot \theta (a,v) = (a \cdot \theta (a,e)) \cdot v$

for all

$a\in X$

and all

$a\in X$

and all

$v\in V$

.

$v\in V$

.

Notation 1.18. We set $\pi (a)=\theta (a,e)$ for all $a\in X$.

Definition 1.19 [MW19, Definition 2.3].

We call a quadrangular algebra anisotropic if Q is anisotropic and $\pi (a)$ is a multiple of e if and only if $a=0$.

Remark 1.20. The existence of $e \in V$ implies $V\neq 0$. However, contrary to the common convention, we allow $X=0$. In other words, we view quadratic spaces as special (degenerate) examples of quadrangular algebras.

2 Gradings from extremal elements

In this section, we describe the $5$-grading associated with any pair of hyperbolic extremal elements, and we collect several properties about this grading that we will need later. We emphasize again that we do not make any assumptions on the field k, so in particular, we allow $\operatorname {\mathrm {char}}(k) = 2$ or $3$.

Notation 2.1. We will assume from now on that L is a Lie algebra over k generated by its pure extremal elements. As in Definition 1.2, we will write E for the set of extremal elements of L and ${\mathcal {E}}$ for the set of extremal points of L. We let g be the symmetric bilinear form from Proposition 1.7. If V is any subspace of L, we write

$$\begin{align*}V^\perp := \{ u \in L \mid g(u,v) = 0 \text{ for all } v \in V \}.\end{align*}$$

Remark 2.2. If, in addition, L is simple, then our assumptions imply that L does not contain sandwich elements. Indeed, the sandwich elements are contained in the radical of the form g, which is an ideal of L. Since L is generated by its pure extremal elements, the form g cannot be identically zero, so the radical of g must be trivial.

In this section, we do not assume that L is simple (except in Lemma 2.11 and Propositions 2.13 and 2.16), but we will make this important assumption in the next sections.

Definition 2.3. We define the normalizer of x as

$$\begin{align*}N_L(x) := \{ l \in L \mid [x,l] \in \langle x \rangle \}.\end{align*}$$

Proposition 2.4 [CI06, Proposition 22 and Corollary 23].

Let $x, y\in E$ be such that $g(x,y)=1$. Then

- (i) L has a $\mathbb {Z}$-grading

  $$\begin{align*}L=L_{-2}\oplus L_{-1}\oplus L_0\oplus L_1\oplus L_2,\end{align*}$$

  with $L_{-2}=\langle x\rangle $, $L_{-1}=[x,U]$, $L_0=N_{L}(x)\cap N_{L}(y)$, $L_1=[y,U]$ and $L_2=\langle y\rangle $, where

  $$\begin{align*}U = \big\langle x, y, [x,y] \big\rangle^\perp. \end{align*}$$

- (ii) Each $L_i$ is contained in the i-eigenspace of $\operatorname {\mathrm {ad}}_{[x,y]}$.

- (iii) $\operatorname {\mathrm {ad}}_x$ defines a linear isomorphism from $L_1$ to $L_{-1}$ with inverse $-\operatorname {\mathrm {ad}}_y$.

- (iv) The filtration $L_{-2} \leq L_{\leq -1} \leq L_{\leq 0} \leq L_{\leq 1} \leq L$ only depends on x and not on y. More precisely, we have

  $$ \begin{align*} L_{\leq 1} &= x^\perp, \\ L_{\leq 0} &= N_L(x), \\ L_{\leq -1} &= kx + [x, x^\perp], \\ L_{-2} &= kx. \end{align*} $$

- (v) In particular, $g_x(L_{\leq 1})=0$ and $g_y(L_{\geq -1})=0$.
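For orientation, the grading of Proposition 2.4 can be computed by hand in a small case. The following sketch does this for $\mathfrak {sl}_3(\mathbb {Q})$ with the hyperbolic pair $x = E_{13}$, $y = -E_{31}$. The choice of example, the formula $g(x,y) = -\operatorname {tr}(xy)$ for extremal elements of $\mathfrak {sl}_n$ and all helper names are assumptions made for illustration.

```python
from fractions import Fraction as F

def E(i, j):
    """Matrix unit E_ij (1-indexed) in 3x3 matrices."""
    return [[F(1) if (r, c) == (i, j) else F(0) for c in (1, 2, 3)] for r in (1, 2, 3)]

def mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def add(a, b):
    return [[p + q for p, q in zip(r, s)] for r, s in zip(a, b)]

def scale(c, a):
    return [[c * p for p in r] for r in a]

def bracket(a, b):
    return add(mul(a, b), scale(-1, mul(b, a)))

x = E(1, 3)
y = scale(-1, E(3, 1))

# Assumption: g(x, y) = -tr(xy) for extremal x in sl_n.
xy = mul(x, y)
g_xy = -sum(xy[i][i] for i in range(3))

h = bracket(x, y)  # the grading element [x, y]

# A basis of sl_3: off-diagonal matrix units and two diagonal elements.
basis = [E(i, j) for i in (1, 2, 3) for j in (1, 2, 3) if i != j]
basis += [add(E(1, 1), scale(-1, E(2, 2))), add(E(2, 2), scale(-1, E(3, 3)))]

def degree(b):
    """Return i with [h, b] = i * b, as in Proposition 2.4(ii)."""
    hb = bracket(h, b)
    for i in range(-2, 3):
        if hb == scale(i, b):
            return i
    raise ValueError("not an eigenvector of ad_h")

dims = {i: 0 for i in range(-2, 3)}
for b in basis:
    dims[degree(b)] += 1

# Proposition 2.4(iii): -ad_y inverts ad_x on L_1.
L1 = [b for b in basis if degree(b) == 1]
inverse_ok = all(scale(-1, bracket(y, bracket(x, l))) == l for l in L1)
```

In this example $L_{-2} = \langle E_{13} \rangle $, $L_{-1} = \langle E_{12}, E_{23} \rangle $, $L_0$ is the space of traceless diagonal matrices, $L_1 = \langle E_{21}, E_{32} \rangle $ and $L_2 = \langle E_{31} \rangle $.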

These gradings are closely related to the five different relations $E_i$ that we have introduced in Definition 1.9:

Proposition 2.5. Let x, y and $L_i$ be as in Proposition 2.4. For each $i\in [-2,2]$, we have

$$\begin{align*}E_i(x) = (E \cap L_{\leq i}) \setminus L_{\leq i-1}.\end{align*}$$

Proof. This is shown in the proof of [CI06, Theorem 28].

Corollary 2.6. Let x, y and $L_i$ be as in Proposition 2.4. Then

$$\begin{align*}E_{-1}(x) = \{ \lambda x + e \mid \lambda \in k, \ e \in E \cap L_{-1} \}.\end{align*}$$

Proof. By Proposition 2.5, $E_{-1}(x) = E \cap (kx + L_{-1}) \setminus kx$. Now observe that an element $\lambda x + e$ (with $e \in L_{-1}$) is extremal if and only if e is extremal, because if one of them is extremal, it is collinear with x, and hence, all points on the line through x are extremal points.

Lemma 2.7. Let x, y and $L_i$ be as in Proposition 2.4. Let $a \in L_1$ and $b \in L_{-1}$. Then $[a, [y,b]] = g(a,b)y$.

Proof. By Proposition 2.4(iii), we can write

![]() $a = [y,c]$

for some

$a = [y,c]$

for some

![]() $c \in L_{-1}$

. By the Premet identity (2) and Proposition 2.4(v), we then get

$c \in L_{-1}$

. By the Premet identity (2) and Proposition 2.4(v), we then get

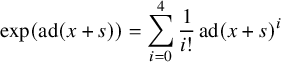

$$ \begin{align*} [a, [y,b]] &= [[y,c], [y,b]] \\ &= g_y([c,b])y + g_y(b) [y,c] - g_y(c) [y,b] \\ &= g(y, [c,b])y = g([y,c], b)y = g(a,b)y. \end{align*} $$

$$ \begin{align*} [a, [y,b]] &= [[y,c], [y,b]] \\ &= g_y([c,b])y + g_y(b) [y,c] - g_y(c) [y,b] \\ &= g(y, [c,b])y = g([y,c], b)y = g(a,b)y. \end{align*} $$

The following lemma describes an automorphism that reverses the grading.

Lemma 2.8. Let x, y and

![]() $L_i$

be as in Proposition 2.4. Consider the automorphism

$L_i$

be as in Proposition 2.4. Consider the automorphism

![]() $\varphi =\exp (y)\exp (x)\exp (y)$

. Then

$\varphi =\exp (y)\exp (x)\exp (y)$

. Then

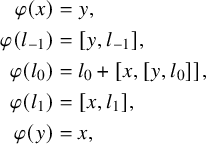

$$ \begin{align*} \varphi(x) &= y , \\ \varphi(l_{-1}) &= [y, l_{-1}] , \\ \varphi(l_0) &= l_0 + [x, [y, l_0]] , \\ \varphi(l_1) &= [x, l_1] , \\ \varphi(y) &= x , \end{align*} $$

$$ \begin{align*} \varphi(x) &= y , \\ \varphi(l_{-1}) &= [y, l_{-1}] , \\ \varphi(l_0) &= l_0 + [x, [y, l_0]] , \\ \varphi(l_1) &= [x, l_1] , \\ \varphi(y) &= x , \end{align*} $$

for all

![]() $l_{-1} \in L_{-1}$

,

$l_{-1} \in L_{-1}$

,

![]() $l_0 \in L_0$

,

$l_0 \in L_0$

,

![]() $l_1 \in L_1$

.

$l_1 \in L_1$

.

Proof. Since $g_x(y)=g_y(x)=1$, we get $\exp (x)(y) = y + [x,y] + x$ and $\exp (y)(x) = x - [x,y] + y$. Since $g_x([x,y]) = 0$ and $g_y([x,y]) = 0$, it now follows that $\varphi (x)=y$ and $\varphi (y)=x$.

Next, let $l_1\in L_1$. Then $\exp (y)(l_1) = l_1$ and $\exp (x)(l_1) = l_1 + [x, l_1]$ because $g_x(L_1) = 0$ (by Proposition 2.4(v)). By the same Proposition 2.4(v), we also have $g_y(L_{-1}) = 0$, and hence,

$$\begin{align*}\varphi(l_1) = \exp(y)\big(l_1 + [x,l_1]\big) = l_1 + [x,l_1] + [y,[x,l_1]] = [x,l_1]\end{align*}$$

by Proposition 2.4(iii). Similarly, $\varphi (l_{-1})=[y,l_{-1}]$ for all $l_{-1}\in L_{-1}$.

Finally, let

![]() $l_0\in L_0$

. Let

$l_0\in L_0$

. Let

![]() $\lambda ,\mu \in k$

be such that

$\lambda ,\mu \in k$

be such that

![]() $[x,l_0]=\lambda x$

and

$[x,l_0]=\lambda x$

and

![]() $[y,l_0]=\mu y$

. Then by the fact that g associates with the Lie bracket, we have

$[y,l_0]=\mu y$

. Then by the fact that g associates with the Lie bracket, we have

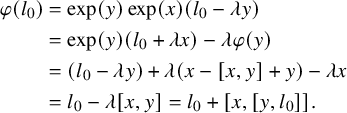

Hence,

$$ \begin{align*} \varphi(l_0) &= \exp(y)\exp(x)(l_0 - \lambda y) \\ &= \exp(y) (l_0 + \lambda x) - \lambda \varphi(y) \\ &= (l_0 - \lambda y) + \lambda (x - [x,y] + y) - \lambda x \\ &= l_0 - \lambda [x,y] = l_0 + [x,[y,l_0]].\\[-34pt] \end{align*} $$

$$ \begin{align*} \varphi(l_0) &= \exp(y)\exp(x)(l_0 - \lambda y) \\ &= \exp(y) (l_0 + \lambda x) - \lambda \varphi(y) \\ &= (l_0 - \lambda y) + \lambda (x - [x,y] + y) - \lambda x \\ &= l_0 - \lambda [x,y] = l_0 + [x,[y,l_0]].\\[-34pt] \end{align*} $$
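The formulas of Lemma 2.8 can be checked numerically in a small example. The sketch below works in $\mathfrak {sl}_3(\mathbb {Q})$ with $x = E_{13}$ and $y = -E_{31}$, and uses the characteristic-zero formula $\exp (z) = 1 + \operatorname {\mathrm {ad}}_z + \tfrac {1}{2}\operatorname {\mathrm {ad}}_z^2$. The example, the listed bases of the graded pieces and the helper names are assumptions chosen for illustration.

```python
from fractions import Fraction as F

def E(i, j):
    """Matrix unit E_ij (1-indexed) in 3x3 matrices."""
    return [[F(1) if (r, c) == (i, j) else F(0) for c in (1, 2, 3)] for r in (1, 2, 3)]

def mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def add(a, b):
    return [[p + q for p, q in zip(r, s)] for r, s in zip(a, b)]

def scale(c, a):
    return [[c * p for p in r] for r in a]

def bracket(a, b):
    return add(mul(a, b), scale(-1, mul(b, a)))

def exp(z):
    """l -> l + [z,l] + (1/2)[z,[z,l]]; assumes char 0 and ad_z^3 = 0."""
    def apply(l):
        zl = bracket(z, l)
        return add(add(l, zl), scale(F(1, 2), bracket(z, zl)))
    return apply

x = E(1, 3)
y = scale(-1, E(3, 1))   # chosen so that g(x, y) = 1

def phi(l):
    # phi = exp(y) exp(x) exp(y), applied right to left.
    return exp(y)(exp(x)(exp(y)(l)))

# Bases of the graded pieces for this pair (x spans L_{-2}, y spans L_2).
L_minus1 = [E(1, 2), E(2, 3)]
L_0 = [add(E(1, 1), scale(-1, E(2, 2))), add(E(2, 2), scale(-1, E(3, 3)))]
L_1 = [E(2, 1), E(3, 2)]

swaps_x_and_y = phi(x) == y and phi(y) == x
minus1_ok = all(phi(l) == bracket(y, l) for l in L_minus1)
zero_ok = all(phi(l) == add(l, bracket(x, bracket(y, l))) for l in L_0)
plus1_ok = all(phi(l) == bracket(x, l) for l in L_1)
```

For instance, $\varphi (E_{12}) = [y, E_{12}] = -E_{32}$ and $\varphi (E_{11} - E_{22}) = E_{33} - E_{22}$, so $\varphi $ indeed reverses the grading in this example.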

Remark 2.9. Because of Lemma 2.8, many results involving the $5$-grading can be ‘swapped around’. We will often do this without explicitly mentioning it.

The automorphism group $\operatorname {\mathrm {Aut}}(L)$ contains a torus preserving the grading. (This easy fact is, of course, true for any $\mathbb {Z}$-graded algebra.)

Lemma 2.10. Let x, y and $L_i$ be as in Proposition 2.4. Let $\lambda \in k^\times $ be arbitrary. Consider the map $\varphi _\lambda \colon L\to L$ defined by $\varphi _\lambda (l_i)=\lambda ^il_i$ for all $l_i\in L_i$, with $i\in [-2, 2]$. Then $\varphi _\lambda \in \operatorname {\mathrm {Aut}}(L)$.

Proof. Clearly, $\varphi _\lambda $ is bijective. Consider $l_i\in L_i$ and $l_j\in L_j$ arbitrary, with $i,j\in [-2, 2]$. Then

$$\begin{align*}\varphi_\lambda([l_i,l_j]) = \lambda^{i+j}[l_i,l_j] = [\lambda^i l_i, \lambda^j l_j] = [\varphi_\lambda(l_i), \varphi_\lambda(l_j)].\end{align*}$$

The following lemma will ensure the nondegeneracy of certain maps later on.

Lemma 2.11. Assume that L is simple, and let x, y and $L_i$ be as in Proposition 2.4. Let $Z_i = \{ z \in L_i \mid [z, L_i] = 0 \}$ for $i = -1$ and $i = 1$. Then $Z_{-1} = Z_1 = 0$.

Proof. Let

![]() $z\in Z_{-1}$

and

$z\in Z_{-1}$

and

![]() $a\in L_{-1}$

. Then

$a\in L_{-1}$

. Then

![]() $[a,z] = 0$

by definition of

$[a,z] = 0$

by definition of

![]() $Z_{-1}$

, and

$Z_{-1}$

, and

![]() $g_y(z) = g_y(a) = 0$

by Proposition 2.4(v). By the Premet identities, we then have

$g_y(z) = g_y(a) = 0$

by Proposition 2.4(v). By the Premet identities, we then have

Since

![]() $[y, L_{-1}] = L_1$

by Proposition 2.4(iii), this shows that

$[y, L_{-1}] = L_1$

by Proposition 2.4(iii), this shows that

![]() $[y,Z_{-1}]\leq Z_1$

. Similarly,

$[y,Z_{-1}]\leq Z_1$

. Similarly,

![]() $[x, Z_1] \leq Z_{-1}$

, but then

$[x, Z_1] \leq Z_{-1}$

, but then

![]() $Z_{-1} = [x, [y, Z_{-1}]] \leq [x, Z_1] \leq Z_{-1}$

, so in fact,

$Z_{-1} = [x, [y, Z_{-1}]] \leq [x, Z_1] \leq Z_{-1}$

, so in fact,

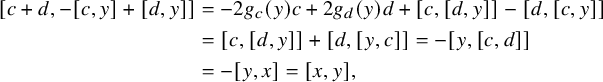

Observe now that by the Jacobi identity, $[[L_0,Z_{-1}],L_{-1}] \leq [L_0,[Z_{-1},L_{-1}]]+[Z_{-1},[L_0,L_{-1}]]=0$, and thus, $[L_0,Z_{-1}]\leq Z_{-1}$; similarly, we have $[L_0,Z_1]\leq Z_1$. Moreover,

$$\begin{align*}[y,[Z_{-1},L_1]] \leq [[y,Z_{-1}],L_1] + [Z_{-1},[y,L_1]] = [Z_1,L_1] = 0,\end{align*}$$

and hence, the map $\varphi $ from Lemma 2.8 fixes $[Z_{-1}, L_1]$. However, $\varphi (Z_{-1}) = [y, Z_{-1}] = Z_1$ and $\varphi (L_1) = [x, L_1] = L_{-1}$, and therefore, $[Z_{-1},L_1] = [Z_1,L_{-1}]$. We can now see that

$$\begin{align*}Z_{-1} \oplus [Z_{-1},L_1] \oplus Z_1\end{align*}$$

is an ideal of L. Because L is simple, we conclude that $Z_{-1}=0$ and $Z_1=0$.

The idea of looking at two different $5$-gradings obtained from hyperbolic pairs is a key ingredient for our results. The following lemma is a very first (easy) instance of this.

Lemma 2.12. Consider

![]() $x,y,a,b\in E$

such that

$x,y,a,b\in E$

such that

![]() $g_x(y)=1=g_a(b)$

. Let

$g_x(y)=1=g_a(b)$

. Let

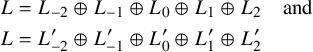

$$ \begin{align*} L &= L_{-2}\oplus L_{-1}\oplus L_0\oplus L_1\oplus L_2 \quad \text{and} \\ L &= L^{\prime}_{-2}\oplus L^{\prime}_{-1}\oplus L^{\prime}_0\oplus L^{\prime}_1\oplus L^{\prime}_2 \end{align*} $$

$$ \begin{align*} L &= L_{-2}\oplus L_{-1}\oplus L_0\oplus L_1\oplus L_2 \quad \text{and} \\ L &= L^{\prime}_{-2}\oplus L^{\prime}_{-1}\oplus L^{\prime}_0\oplus L^{\prime}_1\oplus L^{\prime}_2 \end{align*} $$

be the

![]() $5$

-gradings corresponding to the hyperbolic pairs

$5$

-gradings corresponding to the hyperbolic pairs

![]() $(x,y)$

and

$(x,y)$

and

![]() $(a,b)$

, respectively, as in Proposition 2.4.

$(a,b)$

, respectively, as in Proposition 2.4.

If

![]() $\varphi \in \operatorname {\mathrm {Aut}}(L)$

maps

$\varphi \in \operatorname {\mathrm {Aut}}(L)$

maps

![]() $L_{-2}$

to

$L_{-2}$

to

![]() $L^{\prime }_{-2}$

and

$L^{\prime }_{-2}$

and

![]() $L_2$

to

$L_2$

to

![]() $L^{\prime }_2$

, then

$L^{\prime }_2$

, then

![]() $\varphi (L_i)=L^{\prime }_i$

for all

$\varphi (L_i)=L^{\prime }_i$

for all

![]() $i\in [-2,2]$

.

$i\in [-2,2]$

.

Proof. By assumption, there exist $\lambda ,\mu \in k^\times $ such that $\varphi (x)=\lambda a$ and $\varphi (y)=\mu b$. We have $L_0=N_L(x)\cap N_L(y)$ and $L^{\prime }_0=N_L(a)\cap N_L(b)$ by Proposition 2.4, so $L_0$ is mapped to $L^{\prime }_0$ by $\varphi$.

Next, let $U = \langle x, y, [x,y] \rangle ^\perp $ and $U' = \langle a, b, [a,b] \rangle ^\perp $. By Remark 1.3 and Proposition 1.7(ii), the form g is uniquely determined by the Lie algebra L, so $\varphi (U) = U'$. (Recall that ‘$\perp $’ is with respect to g.) It follows that $\varphi$ maps $L_{-1} = [x,U]$ to $L^{\prime }_{-1} = [a,U']$ and $L_1 = [y,U]$ to $L^{\prime }_1 = [b, U']$.
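As an illustration of Lemma 2.12, we include a small worked example. It is only a sketch, and it relies on assumptions that are not taken from the text above: we take $L = \mathfrak {sl}_3(k)$ with $\operatorname {\mathrm {char}}(k) \neq 2,3$, we write $E_{ij}$ for the matrix units, we assume the normalization $[x,[x,z]] = 2g_x(z)x$, and we assume that the form is $g(u,v) = -\operatorname {\mathrm {tr}}(uv)$ (which agrees with this normalization on rank-one nilpotent matrices). Let $x = E_{13}$, $y = -E_{31}$, $a = E_{12}$, $b = -E_{21}$, and let $\varphi$ be conjugation by the permutation matrix of $\sigma = (2\,3)$, so that $\varphi (E_{ij}) = E_{\sigma (i)\sigma (j)}$, $\varphi (x) = a$ and $\varphi (y) = b$. With $U$ and $U'$ as in the proof, a direct computation should then give

$$ \begin{align*} [x,[x,y]] &= 2x, & [a,[a,b]] &= 2a, \\ U &= \langle E_{12}, E_{21}, E_{23}, E_{32}, E_{11}-2E_{22}+E_{33} \rangle, & U' &= \langle E_{13}, E_{31}, E_{23}, E_{32}, E_{11}+E_{22}-2E_{33} \rangle, \\ L_{-2} &= kE_{13}, & L^{\prime}_{-2} &= kE_{12}, \\ L_{-1} &= [x,U] = \langle E_{12}, E_{23} \rangle, & L^{\prime}_{-1} &= [a,U'] = \langle E_{13}, E_{32} \rangle, \\ L_{1} &= [y,U] = \langle E_{21}, E_{32} \rangle, & L^{\prime}_{1} &= [b,U'] = \langle E_{31}, E_{23} \rangle, \\ L_{2} &= kE_{31}, & L^{\prime}_{2} &= kE_{21}, \end{align*} $$

while $L_0 = L^{\prime }_0$ is the space of diagonal matrices of trace zero. Applying $\sigma$ to the indices on the left-hand side yields the right-hand side, so in this example $\varphi (L_i) = L^{\prime }_i$ for all $i \in [-2,2]$, in accordance with the lemma.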

In Proposition 2.16, we will show that if L is simple and the extremal geometry has lines, we can write x and y as the Lie bracket of two extremal elements in $L_{-1}$ and $L_1$, respectively. We first recall some geometric facts from [CI06].

Proposition 2.13. Assume that L is simple and that the extremal geometry contains lines (i.e., $\mathcal {F}(L) \neq \emptyset $). Let $x,y \in {\mathcal {E}}$. Then

- (i) If $(x,y) \in E_{-1}$, then $E_{-1}(x) \cap E_{1}(y) \neq \emptyset $.

- (ii) If $(x,y) \in E_0$, then $E_0(x) \cap E_{2}(y) \neq \emptyset $.

- (iii) If $(x,y) \in E_1$, then $E_{-1}(x) \cap E_{2}(y) \neq \emptyset $.

- (iv) If $(x,y) \in E_2$, then $E_{-1}(x) \cap E_{1}(y) \neq \emptyset $, while $E_{-1}(x) \cap E_{\leq 0}(y) = \emptyset $.

Proof. By Remark 2.2, L does not contain sandwich elements. By [CI06, Theorem 28], $({\mathcal {E}}, \mathcal {F})$ is a so-called root filtration space, which is either nondegenerate or has an empty set of lines. By our assumption, the latter case does not occur.

In particular, the statements of [CI06, Lemmas 1 and 4] and [CI07, Lemma 8] hold. Now claim (i) is [CI06, Lemma 4(ii)] and claim (ii) is [CI07, Lemma 8(ii)]. To show claim (iii), let $z := [x,y]$, so $(z,x) \in E_{-1}$. By (i), we can find some $u \in E_{-1}(x) \cap E_1(z)$. We can now invoke [CI06, Lemma 1(v)] on the pairs $(y,x)$ and $(z,u)$ to conclude that $u \in E_{-1}(x) \cap E_2(y)$.

Finally, to show (iv), we first observe that the nondegeneracy of the root filtration space implies that $E_{-1}(x) \neq \emptyset $ (this is condition (H) on [CI06, p. 439]) and hence, there exists a line $\ell $ through $\langle x \rangle $. We now use the defining properties (D) and (F) of a root filtration space (see [CI06, p. 435]) to see that ${\mathcal {E}}_1(y)$ has nonempty intersection with $\ell $, as required. The final statement $E_{-1}(x) \cap E_{\leq 0}(y) = \emptyset $ is just the defining property (D) itself.
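To make claim (iv) concrete, we sketch one instance; the setting is an assumption made for illustration only and is not part of the argument above. We take $L = \mathfrak {sl}_3(k)$ with $\operatorname {\mathrm {char}}(k) \neq 2,3$, matrix units $E_{ij}$, standard basis column vectors $e_i$, the normalization $[x,[x,z]] = 2g_x(z)x$, and the usual conventions of [CI06] for linearly independent extremal elements: $(x,u) \in E_{-1}$ if $[x,u] = 0$ and every nonzero $\lambda x + \mu u$ is extremal, $(y,u) \in E_1$ if $[y,u] \neq 0$ and $g_y(u) = 0$, and $(x,y) \in E_2$ if $g_x(y) \neq 0$. For $x = E_{13}$, $y = -E_{31}$ and $u = E_{12}$, one should find

$$ \begin{align*} [x,[x,y]] &= 2x, & &\text{so } g_x(y) = 1 \text{ and } (x,y) \in E_2, \\ [x,u] &= 0, \quad \lambda x + \mu u = e_1(\mu e_2 + \lambda e_3)^{\top}, & &\text{so } (x,u) \in E_{-1}, \\ [y,u] &= -E_{32} \neq 0, \quad [y,[y,u]] = 0, & &\text{so } g_y(u) = 0 \text{ and } (y,u) \in E_1. \end{align*} $$

Here $\lambda x + \mu u$ is a rank-one matrix of trace zero, which is extremal in this setting. Hence $u \in E_{-1}(x) \cap E_1(y)$, and the points $\langle \lambda x + \mu u \rangle $ form a line through $\langle x \rangle $ of the kind used in the proof.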

Lemma 2.14. Let x, y and $L_i$ be as in Proposition 2.4. Then $E_{-1}(x) \cap E_1(y) = E \cap L_{-1}$.

Proof. By Proposition 2.5, we have $E_{-1}(x) = (E \cap L_{\leq -1}) \setminus L_{-2}$. Similarly (e.g., by applying Lemma 2.8; see Remark 2.9), we have