Introduction

As the information landscape continues to evolve, disinformation is proliferating at a rate and scale never before seen in a democratic society. In recent years, major technology companies have taken much of the public blame for this reality, given that their algorithms facilitate the sharing of – and sometimes even promote – falsehoods. This, however, misses a key reality: social media, search engines, and messaging services are not fully automated technologies. Rather, they are heteromated: they are reliant on participating humans to serve their economic goals. Focusing on users, and on the sharing rather than the origination of disinformation, we connect theories of heteromation with those surrounding the Human Infrastructure of Misinformation (HIM), with the express purpose of contributing to a more holistic understanding of how and why misinformation is so prevalent online. We also approach aspects of the HIM within the Governing Knowledge Commons (GKC) framework in order to contribute to the understanding of how shared knowledge, information, and data are governed in a collective setting.

In the past, to run an effective disinformation campaign, one often had to sneak around institutional gatekeepers. In the pre-internet era in the Western world, the media was largely centralized and bound by ethical standards. So, in order to promote a false story, one might need to persuade a news outlet that a falsehood was true and then hope the organization deemed the story worthy of a column or segment. Utilizing the closed-off infrastructure of the news media was one way to reach the eyes and ears of millions (Shimer 2021).

As more individuals began to use the internet, these traditional gatekeepers remained stewards of their domains. However, the information superhighway was steadily getting busier, and the road had few tolls. This internet epoch was the “Rights Era,” a classification coined by Jonathan Zittrain (2019) to highlight the feeling at the time that information deserved to be free and that individuals had the right to disseminate and consume it.

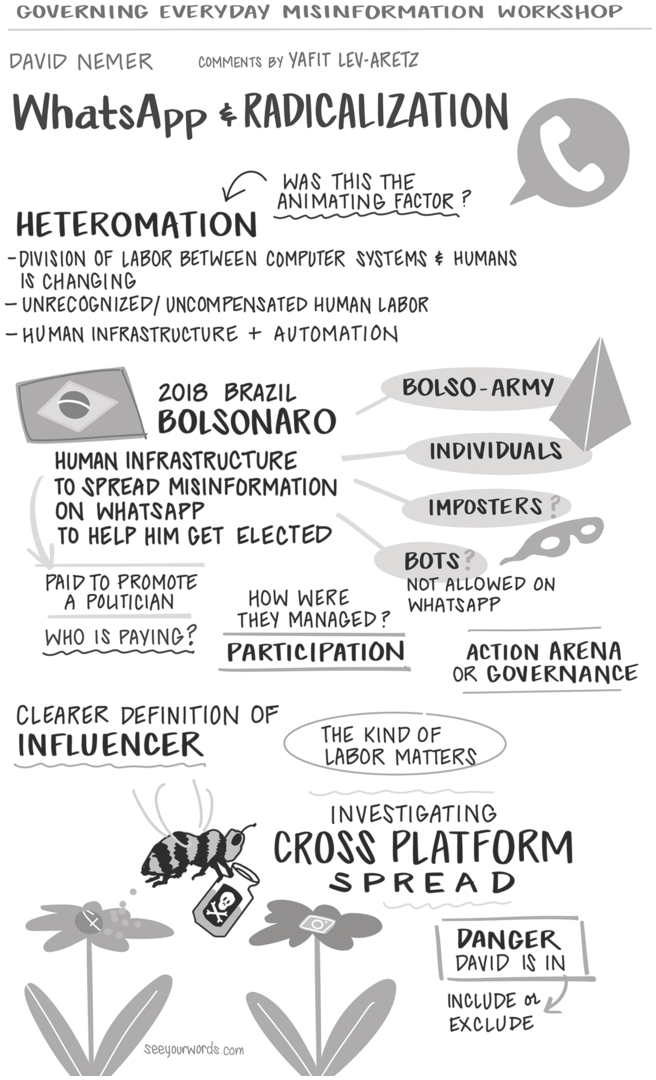

Figure 7.1 Visual themes from the human infrastructure of misinformation: a case study of Brazil’s heteromated labor.

In 2004, a Google search for the term “Jew” returned Jewwatch.com as its first result. The horribly antisemitic website denied the Holocaust and stated that it was “keeping a close watch on Jewish communities and organizations worldwide.” A Google spokesman responded that while the company was disturbed by the page rankings, it would not make any changes, in order to preserve “the objectivity of our ranking function” (Flynn 2004). The Anti-Defamation League, a Jewish nongovernmental organization, defended Google, saying that the search rankings were an unintentional, unfortunate reality (Noble 2018). To the public, and even to those with an interest in the issue, Google was simply a tool that helped users see what was popular online. Its rankings did not reflect any judgment, and the company claimed it could not control automatically generated search results. Google maintained the pipes serving users their information, but the pipes were dumb. Google’s peers, including Facebook and Twitter, served a similar custodial role.

Over time, however, the pipes became smarter and more precise. Social media companies and other tech companies built out their capabilities. Now, if a post violated a company’s rule or standard, the organization could do any of the following: delete the post or ban the poster; label the post as controversial; contextualize the post, perhaps by pinning a “fact-check” to it; or apply friction to it by refusing to promote the post or restricting users’ ability to share it – as managed by platforms such as Twitter, Facebook, and Instagram (Mena 2020). As the capacity for intervention increased, so too did calls to intervene. Behind the black boxes of algorithms promoting or adding friction to posts, technical design decisions made to affect behavior, and institutions standing up to make decisions about content online, it can be easy to lose track of the heteromation involved: of the humans spreading disinformation and, on the other side, moderating or choosing not to moderate it.

This can be aptly shown in the case of the spread of misinformation on WhatsApp during Brazil’s 2018 general elections.[Footnote 1] Critics contend that social media like Facebook, Instagram, and Twitter are plagued by filter bubbles and echo chambers, many of which are promulgated through personalized algorithmic feeds. An echo chamber is what might happen when people are overexposed to news that they like or agree with, potentially distorting their perception of reality because they see too much of one side, not enough of the other, and they start to think perhaps that reality is like this (Fletcher 2020). A filter bubble is “a state of intellectual or ideological isolation that may result from algorithms feeding us information we agree with, based on our past behaviour and search history” (Fletcher 2020). The term was coined by Eli Pariser (2011). Facebook creates filter bubbles by using personal information and online behavior to curate the information that shows up on users’ feeds.

One of the dangers of filter bubbles is that platforms become a hotbed for mis- and disinformation, since its distribution may not break out of the filter bubble at its point of origin (DiFranzo and Gloria-Garcia 2017). Several studies have shown that the spread of mis- and disinformation resembles an epidemic more closely than the spread of real news stories does and that such stories usually stay within the same communities (Jin et al. 2013); that is, mis- and disinformation tends not to reach or convince outsiders. Mis- and disinformation and selective news filtering gained attention from scholars and the general public after they contributed to social media platforms’ ideological polarization that favored Donald Trump in the 2016 US presidential election and “Vote Leave” (known as Brexit) in Britain’s European Union membership referendum (Spohr 2017; Vaidhyanathan 2018). But since WhatsApp runs on a peer-to-peer architecture, no algorithm curates content according to the characteristics or demographics of the users. Instead, a human infrastructure was assembled to create a pro-Bolsonaro environment on WhatsApp and spread misinformation to bolster his candidacy. In this chapter, we articulate the labor executed by the Human Infrastructure of Misinformation as heteromated labor.

The field of disinformation studies has yet to address questions related to the relationship between disinformation and labor, identity, and morality. Thus, as claimed by Jonathan Ong and Jason Cabañes (2019), approaching disinformation as a culture of production expands the field into understanding the social conditions that entice people to this work and the creative industry practices that normalize fake news as a side gig. By framing the Human Infrastructure of Misinformation as heteromated labor, this chapter joins a growing body of literature that critically examines disinformation as a culture of production, which means “conceptualizing disinformation as both product and process emerging from organizational structures, labor relations, and entrepreneurial subjectivities” (Ong and Cabañes 2019, 5772). We also hope to highlight how disinformation is both a collaborative process and an intentional product of hierarchized and distributed labor, so we can better inform disinformation interventions and regulations that address concerns with political campaigns.

Heteromation

The division of labor between computer systems and human beings has changed along both human and technical dimensions. As claimed by Hamid Ekbia and Bonnie Nardi (2014), there has been a shift from technologies of automation, those which are entirely managed by machines, to those of “heteromation,” which push critical tasks to end-users as indispensable mediators. Unlike technologies of automation, those of heteromation benefit from or depend on often unrecognized or uncompensated human labor to complete tasks. As Ekbia and Nardi (2014) originally noted, they also do not all fit a singular mold; heteromation, like automation, is a process that can describe a wide range of business models and behaviors.

Users may or may not know they are serving as a cog in the heteromated machine. In some cases it is clear: Amazon Mechanical Turk, first launched in 2006, is a “human-as-a-service” crowdsourcing marketplace in which employers pay “MTurkers” to simulate automation through the completion of small, repetitive tasks. Sometimes it is hazier whether users are working on behalf of a company: when they review a video game on YouTube or engage in community governance online, such as by serving as a nonemployee moderator on a subreddit (a topic-based forum on the popular website Reddit), they are taking part in a heteromated process. So are those who, even without such a semi-formal role, simply “upvote” or “downvote” a post, thereby feeding the site’s algorithm so that it may show other “redditors” well-liked or controversial posts or comments. Meta, which owns Facebook, Instagram, and WhatsApp, and Alphabet, which owns Google and YouTube, each generate billions of dollars of revenue by relying on users, whether they be social media influencers, everyday people, or businesses, to create data, to produce posts that hold browsers’ attention, and ultimately to view the advertisements that the companies make money from. Doug Laney (2012) wrote in the Wall Street Journal that “Facebook’s nearly one billion users have become the largest unpaid workforce in history.” He estimated that each user provided about $81 of worth to the site.

In Heteromation, and Other Stories of Computing and Capitalism, Ekbia and Nardi (2017) outline five varieties of heteromated labor: communicative, cognitive, creative, emotional, and organizing. The authors first define communicative labor as the act of engaging online in return for self-validation and self-promotion. This is the Facebook model, where user signals such as “likes” and “comments” – “social data,” as the authors refer to it – are used to build an effective advertising model. Next, Ekbia and Nardi describe cognitive labor – the Amazon Mechanical Turk model – as when complex systems deliberately and knowingly use human intelligence and work to complete tasks and function properly. They then explain creative labor, when users produce creative outputs – like submitting a logo to a design contest or modifying a video game – in return for recognition, community, or a chance for monetary reward.

After that, the authors discuss emotional labor, which, in the context of heteromation, occurs when individuals help machines “care” for people. As the authors note, human labor is often required to allow machines to work efficiently and effectively. Consider, for instance, the example of therapeutic robots. They may help individuals, for example by comforting and stimulating the elderly. Still, caregivers often must calibrate devices to the patients’ needs. Lastly, the authors tell of organizing labor, which draws on “the human capacity to organize an activity.” Examples include customer surveys.

Heteromation is a product of current conditions including: (1) historically high levels of profit in which no realm of human activity is too private, repetitive, or objectifying to escape capital’s grip; (2) the “race to the bottom” of increasing economic disparity resulting in the precariat; (3) a cultural need for high levels of stimulation fed by decades of quality popular entertainment and the generalized anxiety of the neoliberal subject which renders such stimulation particularly desirable; and (4) feelings of uselessness or futility experienced by growing segments of the population such as the elderly, the chronically ill, the impoverished, and healthy retired people faced with twenty to thirty years without the social rewards of employment (Ekbia and Nardi 2017). Heteromation, as described, is fundamentally a product of social dilemmas that aren’t evident in the world since the community of people who manage or govern information are kept in a precarious state and are hidden from the public eye.

While some technologies of heteromation – or the companies that run them – ask or require individuals to conduct specific and defined forms of labor, others take advantage of what Zittrain (2008) calls “generativity,” or “a system’s capacity to produce unanticipated change through unfiltered contribution from broad and varied audiences.” Generative technologies are often innovative themselves; still, they typically rely on the creativity of others to sustain economic prosperity and drive growth. As Zittrain (2008) writes, while generativity’s output is innovation, its input is participation. Can you imagine if, when you “googled” New York City, the only results returned were what an individual Google employee had to say about the city? Or if you logged onto TikTok, only to see the content it paid directly for, as though it were a firm like Netflix? No, instead, Google and TikTok help create the conditions under which users produce their own content, for their own reasons, for free. And therein lies the value of platforms.

Background: WhatsApp in Brazil

When approaching a knowledge commons, in this case pro-Bolsonaro WhatsApp groups, it is important to analyze the environment that contains the resources that are to be shared and managed. According to Madison et al. (2009), “we must take a step back before describing the relevant characteristics of the shared resources to ask how we should define the environmental backdrop against which a commons is constructed.” Thus, in this section we describe the background in which the knowledge commons was nested.

WhatsApp has been popular in Brazil since it entered the market in 2009. In 2018, WhatsApp had about 120 million active users in Brazil, out of a total population of 210 million. About 96 percent of Brazilians with access to a smartphone used WhatsApp as one of their main methods of communication (Nemer 2019). WhatsApp’s popularity was driven by its low cost compared to SMS texting, which in Brazil could cost around fifty-five times what it would in North America. Another reason for the app’s popularity was that after Facebook bought WhatsApp for US$19 billion, the company partnered with the telecoms to offer a zero-rating plan that allowed subscribers to use WhatsApp basically for free. Zero-rating (also known as sponsored data) “refers to the practice in which mobile networks offer free data to customers who use specific services (e.g., streaming videos) or smartphone applications (e.g., [Facebook Chat], WhatsApp). Thus, customers who access this zero-rated/sponsored content do not pay for the mobile traffic generated by such use” (Omari 2020, 7).

WhatsApp also makes it easy to create group chats and share content, including videos. The app became a hotbed for political campaigns that utilized disinformation not only because of its affordances and widespread reach in the country but also because of its end-to-end encryption, which ensured that nobody besides the sender and the receiver could read the content of messages. As such, it is nearly impossible for WhatsApp analysts to identify disinformation campaigns (Nemer 2022).

Attributes: The Creation and Maintenance of the Commons

Madison et al. (2009) ask “what technologies and skills are needed to create, obtain, and use the resources?” and “what are the characteristics of the resources?” In this section, we describe some attributes of the knowledge commons (pro-Bolsonaro WhatsApp groups) and give hints of how it was created and maintained.

During Nemer’s fieldwork in Vitória, Brazil, in December 2017, Neuza, a 27-year-old woman, showed him a short video that she got in a WhatsApp group called “Bolsonaro our president.”[Footnote 2] In the video, Jair Bolsonaro tells Maria do Rosário, a member of Congress with the Workers’ Party (PT), “I would never rape you because you don’t deserve it.” The video came with the label: “This is how we treat communists.” Neuza vehemently condemned the video. Then when asked how she got into such a group, she simply told Nemer: “Someone added me to this group chat. […] There are three people I know on here; that’s why I stayed. I thought it was a group chat of friends, but all these other people do is talk about Bolsonaro. Nonstop! They send all kinds of videos and photos of him. It’s annoying and I don’t know how to leave [the group].”

Fatima (49 years old) and Regina (39 years old) also mentioned that the WhatsApp groups they were part of had become spaces to talk about the upcoming 2018 elections – with a focus on Bolsonaro. But instead of being added to a chat, they had the experience of new people being added to their existing groups. The new arrivals brought up Bolsonaro. “These two guys who I don’t know entered our church group chat and always talk about politics,” Fatima explained. “This is a church group, not politics,” she added with a laugh. “They go on and on about how socialism is evil … but wasn’t Jesus Christ a socialist?”

Listening to Neuza, Fatima, and Regina describe their experiences on WhatsApp, Nemer could relate to what they said: the same thing was happening with his own WhatsApp groups. In his extended family’s group chat, Nemer noticed that three cousins were constantly sharing homemade misinformation and pro-Bolsonaro memes and videos. Since he knew they were not the ones creating them, Nemer asked them who made the content. Their answers were always the same: “I don’t know. I got it from another group chat,” Giovane, 46 years old, said. Most of the political and misinformation content that the cousins were sharing matched what Regina and Neuza were seeing. Since WhatsApp runs on a peer-to-peer architecture, there was no algorithm curating content according to their characteristics or demographics, which is how filter bubbles work on Facebook. Spreading mis- and disinformation on WhatsApp required deliberate human labor to create and distribute this content.

Governance Action: A Social and Political Messaging Machine

If Brexit and Donald Trump’s election were made possible by Facebook’s algorithms, then the rise of Brazil’s far-right firebrand Jair Bolsonaro was partly due to human machinations on WhatsApp. On the Meta-owned messaging app, a Human Infrastructure of Misinformation (HIM) was assembled to make the environment on the app feel staunchly pro-Bolsonaro through messaging and misinformation campaigns.[Footnote 3] The HIM turned pro-Bolsonaro WhatsApp groups into the action arena where governance was taking place. They became the social spaces “where participants with diverse preferences interact, exchange goods and services, solve problems, dominate one another, or fight (among the many things that individuals do in action arenas)” (Ostrom 2009).

Infrastructures, as anthropologist Brian Larkin (2013) defines them, are “built networks that facilitate the flow of goods, people, or ideas and allow for their exchange over space” (328). While there has been increasing work on social engagements with infrastructures in technological systems, particularly in the Global South (see Dye et al. 2018; Jack, Chen, and Jackson 2017; Nguyen 2016; Sambasivan and Smyth 2010), here we build on an understanding of infrastructure that goes beyond technological artifacts and focuses on humans as central to such networks. To explore the human side of infrastructure, we look at how humans organize to perform labor and accomplish tasks. We also follow Nithya Sambasivan and Thomas Smyth’s (2010) concern for “social practices, flows of information and materials, and the creative processes that are engaged in building and maintaining these substrates” (1).

Bolsonaro became known more for his controversial speeches than for having a robust and well-formulated policy platform. He celebrated dictatorship, glorified torture, promised to reverse policies that protect the Amazon region, and threatened Brazil’s women, Black people, and LGBTQIA people. However, none of his threats had an impact on Bolsonaro’s popularity, since his fragmented approach to governance attempted to please an electorate composed of a loose coalition brought together by the candidate’s appeal to “bullets, bibles, and bulls” – a conservative political agenda that aims to favor evangelical and Pentecostal politicians, pro-gun policies, and pro-agriculture and anti-environment acts (Schipani and Leahy 2018). Bolsonaro ran a campaign based on the idea that his presidency was the only hope to end violence and corruption in Brazil. His supporters called him Mito (Legend or Myth) and expected him to reinstate law and order in the country. Given the hyperpolarization of the elections, Bolsonaro built his rise on people’s distrust in politics and the wariness of politicians and political establishments in general. Bolsonaro presented himself as the anti-establishment candidate, even though he had been a member of the Brazilian Congress for twenty-seven years.

Although mis- and disinformation had spread in Brazil across all forms of social media, WhatsApp’s impact was the most notable. Due to the app’s popularity, about 44 percent of the voting public in Brazil used WhatsApp to discover political information, according to the polling institute Datafolha (G1 2018). The app’s simple design allowed users to easily share text, audio notes, images, and videos – which facilitated the spread of misinformation. A study of 100,000 WhatsApp images widely shared in Brazil during the election found that more than half contained misleading or blatantly false information (Tardáguila, Benevenuto, and Ortellado 2018). Another study conducted by fact-checking agencies involved in Comprova found that 86 percent of false or misleading content shared on WhatsApp benefited Bolsonaro by attacking his opponent, Fernando Haddad, and his party, PT (I. Macedo 2018).

The Actors of the Human Infrastructure of Misinformation

Given the prevalence of WhatsApp use and the intriguing way that misinformation was spread during the 2018 presidential election, Nemer decided to research who was creating the false content and sharing it with people like Neuza, Fatima, and Regina. Nemer joined four self-declared pro-Bolsonaro WhatsApp groups via invitation links that were publicly listed in the description of conservative YouTube videos.[Footnote 4] In March 2018, Nemer began monitoring these groups, which had an average of 160 members. At the peak of the election cycle, each group was posting an average of 1,000 messages per day. In August, after conducting the first thematic analysis of the data, Nemer identified three clusters of actors across the groups: the Average Brazilians, the Bolso-Army, and the Influencers, as detailed in Table 7.1. He found that misinformation was spread in these groups through a pyramid structure, similar to the classic two-step flow of communication model (Katz and Lazarsfeld 1966),[Footnote 5] in which each cluster of actors occupied a level. Influencers were at the top and Average Brazilians were at the bottom.

Table 7.1 Clusters of members across pro-Bolsonaro WhatsApp groups and their duties

| Cluster of actors | Duties |
|---|---|
| Influencers | Create and/or bring new misinformation to pro-Bolsonaro WhatsApp groups. |
| Bolso-Army | Reinforce the content of the Influencers’ misinformation and spread it across WhatsApp and other platforms, such as Twitter and Facebook. They also managed pro-Bolsonaro WhatsApp groups. |
| Average Brazilians | Consume misinformation and bring it to their personal groups. |

The vast majority of the actors fit within the description of the Average Brazilian. According to sociologist Esther Solano, Bolsonaro’s typical voter was male, White, and middle class and held a college degree (Moysés 2018). However, on these WhatsApp groups, as Nemer analyzed the actors’ conversations, he noticed that they were coming from different social classes and were both men and women. They justified their vote for Bolsonaro by sharing their life experiences and difficulties. Before entering the groups, many of them mentioned that they didn’t have a strong opinion about the candidate. However, they saw WhatsApp groups as safe spaces where they could learn more about the Mito, verify rumors and news, and obtain digital content to share on other social media accounts and groups. Many of them voted for a different right-wing candidate in the first round of voting and switched to Bolsonaro for the final runoff. One of these people was Carlos, who said in the groups that he “wasn’t going to vote in the runoff, but [did] after learning that our country was under an imminent socialist attack.” He claimed that he decided to vote for Bolsonaro on the basis of this information.

Bolsonaro’s groups functioned as echo chambers governed by the Bolso-Army and Influencers. Every time a member posted disinformation – such as poll results or memes about Bolsonaro – members rallied by cheering with the Brazilian flag – a sign of Bolsonaro’s new emphasis on Brazilian nationalism – or by posting a specific emoji. The backhand index finger pointing right or left (👉) was Bolsonaro’s trademark handgun symbol, referring to his promise to relax gun controls and allow police officers to shoot suspects with impunity. The Bolso-Army was Bolsonaro’s loyal fan base and the machinery that stood ready to attack anyone who insulted Bolsonaro on WhatsApp or other social media platforms. They began following him long before his campaign started because they were in fact part of the governance team of these WhatsApp groups and kept a vigilant eye out to promptly ban infiltrators or people who dared to challenge anything related to the candidate.

In these groups, debate or discussion about Bolsonaro’s policies was difficult. People got kicked out because they asked questions related to Bolsonaro’s refusal to participate in televised debates, his family’s mysterious assets, and even his record as a congressman. Every time average users attempted to verify information or ask a question, they were flooded with passionate Bolso-Army messages to shut down any doubt about Bolsonaro’s legacy. Their arguments were mostly founded on misinformation.

The Bolso-Army formed the glue that held the Human Infrastructure of Misinformation (HIM) together to actively disseminate the misinformation produced by influencers across pro-Bolsonaro WhatsApp groups and other social media platforms. Given their stance, which displayed extreme confidence and left no space for questions, average users felt secure with the information they were given. They recirculated it, helping to spread misinformation even further. Influencers had a decisive role in creating mis- and disinformation. There were only four or five influencers per group, and they were not the most outspoken or active participants. They worked backstage to create and share fake news in these groups and to coordinate online and offline protests. They used image- and video-editing software to create convincing and emotionally engaging digital content. They knew how to work content into memes and short texts that went viral.

The Influencers took advantage of the Bolso-Army’s loyalty to quickly spread their fake news. They often used affect (satire, irony, and humor) to create their content, creating memes about “Bolsonaro the Oppressor” to ironically show Bolsonaro’s human side. They also worked fast to create fake news to delegitimize anybody who criticized Bolsonaro before group members read the news in other venues. For example, Marine Le Pen – the iconic far-right politician from France – stated that “Bolsonaro says extreme things, unpleasant things which are insurmountable in France.” Within thirty minutes of this story coming out in a popular Brazilian publication, the Influencers pooled resources together and posted a meme saying that Le Pen was a Communist (Nemer 2021). Their strategy was to label everyone who might undermine Bolsonaro a Communist and to discredit mainstream news.

Traditional right-leaning news outlets such as Veja and Estado de São Paulo were actually labeled as socialist venues by members of pro-Bolsonaro groups. The mis- and disinformation produced in WhatsApp insidiously altered perception, but the absurdity of some stories was even more astonishing. A group of influencers created a flyer alerting their members that Haddad, the candidate from the Workers’ Party (PT), would sign an executive order allowing men to have sex with twelve-year-old children. When David Duke endorsed Bolsonaro for thinking like the Ku Klux Klan (KKK), the influencers were quick to produce content depicting the KKK as a product of the left-wing party, to distance Bolsonaro’s image from the KKK. During the first round of votes, they circulated fake videos that showed malfunctioning electronic voting machines to reinforce the idea that the elections were rigged.

Influencers also found public videos on YouTube and Facebook that challenged Bolsonaro and posted their links to the WhatsApp groups so the “Bolso-swarm” could descend on them to express their dislike and show support for their legend. Although these three types of members – the Average Brazilians, the Bolso-Army, and the Influencers – had different roles in the Bolsonarist WhatsApp ecosystem, they had a lot in common.

Many Influencers and the Bolso-Army were compensated between US$100 and US$250 per week to distribute pro-Bolsonaro content and manage WhatsApp groups, as claimed in 2019 by former members of the Bolso-Army. By revealing this, they implicitly criticized influential groups of businessmen who they said financed the network, and they suggested that virtual militias (known as the Virtual Activist Movement) were paid to infiltrate WhatsApp groups and spread misinformation. They didn’t directly implicate Bolsonaro’s campaign team, though they have said that at least one person who was an adviser in Bolsonaro’s government was among those paid to feed fake news to his supporters.

What happened during the 2018 presidential election debunked the idea that WhatsApp is a level playing field. WhatsApp’s peer-to-peer encrypted architecture may give users a sense of security and privacy since there is no algorithm intervening in their messages. It may also give them a sense of spontaneity since the app affords anyone the ability to produce and share content. However, as described earlier, Bolsonaro’s campaign relied on mis- and disinformation that was systematically created and spread by the HIM that orchestrated a guided campaign. It is hard to verify the exact impact that digital populism had on the 2018 presidential elections. However, given Carlos’s account and many like it, in which people felt motivated to get out and vote for Bolsonaro, the HIM behind the disinformation campaign on WhatsApp undeniably helped Bolsonaro become Brazil’s next president.

Discussion

The Human Infrastructure of Misinformation (HIM) comprises a sociotechnical arrangement where humans organize to perform labor with the aid of technological systems and accomplish tasks that pertain to the creation, distribution, and consumption of misinformation. The HIM sustained an information system, in this case, misinformation on WhatsApp, that functioned through the actions of heterogeneous actors – in other words, the members of such infrastructure were users that provided the heteromated labor necessary to make misinformation on WhatsApp work.

Hamid Ekbia and Bonnie Nardi (2014) examine heteromated systems according to their functionality and reward structure. They categorize systems in terms of who benefits from the heteromated labor relation, whether monetary compensation is offered to participants, and whether the system produces affective rewards. Beneficiaries are social actors that reap the major benefits of heteromated labor. Participants may benefit from affective rewards. Following the authors’ categorization, the heteromated system in which the HIM labored can be organized as detailed in Table 7.2.

Table 7.2 Heteromation of the human infrastructure of misinformation

| System | Heteromated functionality | Beneficiaries | Participant compensation | Affective rewards for participants |
|---|---|---|---|---|
| Misinformation on WhatsApp | Human Infrastructure of Misinformation. Influencers: content creation; cognitive and creative labor. Bolso-Army: repetitive microtasks; communicative and organizing labor. Average Brazilians: emotional and communicative labor. | Politicians | Influencers: medium-high. Bolso-Army: medium-high. | Average Brazilians: high |

The heteromated labor performed by Influencers and the Bolso-Army was compensated in amounts of between US$100 and US$250 per week (approximately BRL 380 to BRL 900). Such a weekly amount can be considered “medium-high” since the Brazilian minimum monthly wage in 2018 was set at BRL 954 and the average monthly household income per capita was BRL 1,337 (IBGE 2019). Influencers were at the top of the HIM: They were responsible for producing and bringing new misinformation to pro-Bolsonaro groups on WhatsApp. Their cognitive labor consisted of understanding the current and complex political environment in order to promote the presidential candidate Jair Bolsonaro and/or to respond to the criticism that Bolsonaro was receiving during his campaign. Such cognitive labor led to the Influencers’ creative labor, which meant creating outputs like pro-Bolsonaro memes and short videos. Unlike the common understanding of what an online influencer attempts to accomplish, the HIM’s Influencers were not seeking recognition or fame; instead, they wanted to remain unrecognizable. We categorize them as Influencers since they had access to a large audience in WhatsApp groups and were able to persuade others to act based on their content. They only participated once or twice a day, bringing new misinformation to the groups, because they knew that they could rely on the Bolso-Army to reinforce the message embedded in the content they created.

As for the Bolso-Army, they did not engage in heteromated cognitive labor, as defined by Ekbia and Nardi (2017). Instead, they were in charge of repetitive microtasks that followed a predefined script and/or reacted to a previous trigger. The Bolso-Army performed communicative labor: as soon as new content was posted to those pro-Bolsonaro groups on WhatsApp, they were scripted to reinforce the message of the Influencers’ content in order to make sure that the Average Brazilians actually internalized it, as well as to spread the same content to other WhatsApp groups and to platforms such as Twitter and groups on Facebook. Ekbia and Nardi describe communicative labor as the act of engaging online in return for self-validation and self-promotion. Members of the Bolso-Army, by contrast, were not necessarily after self-validation and/or self-promotion; their main goal was to validate and promote the message contained in the Influencers’ posts.

Another form of heteromated labor performed by the Bolso-Army was organizing. Bolso-Army members were also part of the admin team that managed those WhatsApp groups – they stood ready to remove from those groups anyone who insisted on questioning Bolsonaro’s legacy and campaign or whom they perceived as infiltrators – users who joined those groups and flooded them with messages criticizing Bolsonaro. They also worked hard to keep the tone in those groups favorable to Bolsonaro. They organized online harassment by asking Average Brazilians to descend on posts on other social media platforms to express their dislike and show support for Bolsonaro.

The Average Brazilians, at the base of the HIM, performed communicative labor as they also helped spread the misinformation posted on those pro-Bolsonaro groups to their other personal groups that were not necessarily politically oriented, such as Family, Neighborhood, and Friend groups. They often used the content posted by the Influencers as evidence for their arguments in their online debates. Average Brazilians also performed emotional labor, but not the emotional labor defined by Ekbia and Nardi (2017), which occurs when individuals help machines “care” for others, for example, when caregivers help users adopt and calibrate technology such as therapeutic robots. Average Brazilians performed emotional labor as defined by Hochschild (1983), which refers to regulating or managing feelings to fulfill the emotional requirement of one’s work role. They were expected to regulate their emotions during interactions with the Bolso-Army and other Average Brazilians. Fearing being kicked out of those WhatsApp groups, they often complied with the Bolso-Army’s authority and avoided asking questions that could be interpreted as challenging Bolsonaro’s legacy. Instead, they often responded to the Influencers’ or the Bolso-Army’s posts by cheering with the Brazilian flag, with validating messages, or with Bolsonaro’s trademark handgun emoji (👉). Average Brazilians perceived that validating or positive response as a way to have their loyalty recognized by the Bolso-Army and rewarded with their permanence in the groups. Such emotional labor was done to give the Bolso-Army or Influencers the feeling that their labor was appreciated and that they were succeeding in promoting Bolsonaro. In each of these instances, humans participated in the heteromated process of spreading disinformation.

Studies on heteromated systems have focused on creating awareness around labor exploitation, compensation, and invisibility, and on advocating for labor rights (see Bailey et al. 2018; Ekbia and Nardi 2019; Irani 2019; Sambasivan et al. 2021). However, in the case of the Human Infrastructure of Misinformation, given its unethical and quasilegal practices, such awareness may be neither wanted by nor beneficial to its members. In many cases, we might call for unpaid heteromated labor to be compensated, or for greater acknowledgment of the work of low-paid creators. However, when it comes to the heteromated process of the HIM, the actors involved are creating what is likely a societal net negative, calling into question the types of behaviors that should be incentivized.

As it turns out, money was a strong motivator for Influencers and members of the Bolso-Army to remain loyal and contribute to Bolsonaro’s digital propaganda machine. While some were surely ideologues or political allies looking to push Bolsonaro’s campaign and presidency, others were likely swayed by the economic opportunity of being paid up to US$250 a week.

Importantly, many of the labors performed by the HIM can be – and indeed often are – done by algorithms on other platforms. On WhatsApp, the Influencers, the Bolso-Army, and Average Brazilians moderate chats and spread disinformation. On social media platforms like Twitter or Instagram, those roles are filled by algorithms, including recommendation engines and algorithmic content moderators. Where a lack of automation creates a gap, heteromated labor fills it. This labor may be adaptive and resilient too; when the ecosystem changes, the labor can adjust to still serve its goals (Marks 2021).

Approaching HIM as heteromated labor and as a culture of production, which means “conceptualizing disinformation as both product and process emerging from organizational structures, labor relations, and entrepreneurial subjectivities” (Ong and Cabañes 2019, 5772), exposes the broader systems of practices that normalize and incentivize its everyday work arrangements. Our study attempts to highlight how disinformation is a process of collaboration and an intentional product of hierarchized and distributed labor. It joins Jonathan Ong and Jason Cabañes’ call for proposing new “questions of ethics and accountability that consider broader institutional relations at play” (2019, 5772). Our study also sheds light on the responsibility of disinformation actors working in different capacities – such as Influencers, the Bolso-Army, or low-level misinformation consumers and spreaders like the Average Brazilians. Each group’s motivations and intentions may vary, but taken together, they constitute the key players in the pro-Bolsonaro WhatsApp misinformation ecosystem. Understanding HIM as a product of everyday work arrangements “pushes us to think of how we can expand our notion of disinformation interventions from content regulation and platform banning of bad actors and their harmful content to include process regulation of the ways political campaigns and consultancies are conducted” (Ong and Cabañes 2019, 5772).

In framing HIM as a culture of production, our goal is to understand the actors involved in the work arrangements that promote misinformation and to engage in a detailed study of challenging moral issues involving “complicity and collusion” in our media ecosystem (Silverstone 2007). By subverting people’s expectations about who exactly paid disinformers are, we critique the workings of the system at large and the different levels of responsibility at play. We hope this approach contributes to broader debates about the political value of representing perpetrators’ narratives, which in media scholar Fernando Canet’s (2019) view can deepen “understand[ing of] both the personal and situational dimensions that could lead a human being to commit such acts [and] help us to determine how to prevent future cases” (15).

Conclusion

In this chapter, we analyze the labor within the Human Infrastructure of Misinformation as heteromated labor through the case study of WhatsApp groups in Brazil and highlight ties with the Governing Knowledge Commons (GKC) framework. On WhatsApp, Brazilian President Jair Bolsonaro benefited from misinformation that was used to garner support and ensure that people felt that he was a popular candidate. While the key to messaging campaigns on social media is often to appeal to an algorithm that will in turn broadcast a message, on WhatsApp there is no algorithmic feed. As such, the pro-Bolsonaro campaign relied on the HIM – specifically, Influencers, the Bolso-Army, and Average Brazilians.

The work the HIM performed represented heteromated labor: the Influencers provided cognitive and creative labor through their content creation; the Bolso-Army provided communicative and organizing labor by acting as administrators of large group chats and performing repetitive microtasks like forwarding and promoting messages; and the Average Brazilians provided emotional and communicative labor by spreading misinformation and regulating their emotional responses while complying with norms established by the Influencers and the Bolso-Army.

Building on the work of Ong and Cabañes, the chapter also looks at how HIMs emerge from “organizational structures, labor relations, and entrepreneurial subjectivities.” The complex misinformation ecosystem – with Influencers at the top of the pyramid, the Bolso-Army in the middle, and Average Brazilians at the bottom – was formed by an alliance of actors with varying motivations, whether money, fame, ideology, or a belief that the information they were sharing was true. Developing a further understanding of the HIM and the heteromated labor it employs at both the personal and organizational levels will allow future researchers and policymakers to better understand, and address, misinformation. We hope that future research will continue to address these important topics.