1. Introduction

Trust in scientific expertise is important. At the same time, philosophers of science widely agree that this trust cannot be grounded in the idea that scientific expertise is autonomous and free from any social, political, or economic values and interests. The problem, then, is how to maintain or enhance trust while acknowledging that science is not value-free (Holman & Wilholt, 2022). Transparency is one proposed solution to this problem: Scientific experts need to be transparent about underlying values and interests in order to promote warranted trust in expertise.

Value transparency has been advocated by many philosophers of science (e.g., de Melo-Martín & Intemann, 2018; Douglas, 2008, 2009; Elliott, 2017; Elliott & Resnik, 2014; Resnik & Elliott, 2023). Yet others have pointed out dangers or difficulties of transparency when it comes to promoting trust (e.g., John, 2018; Schroeder, 2021). Recently, Intemann (2024) has argued that these arguments against transparency point to ways in which the norm of transparency needs to be qualified but fail to provide sufficient reason to abandon it completely.

However, as Elliott (2022) in particular has demonstrated, transparency is a complex concept and can vary along different dimensions. In this article, I will argue that there is an important ambiguity within the concept of transparency that is missing not only from Elliott’s taxonomy but also from the overall debate on transparency and trust in science.

Transparency requirements come with a varying extent of engagement. Transparency can be more or less demanding and interfering, ranging from merely disclosing information to providing information that is publicly accessible, or even allowing for public criticism and sanctions. Drawing on accounts from political theory, I will distinguish three senses of transparency: mere transparency, publicity, and accountability.

The extent of engagement matters for the debate on value transparency and trust. I will show that different arguments for transparency as a tool for improving trust in fact work with different senses of transparency. At the same time, mere transparency, publicity, and accountability may backfire in distinct ways. Talking about transparency without paying attention to this ambiguity muddles the philosophical discussion, because arguments for and against transparency are often directed only at a specific form of transparency.

Furthermore, I show that transparency is not the minimally demanding and interfering tool it appears to be. This is especially important when transparency is used as a regulatory tool, for instance, in guidelines for providing scientific advice or for writing policy-relevant papers. Depending on how transparency requirements are supposed to facilitate trust in scientific expertise, they carry different degrees of regulatory force.

This article proceeds as follows. I will start by presenting Elliott’s taxonomy of transparency (Section 2) and then introduce the extent of engagement as an additional dimension of transparency (Section 3). I will show how this ambiguity is hidden in arguments for transparency and trust (Section 4), before working out the varying ways in which transparency may backfire (Section 5). Finally, I will deal with transparency’s regulatory force (Section 6).

2. The Complexity of Transparency

This article rests on the idea that transparency is an ambiguous concept, as Elliott (2022, 347) has pointed out. In his taxonomy of transparency, Elliott identifies eight major dimensions along which different forms of transparency may vary. The first is the purpose dimension, which refers to the reason for which transparency is pursued or required, for example, to promote trustworthiness. The second is the audience dimension, which answers the question of who receives the information, for instance, policymakers, journalists, or the general public. The third is the content dimension, which addresses what is made transparent, such as data and materials, or value judgments and their implications. The following four dimensions address how information should be provided: The fourth dimension, timeframes, specifies at which point something should be made transparent. The fifth dimension concerns the actors who disclose information, for example, scientists or government agencies. As a sixth dimension, the mechanisms for identifying and clarifying the information that needs to be made transparent may differ, such as written discussions among scientists or collaborations with community members. The seventh dimension answers the how-question in terms of venues for transmitting the information, from communication by scientists to reports from government agencies. The final dimension concerns the dangers of transparency, which include violating privacy but also generating inappropriate skepticism or creating a false sense of trust.

Importantly, Elliott (2022, 350) points out that different combinations of variations lead to different forms of transparency. There are interdependencies between the dimensions: For example, if the purpose of transparency is to promote high-quality policymaking, then this already determines policymakers as the audience. Likewise, not every danger is relevant for every form of transparency.

In this article, I will focus on transparency of values and interests as a tool to improve public trust in scientific experts. Footnote 1 Thus, within Elliott’s taxonomy, I am only interested in a specific form of transparency. The purpose of this form is to promote public trust in scientific experts. The audience is usually the public, but it can at times consist of politicians and policymakers, and the information may also be conveyed by science journalists and other mediators. The content consists of values and interests, although if these are implicit, what needs to be made transparent might be broader. The actors are different kinds of scientific experts.

I build on Elliott’s taxonomy, arguing that it is missing one important way in which transparency is an ambiguous concept. Transparency comes with what I call a varying extent of engagement Footnote 2: It can be a more or less demanding or intrusive requirement. This will be particularly relevant for the dangers of transparency, as they are often relative to the extent of engagement. I will start by explaining this dimension in the next section.

3. A Varying Extent of Engagement

The ambiguity with regard to the demandingness of transparency is explicitly emphasized in political theory (e.g., Baume, 2018; Elster, 2015; Lindstedt & Naurin, 2010; Naurin, 2006; O’Neill, 2006, 2009). Based on these approaches, I will distinguish between mere transparency, publicity, and accountability. I take the general three-part distinction from Naurin (2006) but adapt it in some respects. Before explaining each sense in more detail, two remarks on terminology are in order. First, these terms originate from debates in political philosophy. This is especially relevant for publicity: As will become clear below, this use of the term differs from its marketing-related use in everyday language. Second, even in political theory, the three terms are used somewhat inconsistently and at points interchangeably. I claim only that Naurin’s taxonomy is helpful for the purposes of this article.

I will start with mere transparency. Transparency in this sense involves merely disclosing and disseminating information: The information has to be made available, but it need not be communicated in a way that enables a specific audience to understand it. Or, as Naurin (2006, 91) puts it, mere transparency “literally means that it is possible to look into something, to see what is going on.” Footnote 3

Mere transparency appears in many areas of science, for example, when data or materials are posted to repositories (Nosek et al., 2015) or when conflicts of interest are disclosed. However, it is also central to scientific expertise that is used in the political realm. For example, after being criticized for its lack of transparency at the beginning of the COVID-19 pandemic, the British government later disclosed the membership and meeting minutes of the Scientific Advisory Group for Emergencies (Jarman, Rozenblum, Falkenbach, Rockwell, & Greer, 2022).

Publicity, on the other hand, goes beyond availability. I will follow Elster’s (2015, 3) weak notion of publicity: Publicity means that the audience has access to the information, that is, they can acquire it with little to no cost or difficulty. Note that there is also a stronger notion of publicity, which requires that the content of the information actually become known among the audience (e.g., Naurin, 2006). For scientific expertise, however, this notion is too strong.

Publicity requires communicating information, not just disclosing it. O’Neill’s (2009, 175) characterization of effective communication is especially helpful in this regard. She argues that, in contrast to mere information handling, effective communication is sensitive to a specific audience and must seek to meet some basic standards. First, effective communication requires accessibility, understood in a cognitive sense: The information has to be intelligible, that is, the audience needs to be able to follow what is being communicated, and it has to be relevant to the intended audience. Second, communicative action should be assessable, in the sense that the audience needs to be able to judge the status of the information, for example, whether certain claims are evidence-based or speculative. Transparency requirements, O’Neill (2009, 173) notes, often range over informational content, yet what actually matters is effective communication: “The activity by which information is made transparent places it in the public domain, but does not guarantee that anybody will find it, understand it or grasp its relevance.” Publicity, as I will use the term, captures this emphasis on communication as well as on cognitive accessibility and assessability. Footnote 4

This certainly makes publicity more demanding than mere transparency. Publicity is harder to achieve because the audience may not be invested in the issue, or because the audience lacks the capacity to access and process the information. For scientific expertise, the latter is especially relevant. Publicity requires not only disclosing information but also explaining it. Because experts have specialized knowledge and experience in a particular area, publicity is at the very least time-consuming.

Finally, let us look at accountability. Accountability in the traditional sense involves more than exposing information: In cases of misconduct, there has to be some kind of sanction. Such sanctioning mechanisms can be formal, for example, losing one’s position or being summoned before a court. Yet they can also be informal and consist of embarrassment or social stigma (Naurin, 2006, 92). For scientific expertise, sanctioning mechanisms, in particular formal ones, are rare and much weaker (see, e.g., Langvatn & Holst, 2024). In contrast to political representatives, experts are not elected to their positions. They may be ousted from an advisory committee, but even then, the accountability relation is more indirect: It is usually politicians who decide not to appoint a certain expert anymore, perhaps because they fear sanctions from the public. One of the rare cases of legal expert accountability was the prosecution of six scientists after the L’Aquila earthquake in 2009 (Douglas, 2021). For scientific expertise outside of formal advisory committees and the like, sanctioning is even more difficult.

However, accountability, too, is an ambiguous concept (Brown, 2009; Mansbridge, 2009; Philp, 2009). There are at least two different senses: holding someone accountable and giving an account. Both describe a relationship between an actor or speaker and a forum or audience. Yet only the first sense clearly involves monitoring and imposing sanctions. The second sense is sometimes called “deliberative accountability” (e.g., Mansbridge, 2009, 384; Brown, 2009, 216): The actor has to explain and justify her actions or claims, which allows others to criticize and object to them. As Mansbridge (2009, 384) notes, deliberative accountability rests on selecting actors who are likely to share the forum’s interests, instead of assuming that actors have entirely different interests and need to be sanctioned afterward. Importantly, when talking about accountability in the context of scientific expertise, philosophers usually have deliberative accountability in mind (e.g., Douglas, 2021, 74; Douglas, 2009, 153; Resnik & Elliott, 2023, 274).

Deliberative accountability has similarities with publicity; giving an account is related to making information publicly accessible. However, the extent of engagement for deliberative accountability differs in at least two respects. First, both forms of accountability become more alike, and thus more distinct from publicity, once informal sanctions are taken into account. It is difficult to draw a sharp line between criticism and sanctions like embarrassment or a loss of reputation. Second, in contrast to publicity, accountability refers to a relationship in which the audience can require something from the speaker (Philp, 2009). Footnote 5 Even in the case of deliberative accountability, the audience is not a passive receiver of information but can actively engage with it.

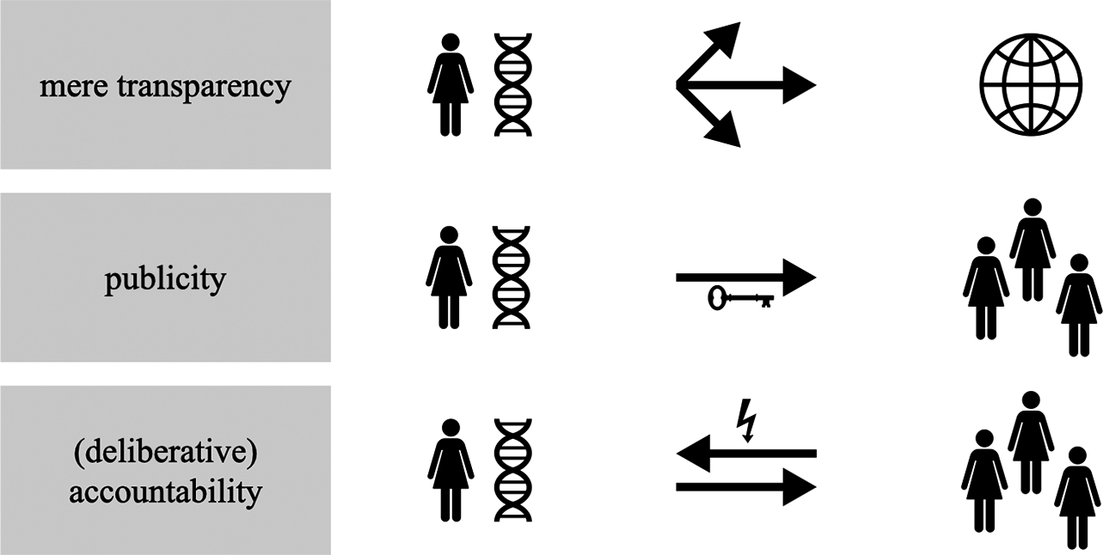

To sum up, the difference between mere transparency, publicity, and accountability can be framed in terms of communication (Figure 1): Mere transparency is only about making information available. Publicity involves making information accessible and communicating it to a specific audience. Accountability describes a two-way communication between audience and speaker: The audience reacts to the information provided by the speaker, either by objecting or by imposing sanctions, and the speaker considers this reaction. The difference is often gradual: Information can be more or less accessible to or taken in by the public, and likewise, there can be weaker and stronger public reactions, from mere criticism to imposing formal sanctions.

Figure 1. The extent of engagement in transparency requirements. On the left is the speaker. On the right is the audience, which can be very broad or more specific. The arrows show the direction of information transmission. The key represents accessibility and assessability, while the flash symbolizes criticism or sanctioning.

Before showing how this relates to trust, let me make some additional remarks. The first concerns dimensionality. I have grouped mere transparency, publicity, and accountability under one dimension, which I have called the extent of engagement. It should be noted, though, that publicity and accountability have a greater extent of engagement than mere transparency, but in slightly different ways. Publicity is more demanding than mere transparency because it involves making information accessible to a specific audience. Accountability requires the speaker to consider criticism or even sanctions from the audience. In many cases, accountability builds on publicity, in particular when understood in the deliberative sense: Providing good criticism presupposes that the information is accessible and assessable in the first place. Yet in principle, one could also have accountability without publicity. Thus, while mere transparency, publicity, and accountability vary with respect to the extent of engagement, this does not necessarily imply that all three lie on a single spectrum from low to high.

Second, I introduced the distinction in general terms, but it applies to values and interests in the context of scientific expertise. For example, imagine a scientist working in an expert committee. She might disclose possible conflicts of interest or any values that her decision to communicate a certain claim rests on. However, she could also make this information accessible and explain how exactly certain values and interests may have influenced her claims or which alternative values she could have considered. And finally, she can be accountable and consider how her audience views her use of values or the influence of interests on her claims.

Third, I am interested in the process of making something transparent. The extent of engagement is particularly relevant if transparency is a norm or requirement, as it highlights the demands transparency places on the expert. This is distinct from transparency as a description of the state some information is in. These are, of course, linked; for information to be transparent, it first has to be made transparent. Note that the focus on making information transparent is also mirrored in debates in philosophy of science. Value transparency is discussed as something experts can or should pursue, for example, as a tool to handle illegitimate value judgments (Douglas, 2009; Elliott, 2017) or as a norm for science communication more generally (Intemann, 2024; John, 2018). The distinction between mere transparency, publicity, and accountability is missing not only from Elliott’s taxonomy Footnote 6 but also from these broader debates.

Now, a norm is not quite the same as a requirement. However, even if one does not require transparency but only believes that scientific experts should generally aspire to transparency, the extent of engagement is relevant. Footnote 7 Depending on which sense of transparency the expert strives for, this becomes a more or less demanding task.

Transparency is also a frequent institutional requirement. For example, in the OECD’s (2015) “checklist for science advice,” transparency appears as a tool for dealing with potential conflicts of interest as well as for producing advice that is sound, unbiased, and legitimate (for similar guidelines, see, e.g., EASAC, 2014). Members of governmental expert bodies, like the Canadian COVID-19 vaccine task force, can even be formally required to disclose potential conflicts of interest (Government of Canada, 2024). Moreover, value transparency is advocated by journal editors, for example, for policy papers in conservation science (Game, Schwartz, & Knight, 2015). The extent of engagement is particularly important if transparency appears as an institutional requirement: Transparency requirements can be more or less demanding and interfering, and as such carry varying regulatory force. I will come back to this point in Section 6.

In the following section, I will show that the ambiguity with regard to the extent of engagement is hidden in arguments concerning trust and value transparency.

4. Transparency as a Tool for Improving Trust

This article investigates public epistemic trust, that is, the trust laypeople place in scientific experts making certain claims. Philosophers of science have pointed out different grounds for appropriate trust in scientists and scientific experts (for some categorization attempts, see, e.g., Furman, 2020; Metzen, 2024). I will not give a full account of trust in this article but instead highlight ways in which trust is connected to values and interests. The general idea is that approaches to trust must reflect the value-ladenness of science. Since scientific expertise is not free from non-epistemic values and interests, trust cannot be based on purely epistemic factors.

For instance, it has been argued that trust should be based on value alignment: Scientific experts are trustworthy if they take laypeople’s values and interests into account when making claims and recommendations, or at least if they do not act contrary to those values and interests (e.g., Goldenberg, 2023; Irzik & Kurtulmus, 2019; Wilholt, 2013). Footnote 8 Others stress that trust is not necessarily about value alignment but more generally about a legitimate use of values and interests. Ensuring such legitimacy might entail very different procedures, like restricting values to their proper role or establishing venues for criticism at the community level (e.g., Douglas, 2009; Holman & Wilholt, 2022; Oreskes, 2019). Furthermore, for expertise that is used in political contexts, some philosophers focus instead on the relationship between scientific expertise and politics. They argue that while trustworthy scientific advice cannot be value-free, experts can still refrain from prescribing values and interests to political decision-makers (e.g., Carrier, 2022; Gundersen, 2024). Such views on trust need not be conflicting accounts, as different factors may contribute to trust in scientific experts.

Calls for transparency usually follow from these general views on trust. I will argue that, upon closer examination, these are calls for different senses of transparency. Depending on what one takes to be the relevant connection between trust and values or interests, publicity or accountability, rather than mere transparency, may be needed to establish such trust. This also relates to the purpose dimension in Elliott’s (2022) taxonomy: If we think of the purpose of promoting trust in more fine-grained terms, it becomes plausible that this might involve different forms of transparency. Yet because the ambiguity with respect to the extent of engagement is not adequately considered in arguments for transparency, it may wrongly seem that they all work with the same understanding of transparency.

We can distinguish at least four different arguments for why value transparency improves trust. First, transparency enables members of the public to place trust in those experts who do not act contrary to the members’ own values and interests. Second, transparency can demonstrate that values and interests legitimately influence scientific expertise. Third, if experts conditionalize their advice by making values and interests transparent, they remain more neutral and politically independent. Finally, if values and interests lead to unwanted distortions or biases, transparency enables criticism and rectification. I will explain each argument in more detail below.

Note that the distinction is somewhat artificial. The different arguments are often combined and can overlap. For example, Intemann (2024) stresses the first and fourth arguments. Douglas (2009) combines at least the second, third, and fourth arguments, although she uses the term “explicitness” (see also Douglas, 2018, 3). Additionally, philosophers advocating for transparency are aware that transparency by itself is not a sufficient tool for promoting trust; it needs to be accompanied by tools that promote diversity or lay participation (e.g., Intemann, 2024, 12). Transparency can also be merely an auxiliary tool for enabling participation. For example, in order to set up an expert panel that is diverse in terms of values, one needs to know the potential members’ values first.

The aim of my article is not to argue against the value of transparency, nor do I want to claim that other reasons for the importance of transparency do not matter. Instead, looking at existing arguments in isolation helps to show that they involve varying extents of engagement.

4.a. Demonstrating aligned values and interests

The first argument for transparency is related to the emphasis on value alignment. If one believes that members of the public place trust in experts who employ values and interests shared by the public, or at least do not act contrary to these values and interests, then making the underlying values and interests explicit helps to assess the trustworthiness of experts and place one’s trust accordingly (e.g., Intemann, 2024, 6; Elliott, 2022, 344).

This argument rests mostly on mere transparency: For enabling trust that is based on an alignment of values and interests, it may be sufficient to disclose one’s values and interests. Take, for example, conflict of interest policies (Elliott, 2008; Elliott & Resnik, 2014): A standard approach adopted by scientific journals, universities, or funding agencies is to require researchers to disclose their financial conflicts when applying for grants or publishing a paper. This approach has also spilled over to other areas of expertise. Science Media Centers, for instance the one in the United Kingdom, require scientific experts to disclose conflicts of interest. They publish such information alongside the statements they distribute to the media (SMC, 2022).

The worry is that financial and other conflicts of interest compromise the judgments of scientists (Elliott, 2008, 2). How effective disclosure policies are in tackling this problem is a contested issue (e.g., de Melo-Martín & Intemann, 2009). Yet in a minimal version, disclosure at least allows others to recognize the possibility that a certain expert is pursuing her own financial or other sectional interests rather than the public interest or the interest of the respective audience. This helps laypeople in placing their trust: They could trust experts with no conflict of interest but be more wary otherwise, especially if the interests are strong or if the disclosed interests differ greatly from their own.

Disclosing values can, for example, be relevant for the trust policymakers place in scientific experts. Think of a city manager who has to help her city deal with the flooding risks associated with climate change. Disclosing value influences on climate modeling choices allows the city manager to make adaptation plans that align with the right values and provide the desired protection. If she considers the protection of citizens important, she could, for instance, base her decisions on projections that can deal with worst-case flooding scenarios and not only with plausible scenarios (Elliott, 2022, 344; Parker & Lusk, 2019). If the city manager makes adaptation plans in accordance with the citizens’ values, this would also enhance public trust in the expertise. Footnote 9

Moreover, making values explicit can directly affect public trust. For example, one reason why vaccine skeptics may distrust medical experts is that these experts make their safety assessments based on public health values, while the skeptics are concerned with their individual health (Irzik & Kurtulmus, 2019). One way of improving trust would be to provide recommendations more explicitly aligned with individual values, for instance, in conversations between doctor and patient.

4.b. Demonstrating legitimate influence of values and interests

The second argument concerning trust and transparency is related to the first. Here, however, the focus is on the proper procedures for dealing with values rather than on the values themselves. Transparency can demonstrate that values and interests influence scientific expertise only in a legitimate way. For example, it can show that values were used only in a proper role (Douglas, 2009, 96; Bennett, 2020, 254) or that illegitimate value influences were handled through a collective critical evaluation by the scientific community (Oreskes, 2019, 68; Solomon, 2021).

However, in most cases, mere transparency is not enough for this argument to work. Whether a certain value judgment was made appropriately is not immediately obvious to laypeople because they lack the specialized knowledge and experience of experts. Instead, publicity is required. Take Douglas’ distinction between direct and indirect roles of values, where only the latter (that is, values as guidance in what counts as sufficient warrant) is appropriate for expert reasoning. Demonstrating that values were used only in such an indirect role requires “the honest assessments of evidence and uncertainty, of what counts as sufficient evidence and why” (Douglas, 2008, 13). Experts need to explain what evidence there is, where the evidentiary bar should be set, in what way values enter these considerations, and what possible evidence would convince them of a different claim.

Douglas (2008, 12) uses the example of uncertainties in climate projections: In the United States, the Bush administration argued in the 2000s that uncertainties were too great to warrant climate action. However, the scientific experts who set a lower evidentiary bar and recommended mandatory climate action were, in fact, making legitimate value judgments, as they used values only in their indirect role. Merely disclosing the value judgments is not sufficient for maintaining trust in such cases; experts need to explain why they made the judgments in a certain way and acknowledge the implications this may have.

Kitcher’s (2011, 151) notion of “ideal transparency” can be interpreted similarly. For Kitcher, ideal transparency entails that the methodological standards and procedures used in accepting or rejecting scientific claims are decided based on publicly shared values. In addition, the public needs to understand how values enter the certification process, in particular, that they were only used for judging whether the evidence is good enough (Kitcher, 2011, 163–164). This, again, requires publicity.

4.c. Demonstrating political neutrality

The third argument is specific to expertise that is used in political contexts, like scientific policy advice. Many philosophers have argued that scientific experts should conditionalize their advice. They should act as “honest brokers” (Pielke, 2007) and be transparent about the way values and interests may have influenced their claims. If possible, this includes discussing different policy pathways with different underlying value assumptions. Transparency allows experts to remain neutral and independent from politics, which enables public trust in scientific expertise (Carrier, 2022, 17; Douglas, 2009, 153; Elliott & Resnik, 2014, 649; Gundersen, 2024, 139).

In some cases, this argument works with mere transparency. Take the flooding risks example again and assume that the experts provide different projections based on different modeling choices, for instance, one that includes worst-case scenarios but might overestimate certain factors and thus lead to expensive yet potentially unnecessary measures, and one that only includes more plausible scenarios but supports cheaper adaptation plans. By disclosing underlying values, the experts demonstrate their neutrality to the public because this shows that they do not prescribe or choose any values themselves. Ultimately, it is the city manager who has to make the value judgment and opt for a certain policy.

However, in other cases, policymakers and politicians might try to manipulate scientific advice. They could, for example, downplay a certain policy option and claim that they act based on scientific constraints, or they could point to the overall scientific uncertainty and argue against implementing any policy, as in the Bush administration example mentioned above (Douglas, 2008). In such cases, scientific experts who want to remain neutral need to explain their advice, as well as the underlying values and interests, to the public. When facing politicization, publicity may be required to enable public trust in expertise.

Furthermore, mere transparency might be insufficient if the underlying evidence is already shaped by commercial or political values and interests. For example, Fernàndez Pinto (2020) points out that commercial interests can lead to inappropriate consensus: If pharmaceutical companies predominantly publish studies with positive results, this can make a drug appear more effective than it actually is. Now, imagine an expert group that wants to provide conditionalized advice concerning this drug to policymakers. Demonstrating neutrality seems to require more in this case than just disclosing the existence of commercial interests: the experts would need to explain the mechanism through which pharmaceutical companies influenced the available evidence, or why other policy pathways are valid even though they depart from the existing consensus.

4.d. Enabling criticism and rectification

According to the fourth argument, transparency is a tool to enable criticism and thus rectification, which improves the trustworthiness of expertise. This argument can be geared toward situations in which scientists are aware of possible value influences but it is not clear whether the value influence is legitimate. Making value judgments explicit allows public scrutiny and, if the experts are open to such criticism and adapt their claims and recommendations accordingly, produces more trustworthy expertise (e.g., Douglas, 2009, 172; Intemann, 2024, 5).

In addition, criticism is important for uncovering implicit values and interests. This argument is especially prominent for criticism within the scientific community: If there are venues for criticism, members of the community can point out problematic background assumptions or other illegitimate influences of values and interests (e.g., de Melo-Martín & Intemann, 2018, 122). However, others show that a scientific community should be open to external criticism as well, for example, from social movements (Bueter, 2017) or laypeople with local knowledge (Wylie, 2015). Note that the content of transparency has to be broader here: For uncovering value influences, parts of the data, code, or materials have to be open to scrutiny.

In most cases, mere transparency is not enough to enable public criticism. The public needs to be able to understand the influence of values and interests. For uncovering implicit values, the audience also needs to have some knowledge about the available evidence and methods. This is explicitly acknowledged in the literature: For example, Bueter (2017) points to the role the women’s health movement had in making problematic background assumptions in medical research and care explicit. From the beginning, however, researchers and medical practitioners played an important part in the women’s health movement, which later resulted in the establishment of the field of gender medicine. In other cases, the focus is on participatory research practices where laypeople work together with scientific experts (Wylie, 2015), or on deliberative processes where “public voices need both to be heard and to be tutored” (Kitcher, 2011, 220).

However, the extent of engagement involved in this argument goes beyond publicity. It requires deliberative accountability: The experts need to explain their interests and values to the public and respond to public criticism. Sanctions play only a minor role. Criticism can, of course, affect the expert’s reputation and thus be a sanctioning mechanism, but this is not required for this argument to work. Experts can also be open to criticism without fearing a loss of reputation. Deliberative accountability is demanding because experts need to actively engage with the audience and react to their objections.

Some philosophers are explicit in calling this accountability (e.g., Douglas, 2009, 172; Douglas, 2021; Resnik & Elliott, 2023, 274), while others stick to the umbrella term of transparency. Intemann (2024, 5), for example, argues that “making value judgments transparent demonstrates an openness to having those assumptions evaluated and potentially corrected or revised in future research.” Yet, being open to criticism is essentially what I call deliberative accountability here.

Note that there is a related argument that is prominent in political theory: Transparency may force authorities to act in the public interest instead of their own interests if they know that their decisions are subject to public scrutiny (Kogelmann, 2021). Here, it is not the reaction to criticism that enhances trustworthiness but the anticipation of sanctions, such as being voted out of office in the next election. The argument rests on accountability, yet sanctions play a more explicit role here: They drive the improvement of trustworthiness.

To the best of my knowledge, this argument has not been explicitly defended in philosophy of science (see footnote 10). In addition, scientific experts of course differ from political representatives in important respects. However, it is important for experts to act in the public interest and produce claims and recommendations accordingly. It does not seem entirely implausible that the argument has some relevance for scientific expertise: For instance, knowing that the advice an expert gives publicly will be scrutinized by others (such as other experts, journalists, or NGOs) might make the expert more careful in weighing the evidence and making appropriate value judgments.

5. How Transparency Can Backfire

We have seen that arguments for why transparency enhances trust work with different conceptions of transparency. If one wants to promote public trust, scientific experts thus ought to be transparent in different ways. However, transparency requirements can backfire: They can reduce public trust in scientific expertise instead of promoting it. Philosophers have pointed out different dangers of transparency (e.g., Elliott, 2008, 2022; Intemann, 2024; John, 2018; Nguyen, 2022; Schroeder, 2021). I will show that possible dangers are, in fact, relative to the extent of engagement of transparency requirements. Mere transparency, publicity, and accountability each come with different costs.

It is important to distinguish two ways in which trust can be reduced. Transparency requirements can affect the credibility of expertise, and they can affect the trustworthiness of expertise. As I use these terms here, trustworthiness is a property of the speaker, whereas credibility reflects how others perceive the speaker and her claims (Whyte & Crease, 2010). Public trust can fail because scientific experts lack actual trustworthiness, but it can also fail if trustworthy experts are not perceived as such.

Note that transparency may raise problems that I will not deal with in this article. For example, transparency requirements can clash with other rights like the protection of personal data; these are general problems that are not directly related to trust or the extent of engagement.

Finally, whether or not transparency requirements actually backfire is, of course, an empirical question (for some attempts to provide empirical answers, see, e.g., Elliott, McCright, Allen, & Dietz, 2017; Hicks & Lobato, 2022; Cologna, Baumberger, Knutti, Oreskes, & Berthold, 2022). My point is not so much to demonstrate that these dangers are inevitable. Rather, I want to show that there are considerations that make them plausible, but that these considerations vary depending on the extent of engagement of transparency requirements. I also do not claim that the following list of dangers is exhaustive.

5.a. Mere transparency

Because mere transparency requires information only to be disclosed and disseminated but not actually made accessible to a specific audience, it can reduce the credibility of expertise. Disclosing values and interests without providing sufficient explanations can make experts appear compromised even when they are, in fact, trustworthy. As Schroeder (2021, 551) notes: “If the public can’t trace the impact of those values, transparency doesn’t amount to much more than a warning—offering, in many cases, a reason to distrust, rather than to trust.” A conflict of interest disclosure, for example, can alert laypeople that a result might be biased even when it is not, because they do not understand who a certain funder is or how the disclosed interests may affect the expertise (Intemann, 2024, 7; Schroeder, 2021, 550).

Relatedly, disclosing values and interests may be problematic if the audience holds a “false folk philosophy of science” (John, 2018, 81), that is, if nonexperts have false beliefs about the epistemic and social practices underlying scientific claims. In such nonideal environments, transparency can create the impression that scientists are not acting as they should. This is especially the case if laypeople think that science should be value-free. Disclosing that values did influence scientific claims then easily undermines trust, even though the influence was actually legitimate. This can even be used as a strategy to discount expertise: For example, climate skeptics often position themselves as objective while portraying mainstream scientists, such as experts working for the Intergovernmental Panel on Climate Change, as self-interested or biased (Kovaka, 2021, 2366).

Besides negatively impacting the credibility of scientific expertise, mere transparency may also reduce its trustworthiness. This has mostly been pointed out with regard to conflict of interest disclosures. As Elliott (2008, 9) notes, “disclosures may also cause the sources of information to be more biased than they would otherwise be.” Scholars from psychology and economics have discussed the phenomenon of “moral licensing” (Loewenstein, Sah, & Cain, 2012, 669): Disclosing their interests can make advisors feel more comfortable providing biased information because they know that the audience has been warned. Likewise, mere transparency may prompt “strategic exaggeration,” where advisors provide more biased information to counteract anticipated discounting. Both effects are plausible if the audience cannot assess how the disclosed values or interests actually influence the expertise.

5.b. Publicity

Publicity requires experts to meet standards of accessibility and assessability: to explain the use and influence of values and interests in a way that allows laypeople to grasp such information. This avoids the credibility problems I mentioned in the previous section.

However, there are some important practical limitations. In many cases of scientific expertise, value choices are complex. Clearly explaining the importance of each individual choice and the possibility of alternative choices is not an easy task, and scaling this up to all value choices is even harder. What is more, even if experts were able to communicate very well, avoiding the credibility problem also requires a great deal of attention on the part of the public (Schroeder, 2021, 552). Thus, because scientific experts have specialized knowledge, publicity may fail or may not be sufficient, in which case it may still backfire and reduce the credibility of expertise. Similarly, it can be hard and time-consuming to overcome a false folk philosophy of science, which requires communicating not only how values and interests have influenced scientific claims but also what the influence ought to look like and why (John, 2018, 82).

Furthermore, publicity introduces new dangers if we look at the trustworthiness of scientific expertise. This has especially been emphasized by Nguyen (2022), who raises different objections against transparency. These objections are specific to publicity because they follow from increased demands on communicating expertise (see footnote 11). As Nguyen (2022, 9–10) writes: “In order for the assessment procedure to be made available to the public—in order for it to be auditable by outsiders to the discipline—it must be put in terms that are accessible to the public. However, insofar as the most appropriate terms of assessment require expertise to comprehend, then the demand for public accessibility can interfere with the application of expertise.”

First, publicity can change the experts’ recorded justification. If experts’ actual reasons are inaccessible to nonexperts, they might invent other reasons for public presentation, often making these reports uninformative or evasive. Second, publicity may be more epistemically intrusive and pressure experts to only act in ways that allow for public justification: they limit their actions to those for which they can find public justification, or seek or prefer public reasons in their own considerations (Nguyen, 2022, 2–3).

Nguyen’s argument is not about transparency of values or interests, but it can be adapted. Making value judgments and influences accessible and assessable to a lay audience is a difficult and time-consuming task. In some cases, it may be impossible. Requiring experts to do so despite these difficulties may pressure them to adjust their explanations.

5.c. Accountability

In contrast to publicity, accountability involves responding to public criticism and perhaps even sanctioning mechanisms. This introduces additional possible dangers for trust in expertise. While public scrutiny is often important, it can also negatively affect an expert’s claims and recommendations, as well as the public perception of the expertise. This is especially relevant in cases where the risk of politicization is high or where expertise involves the inclusion of vulnerable groups.

A lack of public accessibility, or practical problems in making expertise accessible, can create problems for accountability. Not only is it difficult for laypeople to criticize experts or hold them to account (Langvatn & Holst, 2024, 105), but it is also difficult for them to judge whether criticism by others is appropriate. This is most salient for accountability for the content of expertise (which is usually why scholars argue against this form of accountability, e.g., Douglas, 2021), yet if value choices are difficult to identify, trace, and explain in lay terms, these problems are not eliminated by requiring accountability only for values and interests.

Inappropriate criticism can then contribute to a loss of credibility. Take the “Climategate” affair: Based on leaked emails from the University of East Anglia, climate skeptics accused scientists of fraud and misconduct, which, through broad media uptake, also influenced public perception of the trustworthiness of science. Of course, greater public accountability may in fact be a solution to the problem of Climategate (e.g., Beck, 2012; see footnote 12). Nevertheless, this case shows the danger of inappropriate criticism: It is difficult for laypeople to assess criticism directed at scientific expertise. As long as circumstances are nonideal, that is, if it is difficult to make expert claims and underlying values and interests accessible to the public, or if the public is easily influenced by powerful interest groups, public scrutiny can be weaponized.

Public scrutiny can also negatively affect the expertise itself, thus reducing its trustworthiness. This has been explicitly pointed out for deliberative and participatory settings in which scientific experts work closely together with local stakeholders, for example, policy dialogues in public health contexts. Mitchell, Reinap, Moat, and Kuchenmüller (2023, 8) argue that participants, especially nonscientists, may be afraid of saying something wrong: Opening up tentative deliberations to public scrutiny “risks changing their nature—if participants are concerned about saying the ‘wrong’ thing, revealing their ignorance, being held to account for something they casually suggest, or being criticized or humiliated for their opinions, they will be much more guarded about what they say.” Transparency and public scrutiny can backfire due to the vulnerability of the setting and participants.

The expertise itself may also be changed by criticism that is voiced or influenced by powerful interest groups. Biddle, Kidd, and Leuschner (2017) have argued that criticism can be “epistemically detrimental”: It can produce intimidation by creating an atmosphere in which scientists refrain from addressing certain topics or from arguing as forcefully as they believe is appropriate. Such criticism can even be epistemically corrupting: The reasonable anticipation of intimidation or threat can foster a disposition to preemptively understate epistemic claims.

Note that these latter discussions of epistemic intimidation do not take place in relation to transparency or accountability. However, they indicate that requiring experts to respond to public criticism can be dangerous if that criticism is inappropriate or turns into harassment. Again, examples of such effects often come from climate science (Biddle, Kidd, & Leuschner, 2017). Here, problematic dissenting views often spilled over into mainstream media and politics, where they led to various attempts to intimidate scientists, from open letters to congressional hearings. Similar intimidation attempts have also been pointed out in the context of COVID-19 (e.g., Nogrady, 2021).

6. Transparency’s Regulatory Force

Value transparency is neither an easy solution nor an ineffective or dangerous mechanism for promoting trust. It is a tool that comes not only with benefits but also with costs, which might be inevitable or outweighed by the benefits. These trade-offs vary along the intended extent of engagement. Different senses of transparency are implicit in arguments for transparency, and these senses come with different benefits and costs.

Importantly, transparency can be a regulatory tool: As I pointed out in Section 3, transparency is not only discussed as a rather abstract norm for science communication; it also appears as a more specific, institutional requirement for scientific advisory bodies and for the provision of policy-relevant expertise. Because it is a demand placed on scientific experts, transparency restricts the autonomy of science to a certain extent and introduces some form of public oversight (e.g., Resnik, 2008).

The complexities of transparency matter in the context of regulation: It is important to consider which sense of transparency needs to be at work and which dangers this sense might involve. Existing approaches like Elliott’s (2022) taxonomy are clearly helpful in this regard. However, the extent of engagement is crucial, too, not only because it explicates an additional ambiguity but also because it directly addresses transparency’s regulatory force.

Transparency can seem to be beneficial for several reasons, as well as less demanding and interfering than other regulatory tools. This makes it attractive for scientific expertise, whose autonomy is clearly valued. However, considering the extent of engagement reveals that transparency can be a more demanding regulatory tool than it appears to be. Depending on the benefits one wants to gain from implementing transparency requirements, publicity or accountability might be necessary, and these place higher demands on scientific experts and restrict their autonomy to a greater extent.

This parallels debates outside of science, where transparency is discussed as a mechanism for promoting sound markets or good government. For instance, Etzioni (2010) argues that transparency may appear as an alternative to regulating the actions of companies or politicians, yet, in fact, transparency is a form of regulation.

The philosophical discussion of conflicts of interest can serve as a case in point here: While disclosure policies are very popular, they have been criticized for various reasons, as I noted in Section 5.a. This suggests that either transparency requirements need to involve a different extent of engagement, and thus reduce autonomy (for example, by establishing additional avenues for critical evaluation by peer researchers and regulators; de Melo-Martín & Intemann, 2009, 1641; Elliott, 2008, 21), or one needs to resort to other regulatory instruments.

Again, this speaks neither for nor against transparency requirements. In many cases of scientific expertise, we want some public oversight, for example, because the expertise might be distorted, or because we fear biased political uptake. However, it underlines the importance of being aware of the extent of engagement. Transparency is a regulatory tool, as are other tools like diversity or participatory measures, and it is not necessarily preferable on the grounds of a supposedly lower regulatory force.

7. Conclusion

I have argued that transparency is an ambiguous requirement, even beyond the complexities that Elliott (2022) has pointed out. I distinguished between three senses of transparency: mere transparency, publicity, and accountability. Mere transparency means merely disclosing information, publicity involves making information publicly accessible and communicating it to a specific audience, and accountability describes a two-way communication that entails responding to criticism or facing sanctions. Arguments for transparency of values and interests as a tool for facilitating trust in science work with different senses of transparency. However, these senses also come with different costs, as requiring mere transparency, publicity, or accountability can backfire in different ways.

Acknowledging the ambiguity of transparency benefits the debate in philosophy of science. Whether or not transparency promotes trust depends on the respective argument and the form of transparency that is involved. Counterarguments like those from John (2018), Schroeder (2021), or Nguyen (2022) are directed at specific forms of transparency and thus only at specific arguments for transparency. Taking the extent of engagement into account allows for a more nuanced view of the relationship between transparency and trust. Furthermore, it is important to consider the extent of engagement if transparency is an institutional requirement. Requiring transparency can amount to different things and involve different trade-offs. Merely talking of transparency in a general sense hides the fact that it can be a more demanding and interfering regulatory tool than it appears to be.

Acknowledgments

The author would like to thank Torsten Wilholt for discussing various earlier versions of this article. The author also received detailed and helpful comments from Kevin Elliott, Robert Frühstückl, Hannah Hilligardt, Anton Killin, and Faik Kurtulmus. Furthermore, this article benefited from presenting it at the PhilLiSci meeting at Bielefeld University, the SOCRATES seminar at Leibniz University Hannover, and the GRK 2073 colloquium. Finally, the author is grateful to the two anonymous reviewers for their comments and suggestions.

Funding statement

The research leading to these results received funding from the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation)—Project 254954344/GRK2073.

Competing interests

The author declares none.

Hanna Metzen is a postdoctoral researcher at Bielefeld University. Her research focusses on public trust in scientific expertise. Currently, she also works on the notion of scientific authority as well as the role of science journalists in trust relationships.