Highlights

What is already known

-

• Statistical models that are estimated based on small data sets, are very likely to suffer from overfitting.

-

• If multiple data sets cannot be combined into one data set, the statistical analysis could be performed in a federated manner.

What is new

-

• This article describes a method for performing Bayesian federated inference (BFI) for homogeneous and heterogeneous multicenter data. In each center, the data is analyzed only once. The local inference results are centrally combined to obtain the parameter estimates without any need for repeated “cycling” across centers.

-

• An R software package implementing the proposed methodology is available and a manual is described in the article.

Potential impact for RSM readers outside the authors’ field

-

• The proposed methodology can be applied if data sets cannot be combined, also if the data are not of a medical nature.

-

• The BFI estimates are more accurate than the estimates obtained from a single center analysis.

1 Introduction

Prediction models aim to predict the outcome of interest for individuals (or subjects), based on their values of the covariates in the model. To build a prediction model by selecting covariates and estimating the corresponding regression parameters, the sample size should be sufficiently large. If too many variables (possible covariates) relative to the number of events or observations are included, the model may become overly flexible and erroneously ‘explain’ noise or random variations in the data, rather than estimating meaningful relationships between the covariates and the outcome. This is called overfitting and may lead to unreliable predictions of the outcome for new individuals.Reference Harrell, Lee and Mark 1 To overcome overfitting a minimum of 10 observations or events per variable (EPV) is often advised.Reference Harrell 2 , Reference Harrell, Lee, Califf, Pryor and Rosati 3 Based on this criterion, data sets are often too small to take all available variables in consideration. Merging different data sets from different (medical) centers could in principle alleviate the problem, but is often difficult for regulatory and logistic reasons. An alternative route would be to analyse the local data in the centers and combine the obtained inference results intelligently. With this approach the (individual) data do not need to be shared across centers. In this article, we focus on methodology to combine the local inference results for estimating parametric regression models for a general population of interest. The data sets in the centers are considered as samples from this population.

In literature, several methods have been described. Probably the best-known strategy to obtain effect estimates from different inference results, is meta-analysis.Reference Borenstein, Hedges and Higgins 4 In a meta-analysis, relevant, already published results are combined. Here we consider the situation where the local analyses have yet to be performed. This means that the collaborating centers discuss in advance which local analyses will be performed and what inference results should be shared to build the final combined model. It also means that more information can be shared than is usually available in publications, like the estimated covariance matrix of the estimators of the model parameters.

Federated Learning (FL) is a machine learning approach that was developed several years ago, mainly for analyzing data from different mobile devices.Reference McMahan, Moore, Ramage, Hampson and Arcas 5 It aims to construct from the inference results obtained in the separate centers, what would have been found if the analysis was performed on the combined data set. With this approach, the local data stay at their owners’ centers, only parameter estimates are cycled around and updated based on the local data until a convergence criterion is met. In recent years the FL approach has improved quite a bit (e.g., on optimization in the local centers and the aggregation of the local results, dealing with heterogeneity and client-drift,Reference Li, Sahu, Zaheer, Sanjabi, Talwalkar and Smith 6 , Reference Zhu, Hong and Zhou 7 , Reference Karimireddy 8 , Reference Shi, Zhang, Xiao and Niu 9 methodology for causality related research questionsReference Vo, Lee, Hoang and Leong 10 , Reference Han, Hou, Cho, Duan and Cai 11 ). Also FL in a Bayesian setting for deep learning models has been proposed.Reference Maddox, Izmailov, Garipov, Vetrov and Wilson 12 , Reference Al-Shedivat, Gillenwater, Xing and Rostamizadeh 13 , Reference Cao, Chen, Fan, Gama, Ong and Kumar 14 , Reference Chen and Chao 15 The posterior distributions are estimated in the local centers and communicated to the central server for aggregation. However, practically this Bayesian procedure is challenging, especially for deep learning models due to the high dimensionality of the parameters. An overview of the most important recent developments and a list of references is given in Liu et al.Reference Liu, Jiang and Zheng 16 FL performs excellently in e.g., image analysisReference Rieke, Hancox and Li 17 , Reference Sheller, Edwards and Reina 18 , Reference Gafni, Shlezinger, Cohen, Eldar and Poor 19 or for data from mobile devices, but has clearly some drawbacks in other applications. For instance, apart from obvious ones such as data security and convergence problems, if one aims to estimate statistical models based on inference results from different medical centers, one needs to handle challenges like heterogeneity of the populations across centers, clustering of centers, center-specific covariates (like location), missing covariates in the data, and the fact that data may be stored in different ways (covariates are named differently or are even defined differently). Furthermore, most FL strategies require many iterative inference cycles across the local centers. In case the centers are hospitals (the situation we are considering here), a cycling mechanism is complex and may lead to considerable extra work; a one-shot approach is preferred.

Also in the field of distributed statistical inference, multiple strategies have been proposed to combine inference results from different computers (centers).Reference Gaoa, Liu, Wang, Wang, Yana and Zhang

20

To cope with massive data sets which can not be analyzed on a single computer, a data set is divided into smaller data sets, which are analyzed separately and the results are combined afterwards. An interesting one-shot algorithm has been proposed by Jordan et al.Reference Jordan, Lee and Yang

21

They proposed a communication-efficient surrogate likelihood framework for distributed statistical inference for homogeneous data. Instead of maximizing the full likelihood for regular parametric models or the penalized likelihood in high-dimensional models, this surrogate likelihood is maximized. The surrogate likelihood expression was determined so that only a minimum amount of information is transferred from the local machines to the central server (of the order

![]() $O(d)$

bits where d is the dimension of the parameter space). Later, the method was generalized to be able to deal with certain forms of heterogeneity.Reference Duan, Ning and Chen

22

$O(d)$

bits where d is the dimension of the parameter space). Later, the method was generalized to be able to deal with certain forms of heterogeneity.Reference Duan, Ning and Chen

22

In this article we describe the BFI framework for parametric regression models. This methodology was developed especially for combining inference results from different centers to estimate statistical (regression) models without the need for repeated communication rounds with the local centers. In every center the data are analysed only once and the inference results (parameter and accuracy estimates) are sent to a central server, where the local inference results are combined. Explicit expressions for the combined (BFI) estimators in terms of the local inference results have been derived. Via these expressions the BFI estimates can be easily updated at a later moment if the data collection or the analysis in several centers are delayed, without contacting all other centers again (this would not be possible when using an iterative updating mechanism). The fact that only one communication round is sufficient is important in our (medical) setting, since assistance from the local medical and technical staff are needed every time local analyses are performed.

The BFI estimates are defined as the maximizers of a surrogate expression of the full log posterior density. This expression depends on the local estimates and is different from the one proposed by Jordan (2018).Reference Jordan, Lee and Yang

21

In the BFI framework more information (of the order

![]() $O(d^2)$

) is shared with the central server than would normally be acceptable in a FL or distributed statistical inference setting. This additional information improves the accuracy of the estimator. The BFI methodology was developed for estimating (low-dimensional) GLMs. High dimensional models (with large d), typically the models of interest in FL and distributed statistical inference, are not the focus of the BFI methodology; estimation accuracy is more important than communication efficiency.

$O(d^2)$

) is shared with the central server than would normally be acceptable in a FL or distributed statistical inference setting. This additional information improves the accuracy of the estimator. The BFI methodology was developed for estimating (low-dimensional) GLMs. High dimensional models (with large d), typically the models of interest in FL and distributed statistical inference, are not the focus of the BFI methodology; estimation accuracy is more important than communication efficiency.

The mathematical theory of the BFI methodology for parameteric models, like GLMs, was published by the authors in Jonker et al.Reference Jonker, Pazira and Coolen 23 In this article, we extend the theory further to allow for different kinds of heterogeneity between the centers. Among others, we consider the situation in which there is heterogeneity in the population characteristics, there is clustering, the distribution of the outcome variable is shifted, and the regression or nuisance parameters differ between the centers. The asymptotic distributions of the BFI estimators are derived and it is proven that the estimators are asymptotically efficient. Asymptotically, no information is lost if the data from the centers cannot be combined. These asymptotic distributions of the estimators are used for the construction of credible intervals. For finite samples (by means of simulation studies) and asymptotically, the BFI estimators are compared to the estimators that are obtained by averaging the local estimators (weighted for local sample size). In this article, we also focus on applications: a data example is given and the R code (from our R package BFIReference Pazira, Massa and Jonker 24 ) for analyzing the data with the BFI methodology is explained.

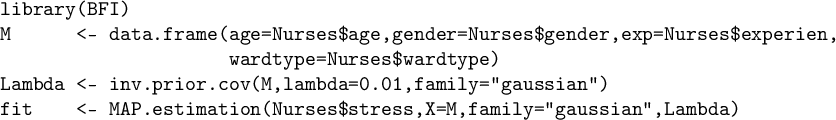

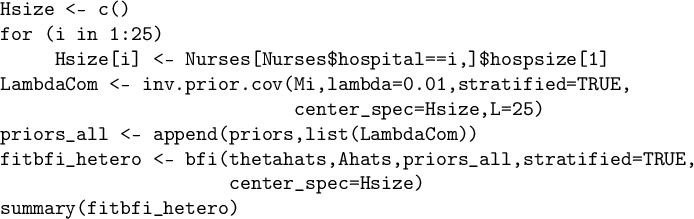

This article is organized as follows. In Section 2 the BFI framework for generalized linear models for homogeneous sub-populations in the local centers is explained. In Section 3 different types of heterogeneity across these sub-populations and data sets are described and, moreover, it is explained how the BFI methodology can be adjusted to takes these into account. To study the performance of the BFI method in different settings, the results of simulation studies are described in Section 4. In the same section also the analysis of a heterogeneous data set using the BFI methodology is described. A discussion is given in Section 5. The article ends with three appendices. In the first appendix we explain how to do the analysis with our R package, the second appendix contains the mathematical details of the derivation of the estimators and in the third appendix the asymptotic distributions of the BFI and the weighted average estimators are derived and compared.

2 The Bayesian Federated Inference (BFI) framework

Suppose that data of L medical centers are locally available, but these data sets cannot be merged to a single integrated data set for statistical analysis. The data for individual i from center

![]() $\ell $

is denoted as the pair

$\ell $

is denoted as the pair

![]() $(\mathbf {x}_{\ell i},y_{\ell i})$

with

$(\mathbf {x}_{\ell i},y_{\ell i})$

with

![]() $\mathbf {x}_{\ell i}$

a vector of covariates and

$\mathbf {x}_{\ell i}$

a vector of covariates and

![]() $y_{\ell i}$

the outcome of interest. Let

$y_{\ell i}$

the outcome of interest. Let

![]() $\mathbf {D}_\ell $

denote the data subset in center

$\mathbf {D}_\ell $

denote the data subset in center

![]() $\ell $

:

$\ell $

:

where

![]() $n_\ell $

denotes the number of individuals in subset

$n_\ell $

denotes the number of individuals in subset

![]() $\ell $

,

$\ell $

,

![]() $\ell =1,\ldots ,L$

, and let

$\ell =1,\ldots ,L$

, and let

![]() $\mathbf {D}$

be the fictive combined data set (the union of the subsets

$\mathbf {D}$

be the fictive combined data set (the union of the subsets

![]() $\mathbf {D}_1,\ldots ,\mathbf {D}_L$

).

$\mathbf {D}_1,\ldots ,\mathbf {D}_L$

).

The data pair

![]() $(\mathbf {x}_{\ell i},y_{\ell i})$

is the realisation of the stochastic pair

$(\mathbf {x}_{\ell i},y_{\ell i})$

is the realisation of the stochastic pair

![]() $(\mathbf {X}_{\ell i},Y_{\ell i})$

. Suppose that the variables

$(\mathbf {X}_{\ell i},Y_{\ell i})$

. Suppose that the variables

![]() $(\mathbf {X}_{\ell i},Y_{\ell i}), i=1,\ldots ,n_\ell , \ell =1,\ldots , L$

are independent and identically distributed, and that

$(\mathbf {X}_{\ell i},Y_{\ell i}), i=1,\ldots ,n_\ell , \ell =1,\ldots , L$

are independent and identically distributed, and that

![]() $\mathbf {X}_{\ell i}$

and

$\mathbf {X}_{\ell i}$

and

![]() $Y_{\ell i}$

are linked via a generalized linear model (GLM) with link function h:

$Y_{\ell i}$

are linked via a generalized linear model (GLM) with link function h:

where

![]() $\mathbf {\beta }$

is a vector of unknown regression parameters and

$\mathbf {\beta }$

is a vector of unknown regression parameters and

![]() $\mathbf {\eta }$

a vector of unknown nuisance parameters. If the first element in the covariate vector

$\mathbf {\eta }$

a vector of unknown nuisance parameters. If the first element in the covariate vector

![]() $\mathbf {X}_{\ell i}$

equals one for all individuals, the model includes an intercept.Footnote

i

$\mathbf {X}_{\ell i}$

equals one for all individuals, the model includes an intercept.Footnote

i

For

![]() $\mathbf {\theta }_1:=(\mathbf {\eta }, \mathbf {\beta })$

, the conditional density of

$\mathbf {\theta }_1:=(\mathbf {\eta }, \mathbf {\beta })$

, the conditional density of

![]() $Y_{\ell i}|(\mathbf {X}_{\ell i}=\mathbf {x},\mathbf {\theta }_1)$

is given by

$Y_{\ell i}|(\mathbf {X}_{\ell i}=\mathbf {x},\mathbf {\theta }_1)$

is given by

![]() $y|\mathbf {x},\mathbf {\theta }_1 \to p(y|\mathbf { x},\mathbf {\theta }_1)$

and for the vector of covariates

$y|\mathbf {x},\mathbf {\theta }_1 \to p(y|\mathbf { x},\mathbf {\theta }_1)$

and for the vector of covariates

![]() $\mathbf {X}_{\ell i}| \mathbf {\theta }_2$

this is

$\mathbf {X}_{\ell i}| \mathbf {\theta }_2$

this is

![]() $\mathbf {x}|\mathbf {\theta }_2 \to p(\mathbf {x}|\mathbf {\theta }_2)$

, for

$\mathbf {x}|\mathbf {\theta }_2 \to p(\mathbf {x}|\mathbf {\theta }_2)$

, for

![]() $\mathbf {\theta }_2$

a parameter vector.Footnote

ii

Then, for

$\mathbf {\theta }_2$

a parameter vector.Footnote

ii

Then, for

![]() $\mathbf {\theta }:=(\mathbf {\theta }_1,\mathbf {\theta }_2)$

it follows that the density of

$\mathbf {\theta }:=(\mathbf {\theta }_1,\mathbf {\theta }_2)$

it follows that the density of

![]() $y,\mathbf {x}|\mathbf {\theta }$

can be written as

$y,\mathbf {x}|\mathbf {\theta }$

can be written as

![]() $y,\mathbf {x}|\mathbf {\theta } \to p(y,\mathbf {x}|\mathbf {\theta }) \;=\; p(y|\mathbf {x},\mathbf {\theta }_1) p(\mathbf {x}|\mathbf {\theta }_2)$

. We work in a Bayesian setting;

$y,\mathbf {x}|\mathbf {\theta } \to p(y,\mathbf {x}|\mathbf {\theta }) \;=\; p(y|\mathbf {x},\mathbf {\theta }_1) p(\mathbf {x}|\mathbf {\theta }_2)$

. We work in a Bayesian setting;

![]() $\mathbf {\theta }$

is stochastic as well. For mathematical simplicity, we assume statistical independence between

$\mathbf {\theta }$

is stochastic as well. For mathematical simplicity, we assume statistical independence between

![]() $\mathbf {\theta }_1$

and

$\mathbf {\theta }_1$

and

![]() $\mathbf {\theta }_2$

. Thus,

$\mathbf {\theta }_2$

. Thus,

![]() $p(\mathbf {\theta }_1,\mathbf {\theta }_2)=p(\mathbf {\theta }_1)p(\mathbf {\theta }_2)$

in the combined data set

$p(\mathbf {\theta }_1,\mathbf {\theta }_2)=p(\mathbf {\theta }_1)p(\mathbf {\theta }_2)$

in the combined data set

![]() $\mathbf {D}$

and

$\mathbf {D}$

and

![]() $p_\ell (\mathbf {\theta }_1,\mathbf {\theta }_2)=p_\ell (\mathbf {\theta }_1)p_\ell (\mathbf {\theta }_2)$

in center

$p_\ell (\mathbf {\theta }_1,\mathbf {\theta }_2)=p_\ell (\mathbf {\theta }_1)p_\ell (\mathbf {\theta }_2)$

in center

![]() $\ell $

, for all

$\ell $

, for all

![]() $\ell $

(the “

$\ell $

(the “

![]() $\ell $

” in the subscript refers to the center). We choose the prior parameter distributions for

$\ell $

” in the subscript refers to the center). We choose the prior parameter distributions for

![]() $\mathbf {\theta }_1$

and

$\mathbf {\theta }_1$

and

![]() $\mathbf {\theta }_2$

to be Gaussian with mean zero and inverse covariance matrices

$\mathbf {\theta }_2$

to be Gaussian with mean zero and inverse covariance matrices

![]() $\boldsymbol {\Lambda }_1$

and

$\boldsymbol {\Lambda }_1$

and

![]() $\boldsymbol {\Lambda }_2$

, respectively, in the combined data set, and

$\boldsymbol {\Lambda }_2$

, respectively, in the combined data set, and

![]() $\boldsymbol {\Lambda }_{1,\ell }$

and

$\boldsymbol {\Lambda }_{1,\ell }$

and

![]() $\boldsymbol {\Lambda }_{2,\ell }$

in center

$\boldsymbol {\Lambda }_{2,\ell }$

in center

![]() $\ell $

,

$\ell $

,

![]() $\ell =1,\ldots ,L$

. For parameters that are positive by definition, like the variance of the error term in the linear regression model, a mean zero Gaussian prior is assumed for a transformation (e.g., the logarithm) of the parameter.

$\ell =1,\ldots ,L$

. For parameters that are positive by definition, like the variance of the error term in the linear regression model, a mean zero Gaussian prior is assumed for a transformation (e.g., the logarithm) of the parameter.

The maximum a posteriori (MAP) estimate of

![]() $\mathbf {\theta } =(\mathbf {\theta }_1,\mathbf {\theta }_2)$

maximizes the a posteriori density of the data with respect to

$\mathbf {\theta } =(\mathbf {\theta }_1,\mathbf {\theta }_2)$

maximizes the a posteriori density of the data with respect to

![]() $\mathbf {\theta }$

, by definition. For the combined data set

$\mathbf {\theta }$

, by definition. For the combined data set

![]() $\mathbf {D}$

, this estimate is denoted as

$\mathbf {D}$

, this estimate is denoted as

![]() $\widehat {\mathbf {\theta }} = (\widehat {\mathbf {\theta }}_1,\widehat {\mathbf {\theta }}_2)$

and, for the local data set

$\widehat {\mathbf {\theta }} = (\widehat {\mathbf {\theta }}_1,\widehat {\mathbf {\theta }}_2)$

and, for the local data set

![]() $\mathbf {D}_\ell $

the notation

$\mathbf {D}_\ell $

the notation

![]() $\widehat {\mathbf {\theta }}_\ell =(\widehat {\mathbf {\theta }}_{1,\ell },\widehat {\mathbf {\theta }}_{2,\ell })$

is used. If the prior density is chosen to be non-informative (large prior variances), the MAP estimates will be close to the maximum likelihood estimates. The estimator

$\widehat {\mathbf {\theta }}_\ell =(\widehat {\mathbf {\theta }}_{1,\ell },\widehat {\mathbf {\theta }}_{2,\ell })$

is used. If the prior density is chosen to be non-informative (large prior variances), the MAP estimates will be close to the maximum likelihood estimates. The estimator

![]() $\widehat {\mathbf {\theta }}$

is fictive as the data set

$\widehat {\mathbf {\theta }}$

is fictive as the data set

![]() $\mathbf {D}$

can not be created. In the following we derive expressions for

$\mathbf {D}$

can not be created. In the following we derive expressions for

![]() $\widehat {\mathbf {\theta }}$

in terms of the MAP estimators based on the local data sets

$\widehat {\mathbf {\theta }}$

in terms of the MAP estimators based on the local data sets

![]() $\mathbf {D}_\ell $

. Once the estimates in the separate centers have been found, these expressions tell us how to combine them to obtain (an approximation of)

$\mathbf {D}_\ell $

. Once the estimates in the separate centers have been found, these expressions tell us how to combine them to obtain (an approximation of)

![]() $\widehat {\mathbf {\theta }}$

.

$\widehat {\mathbf {\theta }}$

.

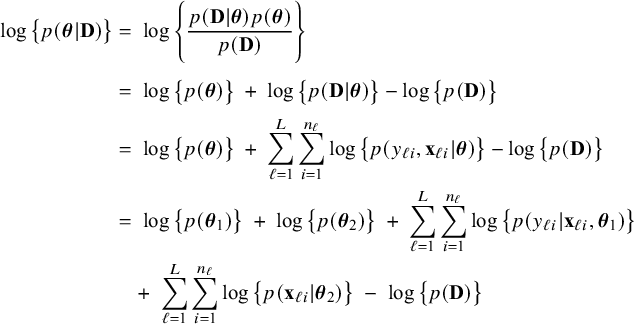

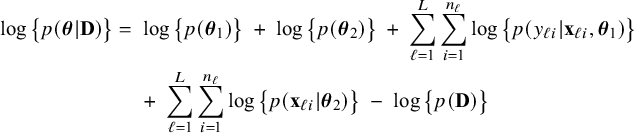

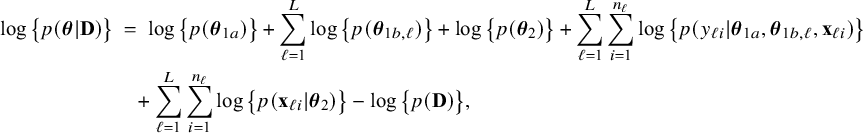

For the fictive combined data set

![]() $\mathbf {D}$

the log posterior density can be written as

$\mathbf {D}$

the log posterior density can be written as

$$ \begin{align} \log \big\{p(\boldsymbol{\theta}|\mathbf{D})\big\} &=\; \log \Bigg\{\frac{p(\mathbf{D}|\boldsymbol{\theta}) p(\boldsymbol{\theta})}{p(\mathbf{D})} \Bigg\} \nonumber \\[4pt] &=\; \log \big\{p(\boldsymbol{\theta})\big\} \;+\; \log \big\{p(\mathbf{D}|\boldsymbol{\theta})\big\} - \log \big\{p(\mathbf{D})\big\} \nonumber \\[2pt] &=\; \log \big\{p(\boldsymbol{\theta})\big\} \;+\; \sum_{\ell=1}^L \sum_{i=1}^{n_\ell}\log \big\{p(y_{\ell i}, \mathbf{x}_{\ell i}|\boldsymbol{\theta})\big\} - \log \big\{p(\mathbf{D})\big\} \nonumber\\ &=\; \log \big\{p(\boldsymbol{\theta}_1)\big\} \;+\; \log \big\{p(\boldsymbol{\theta}_2)\big\} \;+\; \sum_{\ell=1}^L \sum_{i=1}^{n_\ell}\log \big\{p(y_{\ell i}| \mathbf{x}_{\ell i},\boldsymbol{\theta}_1)\big\}\nonumber\\ &\quad+\; \sum_{\ell=1}^L \sum_{i=1}^{n_\ell}\log \big\{p(\mathbf{x}_{\ell i}|\boldsymbol{\theta}_2)\big\} \;-\; \log \big\{p(\mathbf{D})\big\} \end{align} $$

$$ \begin{align} \log \big\{p(\boldsymbol{\theta}|\mathbf{D})\big\} &=\; \log \Bigg\{\frac{p(\mathbf{D}|\boldsymbol{\theta}) p(\boldsymbol{\theta})}{p(\mathbf{D})} \Bigg\} \nonumber \\[4pt] &=\; \log \big\{p(\boldsymbol{\theta})\big\} \;+\; \log \big\{p(\mathbf{D}|\boldsymbol{\theta})\big\} - \log \big\{p(\mathbf{D})\big\} \nonumber \\[2pt] &=\; \log \big\{p(\boldsymbol{\theta})\big\} \;+\; \sum_{\ell=1}^L \sum_{i=1}^{n_\ell}\log \big\{p(y_{\ell i}, \mathbf{x}_{\ell i}|\boldsymbol{\theta})\big\} - \log \big\{p(\mathbf{D})\big\} \nonumber\\ &=\; \log \big\{p(\boldsymbol{\theta}_1)\big\} \;+\; \log \big\{p(\boldsymbol{\theta}_2)\big\} \;+\; \sum_{\ell=1}^L \sum_{i=1}^{n_\ell}\log \big\{p(y_{\ell i}| \mathbf{x}_{\ell i},\boldsymbol{\theta}_1)\big\}\nonumber\\ &\quad+\; \sum_{\ell=1}^L \sum_{i=1}^{n_\ell}\log \big\{p(\mathbf{x}_{\ell i}|\boldsymbol{\theta}_2)\big\} \;-\; \log \big\{p(\mathbf{D})\big\} \end{align} $$

by Bayes’ rule (first equality), independence between the observations (third equality), and, among others, independence between

![]() $\mathbf {\theta }_1$

and

$\mathbf {\theta }_1$

and

![]() $\mathbf {\theta }_2$

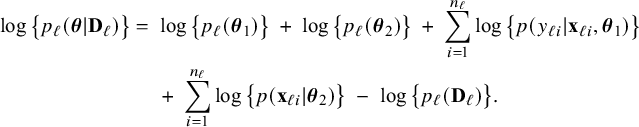

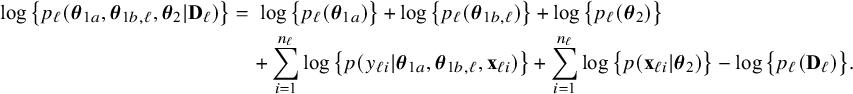

(fourth equality). Similarly, the logarithm of the posterior density in center

$\mathbf {\theta }_2$

(fourth equality). Similarly, the logarithm of the posterior density in center

![]() $\ell $

can be written as

$\ell $

can be written as

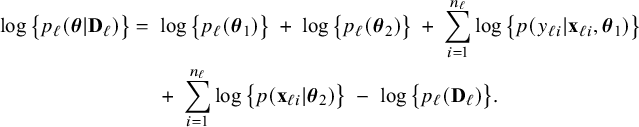

$$ \begin{align} \log \big\{p_\ell(\boldsymbol{\theta}|\mathbf{D}_\ell)\big\} &=\; \log \big\{p_\ell(\boldsymbol{\theta}_1)\big\} \;+\; \log \big\{p_\ell(\boldsymbol{\theta}_2)\big\} \;+\; \sum_{i=1}^{n_\ell}\log \big\{p(y_{\ell i}| \mathbf{x}_{\ell i},\boldsymbol{\theta}_1)\big\}\nonumber\\&\quad \;+\; \sum_{i=1}^{n_\ell}\log \big\{p(\mathbf{x}_{\ell i}|\boldsymbol{\theta}_2)\big\} \;-\; \log \big\{p_\ell(\mathbf{D}_\ell)\big\}. \end{align} $$

$$ \begin{align} \log \big\{p_\ell(\boldsymbol{\theta}|\mathbf{D}_\ell)\big\} &=\; \log \big\{p_\ell(\boldsymbol{\theta}_1)\big\} \;+\; \log \big\{p_\ell(\boldsymbol{\theta}_2)\big\} \;+\; \sum_{i=1}^{n_\ell}\log \big\{p(y_{\ell i}| \mathbf{x}_{\ell i},\boldsymbol{\theta}_1)\big\}\nonumber\\&\quad \;+\; \sum_{i=1}^{n_\ell}\log \big\{p(\mathbf{x}_{\ell i}|\boldsymbol{\theta}_2)\big\} \;-\; \log \big\{p_\ell(\mathbf{D}_\ell)\big\}. \end{align} $$

The log posterior densities

![]() $\log \{p(\mathbf {\theta }|\mathbf {D})\}$

and

$\log \{p(\mathbf {\theta }|\mathbf {D})\}$

and

![]() $\log \{p_\ell (\mathbf {\theta }|\mathbf {D}_\ell )\}$

are decomposed into terms that depend on either

$\log \{p_\ell (\mathbf {\theta }|\mathbf {D}_\ell )\}$

are decomposed into terms that depend on either

![]() $\mathbf {\theta }_1$

or on

$\mathbf {\theta }_1$

or on

![]() $\mathbf {\theta }_2$

, but never on both. As a consequence, maximization with respect to

$\mathbf {\theta }_2$

, but never on both. As a consequence, maximization with respect to

![]() $\mathbf {\theta }_1$

and

$\mathbf {\theta }_1$

and

![]() $\mathbf {\theta }_2$

to obtain their MAP estimators can be performed independently. By reordering the terms in expression (2), we find

$\mathbf {\theta }_2$

to obtain their MAP estimators can be performed independently. By reordering the terms in expression (2), we find

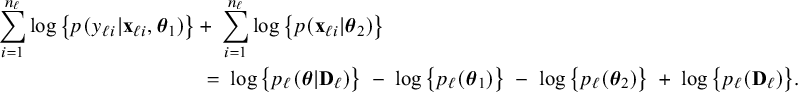

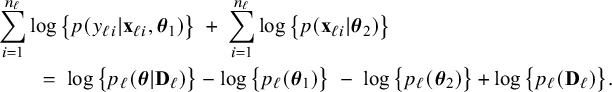

$$ \begin{align*} \sum_{i=1}^{n_\ell}\log \big\{p(y_{\ell i}| \mathbf{x}_{\ell i},\boldsymbol{\theta}_1)\big\} &+\; \sum_{i=1}^{n_\ell}\log \big\{p(\mathbf{x}_{\ell i}|\boldsymbol{\theta}_2)\big\} \nonumber\\ &\;=\; \log \big\{p_\ell(\boldsymbol{\theta}|\mathbf{D}_\ell)\big\} \;-\; \log \big\{p_\ell(\boldsymbol{\theta}_1)\big\} \;-\; \log \big\{p_\ell(\boldsymbol{\theta}_2)\big\} \;+\; \log \big\{p_\ell(\mathbf{D}_\ell)\big\}. \end{align*} $$

$$ \begin{align*} \sum_{i=1}^{n_\ell}\log \big\{p(y_{\ell i}| \mathbf{x}_{\ell i},\boldsymbol{\theta}_1)\big\} &+\; \sum_{i=1}^{n_\ell}\log \big\{p(\mathbf{x}_{\ell i}|\boldsymbol{\theta}_2)\big\} \nonumber\\ &\;=\; \log \big\{p_\ell(\boldsymbol{\theta}|\mathbf{D}_\ell)\big\} \;-\; \log \big\{p_\ell(\boldsymbol{\theta}_1)\big\} \;-\; \log \big\{p_\ell(\boldsymbol{\theta}_2)\big\} \;+\; \log \big\{p_\ell(\mathbf{D}_\ell)\big\}. \end{align*} $$

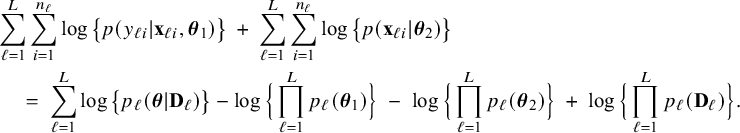

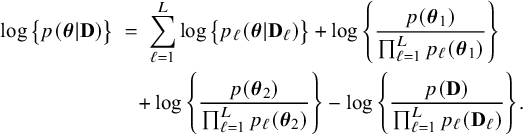

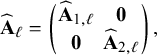

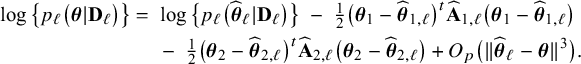

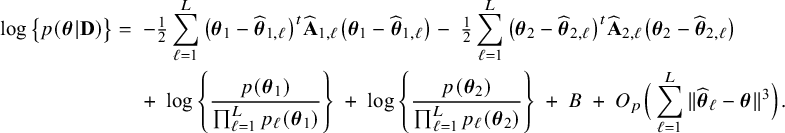

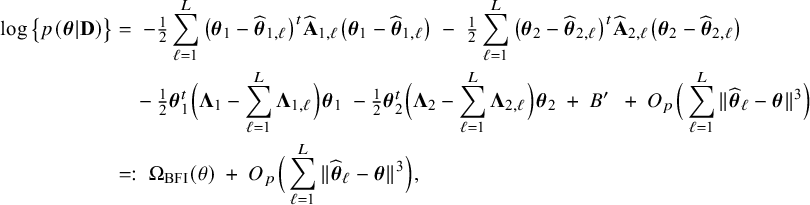

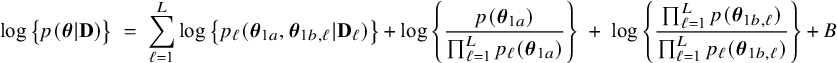

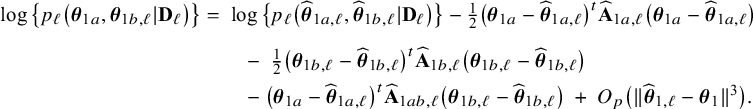

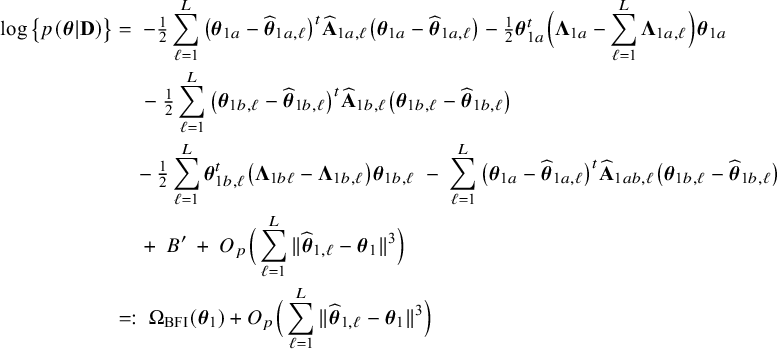

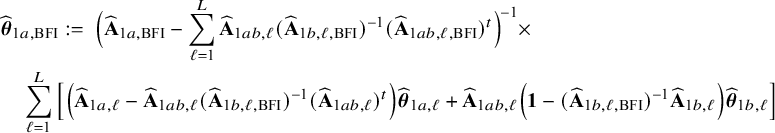

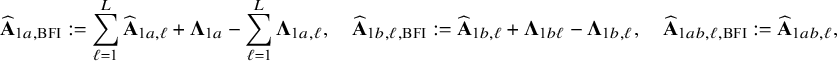

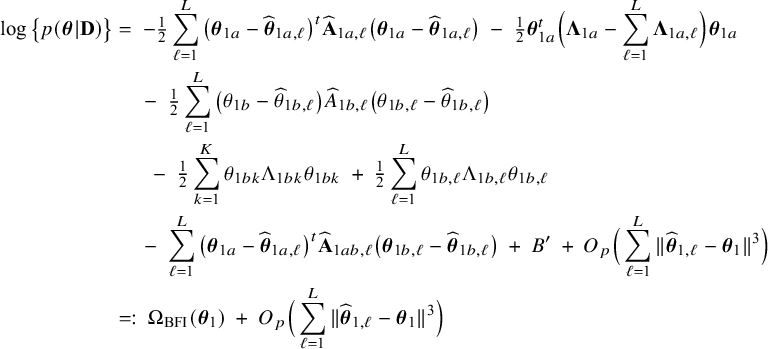

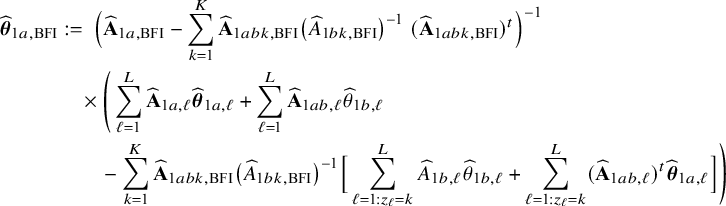

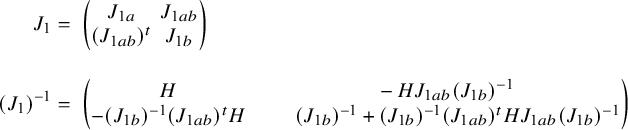

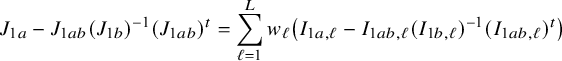

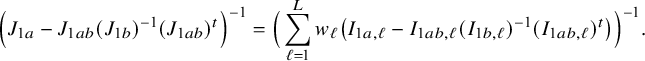

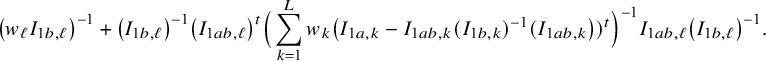

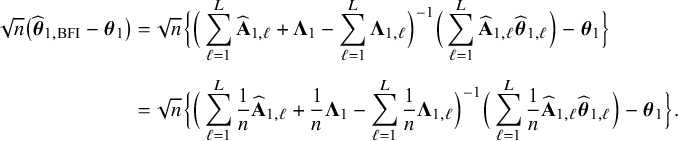

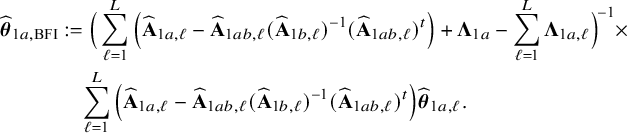

The right hand side of this expression can be inserted into expression (1). Then, the log posterior density for the full data set

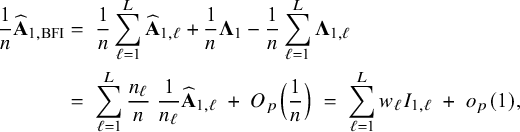

![]() $\log \{p(\mathbf {\theta }|\mathbf {D})\}$

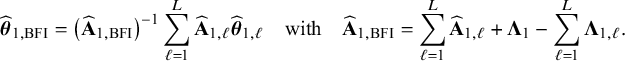

is written as a sum of the local log posterior densities in the centers and the log prior densities (more details are given in Appendix II.A). For deriving the BFI estimators of the parameters, the local log posterior densities are approximated by second order Taylor expansions around the local MAP estimates. Instead of maximizing the full log posterior density for the combined data, the quadratic approximation is maximized with respect to the parameters. The parameter value where the maximum is attained is defined as the BFI estimate. For

$\log \{p(\mathbf {\theta }|\mathbf {D})\}$

is written as a sum of the local log posterior densities in the centers and the log prior densities (more details are given in Appendix II.A). For deriving the BFI estimators of the parameters, the local log posterior densities are approximated by second order Taylor expansions around the local MAP estimates. Instead of maximizing the full log posterior density for the combined data, the quadratic approximation is maximized with respect to the parameters. The parameter value where the maximum is attained is defined as the BFI estimate. For

![]() $\widehat {\mathbf {A}}_{1,\ell }$

and

$\widehat {\mathbf {A}}_{1,\ell }$

and

![]() $\widehat {\mathbf {A}}_{2,\ell }$

the second derivatives of

$\widehat {\mathbf {A}}_{2,\ell }$

the second derivatives of

![]() $-\log \{p_\ell (\mathbf {\theta }|\mathbf {D}_\ell )\}$

with respect to

$-\log \{p_\ell (\mathbf {\theta }|\mathbf {D}_\ell )\}$

with respect to

![]() $\mathbf {\theta }_1$

and

$\mathbf {\theta }_1$

and

![]() $\mathbf {\theta }_2$

and evaluated in the local MAP estimators

$\mathbf {\theta }_2$

and evaluated in the local MAP estimators

![]() $\widehat {\mathbf {\theta }}_{1,\ell }$

and

$\widehat {\mathbf {\theta }}_{1,\ell }$

and

![]() $\widehat {\mathbf {\theta }}_{2,\ell }$

, in center

$\widehat {\mathbf {\theta }}_{2,\ell }$

, in center

![]() $\ell $

, the BFI estimators equal

$\ell $

, the BFI estimators equal

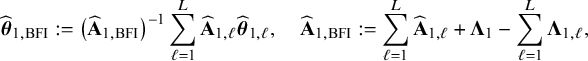

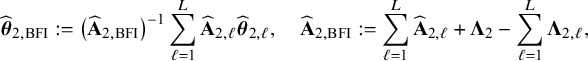

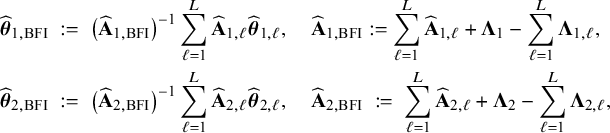

$$ \begin{align} \hspace{-5mm} & \widehat{\boldsymbol{\theta}}_{1,{\mathrm{{BFI}}}} := \big(\widehat{\mathbf{A}}_{1,{\mathrm{{BFI}}}}\big)^{-1}\sum_{\ell=1}^L \widehat{\mathbf{ A}}_{1,\ell}\widehat{\boldsymbol{\theta}}_{1,\ell},\quad \widehat{\mathbf{A}}_{1,{\mathrm{{BFI}}}} := \sum_{\ell=1}^L \widehat{\mathbf{A}}_{1,\ell}+\boldsymbol{\Lambda}_1-\sum_{\ell=1}^L \boldsymbol{\Lambda}_{1,\ell}, \end{align} $$

$$ \begin{align} \hspace{-5mm} & \widehat{\boldsymbol{\theta}}_{1,{\mathrm{{BFI}}}} := \big(\widehat{\mathbf{A}}_{1,{\mathrm{{BFI}}}}\big)^{-1}\sum_{\ell=1}^L \widehat{\mathbf{ A}}_{1,\ell}\widehat{\boldsymbol{\theta}}_{1,\ell},\quad \widehat{\mathbf{A}}_{1,{\mathrm{{BFI}}}} := \sum_{\ell=1}^L \widehat{\mathbf{A}}_{1,\ell}+\boldsymbol{\Lambda}_1-\sum_{\ell=1}^L \boldsymbol{\Lambda}_{1,\ell}, \end{align} $$

$$ \begin{align} \hspace{-5mm} & \widehat{\boldsymbol{\theta}}_{2,{\mathrm{{BFI}}}} := \big(\widehat{\mathbf{A}}_{2,{\mathrm{{BFI}}}}\big)^{-1}\sum_{\ell=1}^L \widehat{\mathbf{A}}_{2,\ell}\widehat{\boldsymbol{\theta}}_{2,\ell}, \quad \widehat{\mathbf{A}}_{2,{\mathrm{{BFI}}}} := \sum_{\ell=1}^L \widehat{\mathbf{A}}_{2,\ell}+\boldsymbol{\Lambda}_2-\sum_{\ell=1}^L \boldsymbol{\Lambda}_{2,\ell}, \end{align} $$

$$ \begin{align} \hspace{-5mm} & \widehat{\boldsymbol{\theta}}_{2,{\mathrm{{BFI}}}} := \big(\widehat{\mathbf{A}}_{2,{\mathrm{{BFI}}}}\big)^{-1}\sum_{\ell=1}^L \widehat{\mathbf{A}}_{2,\ell}\widehat{\boldsymbol{\theta}}_{2,\ell}, \quad \widehat{\mathbf{A}}_{2,{\mathrm{{BFI}}}} := \sum_{\ell=1}^L \widehat{\mathbf{A}}_{2,\ell}+\boldsymbol{\Lambda}_2-\sum_{\ell=1}^L \boldsymbol{\Lambda}_{2,\ell}, \end{align} $$

see Appendix II.A for the derivation. With these expressions we can compute approximations of

![]() $\widehat {\mathbf {\theta }}_1$

and

$\widehat {\mathbf {\theta }}_1$

and

![]() $\widehat {\mathbf {\theta }}_2$

a posteriori from the inference results on the subsets and there is no need to do inference on the (fictive) combined data set

$\widehat {\mathbf {\theta }}_2$

a posteriori from the inference results on the subsets and there is no need to do inference on the (fictive) combined data set

![]() $\mathbf {D}$

to find the BFI estimates. In the calculations of the BFI estimators, we assume independence between the parameters

$\mathbf {D}$

to find the BFI estimates. In the calculations of the BFI estimators, we assume independence between the parameters

![]() $\theta _1$

and

$\theta _1$

and

![]() $\theta _2$

. This assumption was made for mathematical convenience, as the log posterior density splits into terms that are a function of

$\theta _2$

. This assumption was made for mathematical convenience, as the log posterior density splits into terms that are a function of

![]() $\theta _1$

or of

$\theta _1$

or of

![]() $\theta _2$

, but never of both, and as a consequence, separate expressions for

$\theta _2$

, but never of both, and as a consequence, separate expressions for

![]() $\widehat {\theta }_{1,{\mathrm { {BFI}}}}$

and

$\widehat {\theta }_{1,{\mathrm { {BFI}}}}$

and

![]() $\widehat {\theta }_{2,{\mathrm { {BFI}}}}$

are found. This independence assumption is not essential. If the parameters are dependent, the calculations can be performed in a similar way and a single expression for the BFI estimator for

$\widehat {\theta }_{2,{\mathrm { {BFI}}}}$

are found. This independence assumption is not essential. If the parameters are dependent, the calculations can be performed in a similar way and a single expression for the BFI estimator for

![]() $(\theta _1,\theta _2)$

is found.

$(\theta _1,\theta _2)$

is found.

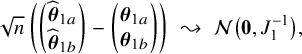

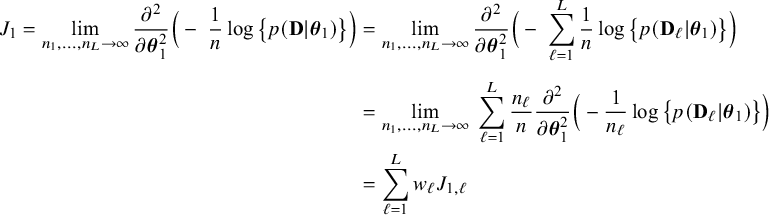

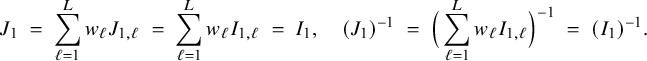

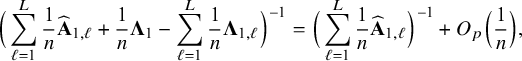

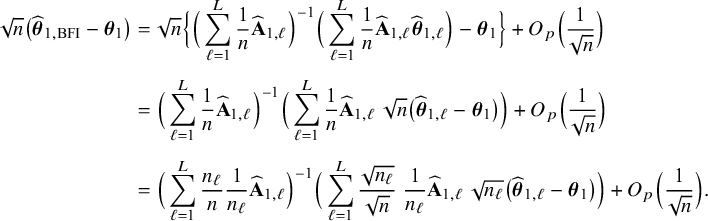

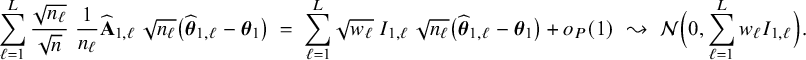

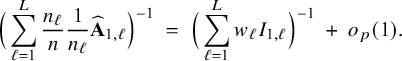

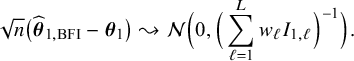

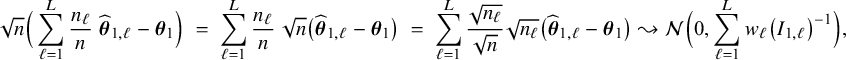

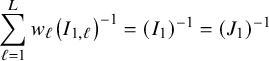

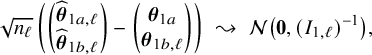

In Appendix III.B we prove that under the assumption of no model misspecification (including homogeneity between the centers), the BFI estimators

![]() $\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}}}$

and

$\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}}}$

and

![]() $\widehat {\mathbf {\theta }}_{2,{\mathrm { {BFI}}}}$

are asymptotically Gaussian and efficient (minimum asymptotic variance). For

$\widehat {\mathbf {\theta }}_{2,{\mathrm { {BFI}}}}$

are asymptotically Gaussian and efficient (minimum asymptotic variance). For

![]() $n_\ell , \ell =1,\ldots ,L$

the local sample sizes and

$n_\ell , \ell =1,\ldots ,L$

the local sample sizes and

![]() $n=n_1+\ldots +n_L$

the total sample size, it is proven that

$n=n_1+\ldots +n_L$

the total sample size, it is proven that

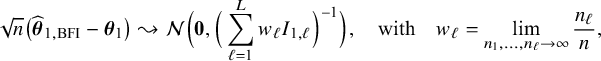

$$ \begin{align*} \sqrt{n}\big(\widehat{\boldsymbol{\theta}}_{1,{\mathrm{{BFI}}}}-\boldsymbol{\theta}_1\big) \leadsto {\mathcal N}\Big(\mathbf{0}, \Big(\sum_{\ell=1}^L w_\ell I_{1,\ell}\Big)^{-1}\Big),\quad\mbox{with} \quad w_\ell = \lim_{n_1,\ldots,n_\ell \rightarrow \infty} \frac{n_\ell}{n}, \end{align*} $$

$$ \begin{align*} \sqrt{n}\big(\widehat{\boldsymbol{\theta}}_{1,{\mathrm{{BFI}}}}-\boldsymbol{\theta}_1\big) \leadsto {\mathcal N}\Big(\mathbf{0}, \Big(\sum_{\ell=1}^L w_\ell I_{1,\ell}\Big)^{-1}\Big),\quad\mbox{with} \quad w_\ell = \lim_{n_1,\ldots,n_\ell \rightarrow \infty} \frac{n_\ell}{n}, \end{align*} $$

and

![]() $I_{1,\ell }$

the Fisher information matrix in center

$I_{1,\ell }$

the Fisher information matrix in center

![]() $\ell $

(the notation ‘

$\ell $

(the notation ‘

![]() $\leadsto $

’ means convergence in distribution). The matrix

$\leadsto $

’ means convergence in distribution). The matrix

![]() $\sum _{\ell =1}^L w_\ell I_{1,\ell }$

equals the Fisher information matrix for estimating

$\sum _{\ell =1}^L w_\ell I_{1,\ell }$

equals the Fisher information matrix for estimating

![]() $\mathbf {\theta }_1$

in the combined data set (see Appendix III.A). The BFI estimator asymptotically follows the same distribution as the MAP and the Maximum Likelihood estimators on the combined data. Apparently, no information is lost as a consequence of the fact that the data sets cannot be shared. In the homogeneous setting

$\mathbf {\theta }_1$

in the combined data set (see Appendix III.A). The BFI estimator asymptotically follows the same distribution as the MAP and the Maximum Likelihood estimators on the combined data. Apparently, no information is lost as a consequence of the fact that the data sets cannot be shared. In the homogeneous setting

![]() $I_{1,\ell }=I_1, \ell =1,\ldots ,L$

, independent of

$I_{1,\ell }=I_1, \ell =1,\ldots ,L$

, independent of

![]() $\ell $

, and

$\ell $

, and

![]() $\sum _{\ell =1}^L w_\ell I_{1,\ell }=I_1$

. Further, since

$\sum _{\ell =1}^L w_\ell I_{1,\ell }=I_1$

. Further, since

![]() $n^{-1} \widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

converges in probability to

$n^{-1} \widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

converges in probability to

![]() $\sum _{\ell =1}^L w_\ell I_{1,\ell }$

(see Appendix III.B), the asymptotic covariance matrix can be estimated by the inverse of

$\sum _{\ell =1}^L w_\ell I_{1,\ell }$

(see Appendix III.B), the asymptotic covariance matrix can be estimated by the inverse of

![]() $n^{-1} \widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

. Similar results hold for the BFI estimator

$n^{-1} \widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

. Similar results hold for the BFI estimator

![]() $\widehat {\mathbf {\theta }}_{2,{\mathrm { {BFI}}}}$

.

$\widehat {\mathbf {\theta }}_{2,{\mathrm { {BFI}}}}$

.

It follows that for a sufficiently large total sample size, the BFI estimators

![]() $\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}}}$

and

$\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}}}$

and

![]() $\widehat {\mathbf {\theta }}_{2,{\mathrm { {BFI}}}}$

are approximately Gaussian with mean

$\widehat {\mathbf {\theta }}_{2,{\mathrm { {BFI}}}}$

are approximately Gaussian with mean

![]() $\mathbf {\theta }_1$

and

$\mathbf {\theta }_1$

and

![]() $\mathbf {\theta }_2$

and with covariance matrices that can be estimated by

$\mathbf {\theta }_2$

and with covariance matrices that can be estimated by

![]() $\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}^{-1}$

and

$\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}^{-1}$

and

![]() $\widehat {\mathbf { A}}_{2,{\mathrm { {BFI}}}}^{-1}$

. From this, credible intervals for

$\widehat {\mathbf { A}}_{2,{\mathrm { {BFI}}}}^{-1}$

. From this, credible intervals for

![]() $\mathbf {\theta }_1$

and

$\mathbf {\theta }_1$

and

![]() $\mathbf {\theta }_2$

can be constructed. Let

$\mathbf {\theta }_2$

can be constructed. Let

![]() $\mathbf {\theta }_{1,(k)}$

be the

$\mathbf {\theta }_{1,(k)}$

be the

![]() $k^{th}$

element of

$k^{th}$

element of

![]() $\mathbf {\theta }_1$

. This parameter is estimated by

$\mathbf {\theta }_1$

. This parameter is estimated by

![]() $\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}} (k)}$

, the

$\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}} (k)}$

, the

![]() $k^{th}$

element of

$k^{th}$

element of

![]() $\widehat {\theta }_{1,{\mathrm { {BFI}}}}$

and its approximate

$\widehat {\theta }_{1,{\mathrm { {BFI}}}}$

and its approximate

![]() $(1-2\alpha ) 100\%$

credible interval equals

$(1-2\alpha ) 100\%$

credible interval equals

![]() $\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}} (k)} \pm \xi _\alpha \; \big (\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}^{-1}\big )_{k,k}^{1/2},$

for

$\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}} (k)} \pm \xi _\alpha \; \big (\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}^{-1}\big )_{k,k}^{1/2},$

for

![]() $\xi _\alpha $

the upper

$\xi _\alpha $

the upper

![]() $\alpha $

-quantile of the standard Gaussian distribution and

$\alpha $

-quantile of the standard Gaussian distribution and

![]() $\big (\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}^{-1}\big )_{k,k}^{1/2}$

equal to the square root of the

$\big (\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}^{-1}\big )_{k,k}^{1/2}$

equal to the square root of the

![]() $(k,k)^{th}$

element of the inverse of the estimator

$(k,k)^{th}$

element of the inverse of the estimator

![]() $\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

. Hypothesis testing is also straightforward by the asymptotic normality.

$\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

. Hypothesis testing is also straightforward by the asymptotic normality.

3 Heterogeneity across centers

In the derivation of the estimators for the aggregated BFI model in (3) and (4), homogeneity of the populations across the different centers is assumed. This assumption means that the parameters

![]() $\mathbf {\theta }_1$

and

$\mathbf {\theta }_1$

and

![]() $\mathbf {\theta }_2$

are the same in every center. This assumption may not be true, and the BFI approach has to be adjusted to take this heterogeneity into account. This is the topic of the present section.

$\mathbf {\theta }_2$

are the same in every center. This assumption may not be true, and the BFI approach has to be adjusted to take this heterogeneity into account. This is the topic of the present section.

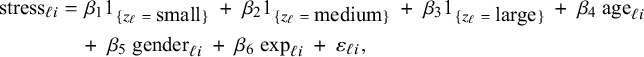

In order to explain different types of heterogeneity, a specific example is used throughout the article. This example is also used in Section 4 and Appendix I to illustrate the BFI methodology and to study its performance. Here we give only a brief description, a more extensive description is given in Section 4.2. The example data come from a hypothetical study on stress among nurses on different wards in different hospitals.Reference Hox, Moerbeek and Schoot 26 The data were simulated from a linear mixed effects model. The outcome of interest is job-related stress. For every nurse, information on stress, age, experience (in years), gender, wardtype (general, special care), hospital, and hospital size (small, medium, large) is available.

Heterogeneity in the populations across multiple centers may occur if, for instance, some medical centers are located in large cities and others in more rural areas. It might also be that in some hospitals the stress level among nurses is significantly higher than in others due to factors that are not nurse specific, like the size of the hospital or management decisions within a hospital (which are not in the data). In this section the following types of heterogeneity are considered:

-

1. Heterogeneity of population characteristics in the centers, e.g., the age distributions of the nurses differ. Then, the values of the parameter

$\mathbf {\theta }_2$

differ across centers. This is considered in Section 3.1.

$\mathbf {\theta }_2$

differ across centers. This is considered in Section 3.1. -

2. Heterogeneity across centers in outcome mean. This may happen if the mean stress-level of the nurses vary across the centers due to factors that have not been measured (e.g., type of management). This is considered in Section 3.2.

-

3. Heterogeneity across centers due to interaction effects; the effect of a covariate varies across the centers. For instance, it might be that the effect of the wardtype on the outcome differs across medical centers. This means that the regression coefficient for wardtype is center-specific. This situation is considered in Section 3.3.

-

4. Heterogeneity across centers due to center-specific nuisance parameters; e.g., the variance of the error term in a linear regression model. See Section 3.4.

-

5. Heterogeneity across centers due to clustering; e.g., clustering by the location of the hospitals. This situation is considered in Section 3.5.

-

6. Heterogeneity across centers due to center-specific covariates. An example of such a covariate is hospital size, which is the same for every nurse in a hospital, but may vary across hospitals. See Section 3.6

These types of between-center heterogeneity are due to center-specific parameters (types 1–4), due to clustering (type 5) and due to missing covariates (type 6). There may be more forms of heterogeneity that can be taken into account with the BFI methodology. The aim of the BFI approach is to increase the sample size relative to the parameter dimension to overcome overfitting. By significantly increasing the number of parameters in the BFI model, to account for heterogeneity, the very objective of the BFI approach would thereby be undermined.

3.1 Heterogeneity of population characteristics

Characteristics of the populations who visit the L centers may differ, for instance because the centers are located in different countries or regions. In the example, the fractions of female nurses differ across the centers.

The parameter

![]() $\mathbf {\theta }$

was decomposed in

$\mathbf {\theta }$

was decomposed in

![]() $\mathbf {\theta }_1$

and

$\mathbf {\theta }_1$

and

![]() $\mathbf {\theta }_2$

. The parameter

$\mathbf {\theta }_2$

. The parameter

![]() $\mathbf {\theta }_2$

describes the distribution of the covariates

$\mathbf {\theta }_2$

describes the distribution of the covariates

![]() $\mathbf {X}$

, whereas the parameter

$\mathbf {X}$

, whereas the parameter

![]() $\mathbf {\theta }_1$

describes the relationship between the covariates and the outcome (so the regression coefficients and the nuisance model parameters). Under the assumption that

$\mathbf {\theta }_1$

describes the relationship between the covariates and the outcome (so the regression coefficients and the nuisance model parameters). Under the assumption that

![]() $\mathbf {\theta }_1$

and

$\mathbf {\theta }_1$

and

![]() $\mathbf {\theta }_2$

are independent, the local log posterior densities were decomposed into terms that depend on either

$\mathbf {\theta }_2$

are independent, the local log posterior densities were decomposed into terms that depend on either

![]() $\mathbf {\theta }_1$

or

$\mathbf {\theta }_1$

or

![]() $\mathbf {\theta }_2$

, but never on both (see expression (2)). As a consequence, when calculating the MAP estimates of

$\mathbf {\theta }_2$

, but never on both (see expression (2)). As a consequence, when calculating the MAP estimates of

![]() $\mathbf {\theta }_1$

and

$\mathbf {\theta }_1$

and

![]() $\mathbf {\theta }_2$

, separate functions have to be maximized. Therefore, even if we would take into account that the populations vary across the centers, the expressions of the BFI estimators

$\mathbf {\theta }_2$

, separate functions have to be maximized. Therefore, even if we would take into account that the populations vary across the centers, the expressions of the BFI estimators

![]() $\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}}}$

and

$\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}}}$

and

![]() $\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

in (3) would not change and

$\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

in (3) would not change and

![]() $\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}}}$

is still asymptotically unbiased. However, because the estimators depend on (summary statistics) of the covariates, the estimates

$\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}}}$

is still asymptotically unbiased. However, because the estimators depend on (summary statistics) of the covariates, the estimates

![]() $\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}}}$

and in particularly its accuracy, which is represented by

$\widehat {\mathbf {\theta }}_{1,{\mathrm { {BFI}}}}$

and in particularly its accuracy, which is represented by

![]() $\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

, may and often do change. This is investigated in the next section using simulation studies. For

$\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

, may and often do change. This is investigated in the next section using simulation studies. For

![]() $\widehat {\mathbf {\theta }}_{2,{\mathrm { {BFI}}}}$

in (4) new expressions can be derived that take the heterogeneity into account. The exact expressions depend on the simultaneous distributions of the covariates and the type of heterogeneity that is assumed. Therefore, it is not possible to provide new, explicit expressions that are universally valid. In the simplest case, the covariates are assumed to be independent (which is usually not the case in practice). Then, if it is also assumed that the priors of the coordinates of

$\widehat {\mathbf {\theta }}_{2,{\mathrm { {BFI}}}}$

in (4) new expressions can be derived that take the heterogeneity into account. The exact expressions depend on the simultaneous distributions of the covariates and the type of heterogeneity that is assumed. Therefore, it is not possible to provide new, explicit expressions that are universally valid. In the simplest case, the covariates are assumed to be independent (which is usually not the case in practice). Then, if it is also assumed that the priors of the coordinates of

![]() $\mathbf {\theta }_2$

are independent, the part of the log-likelihood function that is related to the parameter

$\mathbf {\theta }_2$

are independent, the part of the log-likelihood function that is related to the parameter

![]() $\mathbf {\theta }_2$

can be written as a sum of terms, where the distribution parameters corresponding to the covariates are present in distinct terms. Now new expressions for the BFI estimators of the coordinates of

$\mathbf {\theta }_2$

can be written as a sum of terms, where the distribution parameters corresponding to the covariates are present in distinct terms. Now new expressions for the BFI estimators of the coordinates of

![]() $\mathbf {\theta }_2$

and therefore also for the vector

$\mathbf {\theta }_2$

and therefore also for the vector

![]() $\mathbf {\theta }_2$

can be calculated along the same lines as in the Appendices II.B and II.C.

$\mathbf {\theta }_2$

can be calculated along the same lines as in the Appendices II.B and II.C.

3.2 Heterogeneity across outcome means

If the combined data would be available for analysis, a multi-level model that includes a random center effect for possible unmeasured heterogeneity across centers would be considered. As an alternative one could include a fixed effect for the different centers. In both cases, this means that every center has its own center-specific intercept. At a local level, so within a center, it is not possible to estimate a center-effect. When combining the MAP estimators from the different centers into a BFI estimator for the combined model, different intercepts across the centers can be allowed in the model. This is explained below and the mathematical derivation can be found in Appendix II.B.

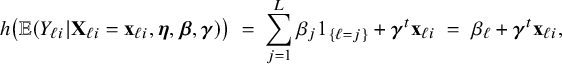

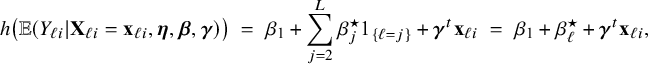

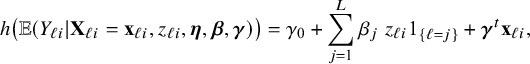

Suppose a regression model is fitted in every center based on the local data only. The BFI strategy as explained before, combines the fitted models to a model with a single general intercept. In Appendix II.B the BFI calculations are given for combining the local models in the situation that one or multiple regression parameters may vary across the centers and center-specific parameters are adopted in the aggregated BFI model. By taking this “varying regression parameter” to be the intercept in the resulting combined BFI model, every center has its own estimated intercept (and there is no general intercept). To be more specific, an estimate of the following aggregated BFI generalized linear model is obtained for an individual in center

![]() $\ell $

$\ell $

$$ \begin{align} h\big(\mathbb{E} (Y_{\ell i}| \mathbf{X}_{\ell i}= \mathbf{x}_{\ell i},\boldsymbol{\eta},\boldsymbol{\beta},\boldsymbol{\gamma})\big) \;=\; \sum_{j=1}^{L} \beta_j 1_{\{\ell=j\}} + \boldsymbol{\gamma}^t \mathbf{x}_{\ell i} \;=\; \beta_\ell + \boldsymbol{\gamma}^t \mathbf{x}_{\ell i}, \end{align} $$

$$ \begin{align} h\big(\mathbb{E} (Y_{\ell i}| \mathbf{X}_{\ell i}= \mathbf{x}_{\ell i},\boldsymbol{\eta},\boldsymbol{\beta},\boldsymbol{\gamma})\big) \;=\; \sum_{j=1}^{L} \beta_j 1_{\{\ell=j\}} + \boldsymbol{\gamma}^t \mathbf{x}_{\ell i} \;=\; \beta_\ell + \boldsymbol{\gamma}^t \mathbf{x}_{\ell i}, \end{align} $$

where the indicator function

![]() $1_{\{\ell =j\}}$

equals 1 if

$1_{\{\ell =j\}}$

equals 1 if

![]() $\ell =j$

and 0 if

$\ell =j$

and 0 if

![]() $\ell \neq j$

. The parameters

$\ell \neq j$

. The parameters

![]() $\beta _1,\ldots ,\beta _L$

are the center-specific intercepts and

$\beta _1,\ldots ,\beta _L$

are the center-specific intercepts and

![]() $\mathbf {\gamma }$

is the vector of regression parameters. The vector of covariates

$\mathbf {\gamma }$

is the vector of regression parameters. The vector of covariates

![]() $\mathbf {x}_{\ell i}$

does not include a 1 for the intercept. So, the aggregated BFI model for a nurse from center

$\mathbf {x}_{\ell i}$

does not include a 1 for the intercept. So, the aggregated BFI model for a nurse from center

![]() $\ell $

has an intercept

$\ell $

has an intercept

![]() $\beta _\ell $

, which is specific for that center. The model can be easily rewritten into a form with a general intercept and parameters for the effect relative to the reference center which is taken to be center 1:

$\beta _\ell $

, which is specific for that center. The model can be easily rewritten into a form with a general intercept and parameters for the effect relative to the reference center which is taken to be center 1:

$$ \begin{align*} h\big(\mathbb{E} (Y_{\ell i}| \mathbf{X}_{\ell i}=\mathbf{x}_{\ell i},\boldsymbol{\eta},\boldsymbol{\beta},\boldsymbol{\gamma})\big) \;=\; \beta_1 + \sum_{j=2}^{L} \beta_j^\star 1_{\{\ell=j\}} + \boldsymbol{\gamma}^t \mathbf{x}_{\ell i} \;=\; \beta_1 + \beta_\ell^\star + \boldsymbol{\gamma}^t \mathbf{x}_{\ell i}, \end{align*} $$

$$ \begin{align*} h\big(\mathbb{E} (Y_{\ell i}| \mathbf{X}_{\ell i}=\mathbf{x}_{\ell i},\boldsymbol{\eta},\boldsymbol{\beta},\boldsymbol{\gamma})\big) \;=\; \beta_1 + \sum_{j=2}^{L} \beta_j^\star 1_{\{\ell=j\}} + \boldsymbol{\gamma}^t \mathbf{x}_{\ell i} \;=\; \beta_1 + \beta_\ell^\star + \boldsymbol{\gamma}^t \mathbf{x}_{\ell i}, \end{align*} $$

where

![]() $\beta _\ell ^\star = \beta _\ell - \beta _1$

, for

$\beta _\ell ^\star = \beta _\ell - \beta _1$

, for

![]() $\ell =2,\ldots ,L$

, with

$\ell =2,\ldots ,L$

, with

![]() $\beta _\ell $

as in model (5). So, by allowing different intercepts when combining the fitted local models, the BFI model accounts for a “center-effect”.

$\beta _\ell $

as in model (5). So, by allowing different intercepts when combining the fitted local models, the BFI model accounts for a “center-effect”.

3.3 Heterogeneity due to center interaction effects

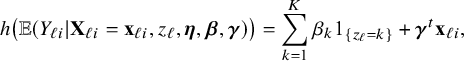

Next suppose that the effect of a covariate (a regression parameter) may vary across the centers. For instance, suppose that the effect of wardtype on job related stress may differ across the centers. In the regression model for the combined data, an interaction between the covariate wardtype and the hospital would be included. To obtain these estimates with the BFI approach, the calculations from Appendix II.B can be followed again, but this time for a regression parameter instead of the intercept. That gives an aggregated BFI model of the form:

$$ \begin{align*} h\big(\mathbb{E} (Y_{\ell i}| \mathbf{X}_{\ell i}=\mathbf{x}_{\ell i}, z_{\ell i},\boldsymbol{\eta},\boldsymbol{\beta},\boldsymbol{\gamma})\big) = \gamma_0 + \sum_{j=1}^{L} \beta_j ~ z_{\ell i} 1_{\{\ell=j\}} + \boldsymbol{\gamma}^t \mathbf{ x}_{\ell i}, \end{align*} $$

$$ \begin{align*} h\big(\mathbb{E} (Y_{\ell i}| \mathbf{X}_{\ell i}=\mathbf{x}_{\ell i}, z_{\ell i},\boldsymbol{\eta},\boldsymbol{\beta},\boldsymbol{\gamma})\big) = \gamma_0 + \sum_{j=1}^{L} \beta_j ~ z_{\ell i} 1_{\{\ell=j\}} + \boldsymbol{\gamma}^t \mathbf{ x}_{\ell i}, \end{align*} $$

where

![]() $\mathbf {\gamma }_0$

is the intercept,

$\mathbf {\gamma }_0$

is the intercept,

![]() $\beta _j$

the wardtype effect on stress in center j,

$\beta _j$

the wardtype effect on stress in center j,

![]() $z_{\ell i}$

the indicator function that indicates whether nurse i from hospital

$z_{\ell i}$

the indicator function that indicates whether nurse i from hospital

![]() $\ell $

is from a special care ward (0 general, 1 special care),

$\ell $

is from a special care ward (0 general, 1 special care),

![]() $\mathbf {\gamma }$

the remaining regression parameters and

$\mathbf {\gamma }$

the remaining regression parameters and

![]() $\mathbf {x}_{\ell i}$

the vector of covariates (so without wardtype).

$\mathbf {x}_{\ell i}$

the vector of covariates (so without wardtype).

3.4 Heterogeneity due to having distinct nuisance parameters

The nuisance parameter of the statistical model, for example the variance of the error term in a linear regression model, may differ between the medical centers. Here too, the calculations for the BFI estimator in Appendix II.B can be applied. This yields an estimated aggregated BFI model with a specific nuisance parameter for each center.

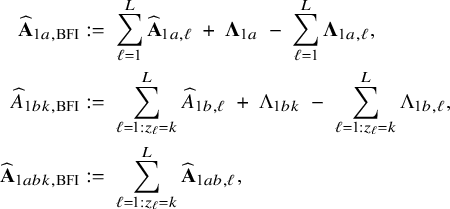

3.5 Heterogeneity due to center-clustering

Local centers can be clustered based on, for example, geospatial regions, type of center (e.g., academic/non-academic hospital) or its size (small/medium/large). If the data can be combined, clustering can be taken into account by including a categorical variable in the model that represents this clustering. Within a center, this is not possible, because all persons in the center are in the same cluster and thus have the same variable value (which would lead to collinearity with the intercept); the regression model must be fitted without the corresponding variable. In this local model, the estimated intercept includes the clustering effect. When combining the models with the BFI approach, we must take this clustering into account. New expressions for the BFI estimators have been derived (Appendix II.C). For K giving the number of clusters, the resulting BFI model has categorical specific intercepts:

$$ \begin{align*} h\big(\mathbb{E} (Y_{\ell i}| \mathbf{X}_{\ell i}= \mathbf{x}_{\ell i},z_{\ell},\boldsymbol{\eta},\boldsymbol{\beta},\boldsymbol{\gamma})\big) = \sum_{k=1}^K \beta_k 1_{\{z_{\ell}=k\}} + \boldsymbol{\gamma}^t \mathbf{x}_{\ell i}, \end{align*} $$

$$ \begin{align*} h\big(\mathbb{E} (Y_{\ell i}| \mathbf{X}_{\ell i}= \mathbf{x}_{\ell i},z_{\ell},\boldsymbol{\eta},\boldsymbol{\beta},\boldsymbol{\gamma})\big) = \sum_{k=1}^K \beta_k 1_{\{z_{\ell}=k\}} + \boldsymbol{\gamma}^t \mathbf{x}_{\ell i}, \end{align*} $$

with

![]() $\beta _k$

the intercept for the

$\beta _k$

the intercept for the

![]() $k^{th}$

cluster,

$k^{th}$

cluster,

![]() $z_{\ell }$

represents the cluster of center

$z_{\ell }$

represents the cluster of center

![]() $\ell $

, and

$\ell $

, and

![]() $1_{\{z_{\ell }=k\}}$

is an indicator function that equals 1 if

$1_{\{z_{\ell }=k\}}$

is an indicator function that equals 1 if

![]() $z_{\ell }=k$

and 0 if

$z_{\ell }=k$

and 0 if

![]() $z_{\ell }\neq k$

. As before, this model can be easily reformulated to a model with an intercept and a reference group.

$z_{\ell }\neq k$

. As before, this model can be easily reformulated to a model with an intercept and a reference group.

3.6 Heterogeneity due to center-specific covariates

Covariates that are included in the local models are also included in the aggregated BFI model. If a variable does not vary within a center (e.g., the size of the medical staff or the percentage of female patients) it can not be included in the regression model for the center and is, therefore, not automatically included in the BFI model. The effect of such a variable is then hidden in the intercepts of the local models. In this subsection we explain how the BFI approach can be adjusted to estimate a (combined) BFI model that includes this center-specific covariate. Although the problem is the same for categorical and continuous variables, the statistical solutions are not. This has to do with the way the variable is included in the aggregated BFI model. If the variable is categorical, one or more binary dummy variables need to be included in the model to represent every category (minus 1). If the variable is included in the model as a continuous variable, only one variable needs to be included (under the assumption of linearity) that holds for all centers.

If the variable is categorical and every center has its own specific category, we are in the situation as described in Section 3.2, where the aggregated model has a center-specific intercept. If the number of categories is lower than the number of centers and multiple centers are in the same category, we actually have to deal with clustering as described in Section 3.5.

If the center-specific variable is continuous, for example the number of patients that is yearly treated in the corresponding center or the percentage of female patients, we actually want to fit a BFI model (based on all data) of the form:

where

![]() $\nu _0$

is the intercept,

$\nu _0$

is the intercept,

![]() $z_\ell $

is the continuous center-specific variable, and

$z_\ell $

is the continuous center-specific variable, and

![]() $\nu _1$

its corresponding unknown regression coefficient. The question is how to estimate the model parameters, and especially

$\nu _1$

its corresponding unknown regression coefficient. The question is how to estimate the model parameters, and especially

![]() $\nu _0$

and

$\nu _0$

and

![]() $\nu _1$

. This is explained below.

$\nu _1$

. This is explained below.

First all local models without this variable are fitted as described before. Next, the models are combined with the BFI methodology under the assumption that all intercepts may be different (the calculations are given in Appendix II.B and is also explained in Section 3.2). This yields an estimate of the model with a center-specific intercept:

for center

![]() $\ell $

. The effect of the continuous variable is hidden in this intercept:

$\ell $

. The effect of the continuous variable is hidden in this intercept:

![]() $\beta _\ell = \nu _0 + \nu _1 z_\ell $

. To estimate

$\beta _\ell = \nu _0 + \nu _1 z_\ell $

. To estimate

![]() $\nu _0$

and

$\nu _0$

and

![]() $\nu _1$

based on the estimated intercepts

$\nu _1$

based on the estimated intercepts

![]() $\widehat {\beta }_\ell , \ell =1,\ldots ,L$

and

$\widehat {\beta }_\ell , \ell =1,\ldots ,L$

and

![]() $z_\ell , \ell =1,\ldots ,L$

, one could make a scatter plot of the points

$z_\ell , \ell =1,\ldots ,L$

, one could make a scatter plot of the points

![]() $(z_1,\widehat {\beta }_1), \ldots , (z_L,\widehat {\beta }_L)$

. Next, after fitting the least squares line through the points, the parameter

$(z_1,\widehat {\beta }_1), \ldots , (z_L,\widehat {\beta }_L)$

. Next, after fitting the least squares line through the points, the parameter

![]() $\nu _0$

can be estimated by the intercept of the least square line and

$\nu _0$

can be estimated by the intercept of the least square line and

![]() $\nu _1$

by its slope. This approach ignores differences in the precision of the estimates of the hospital-specific intercepts. This precision can be taken into account as follows. For sufficiently large samples, the (local) MAP estimators are approximately normally distributed, with a mean and a variance that can be estimated as described in the article. For each center, a value is randomly drawn from this distribution and based on the obtained values,

$\nu _1$

by its slope. This approach ignores differences in the precision of the estimates of the hospital-specific intercepts. This precision can be taken into account as follows. For sufficiently large samples, the (local) MAP estimators are approximately normally distributed, with a mean and a variance that can be estimated as described in the article. For each center, a value is randomly drawn from this distribution and based on the obtained values,

![]() $\nu _0$

and

$\nu _0$

and

![]() $\nu _1$

are estimated as described above. This procedure is repeated many times (B), yielding B estimates of

$\nu _1$

are estimated as described above. This procedure is repeated many times (B), yielding B estimates of

![]() $\nu _0$

and

$\nu _0$

and

![]() $\nu _1$

. Final estimates for

$\nu _1$

. Final estimates for

![]() $\nu _0$

and

$\nu _0$

and

![]() $\nu _1$

can be computed by taking their averages.

$\nu _1$

can be computed by taking their averages.

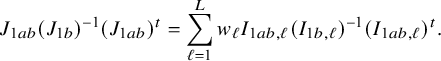

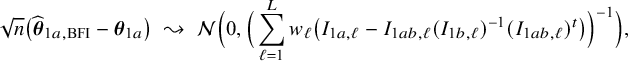

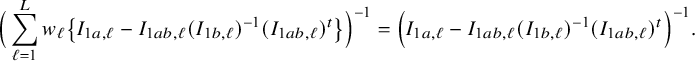

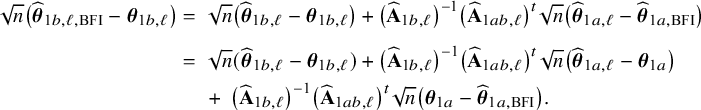

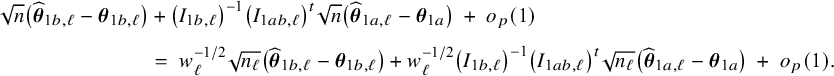

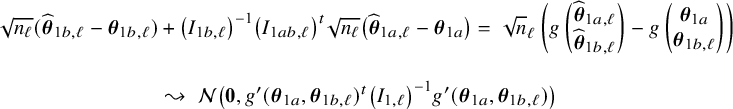

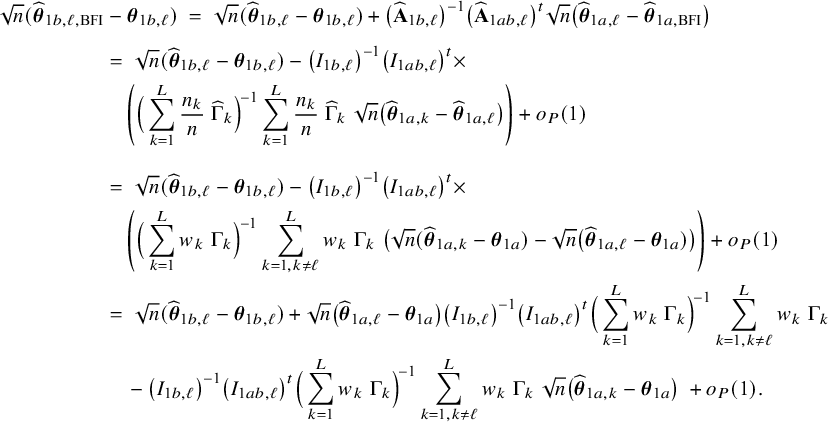

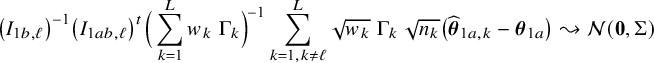

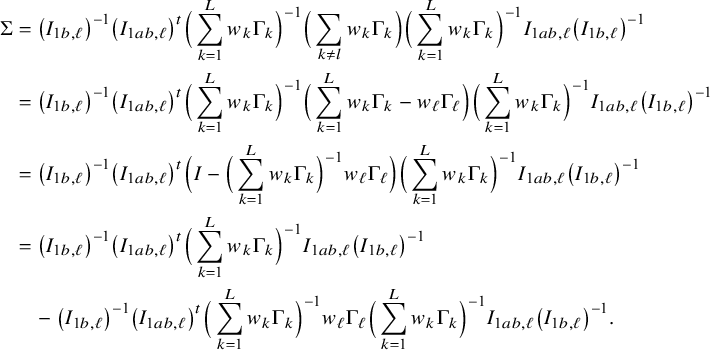

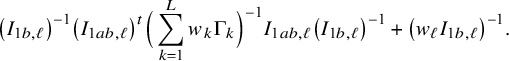

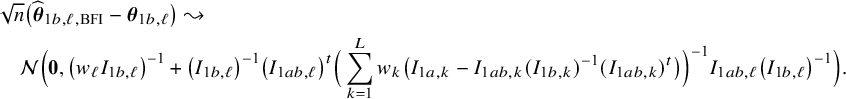

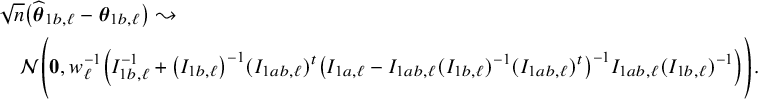

3.7 Asymptotic performance of the BFI estimator under heterogeneity

For both the homogeneous and the heterogeneous settings, the asymptotic distributions of the BFI estimators are derived in Appendix III. In the homogeneous setting, it turns out that the BFI estimator is asymptotically zero-mean Gaussian with covariance matrix equal to the inverse of the Fisher information matrix; the BFI estimator is asymptotically efficient. This distribution is equal to the asymptotic distribution of the MAP and maximum likelihood estimators that would have been based on the combined data; hence asymptotically no information is lost if the data cannot be merged.

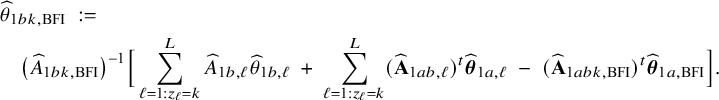

In the heterogeneous setting with center-specific parameters, the parameters of interest can be split into those that are the same between the centers and that are center-specific. Expressions of the corresponding BFI estimators are given in (A.9) and (A.10) in Appendix II.B. In Appendix III.C it is proven that both BFI estimators are asymptotically Gaussian with covariance matrices that equal those for the MAP estimators and MLEs that would have been based on the combined data. Also in the heterogenous setting the BFI estimators are asymptotically efficient. Again asymptotically no information is lost if the data sets cannot be combined. In Appendix III.C it is proved that the BFI estimator for the center-specific parameter is asymptotically more accurate than the MAP estimator based on the local data of the center only. This is because the BFI estimator uses information from all centers to estimate the parameters that are the same across centers, while the MAP estimator uses local data only. A more accurate estimate of the shared parameters leads to a more accurate estimate of the non-shared parameters.

Expressions of the BFI estimators for the setting in which the centers can be clustered are given in Appendix II.C. These expressions are complicated. Therefore, the derivation of the asymptotic distribution is not given here, but can be derived in the same way as for the setting with center-specific parameters.

Since the BFI estimator of

![]() $\mathbf {\theta }_1$

is asymptotically Gaussian and the asymptotic covariance matrix can be estimated by the inverse of

$\mathbf {\theta }_1$

is asymptotically Gaussian and the asymptotic covariance matrix can be estimated by the inverse of

![]() $\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

, credible intervals can be easily constructed, as explained for the homogeneous setting. Hypotheses can be tested using the Wald test.

$\widehat {\mathbf {A}}_{1,{\mathrm { {BFI}}}}$

, credible intervals can be easily constructed, as explained for the homogeneous setting. Hypotheses can be tested using the Wald test.

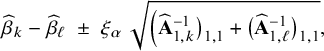

3.8 Methods for checking heterogeneity

In this article we extend the BFI methodology to account for heterogeneity between centers. Before combining the local estimates, we should verify whether this heterogeneity is actually present and whether it is necessary to account for it.

Suppose we want to investigate whether it is necessary to take into account the heterogeneity of the intercepts. Then, first the MAP estimates of the local intercepts, say

![]() $\widehat {\beta }_{\ell }, \ell =1,\ldots ,L$

, should be compared. However, there will always be differences between the estimates. The question is whether the observed differences are due to randomness or whether the true values of the intercepts are sufficiently different to take this into account in the modelling. The latter can be verified by constructing credible intervals. In order to compare the parameter estimates between two centers, say centers k and

$\widehat {\beta }_{\ell }, \ell =1,\ldots ,L$

, should be compared. However, there will always be differences between the estimates. The question is whether the observed differences are due to randomness or whether the true values of the intercepts are sufficiently different to take this into account in the modelling. The latter can be verified by constructing credible intervals. In order to compare the parameter estimates between two centers, say centers k and

![]() $\ell $

, a credible interval for the difference of the two intercepts can be constructed. Such a calculation is based on the statistical independence of the estimators

$\ell $

, a credible interval for the difference of the two intercepts can be constructed. Such a calculation is based on the statistical independence of the estimators

![]() $\widehat {\beta }_{k}$

and

$\widehat {\beta }_{k}$

and

![]() $\widehat {\beta }_{\ell }$

(since the data from the different centers are assumed to be independent) and the fact that

$\widehat {\beta }_{\ell }$

(since the data from the different centers are assumed to be independent) and the fact that

![]() $\widehat {\beta }_{k}$

and

$\widehat {\beta }_{k}$

and

![]() $\widehat {\beta }_{\ell }$

are approximately Gaussian with mean

$\widehat {\beta }_{\ell }$

are approximately Gaussian with mean

![]() $\beta _k$

and

$\beta _k$

and

![]() $\beta _\ell $

and standard deviations

$\beta _\ell $

and standard deviations

![]() $\big (\widehat {\mathbf { A}}_{1,k}^{-1}\big )_{1,1}^{1/2}$

and

$\big (\widehat {\mathbf { A}}_{1,k}^{-1}\big )_{1,1}^{1/2}$

and

![]() $\big (\widehat {\mathbf {A}}_{1,\ell }^{-1}\big )_{1,1}^{1/2}$

, respectively, (if the first element of the parameter vectors

$\big (\widehat {\mathbf {A}}_{1,\ell }^{-1}\big )_{1,1}^{1/2}$

, respectively, (if the first element of the parameter vectors

![]() $\mathbf {\theta }_{1,k}$

and

$\mathbf {\theta }_{1,k}$

and

![]() $\mathbf {\theta }_{1,\ell }$

correspond to the intercept). Then, the

$\mathbf {\theta }_{1,\ell }$

correspond to the intercept). Then, the

![]() $(1-2\alpha ) 100\%$

credible interval for the difference

$(1-2\alpha ) 100\%$

credible interval for the difference

![]() $\beta _k-\beta _\ell $

equals

$\beta _k-\beta _\ell $

equals

$$ \begin{align*} \widehat{\beta}_{k}-\widehat{\beta}_{\ell} \;\pm\; \xi_\alpha \; \sqrt{\Big(\widehat{\mathbf{A}}_{1,k}^{-1}\big)_{1,1}+\big(\widehat{\mathbf{A}}_{1,\ell}^{-1}\big)_{1,1}}, \end{align*} $$

$$ \begin{align*} \widehat{\beta}_{k}-\widehat{\beta}_{\ell} \;\pm\; \xi_\alpha \; \sqrt{\Big(\widehat{\mathbf{A}}_{1,k}^{-1}\big)_{1,1}+\big(\widehat{\mathbf{A}}_{1,\ell}^{-1}\big)_{1,1}}, \end{align*} $$

for

![]() $\xi _\alpha $

equal to the upper

$\xi _\alpha $

equal to the upper

![]() $\alpha $

-quantile of the standard Gaussian distribution. With the latter interval we can verify whether the parameters in the centers k and

$\alpha $

-quantile of the standard Gaussian distribution. With the latter interval we can verify whether the parameters in the centers k and

![]() $\ell $

are different. If the sample sizes in the centers are small, the credible intervals may be wide and it may be difficult to conclude on hetereogeneity.

$\ell $

are different. If the sample sizes in the centers are small, the credible intervals may be wide and it may be difficult to conclude on hetereogeneity.

Similarly, the

![]() $(1-2\alpha ) 100\%$

credible intervals for the difference between the true

$(1-2\alpha ) 100\%$

credible intervals for the difference between the true

![]() $\beta $

-value in all centers except

$\beta $

-value in all centers except

![]() $\ell $

and the true parameter value in center

$\ell $

and the true parameter value in center

![]() $\ell $

equals:

$\ell $

equals:

where subscript

![]() $-\ell $

means that the BFI estimator was computed not including the estimator from center

$-\ell $

means that the BFI estimator was computed not including the estimator from center

![]() $\ell $

. With this interval we can verify whether the intercept in center

$\ell $

. With this interval we can verify whether the intercept in center

![]() $\ell $

differs from the intercepts in the other centers assuming that these intercepts equal.

$\ell $

differs from the intercepts in the other centers assuming that these intercepts equal.

In the same way, one can check whether it is necessary to take into account any of the other types of heterogeneity.

4 Performance of BFI methodology

The BFI methodology for GLMs was introduced in Jonker et alReference Jonker, Pazira and Coolen 23 and extended to survival models for homogeneous populations in Pazira et al.Reference Pazira, Massa, Weijers, Coolen and Jonker 25 Simulation studies in those papers show good performance of the methodology in the homogeneous setting. In this article we focus on different types of heterogeneity. The results of simulation studies (Section 4.1) and data analyses (Section 4.2) are described below.

4.1 Simulation studies

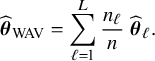

4.1.1 One-shot estimators for comparison

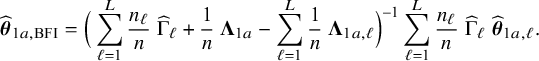

As explained in the introduction, we are only interested in one-shot estimators, i.e., estimators that can be calculated after a single communication with the centers, like the BFI estimator. To enable performance comparison for the BFI estimator, we consider two one-shot estimators. The most interesting one is the weighted average estimator (WAV) which is defined as the weighted average of the local MAP estimators with the weights equal to

![]() $n_\ell /n$

(where

$n_\ell /n$

(where

![]() $n=\sum _{\ell =1}^{L} n_\ell $

); estimates based on larger data-sets are given larger weights. The weighted average estimator for

$n=\sum _{\ell =1}^{L} n_\ell $

); estimates based on larger data-sets are given larger weights. The weighted average estimator for

![]() $\mathbf {\theta }$

is defined as:

$\mathbf {\theta }$

is defined as:

$$ \begin{align*} \widehat{\boldsymbol{\theta}}_{{\mathrm{{WAV}}}} &= \sum_{\ell=1}^{L} \frac{n_\ell}{n} ~\widehat{\boldsymbol{\theta}}_{\ell}. \end{align*} $$

$$ \begin{align*} \widehat{\boldsymbol{\theta}}_{{\mathrm{{WAV}}}} &= \sum_{\ell=1}^{L} \frac{n_\ell}{n} ~\widehat{\boldsymbol{\theta}}_{\ell}. \end{align*} $$

In case of clustering, the WAV estimator for the parameter that is specific for a particular cluster is defined as the weighted average of the local MAP estimators of the centers in that cluster. If a parameter may vary between all centers, the corresponding WAV estimator is defined as the MAP estimator in the local center. The second one-shot estimator for

![]() $\mathbf {\theta }$

is the single center estimator

$\mathbf {\theta }$

is the single center estimator

![]() $\widehat {\mathbf {\theta }}_{{\mathrm{{single}}}}$

, defined as the MAP estimator in the center with the largest local sample size. The single center estimator cannot be defined in case of center or cluster specific parameters.

$\widehat {\mathbf {\theta }}_{{\mathrm{{single}}}}$

, defined as the MAP estimator in the center with the largest local sample size. The single center estimator cannot be defined in case of center or cluster specific parameters.

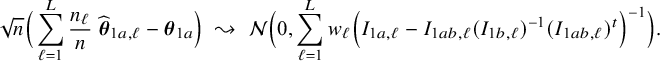

In Appendix III the asymptotic distributions of the weighted average and the single center estimators are derived. As expected, the asymptotic variance of the single center estimator is larger than the one of the BFI estimator, because it is based on fewer data points. In the homogeneous setting, the WAV estimator turns out to be asymptotically efficient (minimum variance) and it follows asymptotically the same distribution as the BFI estimator. In the heterogeneous setting, the WAV estimator of the parameter that differs between the centers is defined as the (corresponding) single center estimator. As explained in Section 3.7, the BFI estimator has a smaller asymptotic variance than this estimator. In this section the finite sample behaviour of the estimators are compared by means of simulation studies.

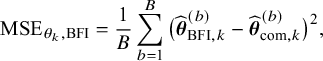

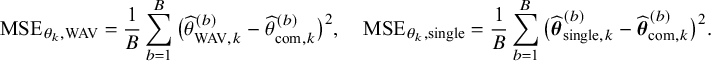

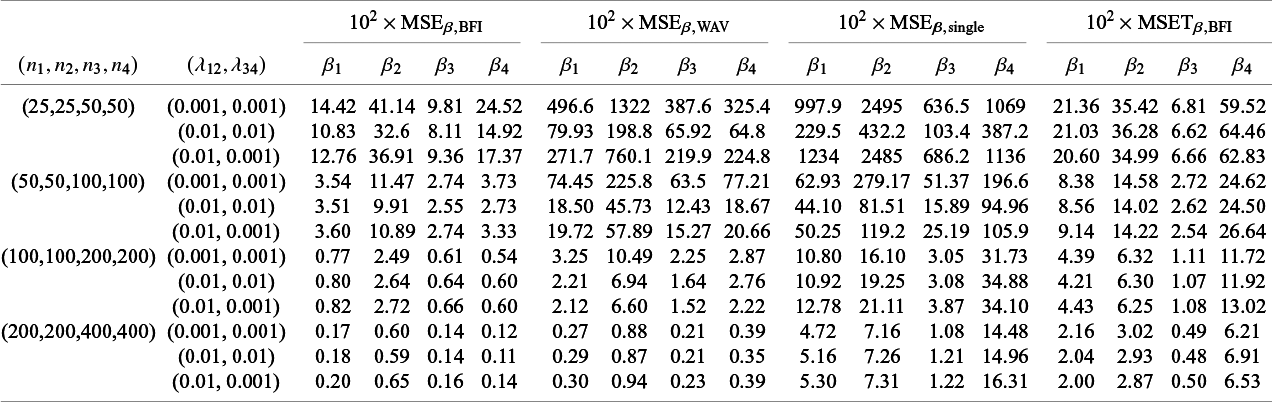

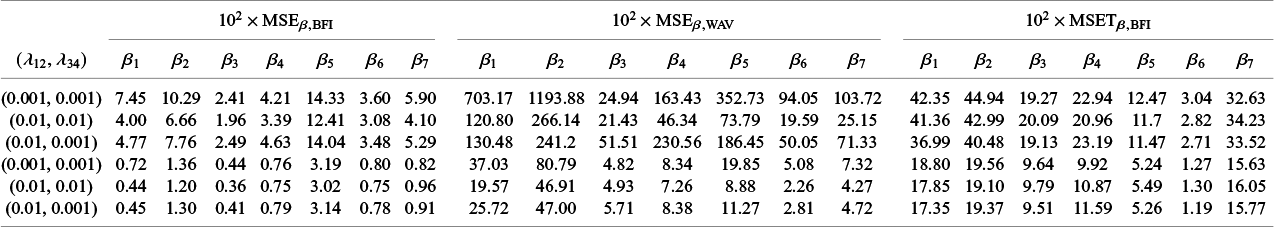

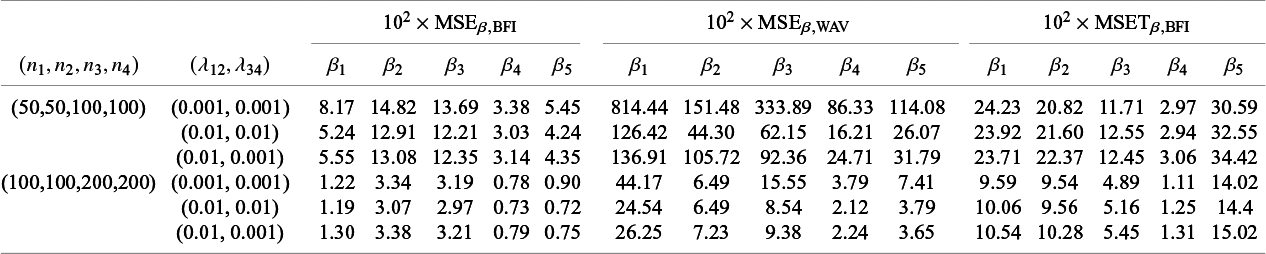

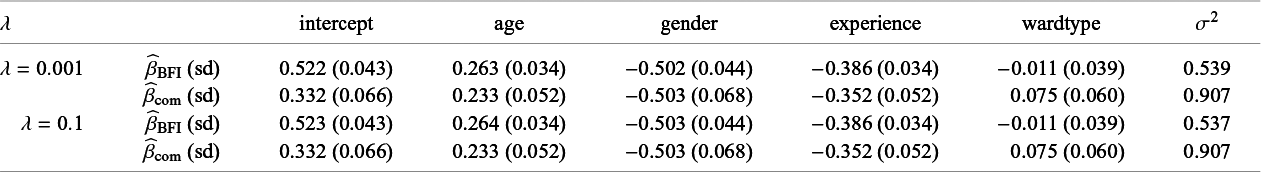

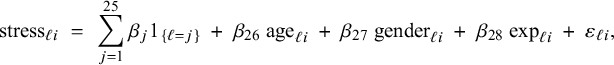

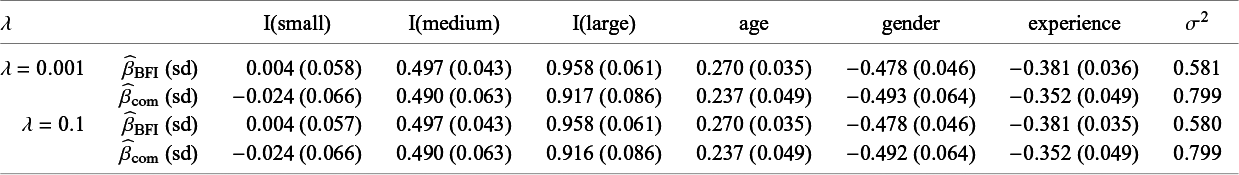

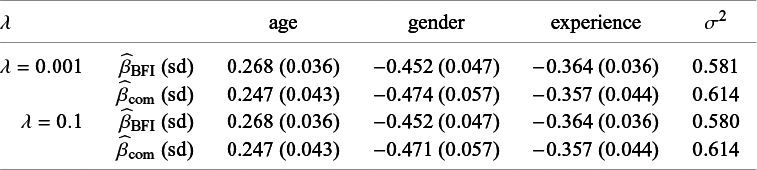

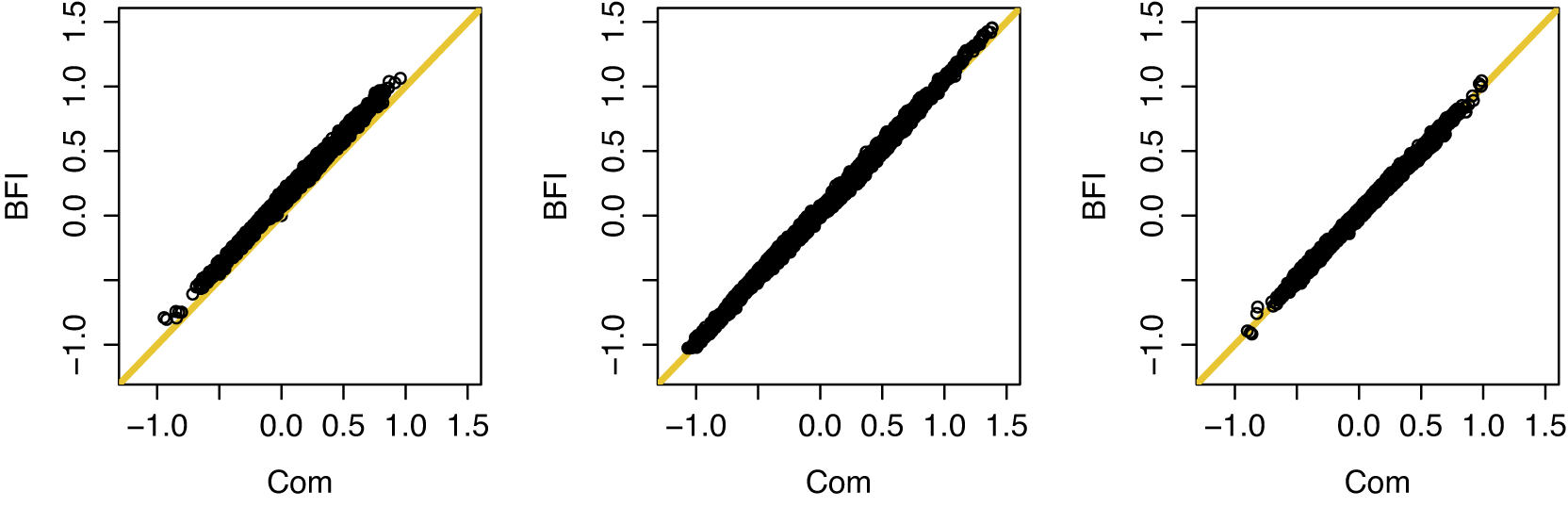

4.1.2 Performance measures for finite samples