1 Introduction and motivation

Tensor calculus is an essential tool in physics and applied mathematics. It was instrumental already a century ago in the formulation of Einstein’s general relativity, and its usage has spread to many areas of science. At its heart lies linear algebra, which is defined as the study of linear maps between vector spaces. In applications, one commonly manipulates representations of linear algebraic objects: vectors as 1-dimensional arrays and linear maps as matrices (2-dimensional arrays). Indeed, assuming a given basis, the representations are equivalent to the algebraic objects. Likewise, tensors are often thought of as a higher-dimensional version of matrices: their algebraic formulation is as a category of linear maps between vector spaces.

Viewing the above situation through the lens of programming language theory, the algebraic formulation forms a set of combinators and the array-based representations is a possible semantics for them. Even though the praxis is to blur the distinction between algebraic objects and their coefficient representations, it is a source of confusion in the case of tensor calculus, which studies tensor fields, in the sense of tensor-valued functions defined over a manifold. (We provide some evidence in Section 8.1.) Notably, difficulties arise because the basis varies over the manifold. The first contribution of this paper is to provide a clear conceptual picture by highlighting the syntax-semantics distinction.

On the practical side, the situation is similar. One can find a plethora of languages and libraries purportedly geared towards tensor manipulation, but they inevitably focus on their multi-dimensional array representations. There is nearly no support for algebraic tensor field expressions. In this paper, we work towards bridging this gap, by applying programming-language methodology to the notations of tensor algebra and tensor calculus—thus viewing them as domain-specific languages. For the readership with a programming language background, we aim to provide a down-to-earth presentation of tensor notations. We capture all their important properties, in particular by making use of linear types. We also aim to attract a readership that already has a working knowledge of tensors. For them we aim to fully formalise the relationship between the representation-oriented notation for tensor fields and its linear-algebraic semantics. We do so by viewing this syntax as terms in a (linear-typed) lambda calculus. As usual with dsls, this presentation comes with an executable semantics. This means we end up with a usable tool to manipulate tensor fields, which is the second contribution of this paper.

1.1 Overview

To make the presentation more pedagogical, we delay the introduction of tensor fields over manifolds until Section 5. Until then, the reader can think of each tensor as “just” an element of a certain vector space. This allows us to present the core concepts in a simpler setting, even though they will apply unchanged in the more general context. As hinted above, we will use an algebraic semantics for tensors, following a categorical structure (Section 3). Together, the combinators forming this categorical structure form a point-free edsl, which we refer to as Roger in reference to Roger Penrose (see Section 8.5 as for why).

Every Roger program can be evaluated to morphisms in any suitable tensor category. This includes matrices, but also string diagrams with the appropriate structure as well. Roger is useful in its own right, but has all the downsides of a point-free language, and thus is not in wide use in the mathematics community, where the so-called Einstein notation is preferred. The Einstein notation mimics the usual notation to access components of matrices, but speaks about these components in a wholesale manner, that is, with index variables that range over all the dimensions. We formalise this notation in an index-based edsl (Section 4). We refer to this edsl as Albert in the rest of the paper. Expressions in Albert evaluate to morphisms in Roger, and thus in any tensor category.

In sum, because the index-notation, diagram notation and matrices are instances of tensor categories, programs written in any of our edsls can be executed as tensor programs using the matrix instance or can generate index or diagram notation for the code in question. The relationships between these notations and edsls are depicted in Figure 1.

Fig. 1: Tensor notations, edsls and relationships between them. Even though the index notation, the morphism notation and the string diagram notation are all equivalent mathematically, in our implementation Roger is coded as a (set of) type-classes, and the index and diagram notations are instances of it.

This means that a function in Albert, say

will, depending on the type, either:

1. render itself in Einstein notation as

$t{^i}\nabla{_i}u$

;

$t{^i}\nabla{_i}u$

;2. render itself as the diagram

or

or3. run on matrix representations of the tensors t and u and compute the result (a scalar field in this case, representing the directional derivative of u in the direction of t).

Together, Albert and Roger form a Haskell library for expressing tensors.Footnote 1 This library leverages linear types as implemented in ghc 9. This implementation defines an executable semantics of Albert, and is presented in section 7. All the examples presented in this paper were prepared using our library. In particular, the diagrams are generated with it.

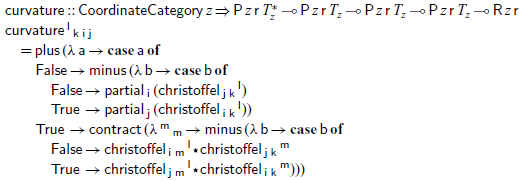

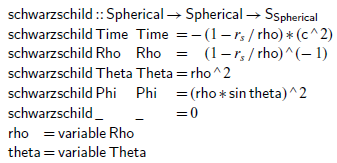

In Section 5, we move to deal with tensor fields proper. Essentially this means that every expression in either edsl corresponds to a tensor field, and that we can manipulate derivatives of such fields. With this addition, the edsls can be used for symbolic calculations of tensor fields. We can, for example, apply covariant derivatives to tensor expressions, re-express them in terms of partial derivatives and Christoffel symbols, and instantiate those to concrete coordinates systems. We demonstrate this workflow in Section 6, where we express Einstein’s General Relativity equation for the curvature of space-time and verify that the Schwarzschild metric tensor is a solution.

We start in Section 2 with a summary of the notions of linear algebra and tensors.

2 Background: linear algebra and tensors

The goal of this section is both to provide the canonical presentation as reference and to expose its abstruse character. The summary does not replace a proper introduction to the topic, and we urge the reader to turn to an appropriate reference if necessary (see references in Section 8.1).

A typical definition of tensor that one might find is the following:

An nth-rankFootnote 2 tensor in m-dimensional space is a mathematical object that has n indices and

![]() $m^n$

components and obeys certain transformation rules.

$m^n$

components and obeys certain transformation rules.

(Rowland & Weisstein, Reference Rowland and Weisstein2023)

(The transformations in question relate to change of basis, as we will see.) This kind of definition is heavily geared towards coordinate representations, rather than their algebraic definition. Why do pedagogical accounts widely refer to coordinate representations rather than semantics? One answer is that calculations are eventually always performed using coordinates. Another answer is that the kind of algebraic thinking required to grasp tensors may be too abstract to form an intuition. Our point of view is that it is indeed at the wrong abstraction level, and that the categorical structures are better suited to reasoning about tensors than the pure linear-algebraic ones. Nonetheless, we will have to refer to the algebraic definitions of tensors down the road, so we provide a minimal recap below.

2.1 Pure algebraic point of view

The main object of study are homomorphisms between vector spaces: linear transformations, also called linear maps. We will later see that tensors are such maps.

Definition 1 (vector space). A vector space (over a field S) is a commutative group

![]() $\mathsf{v}$

equipped with a compatible notion of scaling by elements of S.

$\mathsf{v}$

equipped with a compatible notion of scaling by elements of S.

A vector space must additionally satisfy a number of laws, including that scaling is a linear operation:

![]() $s \triangleleft (x+y) = s \triangleleft x+ s \triangleleft y$

.

$s \triangleleft (x+y) = s \triangleleft x+ s \triangleleft y$

.

The exact nature of this field of scalars (S) has little bearing on the algebraic developmentFootnote

3

, but we assume throughout that they are real numbers. Note that S is itself a vector space, with scaling

![]() ${(}\mskip 0.0mu\triangleleft \mskip 0.0mu{)}$

then being scalar multiplication.

${(}\mskip 0.0mu\triangleleft \mskip 0.0mu{)}$

then being scalar multiplication.

Definition 2 (linear map). A function

![]() $f: V \!\longrightarrow\! W$

is a linear map iff. for all collections of scalars

$f: V \!\longrightarrow\! W$

is a linear map iff. for all collections of scalars

![]() $c_{i}$

and vectors

$c_{i}$

and vectors

![]() $\vec v_{i}$

we have

$\vec v_{i}$

we have

\begin{equation*}f \left(\sum _{i} c_{i} \triangleleft \vec v_{i}\right) = \sum _{i} c_{i} \triangleleft (f(\vec v_{i}))\end{equation*}

\begin{equation*}f \left(\sum _{i} c_{i} \triangleleft \vec v_{i}\right) = \sum _{i} c_{i} \triangleleft (f(\vec v_{i}))\end{equation*}

For a fixed domain and codomain, linear maps themselves form a vector space.

The eager reader should be warned that, for now, indices are used to range of over arbitrary sets of vectors and scalars (and bound by

![]() $\sum$

), in a usual way. Indices take a special meaning only when we get to coordinates and the Einstein notation (from Sections 2.2 and 2.3).

$\sum$

), in a usual way. Indices take a special meaning only when we get to coordinates and the Einstein notation (from Sections 2.2 and 2.3).

Definition 3 (covector space). Given a vector space V, the covector space

![]() $V^*$

is defined as the set of linear maps

$V^*$

is defined as the set of linear maps

![]() $V \!\longrightarrow\! S$

.

$V \!\longrightarrow\! S$

.

Since covector spaces are special cases of linear maps, they form vector spaces too. In a similar vein, the set of linear maps

![]() $f: S \!\longrightarrow\! W$

is isomorphic to W. (Indeed

$f: S \!\longrightarrow\! W$

is isomorphic to W. (Indeed

![]() $f(s) = f (s \triangleleft 1) = s \triangleleft f(1)$

, and thus the vector f(1) in W fully determines the linear function f.)

$f(s) = f (s \triangleleft 1) = s \triangleleft f(1)$

, and thus the vector f(1) in W fully determines the linear function f.)

Definition 4 (bilinear map). A function

![]() $f: V \times W \longrightarrow U$

is a bilinear map iff. for all

$f: V \times W \longrightarrow U$

is a bilinear map iff. for all

![]() $c_{i}, d_j : S$

,

$c_{i}, d_j : S$

,

![]() $\vec v_{i} : V$

, and

$\vec v_{i} : V$

, and

![]() $\vec w_j : W$

we have

$\vec w_j : W$

we have

\begin{equation*}f \left(\sum _{i} c_{i} \triangleleft \vec v_{i}, \sum _j d_j \triangleleft \vec w_j\right) = \sum _{i,j} c_{i}d_j \triangleleft (f(\vec v_{i},\vec w_j))\end{equation*}

\begin{equation*}f \left(\sum _{i} c_{i} \triangleleft \vec v_{i}, \sum _j d_j \triangleleft \vec w_j\right) = \sum _{i,j} c_{i}d_j \triangleleft (f(\vec v_{i},\vec w_j))\end{equation*}

Definition 5 (Tensor product of vector spaces). Given two vector spaces V and W, their tensor product is a vector space, denoted by

![]() $V\otimes W$

, together with a bilinear map

$V\otimes W$

, together with a bilinear map

![]() $\phi:(V \times W) \!\longrightarrow\! (V\otimes W)$

with the following universal property. For every vector space Z and every bilinear map

$\phi:(V \times W) \!\longrightarrow\! (V\otimes W)$

with the following universal property. For every vector space Z and every bilinear map

![]() $h:(V \times W) \!\longrightarrow\! Z$

, there exists a unique linear map

$h:(V \times W) \!\longrightarrow\! Z$

, there exists a unique linear map

![]() $h' : (V\otimes W) \!\longrightarrow\! Z$

such that

$h' : (V\otimes W) \!\longrightarrow\! Z$

such that

![]() $h = h' \circ \phi$

. The output

$h = h' \circ \phi$

. The output

![]() $\phi(v,w)$

is often denoted by

$\phi(v,w)$

is often denoted by

![]() $v\otimes w$

, overloading the same symbol. (We let the reader check that the tensor product always exists.)

$v\otimes w$

, overloading the same symbol. (We let the reader check that the tensor product always exists.)

Examples: Here is an attempt at providing an intuition for what is, and is not, a bilinear function. Consider the simplest case of the definition of bilinear map where there is just one vector

![]() $\vec v$

as the first argument and one vector

$\vec v$

as the first argument and one vector

![]() $\vec w$

as the second argument to f. We then have

$\vec w$

as the second argument to f. We then have

![]() $f(\vec v,0) = f(1\triangleleft \vec v,0\triangleleft \vec w) = (1 \times 0)\triangleleft f(\vec v,\vec w) = 0$

. This means that vector addition is not bilinear because

$f(\vec v,0) = f(1\triangleleft \vec v,0\triangleleft \vec w) = (1 \times 0)\triangleleft f(\vec v,\vec w) = 0$

. This means that vector addition is not bilinear because

![]() $\vec v+0=\vec v \neq 0$

. Similarly, f cannot be first or second projection, because they are also linear, not bilinear.

$\vec v+0=\vec v \neq 0$

. Similarly, f cannot be first or second projection, because they are also linear, not bilinear.

We also have that we can “move constant factors” between

![]() $\vec v$

and

$\vec v$

and

![]() $\vec w$

:

$\vec w$

:

![]() $f(c \triangleleft \vec v, 1 \triangleleft \vec w) = (c \times 1) \triangleleft f(\vec v,\vec w) = (1 \times c) \triangleleft f(\vec v,\vec w) =f(1 \triangleleft \vec v, c \triangleleft \vec w)$

. In connection with the tensor product this means that even though, for any two vectors

$f(c \triangleleft \vec v, 1 \triangleleft \vec w) = (c \times 1) \triangleleft f(\vec v,\vec w) = (1 \times c) \triangleleft f(\vec v,\vec w) =f(1 \triangleleft \vec v, c \triangleleft \vec w)$

. In connection with the tensor product this means that even though, for any two vectors

![]() $\vec v : V$

and

$\vec v : V$

and

![]() $\vec w : W$

, we can construct a tensor

$\vec w : W$

, we can construct a tensor

![]() $u = \phi(\vec v,\vec w) : V\otimes W$

which looks like we have embedded a pair, we cannot extract

$u = \phi(\vec v,\vec w) : V\otimes W$

which looks like we have embedded a pair, we cannot extract

![]() $\vec v$

and

$\vec v$

and

![]() $\vec w$

again—they are mixed up together (entangled).

$\vec w$

again—they are mixed up together (entangled).

What a bilinear function can (and must) do, as we can see from the definition, when given two linear combinations, is to compute a linear combination based on all pairwise products of the coefficients, without depending on the coefficients themselves.

Order of a tensor

Often, tensors are used in a context where there is a single (atomic) underlying vector space

![]() $\mathsf{T}$

which is not just the scalars. Then the complexity of a vector space built from

$\mathsf{T}$

which is not just the scalars. Then the complexity of a vector space built from

![]() $\mathsf{T}$

can be measured by its order. The order of

$\mathsf{T}$

can be measured by its order. The order of

![]() $\mathsf{T}$

is defined to be 1 and the order of the scalar space is 0. The order of a tensor space

$\mathsf{T}$

is defined to be 1 and the order of the scalar space is 0. The order of a tensor space

![]() $\mathsf{V}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{W}$

is the sum of the order of spaces

$\mathsf{V}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{W}$

is the sum of the order of spaces

![]() $\mathsf{V}$

and

$\mathsf{V}$

and

![]() $\mathsf{W}$

, and this way we can build spaces of arbitrarily large order. The order of a linear map can be defined either as the pair of the orders of its input and output spaces, or as their sum (depending on convention). For example, a linear operator on an atomic vector space has order (1,1) or 2 in the respective conventions. Morphisms of order three or more are properly called tensors. Conversely, tensors of any order (including 0, 1 and 2) are linear maps, of the appropriate domain and codomain. When there is more that one underlying vector space, the order is not enough to characterise a tensor space: the full type needs to be specified, as in Section 3. (Yet this level of complexity won’t be exercised in this paper.)

$\mathsf{W}$

, and this way we can build spaces of arbitrarily large order. The order of a linear map can be defined either as the pair of the orders of its input and output spaces, or as their sum (depending on convention). For example, a linear operator on an atomic vector space has order (1,1) or 2 in the respective conventions. Morphisms of order three or more are properly called tensors. Conversely, tensors of any order (including 0, 1 and 2) are linear maps, of the appropriate domain and codomain. When there is more that one underlying vector space, the order is not enough to characterise a tensor space: the full type needs to be specified, as in Section 3. (Yet this level of complexity won’t be exercised in this paper.)

2.2 Coordinate representations

In practice, the algebraic definitions are not easy to manipulate for concrete problems, thus one most commonly works with coordinate representations instead. (Our goal will be to break free of those eventually.) As a reminder, given a basis

![]() $\vec e_{i}$

, any vector

$\vec e_{i}$

, any vector

![]() $\vec x\in V$

can be uniquely expressed as

$\vec x\in V$

can be uniquely expressed as

![]() $\vec x = \sum _{i} x^i\triangleleft \vec e_{i}$

where each

$\vec x = \sum _{i} x^i\triangleleft \vec e_{i}$

where each

![]() $x^i $

coordinate is a scalar. In this way, given a basis

$x^i $

coordinate is a scalar. In this way, given a basis

![]() $\vec e_{i}$

, a vector space is isomorphic to its set of coordinate representations. Note that a superscript is used for the index of such coordinates. The general convention that governs whether one should write indices in low or high positions is explained in Section 2.3; for now, it is enough to know that they are indexing notations.

$\vec e_{i}$

, a vector space is isomorphic to its set of coordinate representations. Note that a superscript is used for the index of such coordinates. The general convention that governs whether one should write indices in low or high positions is explained in Section 2.3; for now, it is enough to know that they are indexing notations.

Like vectors, linear maps are also commonly manipulated as matrices of coefficients. For a linear map f from a vector space with basis

![]() $\vec {d_{i}}$

to a space with basis

$\vec {d_{i}}$

to a space with basis

![]() $\vec e_j$

, each column is given by the coefficients of

$\vec e_j$

, each column is given by the coefficients of

![]() $f(\vec {d_{i}})$

. Indeed, using

$f(\vec {d_{i}})$

. Indeed, using

![]() $F{_i}{^j}$

to denote the coefficients, we have:

$F{_i}{^j}$

to denote the coefficients, we have:

\begin{equation*} f(\vec x) = f\left(\sum _{i} x^i \triangleleft \vec {d_{i}}\right) = \sum _{i} x^i \triangleleft f(\vec {d_{i}}) = \sum _{i} x^i \triangleleft \left(\sum _j F{_i}{^j} \triangleleft \vec e_j\right) = \sum _j \left(\sum _i F{_i}{^j} x^i \right) \triangleleft \vec e_j\end{equation*}

\begin{equation*} f(\vec x) = f\left(\sum _{i} x^i \triangleleft \vec {d_{i}}\right) = \sum _{i} x^i \triangleleft f(\vec {d_{i}}) = \sum _{i} x^i \triangleleft \left(\sum _j F{_i}{^j} \triangleleft \vec e_j\right) = \sum _j \left(\sum _i F{_i}{^j} x^i \right) \triangleleft \vec e_j\end{equation*}

In general, the values of the matrix coefficients

![]() $F{_i}{^j}$

depend on the choice of bases

$F{_i}{^j}$

depend on the choice of bases

![]() $\vec {d_{i}}$

and

$\vec {d_{i}}$

and

![]() $\vec e_j$

, but to reduce the number of moving parts one usually works with a coherent set of bases.

$\vec e_j$

, but to reduce the number of moving parts one usually works with a coherent set of bases.

Coherent bases

Starting from an atomic vector space T, one can build a collection of more complicated tensor spaces using tensor product, dual, and the unit (the scalar field S). For coordinate representations each such space could, in general, have its own basis, but it is standard to work with a collection of coherent bases. Given a basis

![]() $\vec e_{i}$

for a finite-dimensional atomic vector space T, the coherent basis for

$\vec e_{i}$

for a finite-dimensional atomic vector space T, the coherent basis for

![]() $T^*$

is the set of covectors

$T^*$

is the set of covectors

![]() $\tilde e^j$

such that

$\tilde e^j$

such that

![]() $\tilde e^j(\vec e_{i}) = \delta_{i}^j$

. (It is usually called the dual basis.) Likewise, given two coherent bases

$\tilde e^j(\vec e_{i}) = \delta_{i}^j$

. (It is usually called the dual basis.) Likewise, given two coherent bases

![]() $\vec {d_{i}}$

and

$\vec {d_{i}}$

and

![]() $\vec e_{i}$

respectively for V and W, the coherent basis for

$\vec e_{i}$

respectively for V and W, the coherent basis for

![]() $V\otimes W$

is

$V\otimes W$

is

![]() $b_{i,j} = \phi(\vec {d_{i}},\vec e_j)$

, where

$b_{i,j} = \phi(\vec {d_{i}},\vec e_j)$

, where

![]() $\phi$

is given by Definition 5. Note that this basis is indexed by a pair. Accordingly, if the dimension of V is m and the dimension of W is n, the dimension of

$\phi$

is given by Definition 5. Note that this basis is indexed by a pair. Accordingly, if the dimension of V is m and the dimension of W is n, the dimension of

![]() $V\otimes W$

is

$V\otimes W$

is

![]() $m \times n$

. Additionally, re-associating tensor spaces do not change coherent bases (

$m \times n$

. Additionally, re-associating tensor spaces do not change coherent bases (

![]() $\vec e_{(i,j),k}$

is the same as

$\vec e_{(i,j),k}$

is the same as

![]() $\vec {d}_{i,(j,k)}$

, up to applying the corresponding associator). Finally, the scalar vector space has dimension one, and thus has a single base vector, which is coherently chosen to be the unit of the scalar field (the number

$\vec {d}_{i,(j,k)}$

, up to applying the corresponding associator). Finally, the scalar vector space has dimension one, and thus has a single base vector, which is coherently chosen to be the unit of the scalar field (the number

![]() $1 : S$

).

$1 : S$

).

Coordinate transformations

Assuming one basis

![]() $\vec e_j$

and another basis

$\vec e_j$

and another basis

![]() $\vec {d_{i}}$

for the same vector space V such that

$\vec {d_{i}}$

for the same vector space V such that

![]() $\vec {d_{i}} = \sum _j F{_i}{^j} \vec e_j$

, then the coordinates in basis

$\vec {d_{i}} = \sum _j F{_i}{^j} \vec e_j$

, then the coordinates in basis

![]() $\vec {d_{i}}$

for

$\vec {d_{i}}$

for

![]() $\vec x$

are

$\vec x$

are

![]() $\hat x^j = \sum _{i} F{_i}{^j} x^i $

. We say that the matrix F is the transformation matrix for V given the choice of bases made above, and denote it J(V). Then the transformation matrices for vector spaces built from an atomic space T using the coherent set of bases defined above are given by the following structural rules:

$\hat x^j = \sum _{i} F{_i}{^j} x^i $

. We say that the matrix F is the transformation matrix for V given the choice of bases made above, and denote it J(V). Then the transformation matrices for vector spaces built from an atomic space T using the coherent set of bases defined above are given by the following structural rules:

\begin{align*}J(V\otimes W)&= J(V)\otimes J(W)&\text{Kronecker product of matrices}\\J(V^*)&= J(V)^{-1}&\text{matrix inverse}\\J(S)&= 1&\text{scalar unit}\end{align*}

\begin{align*}J(V\otimes W)&= J(V)\otimes J(W)&\text{Kronecker product of matrices}\\J(V^*)&= J(V)^{-1}&\text{matrix inverse}\\J(S)&= 1&\text{scalar unit}\end{align*}

Furthermore, the matrix representation G of a linear map

![]() $g : V \!\longrightarrow\! W$

is transformed to:

$g : V \!\longrightarrow\! W$

is transformed to:

![]() $J(W) \cdot G \cdot J(V^*)$

, where

$J(W) \cdot G \cdot J(V^*)$

, where

![]() $(\cdot)$

is matrix multiplication. These are the “transformation rules” that Rowland & Weisstein (Reference Rowland and Weisstein2023) allude to in the above quote.

$(\cdot)$

is matrix multiplication. These are the “transformation rules” that Rowland & Weisstein (Reference Rowland and Weisstein2023) allude to in the above quote.

2.3 Einstein notation

The previous section showed how to deal with concrete matrices, using a concrete choice of bases. The next step is to manipulate symbolic expressions involving matrices. The language of such expressions (together with a couple of simple conventions) is colloquially referred to as Einstein notation.

In this notation, every index ranges over the dimensions of an atomic vector space.Footnote 4 Consequently, the total number of free (non repeated) indices indicates the order of a tensor expression in index notation. An index can be written as a subscript (and called a low index) or as a superscript (and called a high index).

The location (high or low) of the index is dictated by which coordinate transformation applies to it. That is, if a high index ranges over the dimensions of V, then J(V) applies, whereas

![]() $J(V^*)$

applies for a low index. Additionally, every reference to a tensor is fully saturated, in the sense that a symbolic tensor is always applied to as many indices as its order. Thus, for instance,

$J(V^*)$

applies for a low index. Additionally, every reference to a tensor is fully saturated, in the sense that a symbolic tensor is always applied to as many indices as its order. Thus, for instance,

![]() $x^i$

denote (the components of) a vector, and

$x^i$

denote (the components of) a vector, and

![]() $y_j$

denote (the components of) a covector. The expression

$y_j$

denote (the components of) a covector. The expression

![]() $t{_i}{^j}$

refers to (components of) a linear transformation of order (1,1). In the absence of contraction (see below), multiplication increases the order of tensors. For instance,

$t{_i}{^j}$

refers to (components of) a linear transformation of order (1,1). In the absence of contraction (see below), multiplication increases the order of tensors. For instance,

![]() $x^i\,y_j$

also has order (1,1). In general, if t and u are expressions denoting tensors of order m and n, respectively, then their product

$x^i\,y_j$

also has order (1,1). In general, if t and u are expressions denoting tensors of order m and n, respectively, then their product

![]() $t\,u$

denotes a tensor of order

$t\,u$

denotes a tensor of order

![]() $m+n$

.

$m+n$

.

Contraction

In Einstein notation, the convention is that, within a term, a repeated index is implicitly summed over. (In terms familiar to this journal: such indices are implicitly bound by a summation operator.) Because summation is a linear operator, within a term all the well-scoped locations of the summation operator are equivalent—so it makes a lot of sense to omit them. Additionally, when an index is repeated, it must be repeated exactly twice; once as a high index and once as a low index. Mentioning an index twice is called contraction. Viewing tensors as higher dimensional matrices of coefficients, contraction consists in summing coefficients along a diagonal. Therefore, a contraction reduces the order of the tensor by two.Footnote

5

The indices which are contracted are sometimes called “dummy” and those that are not contracted are called “live” (In terms familiar to the functional programming community, dummies are bound variables and live indices are free variables.) To be well-scoped, every term in a sum must use the same live indices. For instance, the expression

![]() $t{_l}{^j}u{_m}{^k}v{_i}{^l}{^m} + v{_i}{^j}{^k}$

denotes a tensor of order (1,2). Its live indices are

$t{_l}{^j}u{_m}{^k}v{_i}{^l}{^m} + v{_i}{^j}{^k}$

denotes a tensor of order (1,2). Its live indices are

![]() ${}_i,{}^j,{}^k$

, and the indices m and l are dummies.

${}_i,{}^j,{}^k$

, and the indices m and l are dummies.

At this stage, one can see the Einstein notation as a convenient way to notate expressions which manipulate coordinates of tensors. The high/low index convention makes it clear which transformations apply. Yet it may be mysterious why indices must be repeated exactly twice, and why (live) indices cannot be omitted from a term. The answer lies in the following observation. Even though the Einstein notation may originate as a convenient way to express coordinates, it really is intended to describe algebraic objects. The physicists Thorne & Blandford (Reference Thorne and Blandford2015) put it this way:

[we suggest to] momentarily think of [Einstein notation] as a relationship between components of tensors in a specific basis; then do a quick mind-flip and regard it quite differently, as a relationship between geometric, basis-independent tensors with the

indices playing the roles of slot names.

(A “slot” is a component of input or output tensor space.) The key of this “mind flip” is that live indices correspond to inputs (or outputs) of linear functions, and contraction corresponds to connecting inputs to outputs. The main contribution of this paper is to work out this connection in full as a pair of two edsls.

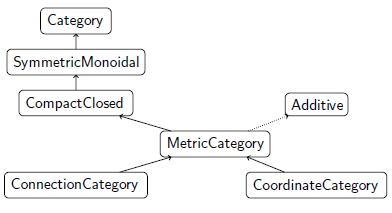

3 Categorical structures

The key concepts needed to understand the essence of Einstein notation are the categorical structures that tensors inhabit. Besides, these structures will be instrumental in our design: we will let the user of Albert write expressions which are (close to) Einstein notation, but they will be evaluated to morphisms in the appropriate category. The underlying category can then be specialised according to the application at hand.

The categorical approach consists in raising the abstraction level, and focusing on the ways that linear maps are combined to construct more complex ones. The first step is to view linear maps as morphisms of a category whose objects are vector spaces. We render the type of morphisms from

![]() $\mathsf{a}$

to

$\mathsf{a}$

to

![]() $\mathsf{b}$

as

$\mathsf{b}$

as

![]() $\mathsf{a}\,\mskip 1.0mu\overset{z}{\leadsto }\mskip 1.0mu\,\mathsf{b}$

, corresponding to

$\mathsf{a}\,\mskip 1.0mu\overset{z}{\leadsto }\mskip 1.0mu\,\mathsf{b}$

, corresponding to

![]() $z\mskip 3.0mu\mathsf{a}\mskip 3.0mu\mathsf{b}$

in Haskell code.

$z\mskip 3.0mu\mathsf{a}\mskip 3.0mu\mathsf{b}$

in Haskell code.

Vector spaces form a commutative monoid under tensor product. Hence, linear maps form a symmetric monoidal category, or smc, whose combinators are as follows.

In the above smc class definition, we follow the usual convention of using the same symbol

![]() ${(}\mskip 0.0mu{\otimes }\mskip 0.0mu{)}$

both for the product of objects and the parallel composition of morphisms. In fact, this morphism operator is also called a tensor product in the literature. An smc comes with a number of laws, which are both unsurprising and extensively documented elsewhere (Barr & Wells, Reference Barr and Wells1999). We omit them here. The operations

${(}\mskip 0.0mu{\otimes }\mskip 0.0mu{)}$

both for the product of objects and the parallel composition of morphisms. In fact, this morphism operator is also called a tensor product in the literature. An smc comes with a number of laws, which are both unsurprising and extensively documented elsewhere (Barr & Wells, Reference Barr and Wells1999). We omit them here. The operations

![]() $\sigma $

(swap),

$\sigma $

(swap),

![]() $\alpha$

,

$\alpha$

,

![]() $\bar{\alpha}$

(associators) witness the commutative monoidal structure which tensor products possess. The unit of the tensor product, written

$\bar{\alpha}$

(associators) witness the commutative monoidal structure which tensor products possess. The unit of the tensor product, written

![]() $\mathbf{1}$

, is the scalar vector space (

$\mathbf{1}$

, is the scalar vector space (

![]() $\mathsf{S}$

), which is witnessed by the isomorphisms

$\mathsf{S}$

), which is witnessed by the isomorphisms

![]() $\rho$

and

$\rho$

and

![]() $\bar{\rho}$

, called unitors.

$\bar{\rho}$

, called unitors.

As an example, take the morphism

![]() $\mathsf{ex}$

=

$\mathsf{ex}$

=

![]() ${(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\sigma \mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu\bar{\alpha}\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\alpha\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\sigma \mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu\bar{\alpha}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu\alpha\mskip 3.0mu{\circ }\mskip 3.0mu\alpha$

. It is polymorphic, but has in particular type

${(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\sigma \mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu\bar{\alpha}\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\alpha\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\sigma \mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu\bar{\alpha}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu\alpha\mskip 3.0mu{\circ }\mskip 3.0mu\alpha$

. It is polymorphic, but has in particular type

![]() ${(}\mskip 0.0mu{(}\mskip 0.0mu\mathsf{T}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\mskip 0.0mu{)}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\mskip 0.0mu{)}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\,\mskip 1.0mu\overset{z}{\leadsto }\mskip 1.0mu\,{(}\mskip 0.0mu\mathsf{T}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\mskip 0.0mu{)}\mskip 3.0mu{\otimes }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{T}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\mskip 0.0mu{)}$

. Its input and output orders are both 4, for a total order of (4,4) or 8. It is written

${(}\mskip 0.0mu{(}\mskip 0.0mu\mathsf{T}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\mskip 0.0mu{)}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\mskip 0.0mu{)}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\,\mskip 1.0mu\overset{z}{\leadsto }\mskip 1.0mu\,{(}\mskip 0.0mu\mathsf{T}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\mskip 0.0mu{)}\mskip 3.0mu{\otimes }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{T}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\mskip 0.0mu{)}$

. Its input and output orders are both 4, for a total order of (4,4) or 8. It is written

![]() $\delta{_i}{^m}\delta{_j}{^p}\delta{_k}{^n}\delta{_l}{^o}$

in Einstein notation, which makes more explicit the connection between inputs and output. An even more explicit notation is its rendering as a diagram:

$\delta{_i}{^m}\delta{_j}{^p}\delta{_k}{^n}\delta{_l}{^o}$

in Einstein notation, which makes more explicit the connection between inputs and output. An even more explicit notation is its rendering as a diagram:  .

.

This diagram notation can be generalised to all morphisms in a smc and is known as string diagrams. It is a two-dimensional instance of the abstract categorical structure. It is also fully abstract, in the sense that every diagram can be mapped to a unique morphism in the underlying smc. Figures 2 and 3 show several of the atomic diagrams which make up smcs. The guide for this notation is that each morphism is represented by a network of wires. Wires are drawn in a way that makes it clear which inputs are connected to which outputs. Because unit objects can be added and dropped at will (using

![]() $\rho$

and

$\rho$

and

![]() $\bar{\rho}$

), under some conventions the corresponding wires are not drawn at all. Here we choose to draw them as grey lines.

$\bar{\rho}$

), under some conventions the corresponding wires are not drawn at all. Here we choose to draw them as grey lines.

Fig. 2: Diagram, categorical and index notations for identity and composition.

Fig. 3: Diagram, categorical, and Einstein notations for morphisms of symmetric monoidal categories. They are in general polymorphic, but we display them here as acting on an atomic vector space T, or the simplest allowable combination thereof (see the last row in the figure for the monomorphic type of the respective morphisms). The morphisms

![]() $\bar{\alpha}$

and

$\bar{\alpha}$

and

![]() $\bar{\rho}$

are not shown, but are drawn symmetrically to

$\bar{\rho}$

are not shown, but are drawn symmetrically to

![]() $\alpha$

and

$\alpha$

and

![]() $\rho$

, respectively.

$\rho$

, respectively.

The diagram notation is defined in such a way that morphisms that are equal under the category laws have topologically equivalent diagram representations (Selinger, Reference Selinger2011). That is, if we can deform one diagram to another without cutting wires, then they are equivalent. We can illustrate this kind of topological reasoning with the following simple example. Assuming an abstract tensor

![]() $\mathsf{u}\mskip 3.0mu{:}\mskip 3.0mu\mathsf{T}\,\mskip 1.0mu\overset{z}{\leadsto }\mskip 1.0mu\,\mathsf{T}$

, one can check that

$\mathsf{u}\mskip 3.0mu{:}\mskip 3.0mu\mathsf{T}\,\mskip 1.0mu\overset{z}{\leadsto }\mskip 1.0mu\,\mathsf{T}$

, one can check that

![]() $\sigma \mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{u}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu\sigma \mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{u}\mskip 0.0mu{)}$

is equivalent to

$\sigma \mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{u}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu\sigma \mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{u}\mskip 0.0mu{)}$

is equivalent to

![]() $\mathsf{u}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{u}$

by applying a number of algebraic laws, but this is an error-prone process. If we first convert the morphisms to diagram form, we need to check

$\mathsf{u}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{u}$

by applying a number of algebraic laws, but this is an error-prone process. If we first convert the morphisms to diagram form, we need to check ![]() which is a matter of repositioning the second box.Footnote 6

which is a matter of repositioning the second box.Footnote 6

At this point, a reader familiar with programming languages might be tempted to assume that

![]() $\mathsf{V}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{W}$

is like a pair of

$\mathsf{V}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{W}$

is like a pair of

![]() $\mathsf{V}$

and

$\mathsf{V}$

and

![]() $\mathsf{W}$

. That is, that tensors would not only form a category, but even a Cartesian category. This is not the case: tensors are not equipped with projections nor duplications. This observation justifies the fact that contraction in Einstein notation must involve exactly two indices. Indeed, contraction corresponds to connecting loose wires in the diagram notation, and because we do not have a Cartesian category, only two loose wires can be connected (to make a new continuous wire).

$\mathsf{W}$

. That is, that tensors would not only form a category, but even a Cartesian category. This is not the case: tensors are not equipped with projections nor duplications. This observation justifies the fact that contraction in Einstein notation must involve exactly two indices. Indeed, contraction corresponds to connecting loose wires in the diagram notation, and because we do not have a Cartesian category, only two loose wires can be connected (to make a new continuous wire).

Addition and scaling

As we saw, tensors of the same type (same domain and codomain) form themselves a vector space, and as such can be scaled and added together. The corresponding categorical structure is called an additive category. Thus, every tensor category z will satisfy the

![]() $\mathsf{Additive}$

constraint:

$\mathsf{Additive}$

constraint:

Recalling the definition of

![]() $\mathsf{VectorSpace}$

from Section 2.1,

$\mathsf{VectorSpace}$

from Section 2.1,

![]() $\mathsf{Additive}$

implies that we have the following two operations for every

$\mathsf{Additive}$

implies that we have the following two operations for every

![]() $\mathsf{a}$

and

$\mathsf{a}$

and

![]() $\mathsf{b}$

:

$\mathsf{b}$

:

An additive category requires that composition

![]() ${(}\mskip 0.0mu{\circ }\mskip 0.0mu{)}$

and tensor products

${(}\mskip 0.0mu{\circ }\mskip 0.0mu{)}$

and tensor products

![]() ${(}\mskip 0.0mu{\otimes }\mskip 0.0mu{)}$

are bilinear. In full:

${(}\mskip 0.0mu{\otimes }\mskip 0.0mu{)}$

are bilinear. In full:

We note in passing that there is no obviously good way to represent addition using diagrams. If diagrams should be added together we write them side by side with a plus sign in between.

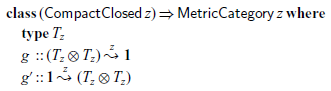

Compact Closed Category There remains to capture the relationship between a vector space V and its associated covector space

![]() $V^*$

. This is done abstractly using a compact closed category structure (Selinger, Reference Selinger2011). In a compact closed category, every object has a dual, and duals generalise the notion of co-vector space.

$V^*$

. This is done abstractly using a compact closed category structure (Selinger, Reference Selinger2011). In a compact closed category, every object has a dual, and duals generalise the notion of co-vector space.

In the tensor instance,

![]() $\eta$

and

$\eta$

and

![]() $\epsilon$

produce and consume correlated products of vectors and covectors. While the algebraic view is very abstract and can be hard to grasp (it is for the authors), the diagrams help. Compact closed categories are required to satisfy the so-called snake laws: one is

$\epsilon$

produce and consume correlated products of vectors and covectors. While the algebraic view is very abstract and can be hard to grasp (it is for the authors), the diagrams help. Compact closed categories are required to satisfy the so-called snake laws: one is

![]() ${(}\mskip 0.0mu\epsilon\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu\bar{\alpha}\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\eta\mskip 0.0mu{)}$

=

${(}\mskip 0.0mu\epsilon\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu\bar{\alpha}\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\eta\mskip 0.0mu{)}$

=

![]() $\sigma $

, or

$\sigma $

, or ![]() , and the other is symmetrical. These laws ensure that the object

, and the other is symmetrical. These laws ensure that the object

![]() ${\mathsf{a}^*}$

is just like the object

${\mathsf{a}^*}$

is just like the object

![]() $\mathsf{a}$

, but travelling backwards (input and output roles are exchanged). To reflect this, in the diagrammatic representation of

$\mathsf{a}$

, but travelling backwards (input and output roles are exchanged). To reflect this, in the diagrammatic representation of

![]() $\eta$

and

$\eta$

and

![]() $\epsilon$

, we indicate the

$\epsilon$

, we indicate the

![]() ${\mathsf{a}^*}$

object with a left-pointing arrow, as shown in Figure 4(a). Indeed, there is no difference between an input vector space

${\mathsf{a}^*}$

object with a left-pointing arrow, as shown in Figure 4(a). Indeed, there is no difference between an input vector space

![]() $\mathsf{a}$

and an output vector space

$\mathsf{a}$

and an output vector space

![]() ${\mathsf{a}^*}$

. Accordingly, in the Einstein notation, no difference is being made between inputs and outputs. Instead only co- or contra-variance is reflected notationally. Consequently neither

${\mathsf{a}^*}$

. Accordingly, in the Einstein notation, no difference is being made between inputs and outputs. Instead only co- or contra-variance is reflected notationally. Consequently neither

![]() $\eta$

nor

$\eta$

nor

![]() $\epsilon$

are visible in the Einstein notation, except perhaps as a Kronecker

$\epsilon$

are visible in the Einstein notation, except perhaps as a Kronecker

![]() $\delta$

(see Figure 4(a)). For instance, the morphism

$\delta$

(see Figure 4(a)). For instance, the morphism

![]() $\epsilon\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\bar{\rho}\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\epsilon\mskip 0.0mu{)}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\alpha\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu{(}\mskip 0.0mu\sigma \mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}$

, or

$\epsilon\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\bar{\rho}\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\mathsf{id}\mskip 3.0mu{\otimes }\mskip 3.0mu\epsilon\mskip 0.0mu{)}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu\alpha\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}\mskip 3.0mu{\circ }\mskip 3.0mu{(}\mskip 0.0mu{(}\mskip 0.0mu\sigma \mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{id}\mskip 0.0mu{)}$

, or  in diagram notation, is written

in diagram notation, is written

![]() $\delta{_i}{^k}\delta{_j}{^l}$

in Einstein notation. Figure 4(b) shows how an input object

$\delta{_i}{^k}\delta{_j}{^l}$

in Einstein notation. Figure 4(b) shows how an input object

![]() $\mathsf{a}$

(of any morphism) can be converted to an output

$\mathsf{a}$

(of any morphism) can be converted to an output

![]() ${\mathsf{a}^*}$

, and vice versa. One can even combine both ideas and connect the output of a morphism

${\mathsf{a}^*}$

, and vice versa. One can even combine both ideas and connect the output of a morphism

![]() $\mathsf{t}$

back to its input. By doing so, one constructs the trace of

$\mathsf{t}$

back to its input. By doing so, one constructs the trace of

![]() $\mathsf{t}$

.Footnote 7

$\mathsf{t}$

.Footnote 7

Fig. 4: Illustration of compact closed categories in various notations. Note that Einstein notation does not change when bending connections using

![]() $\eta$

or

$\eta$

or

![]() $\epsilon$

, though in the third example, the new connection is notated by repeated use of the index.

$\epsilon$

, though in the third example, the new connection is notated by repeated use of the index.

We now have a complete description of the tensor combinators—the core of Roger. Unfortunately, in practice, it is inconvenient to use as such. Indeed, most of the tensor expressions encountered in practice consists of building a network of connections between atomic blocks. Unfortunately, using the categorical combinators for this purpose is tedious. For instance, contracting two input indices is particularly tedious in the point-free notation, because it is realised as a composition with

![]() $\eta$

or

$\eta$

or

![]() $\epsilon$

with a large number of smc combinators to select the appropriate dimensions to contract. It is akin to programming with SKI combinators instead of using the lambda calculus. Using variable names for indices, like in the Einstein notation, would be much more convenient. We will get there in Section 4.

$\epsilon$

with a large number of smc combinators to select the appropriate dimensions to contract. It is akin to programming with SKI combinators instead of using the lambda calculus. Using variable names for indices, like in the Einstein notation, would be much more convenient. We will get there in Section 4.

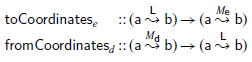

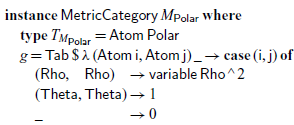

3.1 Matrix instances

An important instance of the compact closed category structure is the category of matrices of coefficients, which we encountered in Section 2.2. In our host functional language, we define them as a function from (both input and output) indices to coefficients (of type

![]() $\mathsf{S}$

):Footnote

8

$\mathsf{S}$

):Footnote

8

To emphasise the dependency on the basis, we use a subscript when referring to a specific matrix category morphism, as in

![]() $M_{\mathsf{b}}$

where

$M_{\mathsf{b}}$

where

![]() $\mathsf{b}$

is a reference to the choice of basis. In the Haskell implementation, this basis is represented by a phantom type parameter.

$\mathsf{b}$

is a reference to the choice of basis. In the Haskell implementation, this basis is represented by a phantom type parameter.

The identity morphism is the identity matrix, and composition is matrix multiplication:

In this instance, the objects are identified with sets that index the bases of the vector spaces that they stand for. These sets are assumed to be enumerable and bounded (so we have access to their

![]() $\mathsf{inhabitants}$

) and we can compare indices for equality.Footnote 9 The instance of the smc structure for matrix representations in coherent bases is then:

$\mathsf{inhabitants}$

) and we can compare indices for equality.Footnote 9 The instance of the smc structure for matrix representations in coherent bases is then:

Because objects index the bases of the corresponding vector spaces, tensor products are represented as usual pairs. In the above definition, the right-hand sides are Haskell code. This means that the asterisk operator

![]() ${(}\mskip 0.0mu{*}\mskip 0.0mu{)}$

denotes multiplication between scalars as components of matrices. In contrast, the operator

${(}\mskip 0.0mu{*}\mskip 0.0mu{)}$

denotes multiplication between scalars as components of matrices. In contrast, the operator

![]() $(\star)$

defined in Section 4 denotes multiplication between abstract scalar (order-0) tensor expressions (independent of the chosen tensor representation).

$(\star)$

defined in Section 4 denotes multiplication between abstract scalar (order-0) tensor expressions (independent of the chosen tensor representation).

With the coherent choice of bases,

![]() $\eta$

and

$\eta$

and

![]() $\epsilon$

are simply realised as the identity.

$\epsilon$

are simply realised as the identity.

The object

![]() ${\mathsf{a}^*}$

has the same dimensionality as

${\mathsf{a}^*}$

has the same dimensionality as

![]() $\mathsf{a}$

, so in our Haskell encoding we use

$\mathsf{a}$

, so in our Haskell encoding we use

![]() $\mathbf{newtype}$

for it,

$\mathbf{newtype}$

for it, ![]() . For concision (and following tradition), we use an asterisk as a shorthand, so

. For concision (and following tradition), we use an asterisk as a shorthand, so

![]() ${\mathsf{a}^*}$

stands for the type

${\mathsf{a}^*}$

stands for the type

![]() $\mathsf{DualObject}\mskip 3.0mu\mathsf{a}$

.

$\mathsf{DualObject}\mskip 3.0mu\mathsf{a}$

.

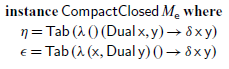

Coordinate representation functors. It is worth mentioning that the transformation functions between linear maps

![]() $\mathsf{L}$

and their representations

$\mathsf{L}$

and their representations

![]() $M_{\mathsf{e}}$

in a given basis

$M_{\mathsf{e}}$

in a given basis

![]() $\mathsf{e}$

are a pair of functors which are the identity on objects and just change the morphisms:

$\mathsf{e}$

are a pair of functors which are the identity on objects and just change the morphisms:

Furthermore, this pair defines an isomorphism between the respective compact-closed categories. Therefore, even though different representations form different categories, one can always transform one to another. The transformation between systems of coordinates, usually presented using transformation matrices (see Section 2.2) can be understood as the composition of

![]() $\mathsf{fromCoordinates}$

in the source basis, and

$\mathsf{fromCoordinates}$

in the source basis, and

![]() $\mathsf{toCoordinates}$

in the target basis:

$\mathsf{toCoordinates}$

in the target basis:

4 Design of

${\mathbf{A}\scriptstyle \mathbf{LBERT}}$

${\mathbf{A}\scriptstyle \mathbf{LBERT}}$

In this section, we provide the design of Albert. We intend our design to match Einstein notation as closely as possible. This aim is achieved except for the following two differences:

1. Indices can range over the dimensions of any space (not just atomic vector spacesFootnote 10 )

2. Indices are always explicitly bound

The first difference is motivated by polymorphism considerations: we make many functions polymorphic over the vector space that they manipulate, and as a consequence, the corresponding indices can range over the dimensions of tensor or scalar vector spaces. For instance, when we write

![]() $\mathsf{delta}\mskip 3.0mu_{\mathsf{i}}\mskip 3.0mu^{\mathsf{j}}$

, indices (

$\mathsf{delta}\mskip 3.0mu_{\mathsf{i}}\mskip 3.0mu^{\mathsf{j}}$

, indices (

![]() $_{\mathsf{i}}$

,

$_{\mathsf{i}}$

,

![]() $^{\mathsf{j}}$

) may range over order-2 tensor spaces, in which case the corresponding Einstein notation would be the product of two Kronecker deltas.

$^{\mathsf{j}}$

) may range over order-2 tensor spaces, in which case the corresponding Einstein notation would be the product of two Kronecker deltas.

The second difference is motivated by the need to follow the functional programming conventions, which is required to embed the dsl in Haskell, or any functional language without macros. Besides, to avoid confusion, in Albert we choose names in all letters for combinators. For instance, when the conventional notation is the Greek letter

![]() $\delta$

, we write

$\delta$

, we write

![]() $\mathsf{delta}$

in Albert.

$\mathsf{delta}$

in Albert.

The principles and most of the interface of Albert are presented in this section. The tensor-field specific functions are discussed in Section 5. The complete interface is summarised in Figures 9 and 10.

Fig. 9: Syntax of the index language of Albert, as types of combinators.

Fig. 10: Syntax of the expression sub-language of Albert, as types of combinators. We repeat christoffel symbol and metric here even though they can be defined by the user as the embedding of the corresponding Roger primitives.

Types All types are parameterised by z, the category which tensors inhabit. The type of an index ranging over the dimensions of vector space

![]() $\mathsf{a}$

is

$\mathsf{a}$

is

![]() $\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu\mathsf{a}$

(think of

$\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu\mathsf{a}$

(think of

![]() $\mathsf{P}$

as “port” or “end of a wire carrying

$\mathsf{P}$

as “port” or “end of a wire carrying

![]() $\mathsf{a}$

” in the diagrams), where the variable

$\mathsf{a}$

” in the diagrams), where the variable

![]() $\mathsf{r}$

is a technical (scoping) device (made precise in Section 7). For the purpose of using Albert, it suffices to know that this variable

$\mathsf{r}$

is a technical (scoping) device (made precise in Section 7). For the purpose of using Albert, it suffices to know that this variable

![]() $\mathsf{r}$

should be consistent throughout any given expression.

$\mathsf{r}$

should be consistent throughout any given expression.

The type of expressions is

![]() $\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

. Expressions of this type closely match expressions in Einstein notation. In particular, expressions with several free index variables correspond to higher-order tensors. For example, assuming two free index variables

$\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

. Expressions of this type closely match expressions in Einstein notation. In particular, expressions with several free index variables correspond to higher-order tensors. For example, assuming two free index variables

![]() $_{\mathsf{i}}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu\mathsf{T}$

and

$_{\mathsf{i}}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu\mathsf{T}$

and

![]() $^{\mathsf{j}}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu{\mathsf{T}^*}$

, then

$^{\mathsf{j}}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu{\mathsf{T}^*}$

, then

![]() $\mathsf{w}\mskip 3.0mu_{\mathsf{i}}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

represents a covector over

$\mathsf{w}\mskip 3.0mu_{\mathsf{i}}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

represents a covector over

![]() $\mathsf{T}$

;

$\mathsf{T}$

;

![]() $\mathsf{v}\mskip 3.0mu^{\mathsf{j}}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

represents a vector in

$\mathsf{v}\mskip 3.0mu^{\mathsf{j}}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

represents a vector in

![]() $\mathsf{T}$

; and

$\mathsf{T}$

; and

![]() $\mathsf{t}\mskip 3.0mu_{\mathsf{i}}\mskip 3.0mu^{\mathsf{j}}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

represents a linear map from

$\mathsf{t}\mskip 3.0mu_{\mathsf{i}}\mskip 3.0mu^{\mathsf{j}}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

represents a linear map from

![]() $\mathsf{T}$

to

$\mathsf{T}$

to

![]() $\mathsf{T}$

. (Why this is so will become clear when we present the semantics of tensors, which we will do before the end of the section.)

$\mathsf{T}$

. (Why this is so will become clear when we present the semantics of tensors, which we will do before the end of the section.)

In sum, exactly as in Einstein notation, our tensor expressions define and manipulate tensors as (abstract) scalar-valued expressions depending on indices. Likewise, the order of the underlying tensor is the sum of the order of the free index variables occurring in it. Even though we present index variables as either super- or subscripts, they are just regular variable names.

Because the underlying category z is not Cartesian, every input must be connected to a single output, and vice-versa. Hence, index variables occur exactly once in each term. We enforce this restriction by using (and binding) index variables linearly.Footnote

11

Accordingly, the types of the variables

![]() $\mathsf{v}\mskip 0.0mu{,}\mskip 3.0mu\mathsf{w}\mskip 0.0mu{,}\mskip 3.0mu\mathsf{t}$

mentioned above involve (type-)linear functions. For instance

$\mathsf{v}\mskip 0.0mu{,}\mskip 3.0mu\mathsf{w}\mskip 0.0mu{,}\mskip 3.0mu\mathsf{t}$

mentioned above involve (type-)linear functions. For instance

![]() $\mathsf{w}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu\mathsf{T}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

is a covector over

$\mathsf{w}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu\mathsf{T}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

is a covector over

![]() $\mathsf{T}$

;

$\mathsf{T}$

;

![]() $\mathsf{v}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu{\mathsf{T}^*}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

is a vector in

$\mathsf{v}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu{\mathsf{T}^*}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

is a vector in

![]() $\mathsf{T}$

; and

$\mathsf{T}$

; and

![]() $\mathsf{t}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu\mathsf{T}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu{\mathsf{T}^*}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

is a linear map over

$\mathsf{t}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu\mathsf{T}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu{\mathsf{T}^*}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

is a linear map over

![]() $\mathsf{T}$

. This means that Albert uses a higher-order abstract syntax, that is, the abstraction mechanism of the host language provides us with the means to abstract over index variables. The order of a tensor variable is given by taking the sum of the order of the index parameters in its type. So, for instance,

$\mathsf{T}$

. This means that Albert uses a higher-order abstract syntax, that is, the abstraction mechanism of the host language provides us with the means to abstract over index variables. The order of a tensor variable is given by taking the sum of the order of the index parameters in its type. So, for instance,

![]() $\mathsf{delta}$

has order 2m if its type argument

$\mathsf{delta}$

has order 2m if its type argument

![]() $\mathsf{a}$

stands for a vector space of order m:

$\mathsf{a}$

stands for a vector space of order m:

Tensor embedding, evaluation, and index manipulation Next, we turn our attention to embedding Roger into Albert. This is done by means of the following combinators:

The special case of a vector, where the target object is the unit, is common enough that it deserves a function of its own. In the general case, we take advantage of the compact-closed structure, and turn the output object (

![]() $\mathsf{b}$

) of the morphism into an input of an index over the dual object (

$\mathsf{b}$

) of the morphism into an input of an index over the dual object (

![]() ${\mathsf{b}^*}$

).

${\mathsf{b}^*}$

).

The converse operation consists in evaluating a tensor expression into a morphism:

The fact that we can move between these two dsls freely (using embedding and evaluation) means we can combine their strengths. In both embedding and evaluation, neither

![]() $\mathsf{a}$

nor

$\mathsf{a}$

nor

![]() $\mathsf{b}$

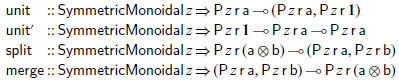

need be atomic types. To match the convention of Einstein notation, the user of Albert can break down the corresponding indices into their components after embedding, or conversely combine components before evaluation. Likewise, unit objects might need to be introduced or discarded. The interface for performing such operations is provided in the form of the following four combinators:

$\mathsf{b}$

need be atomic types. To match the convention of Einstein notation, the user of Albert can break down the corresponding indices into their components after embedding, or conversely combine components before evaluation. Likewise, unit objects might need to be introduced or discarded. The interface for performing such operations is provided in the form of the following four combinators:

To sum up, when z is a smc, the

![]() $\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

type transformer defines a homomorphism between the monoid of (linear) Haskell pairs and that of tensor products of the category z. As an illustration, a function

$\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

type transformer defines a homomorphism between the monoid of (linear) Haskell pairs and that of tensor products of the category z. As an illustration, a function

![]() $\mathsf{t}$

of type

$\mathsf{t}$

of type

![]() $\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu{(}\mskip 0.0mu\mathsf{a}\mskip 3.0mu{\otimes }\mskip 3.0mu{\mathsf{b}^*}\mskip 0.0mu{)}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

can be curried to

$\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu{(}\mskip 0.0mu\mathsf{a}\mskip 3.0mu{\otimes }\mskip 3.0mu{\mathsf{b}^*}\mskip 0.0mu{)}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

can be curried to

![]() $\mathsf{t'}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu\mathsf{a}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu{\mathsf{b}^*}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

. When using

$\mathsf{t'}\mskip 3.0mu{::}\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu\mathsf{a}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{P}\mskip 3.0muz\mskip 3.0mu\mathsf{r}\mskip 3.0mu{\mathsf{b}^*}\mskip 3.0mu{\multimap }\mskip 3.0mu\mathsf{R}\mskip 3.0muz\mskip 3.0mu\mathsf{r}$

. When using

![]() $\mathsf{t'}$

, each index is its own variable, closely matching Einstein notation.

$\mathsf{t'}$

, each index is its own variable, closely matching Einstein notation.

Multiplication and contraction

Another pervasive operation in Einstein notation is multiplication. In Albert, we use a multiplication operator with a linear type:

According to the typing rules of the linear function types, the occurrences of variables are accumulated in a function call. This way, the order of the product

![]() $\mathsf{t}(\star)\mathsf{u}$

is the sum of the orders of

$\mathsf{t}(\star)\mathsf{u}$

is the sum of the orders of

![]() $\mathsf{t}$

and

$\mathsf{t}$

and

![]() $\mathsf{u}$

. Contraction is realised by the following combinator.

$\mathsf{u}$

. Contraction is realised by the following combinator.

There are a couple of contrasting points when compared to the Einstein notation. First, we bind index variables explicitly, and thus we use an explicit contraction combinator. Indeed, while in Einstein notation indices are not explicitly bound, this liberty cannot be taken in an edsl based on a lambda calculus. Second, we consider the high and low indices involved in the contraction to be separate variables. Indeed, in Einstein notation each version of the index (high or low) must occur exactly once, and thus making them separate linearly bound variables is natural. One can think of it as the contraction creating a wire, with each end of the wire bound to a separate name. Nonetheless, the convention to use the same variable name in different positions is a convenient one. We recover it in this paper by a typographical trick: we use the same Latin letter for both indices and make the position as sub- or superscript integral to variable names. (This is purely a matter of convention and users of Albert are free to use whichever variable names they prefer.) Therefore, for instance, in Albert the composition of two linear transformations

![]() $\mathsf{t}$

and

$\mathsf{t}$

and

![]() $\mathsf{u}$

, as shown in Figure 2, is realised as

$\mathsf{u}$

, as shown in Figure 2, is realised as ![]() .

.

Addition and zero In Einstein notation, one can use the addition operator as if it were the point-wise addition of each of the components, for instance

![]() $t_{i}^{\,j} + u_{i}^{\,j}$

. Note that the live indices are used in each of the operands of the sum, thus repeated in the whole expression. This means that the following linear type for the sum operator would be incorrect:

$t_{i}^{\,j} + u_{i}^{\,j}$

. Note that the live indices are used in each of the operands of the sum, thus repeated in the whole expression. This means that the following linear type for the sum operator would be incorrect:

This is because in the expression

![]() $\mathsf{plus}_{wrong}\mskip 3.0mu{(}\mskip 0.0mu\mathsf{t}\mskip 3.0mu_{\mathsf{i}}\mskip 3.0mu^{\mathsf{j}}\mskip 0.0mu{)}\mskip 3.0mu{(}\mskip 0.0mu\mathsf{u}\mskip 3.0mu_{\mathsf{i}}\mskip 3.0mu^{\mathsf{j}}\mskip 0.0mu{)}$

, both

$\mathsf{plus}_{wrong}\mskip 3.0mu{(}\mskip 0.0mu\mathsf{t}\mskip 3.0mu_{\mathsf{i}}\mskip 3.0mu^{\mathsf{j}}\mskip 0.0mu{)}\mskip 3.0mu{(}\mskip 0.0mu\mathsf{u}\mskip 3.0mu_{\mathsf{i}}\mskip 3.0mu^{\mathsf{j}}\mskip 0.0mu{)}$

, both

![]() $\mathsf{i}$

and

$\mathsf{i}$

and

![]() $\mathsf{j}$

occur twice, while the type would require indices to be split between the left and right operand. Thus, we must use another type. We settle on the following one:

$\mathsf{j}$

occur twice, while the type would require indices to be split between the left and right operand. Thus, we must use another type. We settle on the following one:

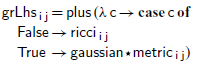

This type allows to code

![]() $t_{i}^{\,j} + u_{i}^{\,j}$

as follows:Footnote 12

$t_{i}^{\,j} + u_{i}^{\,j}$

as follows:Footnote 12

The above is well typed. Indeed, 1. the argument of

![]() $\mathsf{plus}$

is type-linear, so any use of indices in its body is considered type-linear; and 2. only one branch of a

$\mathsf{plus}$

is type-linear, so any use of indices in its body is considered type-linear; and 2. only one branch of a

![]() $\mathbf{case}$

is considered to be run, and therefore the same indices can (and must) be used in all the branches. The fact that only one branch is run is counter-intuitive because the semantics depends on both of them. We explain our solution to this apparent contradiction in Section 7.2.

$\mathbf{case}$

is considered to be run, and therefore the same indices can (and must) be used in all the branches. The fact that only one branch is run is counter-intuitive because the semantics depends on both of them. We explain our solution to this apparent contradiction in Section 7.2.

Conversely, there is a zero tensor of every possible order. Thus, we have a zero combinator with an index argument ranging over an arbitrary space:

The scaling operator

![]() ${(}\mskip 0.0mu\triangleleft \mskip 0.0mu{)}$

underpins non-zero constants, with no particular difficulty.

${(}\mskip 0.0mu\triangleleft \mskip 0.0mu{)}$

underpins non-zero constants, with no particular difficulty.

With the primitives of additive categories, one can construct the tensor

![]() $\mathsf{antisym}\mskip 3.0mu{=}$

$\mathsf{antisym}\mskip 3.0mu{=}$

![]() $\mathsf{id}\mskip 3.0mu{-}\mskip 3.0mu\sigma $

::

$\mathsf{id}\mskip 3.0mu{-}\mskip 3.0mu\sigma $

::

![]() $\mathsf{T}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\,\mskip 1.0mu\overset{z}{\leadsto }\mskip 1.0mu\,\mathsf{T}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}$

. This tensor can be rendered graphically as the difference

$\mathsf{T}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}\,\mskip 1.0mu\overset{z}{\leadsto }\mskip 1.0mu\,\mathsf{T}\mskip 3.0mu{\otimes }\mskip 3.0mu\mathsf{T}$

. This tensor can be rendered graphically as the difference ![]() , but it is useful enough to receive the special notation

, but it is useful enough to receive the special notation ![]() . Indeed, composing it with an arbitrary tensor gives its antisymmetric part with respect to the two connected indices.Footnote 13

. Indeed, composing it with an arbitrary tensor gives its antisymmetric part with respect to the two connected indices.Footnote 13

5 Tensor calculus: Fields and their derivatives

We have up to now worked with tensors as elements of certain vector spaces, but to further illustrate the capabilities of Albert, we apply it to tensor calculus, starting with the notion of fields.

5.1 Tensor fields

In this context a field means that a different value is associated with every position on a given manifold.Footnote

14

We denote the position parameter by

![]() $\mathbf X$

. Hence, the scalars (from Definition 1) are no longer just real numbers, but rather real-valued expressions depending on

$\mathbf X$

. Hence, the scalars (from Definition 1) are no longer just real numbers, but rather real-valued expressions depending on

![]() $\mathbf X$

.Footnote

15

For instance,

$\mathbf X$

.Footnote

15

For instance,

![]() $\mathbf X$

could be a position on the surface of the earth, and a scalar field could be the temperature at each such point.

$\mathbf X$

could be a position on the surface of the earth, and a scalar field could be the temperature at each such point.

A vector field associates a vector with every position; for instance, the wind direction. The perhaps surprising aspect is that each of these vectors may inhabit a different, local, vector space, which can be thought of as tangent to the manifold at the considered point. So in our example, we assumed that the wind is parallel to the earth surface. Hereafter, we assume such a local space for each category z, and call it

![]() $T_{z}$

, leaving the dependency on position

$T_{z}$

, leaving the dependency on position

![]() $\mathbf X$

implicit. Even though in the typical case the local vector space is different at each position, it keeps the same dimensionality. Therefore, as an object, it is independent of

$\mathbf X$

implicit. Even though in the typical case the local vector space is different at each position, it keeps the same dimensionality. Therefore, as an object, it is independent of

![]() $\mathbf X$

. In Haskell terms,

$\mathbf X$

. In Haskell terms,

![]() $T_{z}$

is an associated type; see Section 5.2.

$T_{z}$

is an associated type; see Section 5.2.

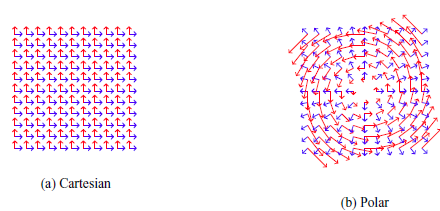

When we deal with matrix representations and want to perform computations with them, we need a way to identify the position

![]() $\mathbf X$

. For a general manifold, this is difficult to do, but we will restrict our scope to the case where a single coordinate system is sufficient. We also need a basis at each position, which gives a meaning to the entries in a tensor matrix representation (the meaning of these coordinates change with position). Furthermore, different choices of coordinate system are possible for the same manifold. The coordinates used to identify the position will be referred to as the global coordinates, while the coordinates of a tensor will be referred to as local coordinates. This terminology is not usual in mathematical praxis. However, we find that making this distinction is useful to lift ambiguities.Footnote

16

$\mathbf X$

. For a general manifold, this is difficult to do, but we will restrict our scope to the case where a single coordinate system is sufficient. We also need a basis at each position, which gives a meaning to the entries in a tensor matrix representation (the meaning of these coordinates change with position). Furthermore, different choices of coordinate system are possible for the same manifold. The coordinates used to identify the position will be referred to as the global coordinates, while the coordinates of a tensor will be referred to as local coordinates. This terminology is not usual in mathematical praxis. However, we find that making this distinction is useful to lift ambiguities.Footnote

16