1. Introduction

The field of statistical modeling and analysis of complex networks has gained increasing interest over the last two decades. This is driven by the fact that different types of systems can be reasonably formalized as relationships between individuals or interactions between objects. Analyzing such structures consequently allows to uncover and describe the phenomena that affect these systems. Network-structured data arise in various fields, for example, social and political sciences, economics, biology, and neurosciences, to name but a few. In this regard, a connectivity pattern between entities might describe friendships among members of a social group (Eagle et al., Reference Eagle, Pentland and Lazer2009), the trading between nations (Bhattacharya et al., Reference Bhattacharya, Mukherjee, Saramäki, Kaski and Manna2008), interactions of proteins (Schwikowski et al., Reference Schwikowski, Uetz and Fields2000), or the functional coactivation within the human brain (Bassett et al., Reference Bassett, Zurn and Gold2018, Crossley et al., Reference Crossley, Mechelli, Vértes, Winton-Brown, Patel, Ginestet and Bullmore2013).

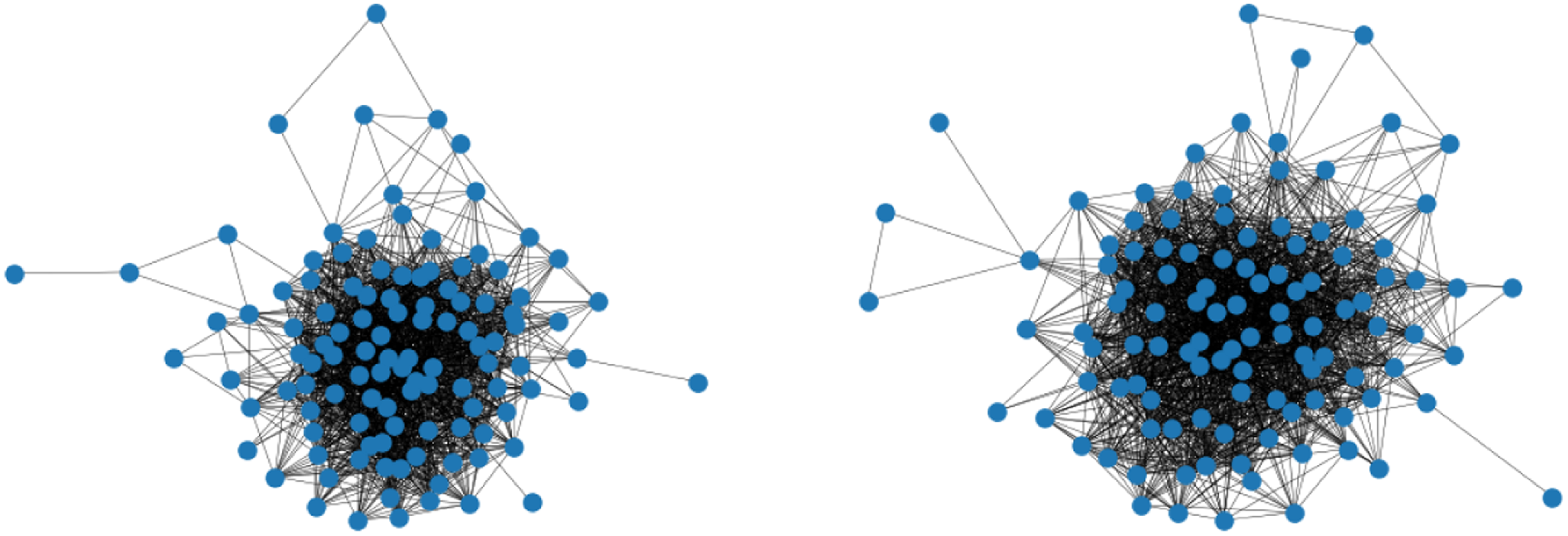

In many situations, uncovering the underlying connectivity structure is not the only concern but also the comparison of akin networks and the exploration of potential differences. For instance, this might be of interest in the context of brain coactivation, which we consider here as motivating example. Recently, a lot of work has been going on investigating how the functional connectivity in the brain differs when people are affected by cognitive disorders like Alzheimer’s disease or autism spectrum disorder (Song et al., Reference Song, Epalle and Lu2019, Subbaraju et al., Reference Subbaraju, Suresh, Sundaram and Narasimhan2017, Pascual-Belda et al., Reference Pascual-Belda, Díaz-Parra and Moratal2018). Two such functional coactivation networks—resulting from averaging over the measurements within two different subject groups, respectively—are illustrated in Figure 1. One inquiry which is then particularly relevant to the research question posed is whether a significant difference can be observed in these brain processes. More generally, this can be phrased as a hypothesis test on structural equivalence of two networks as exemplarily raised for the situation in Figure 1.

Figure 1. Functional coactivation networks of the human brain. The illustrated connectivity patterns result from averaging over multiple measurements for subjects with autism spectrum disorder (left) and typical development (right). Do these networks reveal a significant structural difference?.

To this end, we pursue constructing a statistical approach for network comparison that allows for a conclusion about significance. More precisely, we aim to test whether two networks can be considered as independent samples drawn from the same probability distribution. This is apparently in itself a technically difficult question since the two networks can have different sizes, and hence the two distributions need to be somehow different. Therefore, it is crucial that the applied distributional framework constitutes a rather universal probability measure. In fact, this is not a trivial property, and many network models entail conceptual issues that impede a direct comparison. For example, in the Exponential Random Graph Model (Robins et al., Reference Robins, Pattison, Kalish and Lusher2007), a concrete model parameterization has different implications for different network sizes, making corresponding coefficient estimates hardly comparable. Hence, for the given research question, statistical models seem advantageous where the specification of size and edge probabilities can be explicitly disentangled.

For such a comparative analysis of networks, we will demonstrate that Graphon Models (Lovász and Szegedy, Reference Lovász and Szegedy2006, Diaconis and Janson, Reference Diaconis and Janson2008, Sischka and Kauermann, Reference Sischka and Kauermann2022) are a very useful tool. First, the graphon model is very flexible and able to capture complex network structures. Secondly, the graphon itself can be interpreted as local density or intensity function on networks of nonparametric fashion. Lastly, the model’s design fulfills the above requirement of decoupling the network’s structure and size, allowing for modeling multiple networks simultaneously (Navarro and Segarra, Reference Navarro and Segarra2022). Hence, the graphon framework appears as a natural choice for comparison purposes.

The rest of the paper is organized as follows. In Section 2, we start with reviewing methods from the network comparison literature. A formalization of the test problem is elaborated and discussed in Section 3. Section 4 then deals with the joint graphon estimation, which is essential for the testing procedure to be applicable. Based on the joint graphon model, in Section 5, we develop a network comparison strategy for testing on equivalence of the underlying structures. The general applicability of the complete approach is demonstrated in Section 6, where we consider its performance on simulated and real-world data. The discussion and conclusion in Section 7 complete the paper.

2. Concepts for network comparison

When it comes to the comparison of networks, as a first step, one needs to decide on a concept for specifying network structures. In general, the various approaches can be broadly distinguished according to whether they rely on a descriptive or a model-based structural framework. Survey articles in this field are given by Soundarajan et al., (Reference Soundarajan, Eliassi-Rad and Gallagher2014), Yaveroğlu et al., (Reference Yaveroğlu, Milenković and Pržulj2015), Emmert-Streib et al., (Reference Emmert-Streib, Dehmer and Shi2016), and Tantardini et al., (Reference Tantardini, Ieva, Tajoli and Piccardi2019). A more general perspective on how complex data objects—such as adjacency matrices—might be compared is pointed out by Marron and Alonso (Reference Marron and Alonso2014). Looking into the aforementioned compendia and examining the general literature on network comparison, however, reveals a lack of model-based comparison approaches. This can also be seen in the following, where we briefly review the different strategies that have been proposed so far.

Starting with approaches that are based on extracted network statistics, the most intuitive strategy is presumably to simply compare global characteristics such as the clustering coefficient or the average path length (Newman, Reference Newman2018, pp. 364 ff., Butts Reference Butts2008, p. 31). An extensive analysis on the capability of common network metrics in the setting of monitoring and identifying differences in dynamic network structures has been carried out by Flossdorf and Jentsch (Reference Flossdorf and Jentsch2021). However, a single feature captures the overall network structure in most cases only very poorly since networks that are structured completely differently can apparently still possess a very similar value for that global statistic. Flossdorf et al., (Reference Flossdorf, Fried and Jentsch2023) tackle this issue by using multiple metrics. Aiming in a similar direction, Wilson and Zhu (Reference Wilson and Zhu2008) consider the differences in the graph spectra, see also Gera et al., (Reference Gera, Alonso, Crawford, House, Mendez-Bermudez, Knuth and Miller2018). Yet, for the spectrum, it is often unclear which information in terms of local structural properties is extracted from the network and hence such approaches somehow ascribe only too little importance to the attributes of interest.

Another branch of the literature on descriptive network comparison relies on the concept of graphlets, i.e. prespecified subgraph patterns that are assumed to be sufficient for describing the present structure. Papers that, in one way or another, consider differences in the frequencies of graphlets are, among others, Pržulj et al., (Reference Pržulj, Corneil and Jurisica2004), Pržulj (Reference Pržulj2007), Ali et al., (Reference Ali, Rito, Reinert, Sun and Deane2014), and Faisal et al., (Reference Faisal, Newaz, Chaney, Li, Emrich, Clark and Milenković2017). Since the counting procedure is rather complex for larger graphlets, it is sort of a consensus to include only those that consist of no more than five nodes. Apparently, this seems somehow arbitrary and incomplete in terms of capturing all structural aspects. Moreover, Yaveroğlu et al., (Reference Yaveroğlu, Malod-Dognin, Davis, Levnajic, Janjic, Karapandza, Stojmirovic and Pržulj2014) found high correlations among the graphlet-related statistics, including complete redundancies. On the other hand, a connection between subgraph frequencies and the concrete specification of the graphon model is exemplarily elaborated in Borgs et al., (Reference Borgs, Chayes, Lovász, Sós and Vesztergombi2008), Bickel et al., (Reference Bickel, Chen and Levina2011), and Latouche and Robin (Reference Latouche and Robin2016).

Despite their wide dissemination, descriptive network statistics entail two general shortcomings. First, it is very difficult to assess which network segments accommodate the structural key differences. To be precise, the nodes or edges (present or absent) that contribute most to a quantified structural discrepancy can only hardly be detected. Secondly, drawing inference on the dissimilarity of two networks based on multiple network statistics is a complex inferential problem. For example, Butts (Reference Butts2008) aims to overcome this deficit by applying a simplistic conditional uniform graph distribution.

On the other hand, probabilistic models for network data induce distributions on structural patterns that extend to desired distributional assumptions on structural differences. As a consequence, these modeling frameworks might provide concepts for network comparison. Yet, due to a certain level of model complexity, which is required to adequately represent inherently complex network data, individual shortcomings are entailed that often prevent a direct comparison. While, for example, the Latent Distance Model (Hoff et al., Reference Hoff, Raftery and Handcock2002) does not yield any model-related key component to be compared, coefficient estimates from the exponential random graph model are not directly comparable across separated networks. The graphon model and the Stochastic Blockmodel (see Holland et al., Reference Holland, Laskey and Leinhardt1983 and Snijders and Nowicki, Reference Snijders and Nowicki1997 and Reference Nowicki and Snijders2001) suffer from identifiability issues (Diaconis and Janson, Reference Diaconis and Janson2008, Thm. 7.1 ) that make a comparison of corresponding individual estimates very complicated. The blockmodel’s adaptivity is additionally highly dependent on the choice of the number of blocks. Onnela et al., (Reference Onnela, Fenn, Reid, Porter, Mucha, Fricker and Jones2012) tackle this issue by observing the networks’ complete disintegration processes, which they describe by the profiles over well-known network statistics. Appropriately summarizing the differences in these profiles has been demonstrated to provide a reasonable distance measure between networks that leads to good classification.

Overall, in view of the issues mentioned above, it seems little surprising that the literature on model-based strategies for network comparison is rather scant. More specifically, to the best of our knowledge, there exists no nonparametric statistical test on the equivalence of network structures. This shortcoming is what we aim to address in this paper. To do so, we formulate a joint smooth graphon framework that can subsequently be used for constructing an appropriate testing procedure.

3. Notation and formulation of the test problem

We consider the setting where two undirected networks of sizes

![]() $N^{(1)}$

and

$N^{(1)}$

and

![]() $N^{(2)}$

have been observed. Let

$N^{(2)}$

have been observed. Let

![]() $\boldsymbol{y}^{(g)} = [y_{ij}^{(g)}]_{i,j = 1, \ldots , N^{(g)}}$

for

$\boldsymbol{y}^{(g)} = [y_{ij}^{(g)}]_{i,j = 1, \ldots , N^{(g)}}$

for

![]() $g =1, 2$

denote the two respective adjacency matrices, where

$g =1, 2$

denote the two respective adjacency matrices, where

![]() $y_{ij}^{(g)} = 1$

if, in network

$y_{ij}^{(g)} = 1$

if, in network

![]() $g$

, there exists an edge between nodes

$g$

, there exists an edge between nodes

![]() $i$

and

$i$

and

![]() $j$

and

$j$

and

![]() $y^{(g)}_{ij}=0$

otherwise. Since we assume the networks to be undirected, this specifically means that

$y^{(g)}_{ij}=0$

otherwise. Since we assume the networks to be undirected, this specifically means that

![]() $y_{ij}^{(g)} = y_{ji}^{(g)}$

, leading to a symmetric

$y_{ij}^{(g)} = y_{ji}^{(g)}$

, leading to a symmetric

![]() $\boldsymbol{y}^{(g)} \in \{0,1\}^{N^{(g)} \times N^{(g)}}$

. Additionally, for the diagonal elements, we set

$\boldsymbol{y}^{(g)} \in \{0,1\}^{N^{(g)} \times N^{(g)}}$

. Additionally, for the diagonal elements, we set

![]() $y_{ii}^{(g)}=0$

, reflecting the absence of self-loops. In general, we consider

$y_{ii}^{(g)}=0$

, reflecting the absence of self-loops. In general, we consider

![]() $\boldsymbol{y}^{(g)}$

to be a realization of a random network

$\boldsymbol{y}^{(g)}$

to be a realization of a random network

![]() $\boldsymbol{Y}^{(g)}$

of size

$\boldsymbol{Y}^{(g)}$

of size

![]() $N^{(g)}$

which is subject to probability mass

$N^{(g)}$

which is subject to probability mass

![]() $\mathbb{P}(\boldsymbol{Y}^{(g)} = \boldsymbol{y}^{(g)} \, ; N^{(g)})$

. The question we aim to tackle is whether

$\mathbb{P}(\boldsymbol{Y}^{(g)} = \boldsymbol{y}^{(g)} \, ; N^{(g)})$

. The question we aim to tackle is whether

![]() $\boldsymbol{y}^{(1)}$

and

$\boldsymbol{y}^{(1)}$

and

![]() $\boldsymbol{y}^{(2)}$

are drawn from the same distribution. To suitably specify such a distribution, we rely on the smooth graphon model. The data-generating process is thereby as follows. Assume that we independently draw uniformly distributed random variables

$\boldsymbol{y}^{(2)}$

are drawn from the same distribution. To suitably specify such a distribution, we rely on the smooth graphon model. The data-generating process is thereby as follows. Assume that we independently draw uniformly distributed random variables

Conditional on

![]() ${\boldsymbol{U}}^{(g)}=(U^{(g)}_1, \ldots , U_{N^{(g)}}^{(g)})$

, we then draw the edges i.i.d. through

${\boldsymbol{U}}^{(g)}=(U^{(g)}_1, \ldots , U_{N^{(g)}}^{(g)})$

, we then draw the edges i.i.d. through

with

![]() ${\boldsymbol{u}}^{(g)}=(u^{(g)}_1, \ldots , u_{N^{(g)}}^{(g)}) \in [0,1]^{N^{(g)}}$

and under the setting of

${\boldsymbol{u}}^{(g)}=(u^{(g)}_1, \ldots , u_{N^{(g)}}^{(g)}) \in [0,1]^{N^{(g)}}$

and under the setting of

![]() $Y_{ij}^{(g)} \equiv Y_{ji}^{(g)}$

for

$Y_{ij}^{(g)} \equiv Y_{ji}^{(g)}$

for

![]() $j\lt i$

and

$j\lt i$

and

![]() $Y_{ii}^{(g)} \equiv 0$

. The function

$Y_{ii}^{(g)} \equiv 0$

. The function

![]() $w^{(g)}: [0,1]^2 \rightarrow [0,1]$

is called graphon (see Lovász and Szegedy, Reference Lovász and Szegedy2006 and Diaconis and Janson, Reference Diaconis and Janson2008). We assume

$w^{(g)}: [0,1]^2 \rightarrow [0,1]$

is called graphon (see Lovász and Szegedy, Reference Lovász and Szegedy2006 and Diaconis and Janson, Reference Diaconis and Janson2008). We assume

![]() $w^{(g)}(\cdot ,\cdot )$

to be smooth according to some Hölder or Lipschitz condition (cf. Wolfe and Olhede, Reference Wolfe and Olhede2013 or Chan and Airoldi, Reference Chan and Airoldi2014). Relying on this data-generating process, the graphon-based probability model can be defined through

$w^{(g)}(\cdot ,\cdot )$

to be smooth according to some Hölder or Lipschitz condition (cf. Wolfe and Olhede, Reference Wolfe and Olhede2013 or Chan and Airoldi, Reference Chan and Airoldi2014). Relying on this data-generating process, the graphon-based probability model can be defined through

In this distribution model, the network’s size and structure are apparently dissociated, which therefore allows for a size-independent comparison of underlying structures. Hence, our goal is to develop a statistical test on the hypothesis

In this context, we emphasize that data-generating process (2) is not unique because it is invariant to permutations of

![]() $w^{(g)}(\cdot ,\cdot )$

, as discussed in detail by Diaconis and Janson(2008, Sec. 7). Thus, the formulation of

$w^{(g)}(\cdot ,\cdot )$

, as discussed in detail by Diaconis and Janson(2008, Sec. 7). Thus, the formulation of

![]() $H_0$

needs to be formally understood from the perspective of corresponding equivalence classes. For the concrete implementation of the test procedure, we employ a concrete representation of the two graphons as described below. Specifically, under the assumption of

$H_0$

needs to be formally understood from the perspective of corresponding equivalence classes. For the concrete implementation of the test procedure, we employ a concrete representation of the two graphons as described below. Specifically, under the assumption of

![]() $H_0$

, we define the joint graphon

$H_0$

, we define the joint graphon

Since

![]() $w^{(1)}(\cdot ,\cdot )$

and

$w^{(1)}(\cdot ,\cdot )$

and

![]() $w^{(2)}(\cdot ,\cdot )$

are assumed to be smooth, this also holds for

$w^{(2)}(\cdot ,\cdot )$

are assumed to be smooth, this also holds for

![]() $w^{\text{joint}}(\cdot ,\cdot )$

. Given such joint graphon, the node position vectors

$w^{\text{joint}}(\cdot ,\cdot )$

. Given such joint graphon, the node position vectors

![]() ${\boldsymbol{u}}^{(1)}$

and

${\boldsymbol{u}}^{(1)}$

and

![]() ${\boldsymbol{u}}^{(2)}$

referring to

${\boldsymbol{u}}^{(2)}$

referring to

![]() $w^{\text{joint}}(\cdot ,\cdot )$

then provide a specific type of network alignment. This is what we utilize for a direct comparison of

$w^{\text{joint}}(\cdot ,\cdot )$

then provide a specific type of network alignment. This is what we utilize for a direct comparison of

![]() $\boldsymbol{y}^{(1)}$

and

$\boldsymbol{y}^{(1)}$

and

![]() $\boldsymbol{y}^{(2)}$

. Having said that, in real-world applications, neither (

$\boldsymbol{y}^{(2)}$

. Having said that, in real-world applications, neither (

![]() ${\boldsymbol{u}}^{(1)}, {\boldsymbol{u}}^{(2)}$

) nor

${\boldsymbol{u}}^{(1)}, {\boldsymbol{u}}^{(2)}$

) nor

![]() $w^{\text{joint}}(\cdot ,\cdot )$

is observed. Thus, in order to achieve this alignment, we first need to formulate an appropriate estimation procedure for the joint graphon model. Note that, strictly speaking, according to formulation (3) this requires the presence of dense networks. Yet, the following modeling technique and the subsequent testing procedure are reasonable if the empirical densities are high enough and the observed networks are considered to be fixed in

$w^{\text{joint}}(\cdot ,\cdot )$

is observed. Thus, in order to achieve this alignment, we first need to formulate an appropriate estimation procedure for the joint graphon model. Note that, strictly speaking, according to formulation (3) this requires the presence of dense networks. Yet, the following modeling technique and the subsequent testing procedure are reasonable if the empirical densities are high enough and the observed networks are considered to be fixed in

![]() $N^{(g)}$

,

$N^{(g)}$

,

![]() $g=1,2$

.

$g=1,2$

.

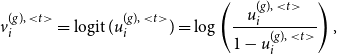

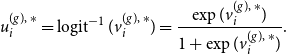

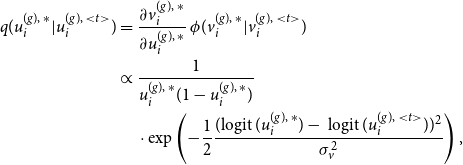

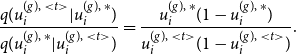

4. EM-based joint graphon estimation

In this section, we present an iterative estimation procedure for the joint smooth graphon model under the assumption that null hypothesis (4) is true. To do so, we follow the EM-based estimation routine of Sischka and Kauermann (Reference Sischka and Kauermann2022), extending it to the situation of two networks.

4.1 MCMC E-step

Starting with the E-step of our iterative algorithm, we assume the joint graphon

![]() $w^{\text{joint}}(\cdot , \cdot )$

to be known for the moment. Based on that, the latent positions of the networks can be separately determined using MCMC techniques. To be precise, we apply Gibbs sampling by formulating the full conditional distribution of

$w^{\text{joint}}(\cdot , \cdot )$

to be known for the moment. Based on that, the latent positions of the networks can be separately determined using MCMC techniques. To be precise, we apply Gibbs sampling by formulating the full conditional distribution of

![]() $U_i^{(g)}$

through

$U_i^{(g)}$

through

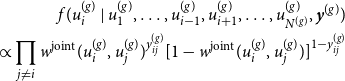

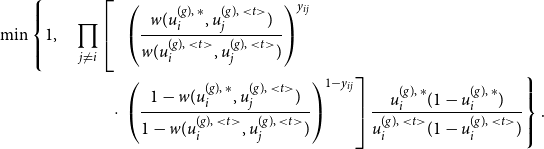

\begin{align} f(u_i^{(g)} \mid u_1^{(g)},\ldots , u_{i-1}^{(g)},u_{i+1}^{(g)}, \ldots , u_{N^{(g)}}^{(g)}, \boldsymbol{y}^{(g)}) \nonumber \\ \propto \prod _{j \neq i} w^{\text{joint}}(u_i^{(g)} , u_j^{(g)})^{y_{ij}^{(g)}} [1 - w^{\text{joint}}(u_i^{(g)} , u_j^{(g)})]^{1-{y_{ij}^{(g)}}} \end{align}

\begin{align} f(u_i^{(g)} \mid u_1^{(g)},\ldots , u_{i-1}^{(g)},u_{i+1}^{(g)}, \ldots , u_{N^{(g)}}^{(g)}, \boldsymbol{y}^{(g)}) \nonumber \\ \propto \prod _{j \neq i} w^{\text{joint}}(u_i^{(g)} , u_j^{(g)})^{y_{ij}^{(g)}} [1 - w^{\text{joint}}(u_i^{(g)} , u_j^{(g)})]^{1-{y_{ij}^{(g)}}} \end{align}

for all

![]() $i = 1,\ldots , N^{(g)}$

and

$i = 1,\ldots , N^{(g)}$

and

![]() $g=1, 2$

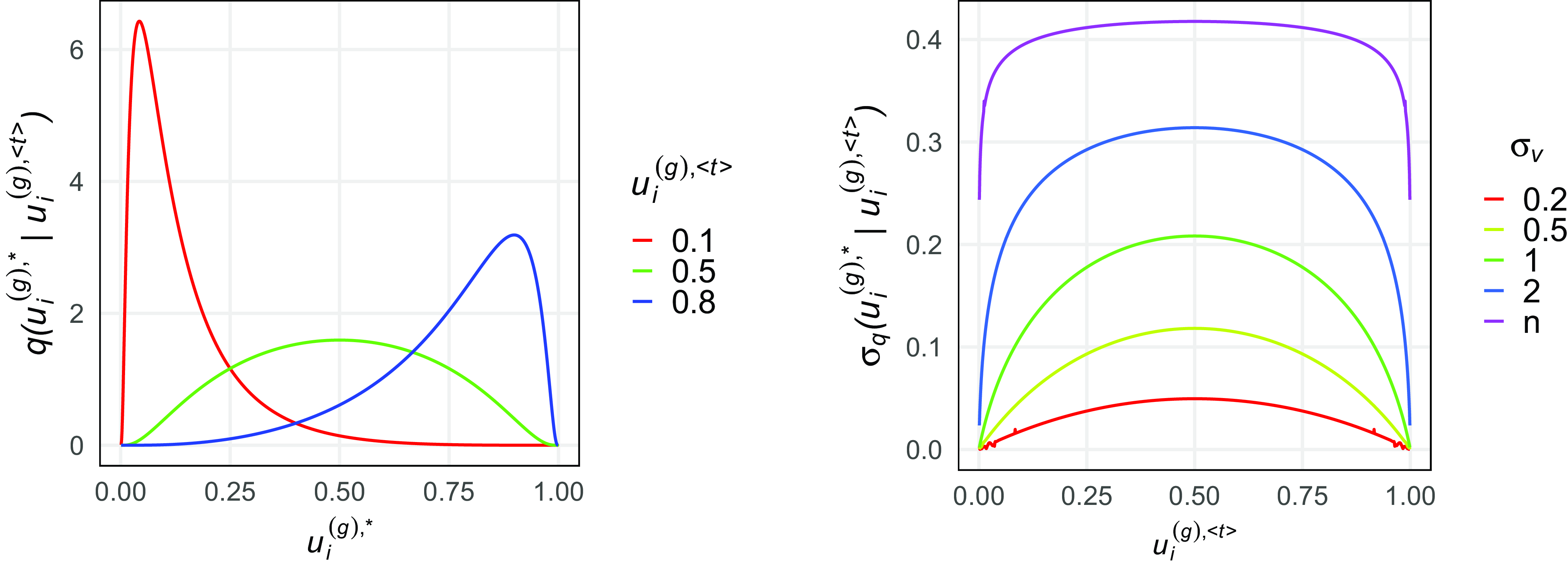

. Details on the concrete implementation of the Gibbs sampler are given in Section A of the Appendix. The resulting MCMC sequence (after cutting the burn-in period and appropriate thinning) then reflects the joint conditional distribution

$g=1, 2$

. Details on the concrete implementation of the Gibbs sampler are given in Section A of the Appendix. The resulting MCMC sequence (after cutting the burn-in period and appropriate thinning) then reflects the joint conditional distribution

![]() $f({\boldsymbol{u}}^{(g)} \mid \boldsymbol{y}^{(g)})$

. Thus, the marginal conditional means of the latent positions, i.e.

$f({\boldsymbol{u}}^{(g)} \mid \boldsymbol{y}^{(g)})$

. Thus, the marginal conditional means of the latent positions, i.e.

![]() $\mathbb{E} (U_i^{(g)} \mid \boldsymbol{Y}^{(g)} = \boldsymbol{y}^{(g)})$

for

$\mathbb{E} (U_i^{(g)} \mid \boldsymbol{Y}^{(g)} = \boldsymbol{y}^{(g)})$

for

![]() $i=1,\ldots ,N^{(g)}$

, can be approximated by taking the mean over the MCMC samples, which we denote by

$i=1,\ldots ,N^{(g)}$

, can be approximated by taking the mean over the MCMC samples, which we denote by

![]() $\bar {{\boldsymbol{u}}}^{(g)} = (\bar {u}_1^{(g)}, \ldots , \bar {u}_{N^{(g)}}^{(g)})$

. This posterior mean vector, however, requires further adjustment due to additional identifiability issues that cannot be coped with by the standard EM-type algorithm. To illustrate this, let model assumption (1) be more relaxed in the sense that the

$\bar {{\boldsymbol{u}}}^{(g)} = (\bar {u}_1^{(g)}, \ldots , \bar {u}_{N^{(g)}}^{(g)})$

. This posterior mean vector, however, requires further adjustment due to additional identifiability issues that cannot be coped with by the standard EM-type algorithm. To illustrate this, let model assumption (1) be more relaxed in the sense that the

![]() $U_i^{(g)}$

’s are assumed to follow a continuous distribution

$U_i^{(g)}$

’s are assumed to follow a continuous distribution

![]() $F^{(g)}(\cdot )$

. Under this configuration, the model (

$F^{(g)}(\cdot )$

. Under this configuration, the model (

![]() $F^{(g)}(\cdot )$

,

$F^{(g)}(\cdot )$

,

![]() $w^{(g)}(\cdot ,\cdot )$

) is equivalent to any other model (

$w^{(g)}(\cdot ,\cdot )$

) is equivalent to any other model (

![]() ${F^{(g)}}^\prime (\cdot )$

,

${F^{(g)}}^\prime (\cdot )$

,

![]() ${w^{(g)}}^\prime (\cdot ,\cdot )$

) constructed through

${w^{(g)}}^\prime (\cdot ,\cdot )$

) constructed through

with

![]() $\varphi : [0,1] \rightarrow [0,1]$

being a strictly increasing continuous function (that is, in contrast to Diaconis and Janson, Reference Diaconis and Janson2008, Sec. 7 not measure-preserving). Specifically, that means

$\varphi : [0,1] \rightarrow [0,1]$

being a strictly increasing continuous function (that is, in contrast to Diaconis and Janson, Reference Diaconis and Janson2008, Sec. 7 not measure-preserving). Specifically, that means

for all

![]() $N^{(g)} \geq 2$

. As a matter of conception, this issue cannot be solved by the EM algorithm since it aims at specifying a model that adapts optimally to the given data instead of perfectly recovering the underlying model structure. Consequently, the EM approach is not able to distinguish between the two conceptually equivalent model specifications (

$N^{(g)} \geq 2$

. As a matter of conception, this issue cannot be solved by the EM algorithm since it aims at specifying a model that adapts optimally to the given data instead of perfectly recovering the underlying model structure. Consequently, the EM approach is not able to distinguish between the two conceptually equivalent model specifications (

![]() $F^{(g)}(\cdot )$

,

$F^{(g)}(\cdot )$

,

![]() $w^{(g)}(\cdot ,\cdot )$

) and (

$w^{(g)}(\cdot ,\cdot )$

) and (

![]() ${F^{(g)}}^\prime (\cdot )$

,

${F^{(g)}}^\prime (\cdot )$

,

![]() ${w^{(g)}}^\prime (\cdot ,\cdot )$

). Nonetheless, this identifiability issue can simply be tackled by adjusting

${w^{(g)}}^\prime (\cdot ,\cdot )$

). Nonetheless, this identifiability issue can simply be tackled by adjusting

![]() $\bar {{\boldsymbol{u}}}^{(g)}$

before estimating the graphon in the M-step. To do so, we just impose that the inferred node positions follow an ideal sample drawn from the standard uniform distribution and thus intend to sprawl out the posterior means in an equidistant manner. More precisely, as an order-preserving adjusted estimate that is directly derived from the Gibbs sampler we utilize the “uniformized” posterior mean

$\bar {{\boldsymbol{u}}}^{(g)}$

before estimating the graphon in the M-step. To do so, we just impose that the inferred node positions follow an ideal sample drawn from the standard uniform distribution and thus intend to sprawl out the posterior means in an equidistant manner. More precisely, as an order-preserving adjusted estimate that is directly derived from the Gibbs sampler we utilize the “uniformized” posterior mean

where

![]() $\operatorname {rank}(\bar {u}_i^{(g)})$

is the rank from the smallest to the largest of element

$\operatorname {rank}(\bar {u}_i^{(g)})$

is the rank from the smallest to the largest of element

![]() $\bar {u}_i^{(g)}$

within

$\bar {u}_i^{(g)}$

within

![]() $\bar {{\boldsymbol{u}}}^{(g)}$

. In this context, note that the values

$\bar {{\boldsymbol{u}}}^{(g)}$

. In this context, note that the values

![]() $i/(N^{(g)}+1)$

with

$i/(N^{(g)}+1)$

with

![]() $i=1,\ldots ,N^{(g)}$

represent the expectations of

$i=1,\ldots ,N^{(g)}$

represent the expectations of

![]() $N^{(g)}$

ordered random variables that are independently drawn from the standard uniform distribution. As a result, with

$N^{(g)}$

ordered random variables that are independently drawn from the standard uniform distribution. As a result, with

![]() $\hat {{\boldsymbol{u}}}^{(g)} = (\hat {u}_1^{(g)}, \ldots , \hat {u}_{N^{(g)}}^{(g)})$

we obtain a plausible realization of the node positions of network

$\hat {{\boldsymbol{u}}}^{(g)} = (\hat {u}_1^{(g)}, \ldots , \hat {u}_{N^{(g)}}^{(g)})$

we obtain a plausible realization of the node positions of network

![]() $g$

. Apparently, this relies on the current joint graphon estimate

$g$

. Apparently, this relies on the current joint graphon estimate

![]() $\hat {w}^{\text{joint}}(\cdot , \cdot )$

, which is applied as substitute in conditional distribution (5). In the next step, we formulate the procedure for updating

$\hat {w}^{\text{joint}}(\cdot , \cdot )$

, which is applied as substitute in conditional distribution (5). In the next step, we formulate the procedure for updating

![]() $\hat {w}^{\text{joint}}(\cdot , \cdot )$

given

$\hat {w}^{\text{joint}}(\cdot , \cdot )$

given

![]() $\hat {{\boldsymbol{u}}}^{(1)}$

and

$\hat {{\boldsymbol{u}}}^{(1)}$

and

![]() $\hat {{\boldsymbol{u}}}^{(2)}$

.

$\hat {{\boldsymbol{u}}}^{(2)}$

.

4.2 Spline-based M-step

For a semiparametric estimation of the joint smooth graphon, we choose a linear B-spline regression approach. To this end, we assume the joint graphon to be approximated through

where

![]() $\otimes$

is the Kronecker product,

$\otimes$

is the Kronecker product,

![]() ${\boldsymbol{B}}(u) \in \mathbb{R}^{1 \times L}$

is a linear B-spline basis on

${\boldsymbol{B}}(u) \in \mathbb{R}^{1 \times L}$

is a linear B-spline basis on

![]() $[0,1]$

, normalized to have a maximum value of one, and

$[0,1]$

, normalized to have a maximum value of one, and

![]() $\boldsymbol{\theta } \in \mathbb{R}^{L^2}$

is the parameter vector to be estimated. The inner B-spline knots are specified as lying equidistantly on a regular two-dimensional grid within

$\boldsymbol{\theta } \in \mathbb{R}^{L^2}$

is the parameter vector to be estimated. The inner B-spline knots are specified as lying equidistantly on a regular two-dimensional grid within

![]() $[0,1]^2$

, where

$[0,1]^2$

, where

![]() $\boldsymbol{\theta }$

is indexed accordingly through

$\boldsymbol{\theta }$

is indexed accordingly through

![]() $\boldsymbol{\theta } = \left ( \theta _{11},\ldots , \theta _{1L}, \theta _{21}, \ldots , \theta _{LL} \right )^\top$

. Based on this representation and given the node positions

$\boldsymbol{\theta } = \left ( \theta _{11},\ldots , \theta _{1L}, \theta _{21}, \ldots , \theta _{LL} \right )^\top$

. Based on this representation and given the node positions

![]() $\hat {{\boldsymbol{u}}}^{(1)}$

and

$\hat {{\boldsymbol{u}}}^{(1)}$

and

![]() $\hat {{\boldsymbol{u}}}^{(2)}$

, we formulate the marginal log-likelihood over both networks as

$\hat {{\boldsymbol{u}}}^{(2)}$

, we formulate the marginal log-likelihood over both networks as

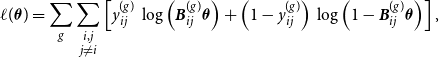

\begin{equation} \ell (\boldsymbol{\theta }) = \sum _g \sum \limits _{\substack {i,j \\ j \neq i}} \left [ y_{ij}^{(g)} \, \log \left ( {\boldsymbol{B}}_{ij}^{(g)} \boldsymbol{\theta } \right ) + \left ( 1- y_{ij}^{(g)} \right ) \, \log \left ( 1 - {\boldsymbol{B}}_{ij}^{(g)} \boldsymbol{\theta } \right ) \right ], \end{equation}

\begin{equation} \ell (\boldsymbol{\theta }) = \sum _g \sum \limits _{\substack {i,j \\ j \neq i}} \left [ y_{ij}^{(g)} \, \log \left ( {\boldsymbol{B}}_{ij}^{(g)} \boldsymbol{\theta } \right ) + \left ( 1- y_{ij}^{(g)} \right ) \, \log \left ( 1 - {\boldsymbol{B}}_{ij}^{(g)} \boldsymbol{\theta } \right ) \right ], \end{equation}

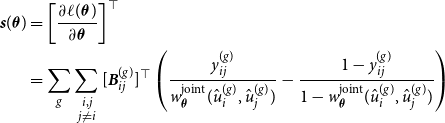

where

![]() ${\boldsymbol{B}}_{ij}^{(g)} = {\boldsymbol{B}}(\hat {u}_i^{(g)}) \otimes {\boldsymbol{B}}(\hat {u}_j^{(g)})$

. Furthermore, through standard calculations, we are able to derive the score function

${\boldsymbol{B}}_{ij}^{(g)} = {\boldsymbol{B}}(\hat {u}_i^{(g)}) \otimes {\boldsymbol{B}}(\hat {u}_j^{(g)})$

. Furthermore, through standard calculations, we are able to derive the score function

![]() ${\boldsymbol{s}}(\boldsymbol{\theta })$

and the Fisher information

${\boldsymbol{s}}(\boldsymbol{\theta })$

and the Fisher information

![]() ${\boldsymbol{F}}(\boldsymbol{\theta })$

, as demonstrated in Section B of the Appendix. Fisher scoring can then be used to maximize

${\boldsymbol{F}}(\boldsymbol{\theta })$

, as demonstrated in Section B of the Appendix. Fisher scoring can then be used to maximize

![]() $\ell (\boldsymbol{\theta })$

. In addition, we include side constraints to ensure that

$\ell (\boldsymbol{\theta })$

. In addition, we include side constraints to ensure that

![]() $w_{\boldsymbol{\theta }}^{\text{joint}}(\cdot ,\cdot )$

is bounded to

$w_{\boldsymbol{\theta }}^{\text{joint}}(\cdot ,\cdot )$

is bounded to

![]() $[0,1]$

and symmetric. In the linear B-spline setting, this means restricting the parameters by the conditions

$[0,1]$

and symmetric. In the linear B-spline setting, this means restricting the parameters by the conditions

for all

![]() $l \gt k$

. Apparently, all three conditions are of linear form and thus can be written in matrix format. Taken together, the Fisher scoring becomes a quadratic programming problem that can be solved using standard software (see Andersen et al., Reference Andersen, Dahl and Vandenberghe2016 or Turlach and Weingessel, Reference Turlach and Weingessel2013).

$l \gt k$

. Apparently, all three conditions are of linear form and thus can be written in matrix format. Taken together, the Fisher scoring becomes a quadratic programming problem that can be solved using standard software (see Andersen et al., Reference Andersen, Dahl and Vandenberghe2016 or Turlach and Weingessel, Reference Turlach and Weingessel2013).

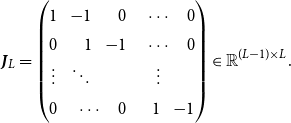

Moreover, we intend to add penalization on the B-spline estimate. As outlined in Eilers and Marx (Reference Eilers and Marx1996) and Ruppert et al., (Reference Ruppert, Wand and Carroll2003, Reference Ruppert, Wand and Carroll2009), penalized spline estimation under the setting of a rather large spline basis yields a preferable outcome since it guarantees a functional fit that covers the data adequately but is still smooth. Thus, this approach enables to precisely capture the underlying structure while avoiding overfitting. To realize this, we add a first-order penalty, meaning that elements of

![]() $\boldsymbol{\theta }$

get penalized that are neighboring on the notional two-dimensional grid, i.e.

$\boldsymbol{\theta }$

get penalized that are neighboring on the notional two-dimensional grid, i.e.

![]() $\theta _{kl}$

and

$\theta _{kl}$

and

![]() $\theta _{(k+1)l}$

as well as

$\theta _{(k+1)l}$

as well as

![]() $\theta _{kl}$

and

$\theta _{kl}$

and

![]() $\theta _{k(l+1)}$

. For the log-likelihood, the score function, and the Fisher information, this leads to the penalized versions in the form of

$\theta _{k(l+1)}$

. For the log-likelihood, the score function, and the Fisher information, this leads to the penalized versions in the form of

\begin{equation} \begin{gathered} \ell _{\text{p}} (\boldsymbol{\theta }, \lambda ) = \ell (\boldsymbol{\theta }) - \frac {1}{2} \lambda \boldsymbol{\theta }^\top \boldsymbol{P} \boldsymbol{\theta }, \quad {\boldsymbol{s}}_{\text{p}}(\boldsymbol{\theta }, \lambda ) = {\boldsymbol{s}}(\boldsymbol{\theta }) - \lambda \boldsymbol{P} \boldsymbol{\theta }, \\ \text{and} \quad {\boldsymbol{F}}_{\text{p}}(\boldsymbol{\theta }, \lambda ) = {\boldsymbol{F}} (\boldsymbol{\theta }) + \lambda \boldsymbol{P}, \end{gathered} \end{equation}

\begin{equation} \begin{gathered} \ell _{\text{p}} (\boldsymbol{\theta }, \lambda ) = \ell (\boldsymbol{\theta }) - \frac {1}{2} \lambda \boldsymbol{\theta }^\top \boldsymbol{P} \boldsymbol{\theta }, \quad {\boldsymbol{s}}_{\text{p}}(\boldsymbol{\theta }, \lambda ) = {\boldsymbol{s}}(\boldsymbol{\theta }) - \lambda \boldsymbol{P} \boldsymbol{\theta }, \\ \text{and} \quad {\boldsymbol{F}}_{\text{p}}(\boldsymbol{\theta }, \lambda ) = {\boldsymbol{F}} (\boldsymbol{\theta }) + \lambda \boldsymbol{P}, \end{gathered} \end{equation}

respectively, where

![]() $\boldsymbol{P}$

is a penalization matrix of appropriate shape (see Section B of the Appendix). For an adequate choice of the penalty parameter

$\boldsymbol{P}$

is a penalization matrix of appropriate shape (see Section B of the Appendix). For an adequate choice of the penalty parameter

![]() $\lambda$

in the two/dimensional spline regression, we follow Kauermann et al., (Reference Kauermann, Schellhase and Ruppert2013) and apply the corrected Akaike Information Criterion (

$\lambda$

in the two/dimensional spline regression, we follow Kauermann et al., (Reference Kauermann, Schellhase and Ruppert2013) and apply the corrected Akaike Information Criterion (

![]() $\operatorname {AIC}_{\text{c}}$

, see Hurvich and Tsai, Reference Hurvich and Tsai1989 and Burnham and Anderson, Reference Burnham and Anderson2002). This is defined as

$\operatorname {AIC}_{\text{c}}$

, see Hurvich and Tsai, Reference Hurvich and Tsai1989 and Burnham and Anderson, Reference Burnham and Anderson2002). This is defined as

where

![]() $\hat {\boldsymbol{\theta }}_{\text{p}}$

is the corresponding penalized parameter estimate and

$\hat {\boldsymbol{\theta }}_{\text{p}}$

is the corresponding penalized parameter estimate and

![]() $\operatorname {df} (\lambda )$

specifies the degrees of freedom of the penalized B-spline function. More precisely, according to Wood (Reference Wood2017, pp. 211 ff.), the latter is defined trough

$\operatorname {df} (\lambda )$

specifies the degrees of freedom of the penalized B-spline function. More precisely, according to Wood (Reference Wood2017, pp. 211 ff.), the latter is defined trough

with

![]() $\operatorname {tr} \{\cdot \}$

being the trace of a matrix. A numerical optimization of the corrected

$\operatorname {tr} \{\cdot \}$

being the trace of a matrix. A numerical optimization of the corrected

![]() $\operatorname {AIC}$

with respect to

$\operatorname {AIC}$

with respect to

![]() $\lambda$

concludes the estimation of

$\lambda$

concludes the estimation of

![]() $\boldsymbol{\theta }$

, resulting in the estimate

$\boldsymbol{\theta }$

, resulting in the estimate

![]() $\hat {w}^{\text{joint}}(\cdot , \cdot )$

of the current M-step. The convergence rate of

$\hat {w}^{\text{joint}}(\cdot , \cdot )$

of the current M-step. The convergence rate of

![]() $\hat {w}^{\text{joint}}(\cdot , \cdot )$

towards

$\hat {w}^{\text{joint}}(\cdot , \cdot )$

towards

![]() $w^{\text{joint}}(\cdot , \cdot )$

for growing

$w^{\text{joint}}(\cdot , \cdot )$

for growing

![]() $N^{(g)}$

,

$N^{(g)}$

,

![]() $g=1,2$

, and

$g=1,2$

, and

![]() $L = \scriptstyle \mathcal{O} (\min \{N^{(1)}, N^{(2)}\})$

and under the assumption of (

$L = \scriptstyle \mathcal{O} (\min \{N^{(1)}, N^{(2)}\})$

and under the assumption of (

![]() $\hat {{\boldsymbol{u}}}^{(1)}, \hat {{\boldsymbol{u}}}^{(2)}$

) being true then depends on the graphon’s smoothness, which has been postulated as a rather general assumption by the Hölder/Lipschitz condition in Section 3. Here,

$\hat {{\boldsymbol{u}}}^{(1)}, \hat {{\boldsymbol{u}}}^{(2)}$

) being true then depends on the graphon’s smoothness, which has been postulated as a rather general assumption by the Hölder/Lipschitz condition in Section 3. Here,

![]() $\scriptstyle \mathcal{O}(\cdot )$

refers to Landau’s small-

$\scriptstyle \mathcal{O}(\cdot )$

refers to Landau’s small-

![]() $\scriptstyle \mathcal{O}$

notation.

$\scriptstyle \mathcal{O}$

notation.

Finally, the EM-type estimation procedure described above allows us to adequately estimate both the joint graphon

![]() $w^{\text{joint}}(\cdot ,\cdot )$

and the corresponding node positions

$w^{\text{joint}}(\cdot ,\cdot )$

and the corresponding node positions

![]() ${\boldsymbol{u}}^{(1)}$

and

${\boldsymbol{u}}^{(1)}$

and

![]() ${\boldsymbol{u}}^{(2)}$

of the two networks. Specifically, given that

${\boldsymbol{u}}^{(2)}$

of the two networks. Specifically, given that

![]() ${\boldsymbol{u}}^{(1)}$

and

${\boldsymbol{u}}^{(1)}$

and

![]() ${\boldsymbol{u}}^{(2)}$

refer to the same graphon representation, this allows to formulate an appropriate test procedure based on local comparison.

${\boldsymbol{u}}^{(2)}$

refer to the same graphon representation, this allows to formulate an appropriate test procedure based on local comparison.

5. Two-sample test on network structures

Returning to the test problem raised in Section 3, we now develop a statistical test procedure on hypothesis (4), i.e. whether

![]() $\boldsymbol{y}^{(1)}$

and

$\boldsymbol{y}^{(1)}$

and

![]() $\boldsymbol{y}^{(2)}$

are drawn from the same distribution. To do so, we utilize the network alignment resulting from the (inferred) joint smooth graphon model. More precisely, we exploit the fact that two edge variables

$\boldsymbol{y}^{(2)}$

are drawn from the same distribution. To do so, we utilize the network alignment resulting from the (inferred) joint smooth graphon model. More precisely, we exploit the fact that two edge variables

![]() $Y_{i_1 j_1}^{(1)}$

and

$Y_{i_1 j_1}^{(1)}$

and

![]() $Y_{i_2 j_2}^{(2)}$

that have nearby positions—i.e. for which the distance between

$Y_{i_2 j_2}^{(2)}$

that have nearby positions—i.e. for which the distance between

![]() $(u_{i_1}^{(1)}, u_{j_1}^{(1)})^\top$

and

$(u_{i_1}^{(1)}, u_{j_1}^{(1)})^\top$

and

![]() $(u_{i_2}^{(2)}, u_{j_2}^{(2)})^\top$

is small—possess similar probabilities to form a connection. In a more formalized way, this means that, from

$(u_{i_2}^{(2)}, u_{j_2}^{(2)})^\top$

is small—possess similar probabilities to form a connection. In a more formalized way, this means that, from

![]() $\Vert (u_{i_1}^{(1)}, u_{j_1}^{(1)})^\top - (u_{i_2}^{(2)}, u_{j_2}^{(2)})^\top \Vert \approx 0$

, it follows that

$\Vert (u_{i_1}^{(1)}, u_{j_1}^{(1)})^\top - (u_{i_2}^{(2)}, u_{j_2}^{(2)})^\top \Vert \approx 0$

, it follows that

where

![]() $\Vert \cdot \Vert$

is the Euclidean distance. Following this intuition, we divide the unit square into small segments and compare between networks the ratio of present versus absent edges occurring in these segments (see Figure 2 for an exemplary division). For that purpose, we choose a suitable

$\Vert \cdot \Vert$

is the Euclidean distance. Following this intuition, we divide the unit square into small segments and compare between networks the ratio of present versus absent edges occurring in these segments (see Figure 2 for an exemplary division). For that purpose, we choose a suitable

![]() $K \in \mathbb{N}$

, specify a corresponding boundary sequence

$K \in \mathbb{N}$

, specify a corresponding boundary sequence

![]() $a_0=0 \lt a_1 \lt \ldots \lt a_K=1$

, and define the following two quantities for

$a_0=0 \lt a_1 \lt \ldots \lt a_K=1$

, and define the following two quantities for

![]() $l,k = 1,\ldots ,K$

with

$l,k = 1,\ldots ,K$

with

![]() $l \geq k$

:

$l \geq k$

:

\begin{align} \begin{split} d_{kl}^{(g)} &= \sum \limits _{\substack {i,j \\ j \gt i}} y_{ij}^{(g)} \unicode {x1D7D9}_{\{u_i^{(g)} \in [a_{k-1}, a_k)\}} \unicode {x1D7D9}_{\{u_j^{(g)} \in [a_{l-1}, a_l)\}} \\ m_{kl}^{(g)} &= \sum \limits _{\substack {i,j \\ j \gt i}} \unicode {x1D7D9}_{\{u_i^{(g)} \in [a_{k-1}, a_k)\}} \unicode {x1D7D9}_{\{u_j^{(g)} \in [a_{l-1}, a_l)\}}. \end{split} \end{align}

\begin{align} \begin{split} d_{kl}^{(g)} &= \sum \limits _{\substack {i,j \\ j \gt i}} y_{ij}^{(g)} \unicode {x1D7D9}_{\{u_i^{(g)} \in [a_{k-1}, a_k)\}} \unicode {x1D7D9}_{\{u_j^{(g)} \in [a_{l-1}, a_l)\}} \\ m_{kl}^{(g)} &= \sum \limits _{\substack {i,j \\ j \gt i}} \unicode {x1D7D9}_{\{u_i^{(g)} \in [a_{k-1}, a_k)\}} \unicode {x1D7D9}_{\{u_j^{(g)} \in [a_{l-1}, a_l)\}}. \end{split} \end{align}

This means

![]() $d_{kl}^{(g)}$

and

$d_{kl}^{(g)}$

and

![]() $m_{kl}^{(g)}$

represent the number of present (

$m_{kl}^{(g)}$

represent the number of present (

![]() $y_{ij}^{(g)} = 1$

) and (a priori) possible edges of network

$y_{ij}^{(g)} = 1$

) and (a priori) possible edges of network

![]() $g$

, respectively, within the constructed rectangle

$g$

, respectively, within the constructed rectangle

![]() $[a_{k-1}, a_k) \times [a_{l-1}, a_l)$

. The corresponding cross-network counts can be calculated by

$[a_{k-1}, a_k) \times [a_{l-1}, a_l)$

. The corresponding cross-network counts can be calculated by

![]() $d_{kl} = d_{kl}^{(1)} + d_{kl}^{(2)}$

and

$d_{kl} = d_{kl}^{(1)} + d_{kl}^{(2)}$

and

![]() $m_{kl} = m_{kl}^{(1)} + m_{kl}^{(2)}$

. Note that different network sizes are implicitly taken into account, since the sums in (8) run over the number of edges in network

$m_{kl} = m_{kl}^{(1)} + m_{kl}^{(2)}$

. Note that different network sizes are implicitly taken into account, since the sums in (8) run over the number of edges in network

![]() $g$

, which is suppressed in the notation for simplicity of notation. Since

$g$

, which is suppressed in the notation for simplicity of notation. Since

![]() $w^{\text{joint}}(\cdot ,\cdot )$

is smooth, we further assume that the induced probability on edge variables within

$w^{\text{joint}}(\cdot ,\cdot )$

is smooth, we further assume that the induced probability on edge variables within

![]() $[a_{k-1}, a_k) \times [a_{l-1}, a_l)$

is approximately constant. That allows for putting the observed ratios between present and absent edges in direct relation. In this light, we formulate the following contingency table to keep track of homogeneity between the networks within rectangle

$[a_{k-1}, a_k) \times [a_{l-1}, a_l)$

is approximately constant. That allows for putting the observed ratios between present and absent edges in direct relation. In this light, we formulate the following contingency table to keep track of homogeneity between the networks within rectangle

![]() $(k,l)$

:

$(k,l)$

:

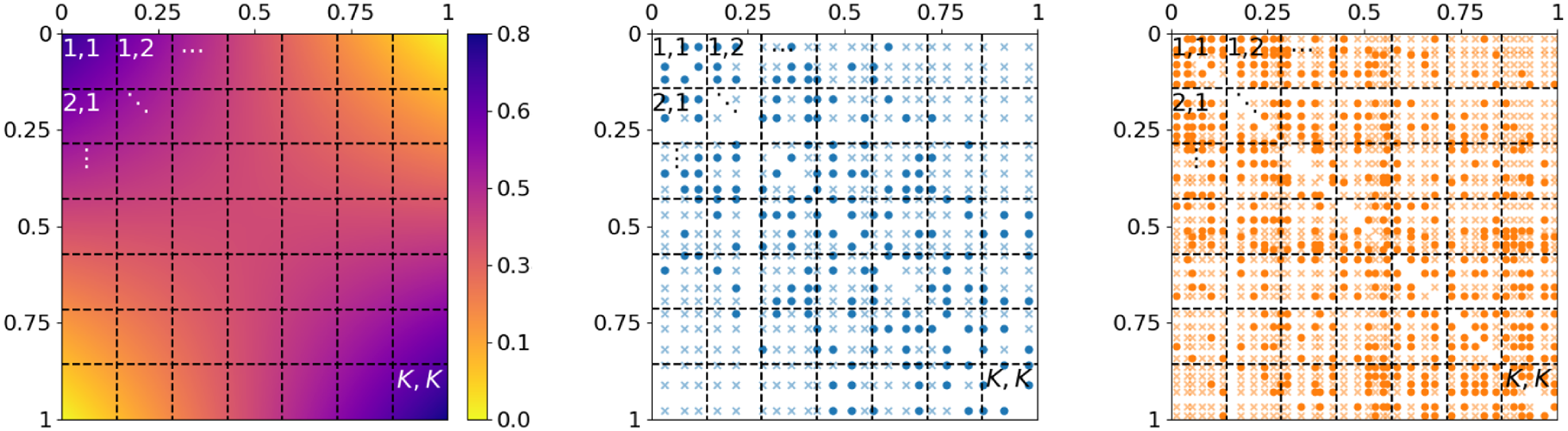

Figure 2. Dividing the unit square as domain of the graphon model into small segments for comparing network structure on a microscopic level. Left: division of

![]() $w^{\text{joint}}(\cdot ,\cdot )$

into approximately piecewise-constant rectangles. Middle and right: edge positions

$w^{\text{joint}}(\cdot ,\cdot )$

into approximately piecewise-constant rectangles. Middle and right: edge positions

![]() $(u_{i}^{(g)}, u_{j}^{(g)})^\top$

of two simulated networks with respect to

$(u_{i}^{(g)}, u_{j}^{(g)})^\top$

of two simulated networks with respect to

![]() $w^{\text{joint}}(\cdot ,\cdot )$

; weakly colored crosses and intensively colored circles represent absent and present edges, respectively. The two networks can be compared by pairwise contrasting the edge proportions within the labeled rectangles.

$w^{\text{joint}}(\cdot ,\cdot )$

; weakly colored crosses and intensively colored circles represent absent and present edges, respectively. The two networks can be compared by pairwise contrasting the edge proportions within the labeled rectangles.

Apparently, if

![]() $H_0$

is assumed to be true, we would expect the proportions of present edges,

$H_0$

is assumed to be true, we would expect the proportions of present edges,

![]() $d_{kl}^{(1)} / m_{kl}^{(1)}$

and

$d_{kl}^{(1)} / m_{kl}^{(1)}$

and

![]() $d_{kl}^{(2)} / m_{kl}^{(2)}$

, to be similar. This can be assessed by contrasting the observed numbers of edges with their expectations conditional on the given margin totals, which is in line with the construction of Fisher’s exact test on

$d_{kl}^{(2)} / m_{kl}^{(2)}$

, to be similar. This can be assessed by contrasting the observed numbers of edges with their expectations conditional on the given margin totals, which is in line with the construction of Fisher’s exact test on

![]() $2 \times 2$

contingency tables. In this regard, the theoretical random counterpart of

$2 \times 2$

contingency tables. In this regard, the theoretical random counterpart of

![]() $d_{kl}^{(1)}$

can be defined as

$d_{kl}^{(1)}$

can be defined as

\begin{align*} D_{kl}^{(1)} &= \sum \limits _{\substack {i,j \\ j \gt i}} Y_{ij}^{(1)} \unicode {x1D7D9}_{\{u_i^{(1)} \in [a_{k-1}, a_k)\}} \unicode {x1D7D9}_{\{u_j^{(1)} \in [a_{l-1}, a_l)\}}, \end{align*}

\begin{align*} D_{kl}^{(1)} &= \sum \limits _{\substack {i,j \\ j \gt i}} Y_{ij}^{(1)} \unicode {x1D7D9}_{\{u_i^{(1)} \in [a_{k-1}, a_k)\}} \unicode {x1D7D9}_{\{u_j^{(1)} \in [a_{l-1}, a_l)\}}, \end{align*}

for which under

![]() $H_0$

it approximately holds that

$H_0$

it approximately holds that

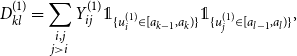

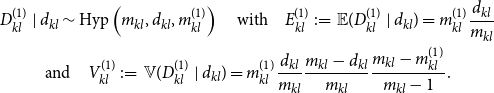

\begin{equation} \begin{gathered} D_{kl}^{(1)} \mid d_{kl} \sim \mbox{Hyp} \left ( m_{kl}, d_{kl}, m_{kl}^{(1)} \right ) \quad \mbox{with} \quad E_{kl}^{(1)} \,:\!=\, \mathbb{E} (D_{kl}^{(1)} \mid d_{kl} ) = m_{kl}^{(1)} \frac {d_{kl}}{m_{kl}} \\ \mbox{and} \quad V_{kl}^{(1)} \,:\!=\, {\mathbb{V}} (D_{kl}^{(1)} \mid d_{kl} ) = m_{kl}^{(1)} \frac {d_{kl}}{m_{kl}} \frac {m_{kl} - d_{kl}}{m_{kl}} \frac {m_{kl} - m_{kl}^{(1)}}{m_{kl} -1}. \end{gathered} \end{equation}

\begin{equation} \begin{gathered} D_{kl}^{(1)} \mid d_{kl} \sim \mbox{Hyp} \left ( m_{kl}, d_{kl}, m_{kl}^{(1)} \right ) \quad \mbox{with} \quad E_{kl}^{(1)} \,:\!=\, \mathbb{E} (D_{kl}^{(1)} \mid d_{kl} ) = m_{kl}^{(1)} \frac {d_{kl}}{m_{kl}} \\ \mbox{and} \quad V_{kl}^{(1)} \,:\!=\, {\mathbb{V}} (D_{kl}^{(1)} \mid d_{kl} ) = m_{kl}^{(1)} \frac {d_{kl}}{m_{kl}} \frac {m_{kl} - d_{kl}}{m_{kl}} \frac {m_{kl} - m_{kl}^{(1)}}{m_{kl} -1}. \end{gathered} \end{equation}

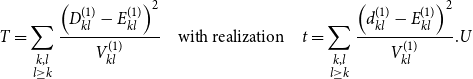

Based on these specifications, we define our test statistic as

\begin{equation} T = \sum \limits _{\substack {k,l \\ l \geq k}} \frac {\left ( D_{kl}^{(1)} - E_{kl}^{(1)} \right )^2}{ V_{kl}^{(1)} } \quad \mbox{with realization} \quad t = \sum \limits _{\substack {k,l \\ l \geq k}} \frac {\left ( d_{kl}^{(1)} - E_{kl}^{(1)} \right )^2}{ V_{kl}^{(1)} }.U \end{equation}

\begin{equation} T = \sum \limits _{\substack {k,l \\ l \geq k}} \frac {\left ( D_{kl}^{(1)} - E_{kl}^{(1)} \right )^2}{ V_{kl}^{(1)} } \quad \mbox{with realization} \quad t = \sum \limits _{\substack {k,l \\ l \geq k}} \frac {\left ( d_{kl}^{(1)} - E_{kl}^{(1)} \right )^2}{ V_{kl}^{(1)} }.U \end{equation}

Note that here we only sum over the squared standardized deviations of the first network as the hypergeometric distribution is symmetric, making the same information for the second network redundant. Moreover, summands for which

![]() $V_{kl}^{(1)} = 0$

—resulting from

$V_{kl}^{(1)} = 0$

—resulting from

![]() $m_{kl}^{(1)}$

,

$m_{kl}^{(1)}$

,

![]() $m_{kl} - m_{kl}^{(1)}$

,

$m_{kl} - m_{kl}^{(1)}$

,

![]() $d_{kl}$

, or

$d_{kl}$

, or

![]() $m_{kl} - d_{kl}$

being zero—carry no relevant information and thus can simply be omitted from the calculation. We can now easily simulate a sample of the distribution under

$m_{kl} - d_{kl}$

being zero—carry no relevant information and thus can simply be omitted from the calculation. We can now easily simulate a sample of the distribution under

![]() $H_0$

by drawing

$H_0$

by drawing

![]() $D_{kl}^{(1)} \mid d_{kl}$

according to (9) and calculating

$D_{kl}^{(1)} \mid d_{kl}$

according to (9) and calculating

![]() $T$

as in (10). From this, we can easily derive a critical value

$T$

as in (10). From this, we can easily derive a critical value

![]() $c_{1-\alpha }$

or

$c_{1-\alpha }$

or

![]() $p$

-value by comparing the realization

$p$

-value by comparing the realization

![]() $t$

of the simulated values. Moreover, asymptotic calculation is possible if

$t$

of the simulated values. Moreover, asymptotic calculation is possible if

![]() $m_{kl}^{(1)}$

is large,

$m_{kl}^{(1)}$

is large,

![]() $m_{kl}$

and

$m_{kl}$

and

![]() $d_{kl}$

are large compared to

$d_{kl}$

are large compared to

![]() $m_{kl}^{(1)}$

, and

$m_{kl}^{(1)}$

, and

![]() $d_{kl} / m_{kl}$

is not close to zero or one. Then

$d_{kl} / m_{kl}$

is not close to zero or one. Then

![]() $D_{kl}^{(1)}$

is approximately normally distributed and we can conclude that

$D_{kl}^{(1)}$

is approximately normally distributed and we can conclude that

Here, the test statistic is essentially the sum of squared (conditionally) independent random variables that approximately follow a standard normal distribution. This allows again to derive a critical value or a

![]() $p$

-value, respectively. The choice of an appropriate

$p$

-value, respectively. The choice of an appropriate

![]() $K$

applied for these calculations is discussed in Section C of the Appendix. Note that altogether the presented test procedure follows a conception similar to the one underlying the log-rank test for time-to-event data, which also includes the construction of a conditional distribution as in (9). Regarding the latter, it seems worth noting that conditioning on the margins in the corresponding contingency table does not imply adding any assumptions on

$K$

applied for these calculations is discussed in Section C of the Appendix. Note that altogether the presented test procedure follows a conception similar to the one underlying the log-rank test for time-to-event data, which also includes the construction of a conditional distribution as in (9). Regarding the latter, it seems worth noting that conditioning on the margins in the corresponding contingency table does not imply adding any assumptions on

![]() $w^{(1)}(\cdot , \cdot )$

or

$w^{(1)}(\cdot , \cdot )$

or

![]() $w^{(2)}(\cdot , \cdot )$

, or the structural relation between these two. It rather means to presume that the realization of these marginal totals carries no information about the relationship between

$w^{(2)}(\cdot , \cdot )$

, or the structural relation between these two. It rather means to presume that the realization of these marginal totals carries no information about the relationship between

![]() $w^{(1)}(\cdot , \cdot )$

and

$w^{(1)}(\cdot , \cdot )$

and

![]() $w^{(2)}(\cdot , \cdot )$

, equivalently as for Fisher’s test on contingency tables (Yates Reference Yates1984). We also refer to (Lang Reference Lang1996) for further discussion in this line.

$w^{(2)}(\cdot , \cdot )$

, equivalently as for Fisher’s test on contingency tables (Yates Reference Yates1984). We also refer to (Lang Reference Lang1996) for further discussion in this line.

Apparently, when conducting the test procedure on real-world networks, we obtain the joint graphon and the corresponding alignment of the networks by applying the estimation procedure described in Section 4. In the end, this enables us to appropriately approximate test statistic (10). In this context, it is important to consider the general behavior of the joint graphon estimation under the alternative, that is, if hypothesis (4) does not hold. We stress that the intuition of the estimation procedure is to align the two networks as well as possible with respect to some suitable joint graphon model. Consequently, the expectation of

![]() $T$

will be higher the more the true graphons

$T$

will be higher the more the true graphons

![]() $w^{(1)}(\cdot ,\cdot )$

and

$w^{(1)}(\cdot ,\cdot )$

and

![]() $w^{(2)}(\cdot ,\cdot )$

differ after “optimal” alignment. This clearly implies that the power of our test is higher for instances that deviate more strongly from the null hypothesis.

$w^{(2)}(\cdot ,\cdot )$

differ after “optimal” alignment. This clearly implies that the power of our test is higher for instances that deviate more strongly from the null hypothesis.

6. Applications

In this section, we showcase the applicability of the joint graphon estimation routine and the subsequent testing procedure. To give a comprehensive insight, this comprises both simulated and real-world networks. For an optimal estimation result and to best approximate test statistic (10), we repeat the estimation and testing procedure several times. In a modeling-oriented context, we would then typically pick the outcome with the lowest corrected

![]() $\operatorname {AIC}$

. However, since here the focus is on the statistical testing aspect, we choose the estimation result which leads to the highest

$\operatorname {AIC}$

. However, since here the focus is on the statistical testing aspect, we choose the estimation result which leads to the highest

![]() $p$

-value, assuming that this provides an optimal lower bound for the outcome under the (potentially existing) oracle network alignment.

$p$

-value, assuming that this provides an optimal lower bound for the outcome under the (potentially existing) oracle network alignment.

Moreover, applying test statistic (10) requires to specify

![]() $K$

and the sequence

$K$

and the sequence

![]() $a_0, \ldots , a_K$

. For the sake of simplicity and due to its minor impact, in the following applications we employ equidistant boundaries

$a_0, \ldots , a_K$

. For the sake of simplicity and due to its minor impact, in the following applications we employ equidistant boundaries

![]() $a_0, \ldots , a_K$

as an intuitive approach, that is, setting

$a_0, \ldots , a_K$

as an intuitive approach, that is, setting

![]() $a_k = k/K$

. In turn, under equidistant node positions, the consistent number of network entries per network and rectangle,

$a_k = k/K$

. In turn, under equidistant node positions, the consistent number of network entries per network and rectangle,

![]() $m_{kl}^{(g)}$

, can be easily deduced, and we choose

$m_{kl}^{(g)}$

, can be easily deduced, and we choose

![]() $K$

such that this parameter is “not too small” for both networks. For more details, see Section C of the Appendix.

$K$

such that this parameter is “not too small” for both networks. For more details, see Section C of the Appendix.

6.1 Simulation studies

6.1.1 Exemplary application to synthetic data

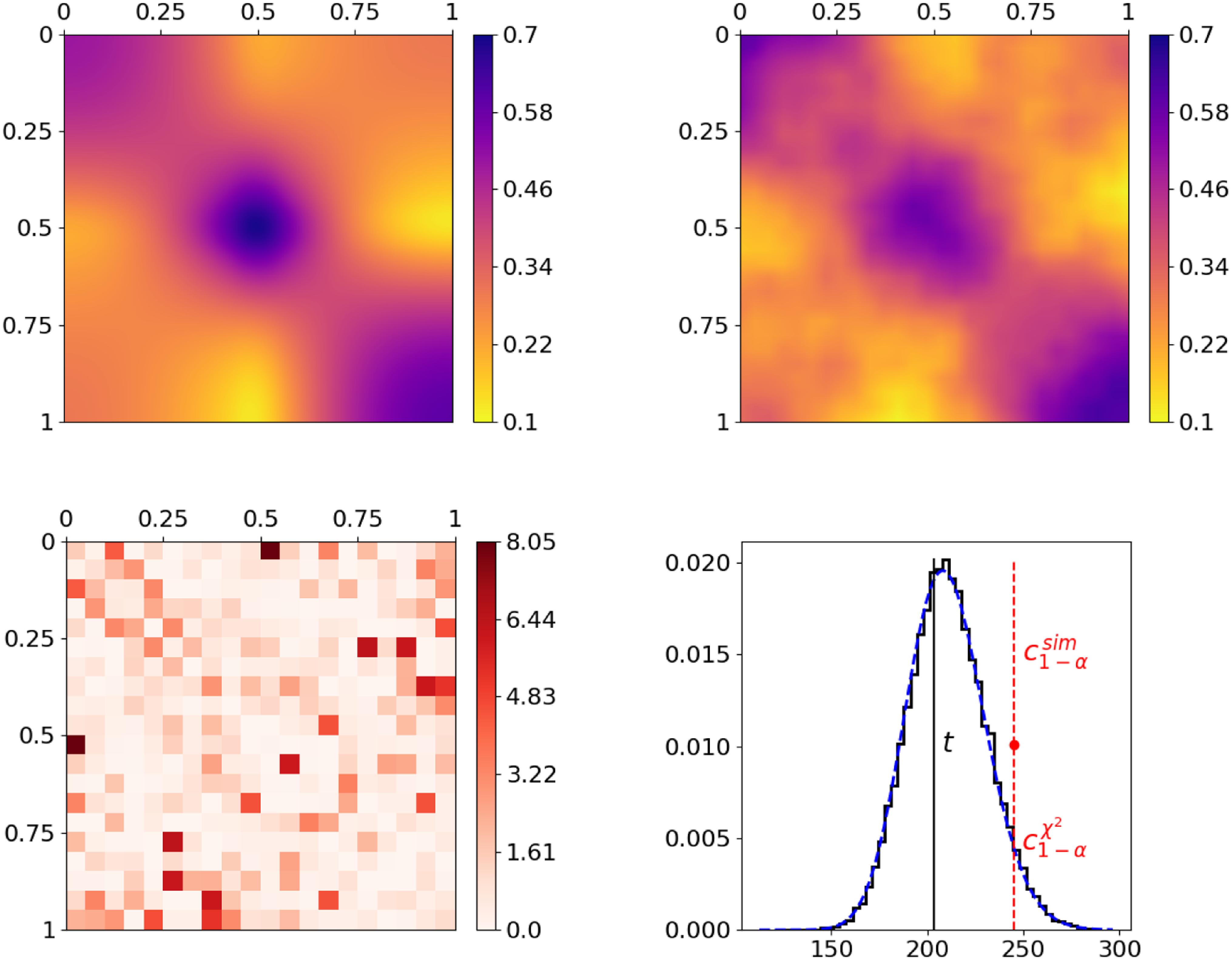

Figure 3. Joint graphon estimation for simulated networks with subsequent testing on equivalence of the underlying distribution models. The top row shows the true and the jointly estimated graphon on the left and right, respectively. The realizations of the terms of test statistic (10), representing the dissimilarities of the two networks per rectangle, are visualized at the bottom left, where

![]() $m_{kl}^{(g)} \geq 100$

for

$m_{kl}^{(g)} \geq 100$

for

![]() $k \neq l$

and

$k \neq l$

and

![]() $\geq 45$

otherwise (cf. contingency table on page 16). The final result of the test statistic (black solid vertical line) as well as its distribution under

$\geq 45$

otherwise (cf. contingency table on page 16). The final result of the test statistic (black solid vertical line) as well as its distribution under

![]() $H_0$

are illustrated at the bottom right, where the black solid step function and the blue dashed curve depict the simulated and the asymptotic chi-squared distribution, respectively. The red dashed vertical lines (separated by red dot) visualize the critical values at a significance level of

$H_0$

are illustrated at the bottom right, where the black solid step function and the blue dashed curve depict the simulated and the asymptotic chi-squared distribution, respectively. The red dashed vertical lines (separated by red dot) visualize the critical values at a significance level of

![]() $5\%$

, derived from the simulated (upper line) and the asymptotic distribution (lower line).

$5\%$

, derived from the simulated (upper line) and the asymptotic distribution (lower line).

To demonstrate the general capability of the joint graphon estimation and the performance of the subsequent testing procedure, we consider the graphon in the top left plot of Figure 3. Its formation is inspired by and can be interpreted as a stochastic blockmodel with smooth transitions between communities. Based on this ground-truth model specification, we simulate two networks with

![]() $N^{(1)} = 200$

and

$N^{(1)} = 200$

and

![]() $N^{(2)} = 300$

by making use of data-generating process (2). The underlying structure can then be recovered by applying the joint graphon estimation, where, for initialization, we make use of an uninformative random node positioning. This yields the graphon estimate at the top right, which apparently fully captures the structure of the ground-truth graphon. Based on the associated estimated node positions, we subsequently conduct the testing procedure on whether the underlying distributions are equivalent. To this end, we start with calculating the rectangle-wise differences according to the construction of test statistic (10). The results are depicted as a heat map at the bottom left plot of Figure 3. This reveals that the difference in the local edge density is rather low to moderate in most rectangles, whereas it is distinctly higher in a few others. Aggregating these differences yields a test statistic of

$N^{(2)} = 300$

by making use of data-generating process (2). The underlying structure can then be recovered by applying the joint graphon estimation, where, for initialization, we make use of an uninformative random node positioning. This yields the graphon estimate at the top right, which apparently fully captures the structure of the ground-truth graphon. Based on the associated estimated node positions, we subsequently conduct the testing procedure on whether the underlying distributions are equivalent. To this end, we start with calculating the rectangle-wise differences according to the construction of test statistic (10). The results are depicted as a heat map at the bottom left plot of Figure 3. This reveals that the difference in the local edge density is rather low to moderate in most rectangles, whereas it is distinctly higher in a few others. Aggregating these differences yields a test statistic of

![]() $203.2$

as depicted by the black solid vertical line at the bottom right. Contrasting this result with the simulated

$203.2$

as depicted by the black solid vertical line at the bottom right. Contrasting this result with the simulated

![]() $95\%$

quantile of the distribution of

$95\%$

quantile of the distribution of

![]() $T$

under

$T$

under

![]() $H_0$

as the critical value (red dashed vertical line) yields no rejection. Hence, the underlying distributions of the two networks do not significantly differ with respect to a significance level of

$H_0$

as the critical value (red dashed vertical line) yields no rejection. Hence, the underlying distributions of the two networks do not significantly differ with respect to a significance level of

![]() $5\%$

. As a final remark with regard to the bottom right plot, the simulated distribution of

$5\%$

. As a final remark with regard to the bottom right plot, the simulated distribution of

![]() $T$

(black solid step function) and its theoretical approximation (blue dashed curve)—both relying on the assumption of

$T$

(black solid step function) and its theoretical approximation (blue dashed curve)—both relying on the assumption of

![]() $H_0$

being true—are very close to one another. Consequently, they also provide very similar critical values, namely

$H_0$

being true—are very close to one another. Consequently, they also provide very similar critical values, namely

![]() $243.6$

and

$243.6$

and

![]() $244.8$

, respectively. This demonstrates that asymptotic distribution (11) represents a good approximation.

$244.8$

, respectively. This demonstrates that asymptotic distribution (11) represents a good approximation.

6.1.2 Performance analysis under

$H_0$

$H_0$

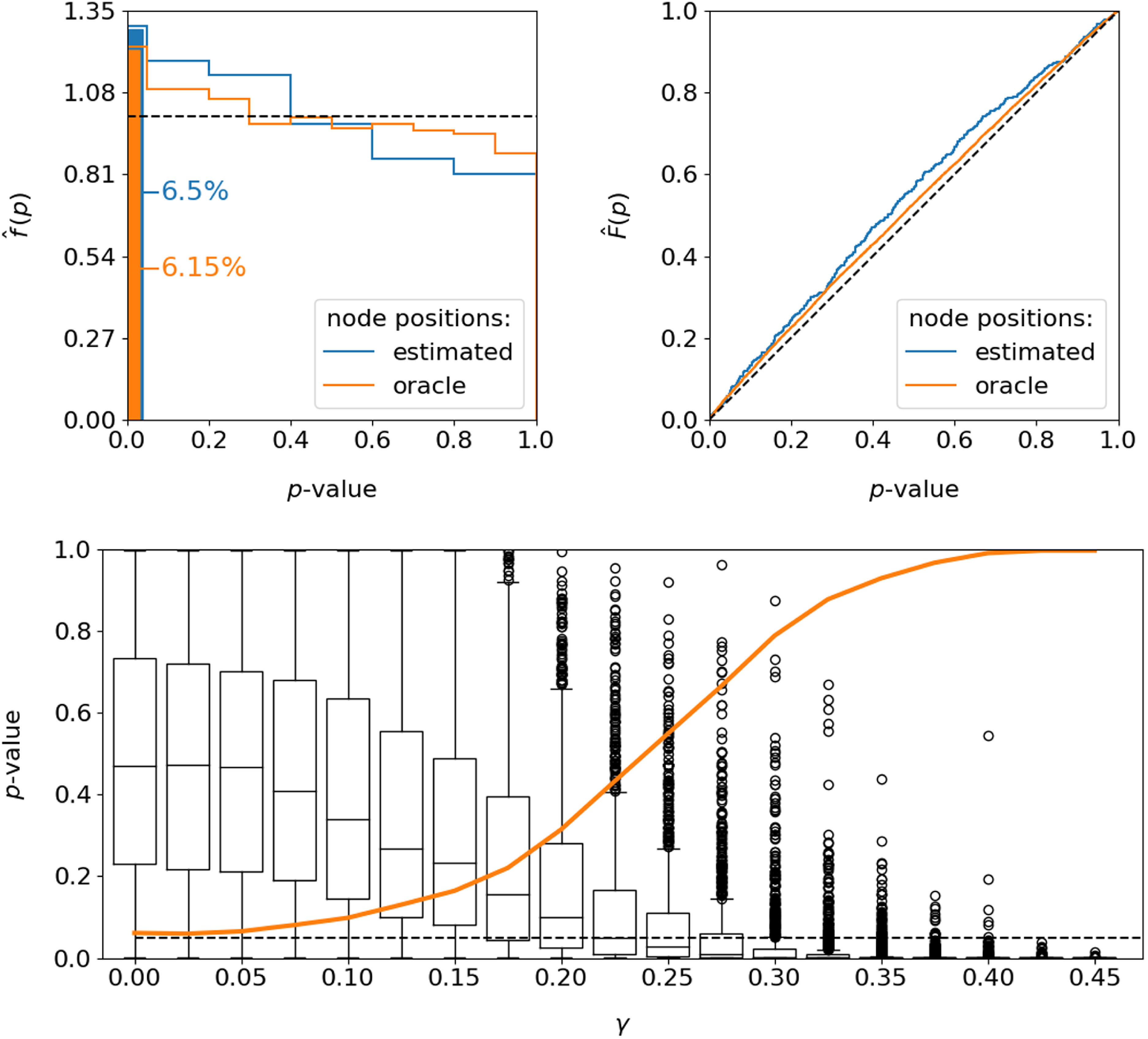

To evaluate the performance of the testing procedure in this example more profoundly, we repeat the above proceeding

![]() $400$

times, with newly simulated networks in each trial (remaining with

$400$

times, with newly simulated networks in each trial (remaining with

![]() $N^{(1)} = 200$

and

$N^{(1)} = 200$

and

![]() $N^{(2)} = 300$

). Note that we run the estimation procedure always ten times (with different random node positions as varying initialization) and finally pick the highest

$N^{(2)} = 300$

). Note that we run the estimation procedure always ten times (with different random node positions as varying initialization) and finally pick the highest

![]() $p$

-value as the actual result for the given network pair. These repetitions already provide a broad insight into the method’s performance under the given setting. An even more extensive evaluation becomes possible when, in contrast to the proceeding above, the testing procedure is performed on the basis of the oracle node positions. These positions already describe an optimal network alignment and can thus be used directly as a substitute for

$p$

-value as the actual result for the given network pair. These repetitions already provide a broad insight into the method’s performance under the given setting. An even more extensive evaluation becomes possible when, in contrast to the proceeding above, the testing procedure is performed on the basis of the oracle node positions. These positions already describe an optimal network alignment and can thus be used directly as a substitute for

![]() $(\hat {{\boldsymbol{u}}}^{(1)},\hat {{\boldsymbol{u}}}^{(2)})$

that will even lead to the smallest test statistic possible. Moreover, it allows us to dramatically reduce the computational burden since it releases us from the preceding (computationally expensive) model estimation. As a consequence, we are able to increase the number of conducted tests to

$(\hat {{\boldsymbol{u}}}^{(1)},\hat {{\boldsymbol{u}}}^{(2)})$

that will even lead to the smallest test statistic possible. Moreover, it allows us to dramatically reduce the computational burden since it releases us from the preceding (computationally expensive) model estimation. As a consequence, we are able to increase the number of conducted tests to

![]() $10,000$

. From these two repetition studies (using either

$10,000$

. From these two repetition studies (using either

![]() $\hat {{\boldsymbol{u}}}^{(g)}$

or

$\hat {{\boldsymbol{u}}}^{(g)}$

or

![]() ${\boldsymbol{u}}^{(g)}$

), we obtain rejection rates of

${\boldsymbol{u}}^{(g)}$

), we obtain rejection rates of

![]() $6.5\%$

and

$6.5\%$

and

![]() $6.15\%$

under the estimated and oracle node positioning, respectively. That means the test is slightly overconfident relative to the nominal significance level of

$6.15\%$

under the estimated and oracle node positioning, respectively. That means the test is slightly overconfident relative to the nominal significance level of

![]() $5\%$

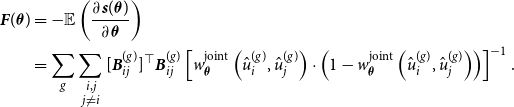

. The test is not exact in any way, as it is built upon the asymptotic statement (11) which explains the small bias of the test. The top row of Figure 4 shows additionally the empirical distributions of the observed

$5\%$

. The test is not exact in any way, as it is built upon the asymptotic statement (11) which explains the small bias of the test. The top row of Figure 4 shows additionally the empirical distributions of the observed

![]() $p$

-values, illustrated as densities (left) and cumulative distribution functions (right). In accordance with the mildly inflated rejection rates, this exhibits a slight tendency to underestimate the

$p$

-values, illustrated as densities (left) and cumulative distribution functions (right). In accordance with the mildly inflated rejection rates, this exhibits a slight tendency to underestimate the

![]() $p$

-value, i.e. interpreting the discrepancy as too high in distributional terms.

$p$

-value, i.e. interpreting the discrepancy as too high in distributional terms.

Figure 4. Performance of the testing procedure with regard to the resulting

![]() $p$

-value; results are simulation-based. Top: empirical distribution of the

$p$

-value; results are simulation-based. Top: empirical distribution of the

![]() $p$

-value under

$p$

-value under

![]() $H_0$

, illustrated as density (left, including a depiction of rejection rates at significance level of 5%) and cumulative distribution function (right). The black dashed lines illustrate the desired distributional behavior of an optimal test. Number of repetitions for estimated / oracle node positions:

$H_0$

, illustrated as density (left, including a depiction of rejection rates at significance level of 5%) and cumulative distribution function (right). The black dashed lines illustrate the desired distributional behavior of an optimal test. Number of repetitions for estimated / oracle node positions:

![]() $400$

/

$400$

/

![]() $10,000$

. Bottom: distribution of the

$10,000$

. Bottom: distribution of the

![]() $p$

-value under

$p$

-value under

![]() $H_1$

and the usage of oracle node positions (in box plot format); based on

$H_1$

and the usage of oracle node positions (in box plot format); based on

![]() $1,000$

repetitions each. The x-axis illustrates different settings according to formulation (12) (higher value of

$1,000$

repetitions each. The x-axis illustrates different settings according to formulation (12) (higher value of

![]() $\gamma$

implies stronger deviation from

$\gamma$

implies stronger deviation from

![]() $H_0$

). The black dashed horizontal line represents the

$H_0$

). The black dashed horizontal line represents the

![]() $5\%$

significance level, and the orange curve illustrates the corresponding power.

$5\%$

significance level, and the orange curve illustrates the corresponding power.

6.1.3 Performance analysis under

$H_1$

$H_1$

Conclusively, we are interested in evaluating the test performance under a false null hypothesis, which apparently requires formulating a suitable alternative. To this end, we “shrink” the heterogeneity within the graphon such that the present structure becomes less pronounced. The resulting graphon specification consequently tends more towards an Erdős–Rényi model, with the global density remaining unchanged. To be precise, based on

![]() $w^{(1)}(\cdot ,\cdot )$

, we formulate

$w^{(1)}(\cdot ,\cdot )$

, we formulate

with

![]() $\gamma \in [0,1]$

and

$\gamma \in [0,1]$

and

![]() $\bar {w}^{(1)} = \iint w^{(1)}(u,v) \mathop {}\!\mathrm{d} u \mathop {}\!\mathrm{d} v$

. Apparently, increasing the mixing parameter

$\bar {w}^{(1)} = \iint w^{(1)}(u,v) \mathop {}\!\mathrm{d} u \mathop {}\!\mathrm{d} v$

. Apparently, increasing the mixing parameter

![]() $\gamma$

leads to a stronger deviation from

$\gamma$

leads to a stronger deviation from

![]() $H_0$

. At the same time, this setting guarantees an optimal alignment of

$H_0$

. At the same time, this setting guarantees an optimal alignment of

![]() $w^{(1)}(\cdot ,\cdot )$

and

$w^{(1)}(\cdot ,\cdot )$

and

![]() $w^{(2)}(\cdot ,\cdot )$

, meaning that there exists no rearrangement of

$w^{(2)}(\cdot ,\cdot )$

, meaning that there exists no rearrangement of

![]() $w^{(2)}(\cdot ,\cdot )$

that is closer to

$w^{(2)}(\cdot ,\cdot )$

that is closer to

![]() $w^{(1)}(\cdot ,\cdot )$

than specification (12). For this experiment, we again choose

$w^{(1)}(\cdot ,\cdot )$

than specification (12). For this experiment, we again choose

![]() $N^{(1)} = 200$

and

$N^{(1)} = 200$

and

![]() $N^{(2)} = 300$

. Moreover, here we rely exclusively on the oracle node positions. This provides a lower bound of the power since the rejection rate can be expected to be higher when using estimated node positions instead (cf. previous analysis under

$N^{(2)} = 300$

. Moreover, here we rely exclusively on the oracle node positions. This provides a lower bound of the power since the rejection rate can be expected to be higher when using estimated node positions instead (cf. previous analysis under

![]() $H_0$

). The results for this setup are presented in the bottom plot of Figure 4, where the distribution of the resulting

$H_0$

). The results for this setup are presented in the bottom plot of Figure 4, where the distribution of the resulting

![]() $p$

-value is illustrated for different settings of

$p$

-value is illustrated for different settings of

![]() $\gamma$

. The orange curve additionally visualizes the resulting power, i.e. the proportion of cases with

$\gamma$

. The orange curve additionally visualizes the resulting power, i.e. the proportion of cases with

![]() $p \lt 0.05$

. These results clearly show that the probability of detecting the false null hypothesis monotonically increases as the parameter

$p \lt 0.05$

. These results clearly show that the probability of detecting the false null hypothesis monotonically increases as the parameter

![]() $\gamma$

gets larger, which underpins the appropriateness of our test procedure.

$\gamma$

gets larger, which underpins the appropriateness of our test procedure.

Overall, the obtained simulation results demonstrate that the elaborated estimation and testing procedure yields reasonable results for assessing structural differences between networks. Building upon these findings, we next want to investigate the method’s performance on real-world data.

6.2 Real-world examples

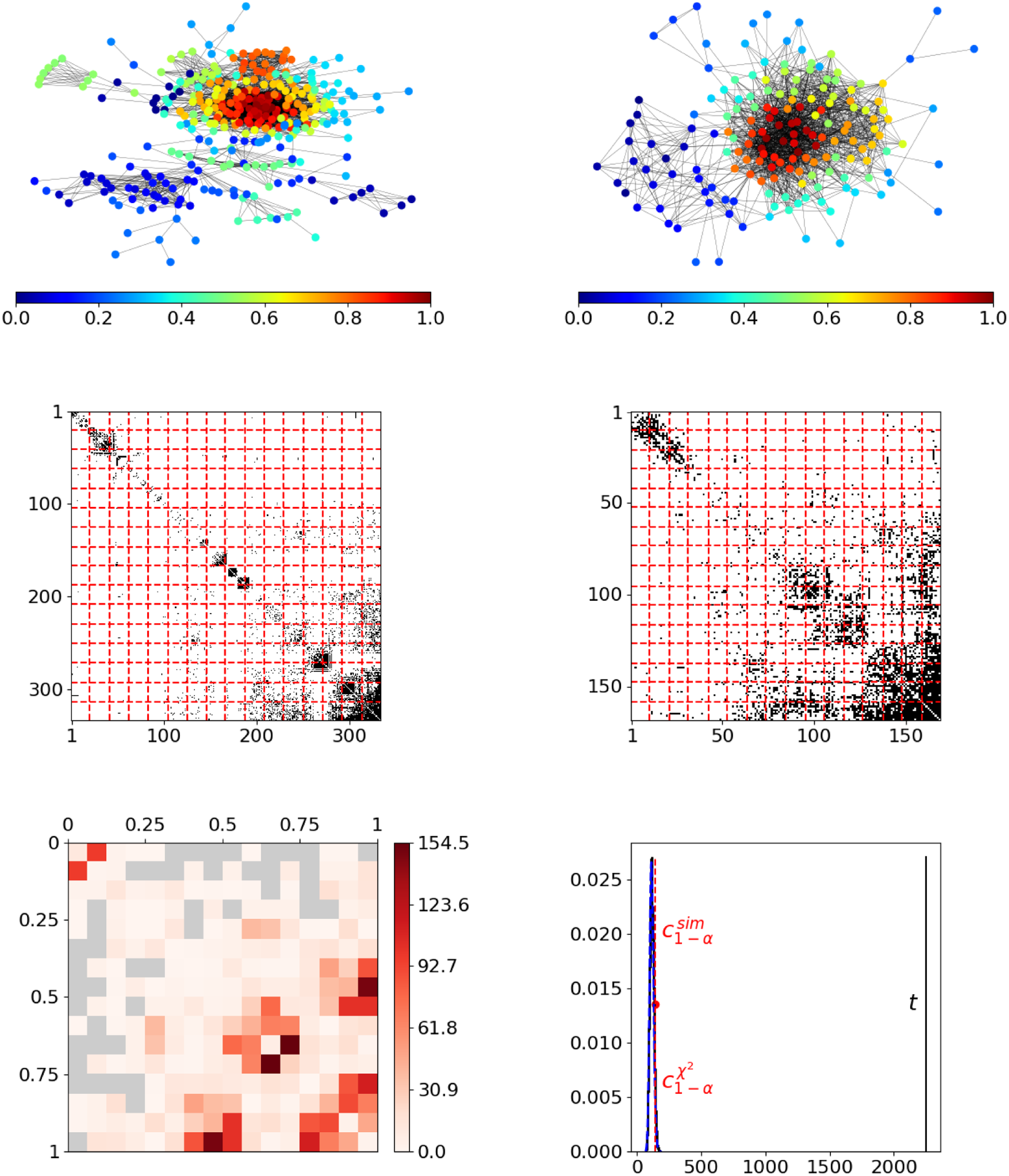

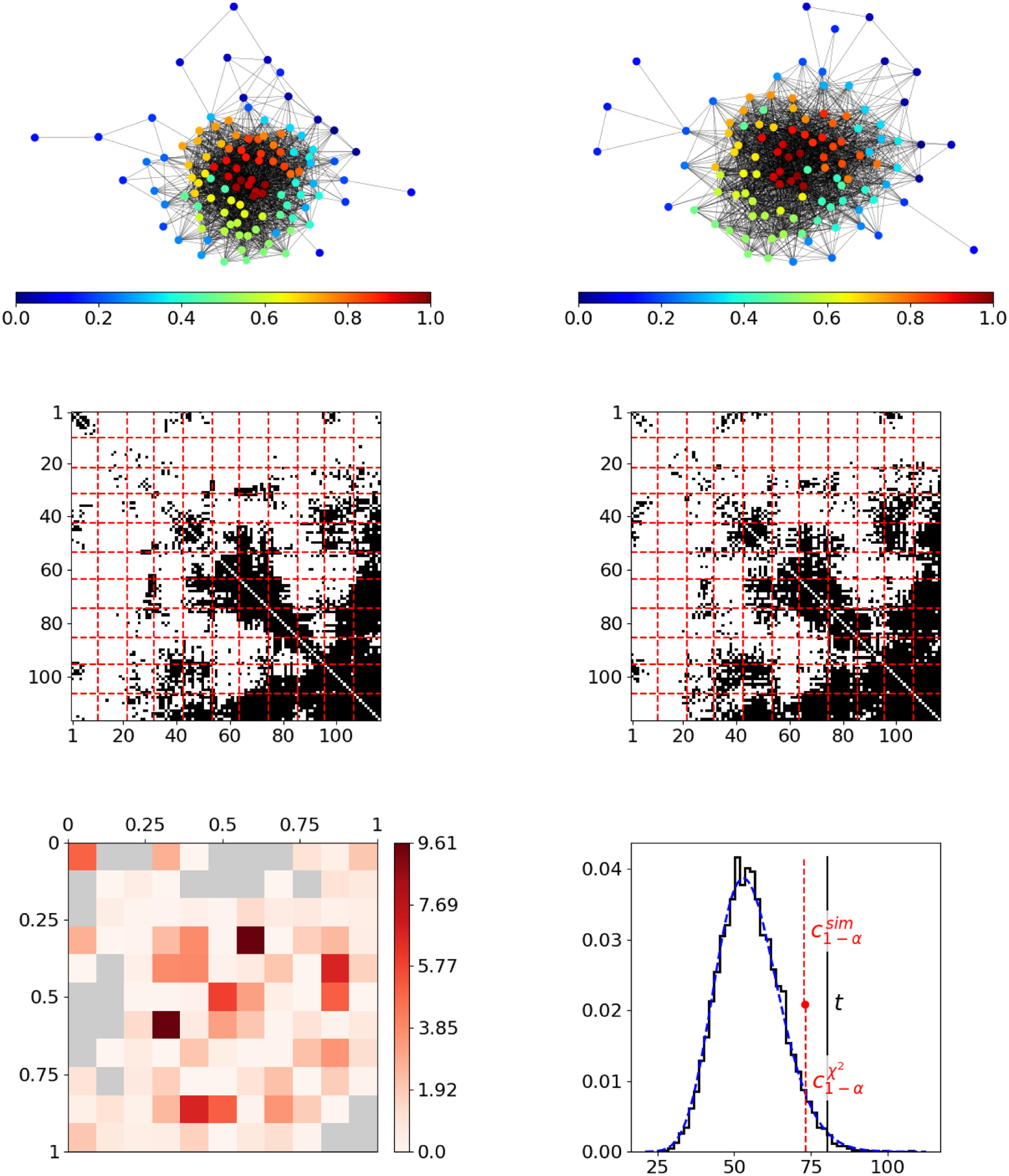

6.2.1 Facebook ego networks

As a first real-world example, we consider two Facebook ego networks, with each describing the friendship behavior between all friends of one user (“ego”). These networks have been assembled by Leskovec and McAuley (Reference Leskovec and McAuley2012) and are publicly available on the Stanford Large Network Dataset Collection (Leskovec and Krevl, Reference Leskovec and Krevl2014). The two ego networks consist of

![]() $333$

and

$333$

and

![]() $168$

individuals, respectively, where the ego nodes are not included. An illustration of these networks is given in the top row of Figure 5, with nodes being colored according to resulting positions. In both networks, these final estimated node positions appear to be in line with the given network structure in terms of reflecting the nodes’ embedding within the network. Moreover, they seem to be aligned across networks. For example, in both networks, the reddish nodes represent the rather centric individuals, whereas the nodes from the dark blue spectrum constitute a moderately interconnected branch that is more detached from the rest of the network. However, the segment-wise differences depicted at the bottom left in Figure 5 exhibit some strong deviations. This can be clearly traced back to the blockwise division of the adjacency matrices as it results from partitioning the domain of edge positions (middle row). The aggregated differences ultimately result in a test statistic that is far from the sector of plausible values under the null hypothesis, as illustrated at the bottom right. Consequently, we can conclude that the structural behavior in the two networks differs significantly.

$168$