1. Introduction

Experimental measurements of fluid flows inside or around an object often produce velocity images that contain noise. These images may be post-processed either to reveal obscured flow patterns or to extract a quantity of interest (e.g. pressure or wall shear stress). For example, magnetic resonance velocimetry (MRV) (Fukushima 1999; Mantle & Sederman 2003; Elkins & Alley 2007; Markl et al. 2012; Demirkiran et al. 2021) can measure all three components of a time-varying velocity field, but the measurements become increasingly noisy as the spatial resolution is increased. To achieve an image of acceptable signal-to-noise ratio (SNR), repeated scans are often averaged, leading to long signal acquisition times. To address that problem, fast acquisition protocols (pulse sequences) can be used, but these may be difficult to implement and can lead to artefacts depending on the magnetic relaxation properties and the magnetic field homogeneity of the system studied. Another way to accelerate signal acquisition is to use sparse sampling techniques in conjunction with a reconstruction algorithm. The latter approach is an active field of research, commonly referred to as compressed sensing (Donoho 2006; Lustig, Donoho & Pauly 2007; Benning et al. 2014; Corona et al. 2021; Peper et al. 2020). Compressed sensing (CS) algorithms exploit a priori knowledge about the structure of the data, which is encoded in a regularization norm (e.g. total variation, wavelet bases), but without considering the physics of the problem. Even though the present study concerns the reconstruction of fully-sampled, noisy MRV images, the method that we present here can be applied to sparsely sampled MRV data.

For images depicting fluid flow, a priori knowledge can come in the form of a Navier–Stokes (N–S) problem. The problem of reconstructing and segmenting a flow image can then be expressed as a generalized inverse Navier–Stokes problem whose flow domain, boundary conditions and model parameters have to be inferred for the modelled velocity to approximate the measured velocity in an appropriate metric space. This approach not only produces a reconstruction that is an accurate fluid flow inside or around the object (a solution to a Navier–Stokes problem), but also provides additional physical knowledge (e.g. pressure), which is otherwise difficult to measure. Inverse Navier–Stokes problems have been intensively studied during the last decade, mainly enabled by the increase of available computing power. Recent applications in fluid mechanics range from the forcing inference problem (Hoang, Law & Stuart 2014) to the reconstruction of scalar image velocimetry (SIV) (Gillissen et al. 2018; Sharma et al. 2019) and particle image velocimetry (PIV) (Gillissen, Bouffanais & Yue 2019) signals, as well as the identification of optimal sensor arrangements (Mons, Chassaing & Sagaut 2017; Verma et al. 2019). Regularization methods that can be used for model parameters are reviewed by Stuart (2010) from a Bayesian perspective and by Benning & Burger (2018) from a variational perspective. The well-posedness of Bayesian inverse Navier–Stokes problems is addressed by Cotter et al. (2009).

Recently, Koltukluoğlu & Blanco (2018) treated the reduced inverse Navier–Stokes problem of finding only the Dirichlet boundary condition for the inlet velocity that matches the modelled velocity field to MRV data for a steady three-dimensional (3-D) flow in a glass replica of the human aorta. They measure the model-data discrepancy using the $L^2$-norm and introduce additional variational regularization terms for the Dirichlet boundary condition. The same formulation is extended to periodic flows by Koltukluoğlu (2019) and Koltukluoğlu, Cvijetić & Hiptmair (2019), using the harmonic balance method for the temporal discretization of the Navier–Stokes problem. Funke et al. (2019) address the problem of inferring both the inlet velocity (Dirichlet) boundary condition and the initial condition, for unsteady blood flows and four-dimensional (4-D) MRV data, with applications to cerebral aneurysms. We note that the above studies consider rigid boundaries and require, a priori, an accurate and time-averaged geometric representation of the blood vessel.

To find the shape of the flow domain, e.g. the blood vessel boundaries, computed tomography (CT) or magnetic resonance angiography (MRA) is often used. The acquired image is then reconstructed, segmented and smoothed. This process not only requires substantial effort and the design of an additional experiment (e.g. CT, MRA), but it also introduces geometric uncertainties (Morris et al. 2016; Sankaran et al. 2016), which, in turn, affect the predictive confidence of arterial wall shear stress distributions and their mappings (Katritsis et al. 2007; Sotelo et al. 2016). For example, Funke et al. (2019) report discrepancies between the modelled and the measured velocity fields near the flow boundaries, which they suspect are caused by geometric errors introduced during the segmentation process. In general, the assumption of rigid boundaries implies either that a time-averaged geometry has to be used or that an additional experiment (e.g. CT, MRA) has to be conducted to register the moving boundaries to the flow measurements.

A more consistent approach to this problem is to treat the blood vessel geometry as an unknown when solving the generalized inverse Navier–Stokes problem. In this way, the inverse Navier–Stokes problem simultaneously reconstructs and segments the velocity fields and can better adapt to the MRV experiment by correcting the geometric errors and improving the reconstruction.

In this study, we address the problem of simultaneous velocity field reconstruction and boundary segmentation by formulating a generalized inverse Navier–Stokes problem, whose flow domain, boundary conditions and model parameters are all considered as unknown. To regularize the problem, we use a Bayesian framework and Gaussian measures in Hilbert spaces. This further allows us to estimate the posterior Gaussian distributions of the unknowns using a quasi-Newton method, which has not yet been addressed for this type of problem. We provide an algorithm for the solution of this generalized inverse Navier–Stokes problem, and demonstrate it on synthetic images of two-dimensional (2-D) steady flows and real MRV images of a steady axisymmetric flow.

This paper consists of two parts. In § 2, we formulate the generalized inverse Navier–Stokes problem and an algorithm that solves it. In § 3, we test the method using both synthetic and real MRV velocity images and describe the set-up of the MRV experiment.

2. An inverse Navier–Stokes problem for noisy flow images

In this section, we formulate the generalized inverse Navier–Stokes problem and provide an algorithm for its solution. In what follows, $L^2(\varOmega)$ denotes the space of square-integrable functions in $\varOmega$, with inner product $\big\langle{\cdot},{\cdot}\big\rangle$ and norm $\big\lVert{\cdot}\big\rVert_{L^2(\varOmega)}$, and $H^k(\varOmega)$ the space of square-integrable functions with $k$ square-integrable derivatives in $\varOmega$. For a given covariance operator, $\mathcal{C}$, we also define the covariance-weighted $L^2$ spaces, endowed with the inner product ${\big\langle{\cdot},{\cdot}\big\rangle_\mathcal{C} := \big\langle{\cdot},\mathcal{C}^{-1}{\cdot}\big\rangle}$, which generates the norm $\big\lVert{\cdot}\big\rVert_{\mathcal{C}}$. The Euclidean norm in the space of real numbers $\mathbb{R}^n$ is denoted by $\lvert{\cdot}\rvert_{\mathbb{R}^n}$. We use the superscript $({\cdot})^\star$ to denote a measurement, $({\cdot})^\circ$ to denote a reconstruction and $({\cdot})^\bullet$ to denote the ground truth.

2.1. The inverse Navier–Stokes problem

An $n$-dimensional velocimetry experiment usually provides noisy flow velocity images on a domain $I \subset \mathbb{R}^n$, depicting the measured flow velocity $\boldsymbol{u}^\star$ inside an object $\varOmega \subset I$ with boundary $\partial\varOmega = \varGamma \cup \varGamma_i \cup \varGamma_o$ (figure 1). An appropriate model is the Navier–Stokes problem

\begin{equation} \left.
\begin{array}{cl@{}}
\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla}\boldsymbol{u}-\nu{\Delta}\boldsymbol{u} + \boldsymbol{\nabla} p = \boldsymbol{0} & \text{in}\ \varOmega,\\
\boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{u} = 0 & \text{in}\ \varOmega,\\
\boldsymbol{u} = \boldsymbol{0} & \text{on}\ \varGamma,\\
\boldsymbol{u} = \boldsymbol{g}_i & \text{on}\ \varGamma_i,\\
-\nu\partial_{\boldsymbol{\nu}}\boldsymbol{u}+p\boldsymbol{\nu} = \boldsymbol{g}_o & \text{on}\ \varGamma_o
\end{array}
\right\} \end{equation}

where $\boldsymbol{u}$ is the velocity, $p \unicode{x27FB} p/\rho$ is the reduced pressure, $\rho$ is the density, $\nu$ is the kinematic viscosity, $\boldsymbol{g}_i$ is the Dirichlet boundary condition at the inlet $\varGamma_i$, $\boldsymbol{g}_o$ is the natural boundary condition at the outlet $\varGamma_o$, $\boldsymbol{\nu}$ is the unit normal vector on $\partial\varOmega$ and ${\partial_{\boldsymbol{\nu}}\equiv \boldsymbol{\nu}\boldsymbol{\cdot}\boldsymbol{\nabla}}$ is the normal derivative.

Figure 1. Given the images of a measured velocity field $\boldsymbol{u}^\star$, we solve an inverse Navier–Stokes problem to infer the boundary $\varGamma$ (or $\partial\varOmega$), the kinematic viscosity and the inlet velocity profile on $\varGamma_i$. The solution to this inverse problem is a reconstructed velocity field $\boldsymbol{u}^\circ$, from which the noise and the artefacts $(\boldsymbol{u}^\star-\mathcal{S}\boldsymbol{u}^\circ)$ have been filtered out.

We denote the data space by $\boldsymbol{D}$ and the model space by $\boldsymbol{M}$, and assume that both spaces are subspaces of $\boldsymbol{L}^2$. In the 2-D case, $\boldsymbol{u}^\star = (u^\star_x,u^\star_y)$ and we introduce the covariance operator
\begin{equation} \mathcal{C}_{\boldsymbol{u}} = \begin{pmatrix} \sigma^2_{u_x}\mathrm{I} & 0 \\ 0 & \sigma^2_{u_y}\mathrm{I} \end{pmatrix}, \end{equation}

where $\sigma^2_{u_x},\sigma^2_{u_y}$ are the Gaussian noise variances of $u^\star_x,u^\star_y$, respectively, and $\mathrm{I}$ is the identity operator. The discrepancy between the measured velocity field ${\boldsymbol{u}^\star} \in \boldsymbol{D}$ and the modelled velocity field $\boldsymbol{u} \in \boldsymbol{M}$ is measured on the data space $\boldsymbol{D}$ using the reconstruction error functional
\begin{equation} \mathscr{E}(\boldsymbol{u}) \equiv \tfrac{1}{2}\big\lVert \boldsymbol{u}^\star - \mathcal{S}\boldsymbol{u} \big\rVert^2_{\mathcal{C}_{\boldsymbol{u}}}, \end{equation}

where $\mathcal{S}: \boldsymbol{M}\to \boldsymbol{D}$ is the $L^2$-projection from the model space $\boldsymbol{M}$ to the data space $\boldsymbol{D}$. (Since the discretized space consists of bilinear quadrilateral finite elements (see § 2.7), this projection is a linear interpolation.)
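As a rough illustration of the reconstruction error functional described above, the following Python sketch evaluates a discrete, one-dimensional analogue: a sum of squared residuals between the measured and the projected model velocity, weighted by the inverse noise variance of each component. It is not the authors' finite-element implementation. The function name `reconstruction_error`, the use of `np.interp` as a stand-in for the projection $\mathcal{S}$, and the omission of quadrature weights are all assumptions made for brevity.

```python
import numpy as np

def reconstruction_error(u_star, u_model, x_model, x_data, sigma):
    """Discrete analogue of E(u) = 1/2 * || u_star - S u ||^2 in the C_u-weighted norm.

    u_star  : (n_components, n_data) measured velocity on the data grid
    u_model : (n_components, n_model) modelled velocity on the model grid
    sigma   : (n_components,) noise standard deviation of each component
    Assumption: S is 1-D linear interpolation and quadrature weights are dropped.
    """
    total = 0.0
    for k in range(u_star.shape[0]):                 # loop over velocity components
        Su = np.interp(x_data, x_model, u_model[k])  # S u: model grid -> data grid
        residual = u_star[k] - Su                    # discrepancy in data space
        total += 0.5 * np.sum(residual**2) / sigma[k]**2
    return total

# Hypothetical toy data: a coarse model grid and a finer data grid.
x_model = np.linspace(0.0, 1.0, 5)
x_data = np.linspace(0.0, 1.0, 9)
u_model = np.vstack([x_model**2, 1.0 - x_model])
sigma = np.array([0.1, 0.2])
rng = np.random.default_rng(0)
u_star = np.vstack([np.interp(x_data, x_model, u) for u in u_model])
u_star = u_star + sigma[:, None] * rng.normal(size=u_star.shape)
print(reconstruction_error(u_star, u_model, x_model, x_data, sigma))
```

Dividing each component by its own variance means that a noisier velocity component contributes less to the misfit, which is the role the covariance operator plays in the weighted norm.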

Our goal is to infer the unknown parameters of the Navier–Stokes problem (2.1) such that the model velocity $\boldsymbol{u}$ approximates the noisy measured velocity $\boldsymbol{u}^\star$ in the covariance-weighted $L^2$-metric defined by $\mathscr{E}$. In the general case, the unknown model parameters of (2.1) are the shape of $\varOmega$, the kinematic viscosity $\nu$ and the boundary conditions $\boldsymbol{g}_i,\boldsymbol{g}_o$. This inverse Navier–Stokes problem leads to the nonlinearly constrained optimization problem
\begin{equation} \text{find}\quad \boldsymbol{u}^\circ \equiv \underset{\varOmega,\boldsymbol{x}}{\mathrm{argmin}}\ \mathscr{E}(\boldsymbol{u}) \quad \text{such that}\quad \boldsymbol{u}\ \text{satisfies (2.1)}, \end{equation}

where $\boldsymbol{u}^\circ$ is the reconstructed velocity field and $\boldsymbol{x}=(\boldsymbol{g}_i,\boldsymbol{g}_o,\nu)$. Like most inverse problems, (2.4) is ill-posed and hard to solve. To alleviate the ill-posedness of the problem, we need to restrict our search for the unknowns $(\varOmega,\boldsymbol{x})$ to function spaces of sufficient regularity.

2.2. Regularization

If $x(t) \in L^2(\mathbb{R})$ is an unknown parameter, one way to regularize the inverse problem (2.4) is to search for minimizers of the augmented functional $\mathscr{J} \equiv \mathscr{E} + \mathscr{R}$, where

\begin{equation} \mathscr{R}(x) = \sum_{j=0}^k \int_{\mathbb{R}} \alpha_j \left|\partial_x^j(x-\bar{x})\right|^2 \end{equation}


is a regularization norm for a given (and fixed) prior assumption $\bar{x}(t) \in H^k(\mathbb{R})$, weights ${\alpha_j\in\mathbb{R}}$ and positive integer $k$. This simple idea can be quite effective because by minimizing $\mathscr{R}$, we force $x$ to lie in a subspace of $L^2$ having higher regularity, namely $H^k$, and as close to the prior value $\bar{x}$ as the weights $\alpha_j$ allow. (The regularization term, given by (2.5), can be further extended to fractional Hilbert spaces by defining the norm $\big\lVert x \big\rVert_{H^s(\mathbb{R})} := \big\lVert (1+\lvert t \rvert^s)\mathcal{F}x \big\rVert_{L^2(\mathbb{R})}$ for non-integer $s$, with $0<s<\infty$, and where $\mathcal{F}$ denotes the Fourier transform. Interestingly, under certain conditions, which are dictated by Sobolev's embedding theorem (Evans 2010, Chapter 5), these Hilbert spaces can be embedded in the more familiar spaces of continuous functions.) However, as Stuart (2010) points out, in this setting, the choice of $\alpha_j$, and even the form of $\mathscr{R}$, is arbitrary.
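To make the effect of the weights $\alpha_j$ in (2.5) concrete, the sketch below approximates the regularization norm on a uniform grid using finite differences and a rectangle-rule quadrature. This is only an illustration under stated assumptions: the function name `sobolev_penalty`, the grid, and the test signals are hypothetical and do not come from the paper.

```python
import numpy as np

def sobolev_penalty(x, x_bar, dt, alphas):
    """Approximate sum_j alphas[j] * integral |d^j (x - x_bar) / dt^j|^2 dt.

    Assumptions: uniform grid with spacing dt, forward differences for the
    derivatives and a rectangle rule for the integrals.
    """
    d = np.asarray(x, dtype=float) - np.asarray(x_bar, dtype=float)
    total = 0.0
    for alpha in alphas:                     # alphas[j] weights the j-th derivative
        total += alpha * np.sum(d**2) * dt   # contribution of the current derivative order
        d = np.diff(d) / dt                  # next-order finite-difference derivative
    return total

t = np.linspace(0.0, 1.0, 101)
dt = t[1] - t[0]
prior = np.zeros_like(t)
smooth = np.sin(2.0 * np.pi * t)
rough = smooth + 0.1 * (-1.0) ** np.arange(t.size)   # grid-scale oscillation added

# With only the j = 0 term the two signals are penalized similarly;
# adding a j = 1 term penalizes the oscillatory signal far more strongly.
print(sobolev_penalty(smooth, prior, dt, (1.0,)), sobolev_penalty(rough, prior, dt, (1.0,)))
print(sobolev_penalty(smooth, prior, dt, (1.0, 1e-2)), sobolev_penalty(rough, prior, dt, (1.0, 1e-2)))
```

The comparison shows why the higher-order terms push minimizers towards smoother functions, and also why the outcome depends on how the weights are chosen.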

There is a more intuitive approach that recovers the form of the regularization norm $\mathscr{R}$ from a probabilistic viewpoint. In the setting of the Hilbert space $L^2$, the Gaussian measure $\gamma \sim \mathcal{N}(m,\mathcal{C})$ has the property that its finite-dimensional projections are multivariate Gaussian distributions, and it is uniquely defined by its mean $m \in L^2$, and its covariance operator $\mathcal{C}:L^2\to L^2$ (Appendix A). It can be shown that there is a natural Hilbert space $H_\gamma$ that corresponds to $\gamma$, and that (Bogachev 1998; Hairer 2009)
\begin{equation} H_\gamma = \sqrt{\mathcal{C}}\,(L^2). \end{equation}

In other words, if $x$ is a random function distributed according to $\gamma$, any realization of $x$ lies in $H_\gamma$, which is the image of $\sqrt{\mathcal{C}}$. Furthermore, the corresponding inner product
\begin{equation} \big\langle x,x' \big\rangle_\mathcal{C} = \big\langle x,\mathcal{C}^{-1}x' \big\rangle \end{equation}

is the covariance between $x$ and $x'$, and the norm $\big\lVert x \big\rVert^2_\mathcal{C} = \big\langle x,x \big\rangle_\mathcal{C}$ is the variance of $x$. Therefore, if $x$ is an unknown parameter for which a priori statistical information is available, and if the Gaussian assumption can be justified, we can choose
\begin{equation} \mathscr{R}(x) = \tfrac{1}{2}\big\lVert x-\bar{x} \big\rVert^2_{\mathcal{C}} . \end{equation}

In this way, $\mathscr{J}\equiv\mathscr{E}+\mathscr{R}$ increases as the variance of $x$ increases. Consequently, minimizing $\mathscr{J}$ penalizes improbable realizations.
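The statement that the covariance-weighted norm penalizes improbable realizations can be checked numerically on a finite-dimensional projection. The sketch below is a hedged illustration: it assumes an exponential kernel of the common form $\sigma^2\exp(-\lvert t-t'\rvert/\ell)$ as the prior covariance (the exact normalization used in the paper is not reproduced here), and the helper names `exponential_covariance` and `prior_penalty` are hypothetical.

```python
import numpy as np

def exponential_covariance(t, sigma, ell):
    """Covariance matrix C_ij = sigma^2 * exp(-|t_i - t_j| / ell) (assumed kernel form)."""
    return sigma**2 * np.exp(-np.abs(t[:, None] - t[None, :]) / ell)

def prior_penalty(x, x_bar, C):
    """Evaluate 1/2 * (x - x_bar)^T C^{-1} (x - x_bar) via a Cholesky factor of C."""
    d = np.asarray(x, dtype=float) - np.asarray(x_bar, dtype=float)
    L = np.linalg.cholesky(C)        # C = L L^T
    y = np.linalg.solve(L, d)        # y = L^{-1} d, so y.y = d^T C^{-1} d
    return 0.5 * float(y @ y)

t = np.linspace(0.0, 1.0, 50)
C = exponential_covariance(t, sigma=1.0, ell=0.2)
x_bar = np.zeros_like(t)
smooth = 0.5 * np.sin(2.0 * np.pi * t)
rough = 0.5 * (-1.0) ** np.arange(t.size)   # same amplitude, grid-scale oscillation

# The oscillatory realization is far less probable under this prior,
# so its penalty is much larger than that of the smooth one.
print(prior_penalty(smooth, x_bar, C), prior_penalty(rough, x_bar, C))
```

Up to an additive constant, the value returned by `prior_penalty` is the negative log-density of the corresponding multivariate Gaussian, which is why minimizing it favours realizations that the prior considers likely.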

As mentioned in § 2.1, the unknown model parameters of the Navier–Stokes problem (2.1) are the kinematic viscosity $\nu$, the boundary conditions $\boldsymbol{g}_i,\boldsymbol{g}_o$ and the shape of $\varOmega$. Since we consider the kinematic viscosity $\nu$ to be constant, the regularizing norm is simply
\begin{equation} \tfrac{1}{2}\left|\nu-\bar{\nu}\right|^2_{\varSigma_\nu} = \frac{1}{2\sigma^2_{\nu}}\left|\nu-\bar{\nu}\right|^2, \end{equation}

where $\bar{\nu}\in\mathbb{R}$ is a prior guess for $\nu$ and $\sigma^2_{\nu}\in\mathbb{R}$ is the variance. For the Dirichlet boundary condition, $\boldsymbol{g}_i \in \boldsymbol{L}^2(\varGamma_i)$, we choose the exponential covariance function

with variance $\sigma_{\boldsymbol{g}_i}^2 \in \mathbb{R}$ and characteristic length $\ell \in \mathbb{R}$. For zero-Dirichlet (no-slip) or zero-Neumann boundary conditions on $\partial\varGamma_i$, (2.10) leads to the norm (Tarantola 2005, Chapter 7.21)

Using integration by parts, we find that the covariance operator is

where $\widetilde{\Delta}$ is the $L^2$-extension of the Laplacian $\Delta$ that incorporates the boundary condition $\boldsymbol{g}_i = \boldsymbol{0}$ on ${\partial\varGamma_i}$. For the natural boundary condition, $\boldsymbol{g}_o \in \boldsymbol{L}^2(\varGamma_o)$, we can use the same covariance operator, but equip $\widetilde{\Delta}$ with zero-Neumann boundary conditions, i.e. $\partial_{\boldsymbol{\nu}}\boldsymbol{g}_o = 0$ on $\partial\varGamma_o$. Lastly, for the shape of $\varOmega$, which we implicitly represent with a signed distance function ${\phi_{\pm}}$ (defined in § 2.4), we choose the norm

where $\sigma_{\phi_{\pm}} \in \mathbb{R}$ and $\bar{\phi}_\pm \in L^2(I)$. Additional regularization for the boundary of $\varOmega$ (i.e. the zero level-set of ${\phi_{\pm}}$) is needed and is described in § 2.4. Based on the above results, the regularization norm for the unknown model parameters is

\begin{align} \mathscr{R}(\boldsymbol{x},{\phi_{{\pm}}}) &= \tfrac{1}{2}\left|\nu - \bar{\nu}\right|^2_{\varSigma_\nu} + \tfrac{1}{2}\big\lVert \boldsymbol{g}_i-\bar{\boldsymbol{g}}_i \big\rVert^2_{\mathcal{C}_{\boldsymbol{g}_i}} \nonumber\\ &\quad +\,\tfrac{1}{2}\big\lVert \boldsymbol{g}_o-\bar{\boldsymbol{g}}_o \big\rVert^2_{\mathcal{C}_{\boldsymbol{g}_o}}+\tfrac{1}{2}\big\lVert \bar{\phi}_\pm - {\phi_{{\pm}}} \big\rVert^2_{\mathcal{C}_{\phi_{{\pm}}}} . \end{align}

2.3. Euler–Lagrange equations for the inverse Navier–Stokes problem

Testing the Navier–Stokes problem (2.1) with functions ${(\boldsymbol{v},q) \in \boldsymbol{H}^1(\varOmega) \times L^2(\varOmega)}$ and integrating by parts, we obtain the weak form

$$\begin{gather} \mathscr{M}(\varOmega)(\boldsymbol{u},p,\boldsymbol{v},q;\boldsymbol{x}) \equiv\int_\varOmega\left(\boldsymbol{v}\boldsymbol{\cdot}\left( \boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla}\boldsymbol{u}\right) + \nu\boldsymbol{\nabla}\boldsymbol{v}\boldsymbol{:}\boldsymbol{\nabla} \boldsymbol{u} - (\boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{v}) p - q(\boldsymbol{\nabla}\boldsymbol{\cdot} \boldsymbol{u})\right) +\int_{\varGamma_o}\boldsymbol{v}\boldsymbol{\cdot}\boldsymbol{g}_o \nonumber\\ +\int_{\varGamma\cup\varGamma_i} \boldsymbol{v}\boldsymbol{\cdot}(-\nu\partial_{\boldsymbol{\nu}}\boldsymbol{u}+p\boldsymbol{\nu}) + \mathscr{N}_{\varGamma_i}(\boldsymbol{v},q,\boldsymbol{u};\boldsymbol{g}_i)+\mathscr{N}_{\varGamma}(\boldsymbol{v},q,\boldsymbol{u};\boldsymbol{0})={0}, \end{gather}$$


where $\mathscr{N}$ is the Nitsche (1971) penalty term

which weakly imposes the Dirichlet boundary condition $\boldsymbol{z} \in \boldsymbol{L}^2(T)$ on a boundary $T$, given a penalization constant $\eta$. (The penalization $\eta$ is a numerical parameter with no physical significance (see § 2.7).) We define the augmented reconstruction error functional
\begin{equation} \mathscr{J}(\varOmega)(\boldsymbol{u},p,\boldsymbol{v},q;\boldsymbol{x}) \equiv \mathscr{E}(\boldsymbol{u}) + \mathscr{R}(\boldsymbol{x},{\phi_{\pm}}) + \mathscr{M}(\varOmega)(\boldsymbol{u},p,\boldsymbol{v},q;\boldsymbol{x}), \end{equation}

which contains the regularization terms $\mathscr{R}$ and the model constraint $\mathscr{M}$, such that $\boldsymbol{u}$ weakly satisfies (2.1). To reconstruct the measured velocity field $\boldsymbol{u}^\star$ and find the unknowns $(\varOmega,\boldsymbol{x})$, we minimize $\mathscr{J}$ by solving its associated Euler–Lagrange system.

2.3.1. Adjoint Navier–Stokes problem

To derive the Euler–Lagrange equations for $\mathscr{J}$, we first define

to be the space of admissible velocity perturbations $\boldsymbol{u}'$ and $\mathcal{P}'\subset L^2(\varOmega)$ to be the space of admissible pressure perturbations $p'$, such that $(-\partial_{\boldsymbol{\nu}}\boldsymbol{u}'+p'\boldsymbol{\nu})\vert_{\varGamma_o}\equiv \boldsymbol{0}$. We start with

\begin{align} \delta_{\boldsymbol{u}}\mathscr{E}\equiv\frac{{\rm d}}{{\rm d}\tau}\mathscr{E}(\boldsymbol{u}+\tau\boldsymbol{u}')\Big\vert_{\tau=0}&=\int_\varOmega -{\mathcal{C}^{ - 1}_{\boldsymbol{u}}}\left(\boldsymbol{u}^\star - \mathcal{S}\boldsymbol{u}\right)\boldsymbol{\cdot}\mathcal{S}\boldsymbol{u}'\nonumber\\ &= \int_\varOmega -\mathcal{S}^{\dagger}{\mathcal{C}^{ - 1}_{\boldsymbol{u}}}\left(\boldsymbol{u}^\star - \mathcal{S}\boldsymbol{u}\right)\boldsymbol{\cdot}\boldsymbol{u}'\equiv{\left\langle{D_{\boldsymbol{u}}\mathscr{E},\boldsymbol{u}'}\right\rangle}_\varOmega . \end{align}

Adding together the first variations of $\mathscr{M}$ with respect to $(\boldsymbol{u},p)$,

and after integrating by parts, we find

\begin{align} \delta_{\boldsymbol{u}}\mathscr{M}+\delta_{p}\mathscr{M}&=\int_\varOmega \left(-\boldsymbol{u}\boldsymbol{\cdot}\left(\boldsymbol{\nabla}\boldsymbol{v} + (\boldsymbol{\nabla}\boldsymbol{v})^{\dagger}\right) -\nu\Delta \boldsymbol{v} + \boldsymbol{\nabla} q \right)\boldsymbol{\cdot}\boldsymbol{u}' + \int_\varOmega (\boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{v})p' \nonumber\\ &\quad +\int_{\partial\varOmega} \left({(\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nu})\boldsymbol{v}}+{(\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{v})\boldsymbol{\nu}}+\nu\partial_{\boldsymbol{\nu}}\boldsymbol{v}-q\boldsymbol{\nu}\right)\boldsymbol{\cdot} \boldsymbol{u}' \nonumber\\ &\quad +\int_{\varGamma\cup\varGamma_i} \boldsymbol{v}\boldsymbol{\cdot}(-\nu\partial_{\boldsymbol{\nu}}\boldsymbol{u}'+p'\boldsymbol{\nu})+ \mathscr{N}_{\varGamma_i\cup\varGamma}(\boldsymbol{v},q,\boldsymbol{u}';\boldsymbol{0}) . \end{align}

Since $\mathscr{R}$ does not depend on $(\boldsymbol{u},p)$, we can use (2.19) and (2.21) to assemble the optimality conditions of $\mathscr{J}$ for $(\boldsymbol{u},p)$

For (2.22) to hold true for all perturbations ${(\boldsymbol{u}',p')\in \boldsymbol{\mathcal{U}}' \times \mathcal{P}'}$, we deduce that $(\boldsymbol{v},q)$ must satisfy the following adjoint Navier–Stokes problem

\begin{equation} \left. \begin{array}{cl@{}} - \boldsymbol{u}\boldsymbol{\cdot}\left(\boldsymbol{\nabla}\boldsymbol{v} + (\boldsymbol{\nabla}\boldsymbol{v})^{\dagger}\right) -\nu\Delta \boldsymbol{v} + \boldsymbol{\nabla} q = - D_{\boldsymbol{u}}\mathscr{E} & \text{in}\ \varOmega,\\ \boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{v} = 0 & \text{in}\ \varOmega,\\ \boldsymbol{v} = \boldsymbol{0} & \text{on}\ \varGamma\cup\varGamma_i,\\ {(\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nu})\boldsymbol{v}}+{(\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{v})\boldsymbol{\nu}}+\nu\partial_{\boldsymbol{\nu}}\boldsymbol{v}-q\boldsymbol{\nu} = \boldsymbol{0} & \text{on}\ \varGamma_o. \end{array} \right\} \end{equation}

In this context, $\boldsymbol{v}$ is the adjoint velocity and $q$ is the adjoint pressure, which both vanish when $\boldsymbol{u}^\star \equiv \mathcal{S}\boldsymbol{u}$. Note also that we choose boundary conditions for the adjoint problem (2.23) that make the boundary terms of (2.21) vanish and that these boundary conditions are subject to the choice of $\boldsymbol{\mathcal{U}}'$, which, in turn, depends on the boundary conditions of the (primal) Navier–Stokes problem.
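The adjoint construction can be illustrated on a finite-dimensional surrogate. The sketch below is not the adjoint Navier–Stokes problem (2.23); it assumes a hypothetical linear model `A u = g`, a hypothetical sampling matrix `S` and an identity covariance, and only mirrors the structure of the derivation: the adjoint variable `v` solves a transposed problem forced by $-D_{\boldsymbol{u}}\mathscr{E}$, and the gradient with respect to the forcing `g` follows from `v`. All names (`A`, `S`, `g`, `solve_primal`, `adjoint_gradient`) are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 6
# Hypothetical, well-conditioned linear "model" A u = g (stand-in for the primal problem).
A = 4.0 * np.eye(n) + 0.3 * rng.standard_normal((n, n))
# Hypothetical sampling operator S: observe every other entry of u.
S = np.eye(n)[::2]


def solve_primal(g):
    """Solve the surrogate primal problem A u = g."""
    return np.linalg.solve(A, g)


def error(g, u_star):
    """E = 0.5 |u_star - S u|^2, with the covariance taken as the identity."""
    r = u_star - S @ solve_primal(g)
    return 0.5 * r @ r


def adjoint_gradient(g, u_star):
    """Return (dE/dg, v), where v solves the adjoint problem A^T v = -D_u E."""
    u = solve_primal(g)
    D_u_E = -S.T @ (u_star - S @ u)    # discrete analogue of (2.19)
    v = np.linalg.solve(A.T, -D_u_E)   # adjoint solve with the transposed operator
    return -v, v                       # from J = E + v.(A u - g): dJ/dg = -v


def finite_difference_gradient(g, u_star, eps=1e-6):
    """Central finite differences of E with respect to g, used only as a check."""
    grad = np.zeros_like(g)
    for i in range(g.size):
        e = np.zeros_like(g)
        e[i] = eps
        grad[i] = (error(g + e, u_star) - error(g - e, u_star)) / (2 * eps)
    return grad


g = rng.standard_normal(n)
u_star = rng.standard_normal(S.shape[0])
grad_adj, v = adjoint_gradient(g, u_star)
grad_fd = finite_difference_gradient(g, u_star)
print("max |adjoint - finite difference| =", np.max(np.abs(grad_adj - grad_fd)))
```

In this surrogate, a single adjoint solve gives the full gradient, whereas the finite-difference check needs two primal solves per component of `g`. Setting `u_star = S @ solve_primal(g)` makes `v` vanish, in line with the remark above.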

2.3.2. Shape derivatives for the Navier–Stokes problem

To find the shape derivative of an integral defined in $\varOmega$, when the boundary $\partial\varOmega$ deforms with speed $\mathscr{V}$, we use Reynolds' transport theorem. For the bulk integral of $f:\varOmega \to \mathbb{R}$, we find

\begin{equation} \frac{{\rm d}}{{\rm d}\tau}\left(\int_{\varOmega(\tau)} f\right)\bigg\vert_{\tau=0} = \int_\varOmega f' + \int_{\partial\varOmega}f~(\mathscr{V}\boldsymbol{\cdot}\boldsymbol{\nu}), \end{equation}

while for the boundary integral of $f$, we find (Walker 2015, Chapter 5.6)

\begin{equation} \frac{{\rm d}}{{\rm d}\tau}\left(\int_{\partial\varOmega(\tau)} f\right)\bigg\vert_{\tau=0} = \int_{\partial\varOmega} f' + (\partial_{\boldsymbol{\nu}}+\kappa)f (\mathscr{V}\boldsymbol{\cdot}\boldsymbol{\nu}), \end{equation}

where $f'$ is the shape derivative of $f$ (due to $\mathscr{V}$), $\kappa$ is the summed curvature of $\partial\varOmega$ and $\mathscr{V} \equiv \zeta\boldsymbol{\nu}$, with $\zeta \in L^2(\partial\varOmega)$, is the Hadamard parametrization of the speed field. Any boundary that is a subset of $\partial I$, i.e. the edge of the image $I$, is non-deforming and therefore the second term of the above integrals vanishes. The only boundary that deforms is $\varGamma \subset \partial\varOmega$. For brevity, let $\delta_\mathscr{V} I$ denote the shape perturbation of an integral $I$. Using (2.24) on $\mathscr{E}$, we compute

where $D_{\boldsymbol{u}}\mathscr{E}$ is given by (2.19). Using (2.24) and (2.25) on $\mathscr{M}$, we obtain the shape derivatives problem for $(\boldsymbol{u}',p')$

\begin{equation} \left. \begin{array}{cl@{}} \boldsymbol{u}'\boldsymbol{\cdot}\boldsymbol{\nabla}\boldsymbol{u}+\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla}\boldsymbol{u}'-\nu{\Delta} \boldsymbol{u}' + \boldsymbol{\nabla} p' = \boldsymbol{0} & \text{in}\ \varOmega,\\ \boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u}' = 0 & \text{in}\ \varOmega,\\ \boldsymbol{u}' = - \partial_{\boldsymbol{\nu}}\boldsymbol{u}(\mathscr{V}\boldsymbol{\cdot}\boldsymbol{\nu}) & \text{on}\ \varGamma,\\ \boldsymbol{u}' = \boldsymbol{0} & \text{on}\ \varGamma_i, \\ -\nu\partial_{\boldsymbol{\nu}}\boldsymbol{u}'+p'\boldsymbol{\nu} = \boldsymbol{0} & \text{on}\ \varGamma_o, \end{array} \right\} \end{equation}

which can be used directly to compute the velocity and pressure perturbations for a given speed field $\mathscr{V}$. We observe that $(\boldsymbol{u}',p')\equiv \boldsymbol{0}$ when $\zeta \equiv \mathscr{V}\boldsymbol{\cdot}\boldsymbol{\nu}\equiv {0}$. Testing the shape derivatives problem (2.27) with $(\boldsymbol{v},q)$, and adding the appropriate Nitsche terms for the weakly enforced Dirichlet boundary conditions, we obtain

$$\begin{align} \delta_\mathscr{V}\mathscr{M} &=\int_\varOmega\left(\boldsymbol{v}\boldsymbol{\cdot}\left( \boldsymbol{u}'\boldsymbol{\cdot}\boldsymbol{\nabla}\boldsymbol{u}+\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla}\boldsymbol{u}'\right) + \nu\boldsymbol{\nabla}\boldsymbol{v}\boldsymbol{:}\boldsymbol{\nabla} \boldsymbol{u}' - (\boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{v}) p' - q(\boldsymbol{\nabla}\boldsymbol{\cdot} \boldsymbol{u}')\right) \nonumber\\ &\quad +\int_{\varGamma\cup\varGamma_i} \boldsymbol{v}\boldsymbol{\cdot}(-\nu\partial_{\boldsymbol{\nu}}\boldsymbol{u}'+p'\boldsymbol{\nu}) + \mathscr{N}_{\varGamma_i}(\boldsymbol{v},q,\boldsymbol{u}';\boldsymbol{0})+\mathscr{N}_{\varGamma}(\boldsymbol{v},q,\boldsymbol{u}';-\zeta\partial_{\boldsymbol{\nu}}\boldsymbol{u})={0}. \end{align}$$

If we define $I_i$ to be the first four integrals in (2.21), integrating (2.28) by parts yields

\begin{equation} \delta_\mathscr{V}\mathscr{M}= \sum_{i=1}^4 I_i + \mathscr{N}_{\varGamma_i}(\boldsymbol{v},q,\boldsymbol{u}';\boldsymbol{0})+\mathscr{N}_{\varGamma}(\boldsymbol{v},q,\boldsymbol{u}';-\zeta\partial_{\boldsymbol{\nu}}\boldsymbol{u})={0}, \end{equation}

and, due to the adjoint problem (2.23), we find

since $\boldsymbol{u}'\vert_{\varGamma_i} \equiv \boldsymbol{0}$ and $\boldsymbol{u}\vert_{\varGamma} \equiv \boldsymbol{0}$. Therefore, the shape perturbation of $\mathscr{J}$ is

which, due to (2.30) and $\delta_\mathscr{V}\mathscr{R} \equiv 0$, takes the form

where $D_{\zeta}\mathscr{J}$ is the shape gradient. Note that the shape gradient depends on the normal gradient of the (primal) velocity field and the pseudotraction, $(-\nu\boldsymbol{\nabla}\boldsymbol{v} + q\mathrm{I})\boldsymbol{\cdot}\boldsymbol{\nu}$, that the adjoint flow exerts on $\varGamma$.
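The transport identities (2.24) and (2.25) used in this subsection can be checked numerically on a simple case. The sketch below assumes a disc of radius `R` that grows uniformly with normal speed `zeta`, and a fixed integrand $f(x) = \lvert x \rvert^2$, so that $f' = 0$, $\partial_{\boldsymbol{\nu}} f = 2R$ and $\kappa = 1/R$ on the boundary. The function names and the chosen values are illustrative assumptions.

```python
import numpy as np


def bulk_integral(r, n=400):
    """Integral of f(x) = |x|^2 over a disc of radius r (midpoint rule in the radial direction)."""
    rho = (np.arange(n) + 0.5) * r / n
    return np.sum(rho**2 * 2.0 * np.pi * rho) * (r / n)


def boundary_integral(r):
    """Integral of f(x) = |x|^2 over the circle of radius r (f is constant on the circle)."""
    return r**2 * 2.0 * np.pi * r


R, zeta, d_tau = 1.3, 0.7, 1e-5

# Left-hand sides: central differences in tau of the integrals over the perturbed disc.
lhs_bulk = (bulk_integral(R + d_tau * zeta) - bulk_integral(R - d_tau * zeta)) / (2 * d_tau)
lhs_bnd = (boundary_integral(R + d_tau * zeta) - boundary_integral(R - d_tau * zeta)) / (2 * d_tau)

# Right-hand side of (2.24): f' = 0, so only the boundary term f * zeta remains.
rhs_bulk = boundary_integral(R) * zeta
# Right-hand side of (2.25): (d_nu f + kappa f) * zeta, integrated over the circle.
rhs_bnd = (2.0 * R + (1.0 / R) * R**2) * zeta * 2.0 * np.pi * R

print(lhs_bulk, rhs_bulk)
print(lhs_bnd, rhs_bnd)
```

The two printed pairs should agree up to quadrature and finite-difference error.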

2.3.3. Generalized gradients for the unknown model parameters $\boldsymbol{x}$

The unknown model parameters $\boldsymbol{x}$ have an explicit effect on $\mathscr{M}$ and $\mathscr{R}$, so their generalized gradients can be obtained by taking the first variations of $\mathscr{M}$ and $\mathscr{R}$ with respect to $\boldsymbol{x}$. For the Dirichlet-type boundary condition at the inlet, we find

\begin{align} {\left\langle{D_{\boldsymbol{g}_i}\mathscr{J},\boldsymbol{g}_i'}\right\rangle}_{\varGamma_i} &= {\left\langle{\nu\partial_{\boldsymbol{\nu}}\boldsymbol{v}-q\boldsymbol{\nu}- \eta\boldsymbol{v} + {\mathcal{C}^{ - 1}_{\boldsymbol{g}_i}}\left(\boldsymbol{g}_i-{\bar{\boldsymbol{g}}_i} \right),\boldsymbol{g}_i'}\right\rangle}_{\varGamma_i} \nonumber\\ &={\left\langle{{\mathcal{C}_{\boldsymbol{g}_i}}\left(\nu \partial_{\boldsymbol{\nu}}\boldsymbol{v}-q\boldsymbol{\nu}- \eta\boldsymbol{v}\right) + \boldsymbol{g}_i-\bar{\boldsymbol{g}}_i, \boldsymbol{g}_i'}\right\rangle}_{{\mathcal{C}_{\boldsymbol{g}_i}}}={\left\langle{\hat{D}_{\boldsymbol{g}_i}\mathscr{J},~\boldsymbol{g}_i'}\right\rangle}_{{\mathcal{C}_{\boldsymbol{g}_i}}}, \end{align}

where $-\hat{D}_{\boldsymbol{g}_i}\mathscr{J}$ is the steepest descent direction that corresponds to the covariance-weighted norm. For the natural boundary condition at the outlet, we find

\begin{align} {\left\langle{D_{\boldsymbol{g}_o}\mathscr{J},\boldsymbol{g}_o'}\right\rangle}_{\varGamma_o} &= {\left\langle{\boldsymbol{v} + {\mathcal{C}^{ - 1}_{\boldsymbol{g}_o}}\left(\boldsymbol{g}_o-\bar{\boldsymbol{g}}_o\right),\boldsymbol{g}_o'}\right\rangle}_{\varGamma_o} \nonumber\\ &={\left\langle{{\mathcal{C}_{\boldsymbol{g}_o}}\boldsymbol{v} + \boldsymbol{g}_o-\bar{\boldsymbol{g}}_o,\boldsymbol{g}_o'}\right\rangle}_{{\mathcal{C}_{\boldsymbol{g}_o}}} = {\left\langle{\hat{D}_{\boldsymbol{g}_o}\mathscr{J}, \boldsymbol{g}_o'}\right\rangle}_{{\mathcal{C}_{\boldsymbol{g}_o}}}. \end{align}

Lastly, since the kinematic viscosity is considered to be constant within $\varOmega$, its generalized gradient is

\begin{align} {\left\langle{D_{\nu}\mathscr{J}, \nu'}\right\rangle}_\mathbb{R} &= {\left\langle{\int_\varOmega \boldsymbol{\nabla}\boldsymbol{v}\boldsymbol{:}\boldsymbol{\nabla}\boldsymbol{u} + {\varSigma^{ - 1}_{\nu}} \left(\nu-\bar{\nu}\right), \nu'}\right\rangle}_\mathbb{R} \nonumber\\ & = {\left\langle{{\varSigma_{\nu}} \int_\varOmega \boldsymbol{\nabla}\boldsymbol{v}\boldsymbol{:}\boldsymbol{\nabla}\boldsymbol{u} + \nu-\bar{\nu}, \nu'}\right\rangle}_{{\varSigma_{\nu}}} = {\left\langle{\hat{D}_{\nu}\mathscr{J}, \nu'}\right\rangle}_{\varSigma_{\nu}} . \end{align}

For a given step size $\mathbb{R} \ni \tau > 0$, the steepest descent directions (2.33)–(2.35) can be used either to update an unknown parameter $x$ through

with $s_k = -\hat{D}_{x}\mathscr{J}$, or to reconstruct an approximation $\tilde{H}$ of the inverse Hessian matrix, in the context of a quasi-Newton method, and thereby to compute $s_k = -\tilde{H}\hat{D}_{x}\mathscr{J}$. We adopt the latter approach, which is discussed in § 2.5.
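As a minimal illustration of the first option (plain steepest descent, not the quasi-Newton method adopted here), the sketch below iterates an update of the assumed form $x_{k+1} = x_k + \tau s_k$ for a scalar parameter. It mirrors the structure of (2.35): the covariance-weighted gradient is the prior variance times a data term plus the deviation from the prior mean. The data term `data_gradient` is a hypothetical quadratic surrogate for $\int_\varOmega \boldsymbol{\nabla}\boldsymbol{v}\boldsymbol{:}\boldsymbol{\nabla}\boldsymbol{u}$, and all numerical values are assumptions chosen for the example.

```python
def preconditioned_gradient(nu, nu_bar, var_nu, data_gradient):
    """Covariance-weighted gradient: var_nu * (data term) + (nu - nu_bar), cf. (2.35)."""
    return var_nu * data_gradient(nu) + nu - nu_bar


def steepest_descent(nu0, nu_bar, var_nu, data_gradient, tau=0.5, iters=50):
    """Iterate nu <- nu + tau * s_k with s_k = -(covariance-weighted gradient)."""
    nu = nu0
    for _ in range(iters):
        s_k = -preconditioned_gradient(nu, nu_bar, var_nu, data_gradient)
        nu = nu + tau * s_k
    return nu


# Hypothetical data term: gradient of 0.5 * (nu - 2)^2, i.e. data that favours nu = 2.
def data_gradient(nu):
    return nu - 2.0


# Prior mean 1.0 with variance 0.25; the minimizer balances the data and the prior.
nu_opt = steepest_descent(nu0=0.0, nu_bar=1.0, var_nu=0.25, data_gradient=data_gradient)
print(nu_opt)
```

For these values the stationarity condition $(\nu - 2) + (\nu - 1)/0.25 = 0$ gives $\nu = 1.2$, which the iteration approaches. A smaller prior variance pulls the result closer to the prior mean.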

2.4. Geometric flow

To deform the boundary $\partial\varOmega$ using the simple update formula (2.36), we need a parametric surface representation. Here we choose to implicitly represent $\partial\varOmega$ using signed distance functions ${\phi_{\pm}}$. The object $\varOmega$ and its boundary $\partial\varOmega$ are then identified with a particular function ${\phi_{\pm}}$ such that

2.4.1. Implicit representation of $\varOmega$ using signed distance functions

A signed distance function ${\phi_{\pm}}$ for $\varOmega$ can be obtained by solving the Eikonal equation

One way to solve this problem is with level-set methods (Osher & Sethian 1988; Sethian 1996; Burger 2001, 2003; Burger & Osher 2005; Yu, Juniper & Magri 2019). There is, however, a different approach, which relies on the heat equation (Varadhan 1967a,b; Crane, Weischedel & Wardetzky 2017). The main result that we draw from Varadhan (1967b), to justify the use of the heat equation for the approximation of ${\phi_{\pm}}$, states that

where ${d}(x,\partial\varOmega)$ is the Euclidean distance between any point $x \in I$ and $\partial\varOmega$, and $u$ is the solution of heat propagation away from $\partial\varOmega$

Crane et al. (2017) used the above result to implement a smoothed distance function computation method which they called the ‘heat method’. Here, we slightly adapt this method to compute signed distance functions ${\phi_{\pm}}$ in truncated domains (figure 2b). To compute ${\phi_{\pm}}$, we therefore solve (2.40) for $\tau_1 \ll 1$ and then obtain ${\phi_{\pm}}$ by solving

\begin{equation} \left. \begin{array}{cl@{}} \boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{\nabla} {\phi_{{\pm}}} = \boldsymbol{\nabla} \boldsymbol{\cdot} X & \text{in}\ I,\\ \partial_{\boldsymbol{\nu}} {\phi_{{\pm}}} = X \boldsymbol{\cdot} \boldsymbol{\nu} & \text{on}\ \partial I,\\ {\phi_{{\pm}}} = 0 & \text{on}\ \partial \varOmega, \end{array} \right\} \quad X = - \text{sgn}(\psi) \dfrac{\boldsymbol{\nabla} u}{\lvert \boldsymbol{\nabla} u \rvert}, \end{equation}

with $X$ being the normalized heat flux and $\psi$ being a signed function such that $\psi(x)$ is negative for points $x$ in $\varOmega$ and positive for points $x$ outside ${\varOmega}$. This intermediate step (the solution of two Poisson problems (2.40)–(2.41) instead of one) is taken to ensure that $\lvert\boldsymbol{\nabla}{\phi_{\pm}}\rvert = 1$.
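The two-step procedure can be sketched in one dimension with finite differences. This is a toy under stated assumptions, not the discretization used in this study: the image is $I = [0,1]$, the object is $\varOmega = (0.3, 0.7)$, the heat step is taken to be a screened Poisson problem $(1 - \tau_1\Delta)u = 0$ with $u = 1$ on $\partial\varOmega$ and a zero-flux condition on $\partial I$, and the function name `heat_method_sdf_1d`, the grid and the value of `tau` are hypothetical.

```python
import numpy as np


def heat_method_sdf_1d(n=101, a_idx=30, b_idx=70, tau=2.5e-3):
    """Signed distance to the boundary nodes x[a_idx], x[b_idx] on a uniform grid of [0, 1]."""
    x = np.linspace(0.0, 1.0, n)
    h = x[1] - x[0]
    boundary = [a_idx, b_idx]

    # Step 1 (assumed form of the heat step): (1 - tau * d2/dx2) u = 0, u = 1 on the boundary nodes.
    r = tau / h**2
    A = np.zeros((n, n))
    rhs = np.zeros(n)
    for j in range(1, n - 1):
        A[j, j - 1], A[j, j], A[j, j + 1] = -r, 1.0 + 2.0 * r, -r
    A[0, 0], A[0, 1] = 1.0 + 2.0 * r, -2.0 * r        # zero-flux condition at x = 0
    A[-1, -1], A[-1, -2] = 1.0 + 2.0 * r, -2.0 * r    # zero-flux condition at x = 1
    for j in boundary:
        A[j, :] = 0.0
        A[j, j] = 1.0
        rhs[j] = 1.0
    u = np.linalg.solve(A, rhs)

    # Step 2: normalized heat flux X = -sgn(psi) grad(u)/|grad(u)| on the cell faces.
    xm = 0.5 * (x[:-1] + x[1:])
    centre = 0.5 * (x[a_idx] + x[b_idx])
    half_width = 0.5 * (x[b_idx] - x[a_idx])
    psi = np.abs(xm - centre) - half_width            # negative inside, positive outside
    X = -np.sign(psi) * np.sign(np.diff(u))

    # Step 3: Poisson problem, cf. (2.41): phi'' = X', phi = 0 on the boundary nodes,
    # and phi' = X at both ends of the image.
    B = np.zeros((n, n))
    f = np.zeros(n)
    for j in range(1, n - 1):
        B[j, j - 1], B[j, j], B[j, j + 1] = 1.0, -2.0, 1.0
        f[j] = h * (X[j] - X[j - 1])
    B[0, 0], B[0, 1] = -1.0, 1.0
    f[0] = h * X[0]
    B[-1, -2], B[-1, -1] = -1.0, 1.0
    f[-1] = h * X[-1]
    for j in boundary:
        B[j, :] = 0.0
        B[j, j] = 1.0
        f[j] = 0.0
    phi = np.linalg.solve(B, f)
    return x, phi


x, phi = heat_method_sdf_1d()
print(np.max(np.abs(np.abs(np.diff(phi)) / (x[1] - x[0]) - 1.0)))  # deviation of |phi'| from 1
```

In one dimension only the sign of the heat flux survives the normalization, so the recovered ${\phi_{\pm}}$ has unit slope regardless of how strongly $u$ decays. In higher dimensions the normalization also discards the magnitude of $\boldsymbol{\nabla} u$ and keeps its direction.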

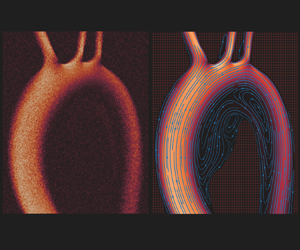

Figure 2. Geometric flow of $\partial\varOmega$ (figure 2a) relies on the computation of its signed distance field ${\phi_{\pm}}$ (figure 2b) and its normal vector extension ${\mathring{\boldsymbol{\nu}}}$ (figure 2c). The shape gradient $\zeta$ (figure 2d), which is initially defined on $\partial\varOmega$, is extended to the whole image $I$ (${\mathring{\zeta}}$ in figure 2e, f). Shape regularization is achieved by increasing the diffusion coefficient $\epsilon_{\zeta}$ to mitigate small-scale perturbations when assimilating noisy velocity fields $\boldsymbol{u}^\star$. Figure 2(f) shows results at a lower value of $\mbox{Re}_{\zeta}$ than figure 2(e). (a) Shape of $\partial\varOmega$. (b) Level-sets of ${\phi_{\pm}}$. (c) Magnitude of ${\phi_{\pm}}$ and ${\mathring{\boldsymbol{\nu}}}$. (d) Shape gradient $\zeta$ on $\partial\varOmega$. (e) ${\mathring{\zeta}}$ in $I$ ($\mbox{Re}_{\zeta}=1$). (f) ${\mathring{\zeta}}$ in $I$ ($\mbox{Re}_{\zeta}=0.01$).

2.4.2. Propagating the boundary of $\varOmega$

To deform the boundary $\partial\varOmega$, we transport ${\phi_{\pm}}$ under the speed field ${\mathscr{V} \equiv \zeta\boldsymbol{\nu}}$. The convection-diffusion problem for ${\phi_{\pm}}(x,t)$ reads

where ${{\phi_{\pm}}}_0$ denotes the signed distance function of the current domain $\varOmega$, $\epsilon_{\phi_{\pm}}$ is the diffusion coefficient, $\iota$ is a length scale, $\mbox{Re}_{\phi_{\pm}}$ is a Reynolds number and ${{\mathring{\mathscr{V}}}:I \to \mathbb{R}\times\mathbb{R}}$ is an extension of $\mathscr{V}: \partial\varOmega \to \mathbb{R}\times\mathbb{R}$. If we solve (2.42) for ${\phi_{\pm}}(x,\tau)$, we obtain the implicit representation of the perturbed domain $\varOmega_\tau$ at time $t=\tau$ (the step size), but to do so, we first need to extend $\mathscr{V}$ to the whole space of the image $I$.

To extend $\mathscr{V}$ to $I$, we extend the normal vector $\boldsymbol{\nu}$ and the scalar function $\zeta$, which are both initially defined on $\partial\varOmega$. The normal vector extension (figure 2c) is easily obtained by

since $\lvert\boldsymbol{\nabla}{\phi_{\pm}}\rvert = 1$, and an outward-facing extension is given by

We then use the extended normal vector ${\mathring{\boldsymbol{\nu}}}_o$ to extend $\zeta \in L^2(\partial\varOmega)$ to ${\mathring{\zeta}} \in L^2(I)$, using the convection-diffusion problem

\begin{equation} \left. \begin{array}{cl@{}} \partial_t {\mathring{\zeta}} + {{\mathring{\boldsymbol{\nu}}}_o}\boldsymbol{\cdot}\boldsymbol{\nabla}{\mathring{\zeta}} - \epsilon_{\zeta} \Delta {\mathring{\zeta}} = 0 & \text{in}\ I\times (0,\tau_{\zeta}],\\ {\mathring{\zeta}} = \zeta & \text{on}\ \partial \varOmega\times (0,\tau_{\zeta}], \\ {\mathring{\zeta}} \equiv 0 & \text{in}\ I\times\{t=0\}, \end{array} \right\} \quad \epsilon_{\zeta} = \dfrac{\lvert {\mathring{\boldsymbol{\nu}}}_o \rvert_\infty \iota}{\textit{Re}_{\zeta}}. \end{equation}

In other words, we convect $\zeta$ along the predefined ${\mathring{\boldsymbol{\nu}}}_o$-streamlines and add isotropic diffusion for regularization (figure 2e, f). The coefficients $\epsilon_{\phi_{\pm}}$ in (2.42) and $\epsilon_{\zeta}$ in (2.45) are chosen so that the shape regularization depends only on the length scale $\iota$ and the Reynolds numbers $\mbox{Re}_{\phi_{\pm}},\mbox{Re}_{\zeta}$. More precisely, the shape regularization depends only on $\mbox{Re}_{\phi_{\pm}}$ and $\mbox{Re}_{\zeta}$ because we fix the length scale $\iota$ to equal the smallest possible length scale of the modelled flow, which is the numerical grid spacing $h$ for a uniform Cartesian grid. For illustration, if we consider $\zeta$ to be the concentration of a dye on $\partial \varOmega$ (figure 2d), then a simplified scaling argument, similar to that for the growth of a boundary layer on a flat plate, shows that the diffusing dye at distance $d$ from $\partial \varOmega$ will extend over a width $\delta$ such that

\begin{equation} \delta \sim \sqrt{\frac{\epsilon_{\zeta} d}{\lvert {\mathring{\boldsymbol{\nu}}}_o \rvert_\infty}}=\sqrt{\frac{\iota d}{\textit{Re}_{\zeta}}}\quad \text{or}\quad \frac{\delta}{\iota} \sim \sqrt{\frac{\alpha}{\textit{Re}_{\zeta}}} \quad \text{when}\ d = \alpha \iota. \end{equation}

The above scaling approximation describes the dissipation rate of small-scale features such as roughness away from $\partial \varOmega$. This is therefore how $\mbox{Re}_{\phi_{\pm}}$ and $\mbox{Re}_{\zeta}$ control the regularity of the boundary $\partial \varOmega_\tau$ at time $t = \tau$, which is given by (2.42). We take $\tau_{\zeta}$ to be large enough to find a steady state for (2.45). We recast the linear initial value problems (2.42) and (2.45) into their corresponding boundary value problems using backward-Euler temporal discretization because the time-dependent solution is not of interest here.
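
As a rough numerical illustration of the extension ingredients and of the scaling estimate above, the following Python sketch builds a signed distance function for a circle, forms a normal extension as the normalised gradient of $\phi_{\pm}$, flips its sign where $\phi_{\pm} < 0$ so that it points away from $\partial \varOmega$ on both sides, and evaluates $\delta \sim \iota\sqrt{\alpha/\textit{Re}_{\zeta}}$. The circular boundary, the sign convention (negative inside), the grid and all function names are assumptions made for this example only, and the convection-diffusion solve itself is not reproduced.

```python
import numpy as np


def signed_distance_circle(X, Y, radius):
    """Signed distance to a circle centred at the origin (assumed negative inside)."""
    return np.hypot(X, Y) - radius


def extended_normals(phi, h):
    """Return (nu, nu_o) on the whole grid.

    nu   : normalised gradient of phi (a unit vector wherever the gradient is non-zero)
    nu_o : sgn(phi) * nu, which points away from the zero level set on both sides
    """
    dphi_dy, dphi_dx = np.gradient(phi, h)  # axis 0 is y, axis 1 is x
    norm = np.hypot(dphi_dx, dphi_dy)
    norm[norm == 0.0] = 1.0  # avoid division by zero at the circle centre
    nu = np.stack([dphi_dx / norm, dphi_dy / norm])
    nu_o = np.sign(phi) * nu
    return nu, nu_o


def diffusion_width(alpha, re_zeta, iota):
    """Scaling estimate delta ~ iota * sqrt(alpha / Re_zeta) at distance d = alpha * iota."""
    return iota * np.sqrt(alpha / re_zeta)


# Hypothetical set-up: unit circle on a uniform Cartesian grid with spacing h
x = np.linspace(-2.0, 2.0, 81)
h = x[1] - x[0]
X, Y = np.meshgrid(x, x)
phi = signed_distance_circle(X, Y, radius=1.0)
nu, nu_o = extended_normals(phi, h)

# With iota = h, the estimate at a distance of 16 grid cells and Re_zeta = 1
# is a width of about 4 grid cells.
delta = diffusion_width(alpha=16.0, re_zeta=1.0, iota=h)
```

In this sketch a larger `re_zeta` gives a narrower `delta`, so less smoothing is applied to features carried away from the boundary.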

The extended shape gradient (2.32), after taking into account the regularizing term for ${\phi_{\pm}}$, is therefore given by

\begin{align}{\left\langle{D_{\mathring{\zeta}}\mathscr{J}, {\mathring{\zeta}}'}\right\rangle}_I &={\left\langle{{\mathring{\zeta}} + \mathcal{C}^{ -1}_{\phi_{{\pm}}}\left(\bar{\phi}_\pm - {\phi_{{\pm}}}\right), {\mathring{\zeta}}'}\right\rangle}_I \nonumber\\ &={\left\langle{\mathcal{C}_{\phi_{{\pm}}}{\mathring{\zeta}} +\bar{\phi}_\pm - {\phi_{{\pm}}},{\mathring{\zeta}}'}\right\rangle}_{\mathcal{C}_{\phi_{{\pm}}}} ={\left\langle{\hat{D}_{\mathring{\zeta}}\mathscr{J},{\mathring{\zeta}}'}\right\rangle}_{\mathcal{C}_{\phi_{{\pm}}}},\end{align}

where ${\mathring{\zeta}}$ is the extension of the shape gradient $\zeta(x) = \partial_{\boldsymbol{\nu}}\boldsymbol{u}\boldsymbol{\cdot}(-\nu \partial_{\boldsymbol{\nu}}\boldsymbol{v}+q\boldsymbol{\nu})$, for $x$ on $\varGamma$.

2.5. Segregated approach for the Euler–Lagrange system

The inverse Navier–Stokes problem for the reconstruction and the segmentation of noisy velocity images $\boldsymbol{u}^\star$ can be written as the saddle point problem (Benzi, Golub & Liesen 2005)

where $\mathscr{J}$ is given by (2.17). The above optimization problem leads to an Euler–Lagrange system whose optimality conditions were formulated in § 2.3. We briefly describe our segregated approach to solve this Euler–Lagrange system in algorithm 1.

Algorithm 1: Reconstruction and segmentation of noisy flow velocity images.

To precondition the steepest descent directions (2.33)–(2.35) and (2.47), we reconstruct the approximated inverse Hessian $\tilde{H}$ of each unknown using the BFGS quasi-Newton method (Fletcher 2000) with damping (Nocedal & Wright 2006). Due to the large scale of the problem, it is only possible to work with the matrix-vector product representation of $\tilde{H}$. Consequently, the search directions are given by

\begin{equation} \boldsymbol{s} = - \begin{pmatrix} \tilde{H}_{\mathring{\zeta}} & {\cdot} & {\cdot} & {\cdot} \\ {\cdot} & \tilde{H}_{\boldsymbol{g}_i} & {\cdot} & {\cdot} \\ {\cdot} & {\cdot} & \tilde{H}_{\boldsymbol{g}_o} & {\cdot} \\ {\cdot} & {\cdot} & {\cdot} & \tilde{H}_\nu \end{pmatrix} \begin{pmatrix} \hat{D}_{\mathring{\zeta}} \mathscr{J}\\ \hat{D}_{\boldsymbol{g}_i}\mathscr{J}\\ \hat{D}_{\boldsymbol{g}_o}\mathscr{J}\\ \hat{D}_\nu\mathscr{J} \end{pmatrix}, \end{equation}

and the unknown variables $\boldsymbol{x}$ are updated according to (2.36). The signed distance function ${\phi_{\pm}}$ is perturbed according to (2.42), with ${\mathring{\mathscr{V}}} \equiv -(\tilde{H}_{\mathring{\zeta}}\hat{D}_{\mathring{\zeta}}\mathscr{J}){\mathring{\boldsymbol{\nu}}}$. We start every line search with a global step size $\tau = 1$ and halve the step size until $\mathscr{J}(({\phi_{\pm}},\boldsymbol{x})_{k+1}) < \mathscr{J}(({\phi_{\pm}},\boldsymbol{x})_{k})$. To update the flow field $\boldsymbol{u}_k$ to $\boldsymbol{u}_{k+1}$, we solve the Oseen problem for the updated parameters $({\phi_{\pm}},\boldsymbol{x})_{k+1}$

with the boundary conditions given by (2.1). Algorithm 1 terminates if either the covariance-weighted norm for the perturbations of the model parameters is below the user-specified tolerance or the line search fails to reduce $\mathscr{J}$.
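
The structure of such an iteration can be sketched in Python as follows. This is a minimal illustration rather than the implementation used here: it replaces the damped BFGS update with the standard limited-memory two-loop recursion (which likewise needs only vector products), skips curvature pairs with $s\cdot y \le 0$ instead of damping them, uses a Euclidean norm instead of the covariance-weighted norm in the stopping test, and minimises a hypothetical quadratic instead of solving the Navier–Stokes and adjoint problems. All function names are illustrative.

```python
import numpy as np


def apply_inverse_hessian(grad, s_list, y_list):
    """Matrix-free product H~ @ grad from stored (s, y) pairs (two-loop recursion)."""
    q = np.array(grad, dtype=float)
    coeffs = []
    for s, y in zip(reversed(s_list), reversed(y_list)):  # newest to oldest
        rho = 1.0 / np.dot(y, s)
        a = rho * np.dot(s, q)
        coeffs.append((a, rho))
        q -= a * y
    if s_list:  # scale the initial inverse Hessian by the latest curvature
        q *= np.dot(s_list[-1], y_list[-1]) / np.dot(y_list[-1], y_list[-1])
    for (a, rho), s, y in zip(reversed(coeffs), s_list, y_list):  # oldest to newest
        b = rho * np.dot(y, q)
        q += (a - b) * s
    return q


def halving_line_search(J, x, direction, tau=1.0, max_halvings=30):
    """Start from tau and halve it until J decreases; tau = 0 signals failure."""
    J_old = J(x)
    for _ in range(max_halvings):
        x_new = x + tau * direction
        if J(x_new) < J_old:
            return x_new, tau
        tau *= 0.5
    return x, 0.0


def minimise(J, grad_J, x0, tol=1e-8, max_iter=100, memory=10):
    """Quasi-Newton descent with step halving (illustrative stopping rules)."""
    x = np.array(x0, dtype=float)
    g = grad_J(x)
    s_list, y_list = [], []
    for _ in range(max_iter):
        direction = -apply_inverse_hessian(g, s_list, y_list)
        x_new, tau = halving_line_search(J, x, direction)
        if tau == 0.0:  # line search failed to reduce J
            break
        g_new = grad_J(x_new)
        s, y = x_new - x, g_new - g
        if np.dot(s, y) > 1e-12 * np.dot(s, s):  # keep positive-curvature pairs only
            s_list.append(s)
            y_list.append(y)
            s_list, y_list = s_list[-memory:], y_list[-memory:]
        x, g = x_new, g_new
        if np.linalg.norm(s) < tol:  # parameter perturbation below tolerance
            break
    return x


# Hypothetical test problem: J(x) = 0.5 x^T A x - b^T x with minimiser A^{-1} b
A = np.diag([1.0, 10.0])
b = np.array([1.0, 1.0])
x_opt = minimise(lambda x: 0.5 * x @ A @ x - b @ x, lambda x: A @ x - b, np.zeros(2))
```

Only inner products with stored vectors are needed to apply the approximate inverse Hessian, which is why this kind of representation remains usable when the matrix itself is too large to form.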

2.6. Uncertainty estimation

We now briefly describe how the reconstructed inverse Hessian $\tilde{H}$ can provide estimates for the uncertainties of the model parameters. To simplify the description, let $x$ denote an unknown parameter distributed according to $\mathcal{N}(x_k,\mathcal{C}_x)$. The linear approximation to the data $\boldsymbol{u}^\star$ is given by

where $\boldsymbol{u} = \mathcal{Z}x$ and $\mathcal{Z}$ is the operator that encodes the linearized Navier–Stokes problem around the solution $\boldsymbol{u}_k$. To solve (2.48), we update $x$ as

where $\mathcal{Z}^{\dagger}$ is the operator that encodes the adjoint Navier–Stokes problem and $\mathcal{C}$ is the posterior covariance operator. It can be shown that (Tarantola 2005, Chapter 6.22.8)
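
One way to write this relation is sketched below, assuming the standard linear-Gaussian form of the posterior covariance with prior covariance $\mathcal{C}_x$ and data covariance $\mathcal{C}_{\boldsymbol{u}}$; it is offered as a form consistent with the surrounding definitions and not necessarily the exact expression of the cited reference. Factoring $\mathcal{C}^{-1}_x$ out of the first bracket gives the second form, and the final step uses the approximation property of $\tilde{H}_x$ noted in the text that follows:

\begin{equation} \mathcal{C} = \left(\mathcal{Z}^{\dagger}{\mathcal{C}^{-1}_{\boldsymbol{u}}}\mathcal{Z} + \mathcal{C}^{-1}_x\right)^{-1} = \left(\mathcal{C}_x\mathcal{Z}^{\dagger}{\mathcal{C}^{-1}_{\boldsymbol{u}}}\mathcal{Z} + \mathrm{I}\right)^{-1}\mathcal{C}_x \simeq \tilde{H}_x\,\mathcal{C}_x, \end{equation}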

where $\tilde{H}_x$ is the reconstructed inverse Hessian for $x$. Note that $\tilde{H}$ by itself approximates $(\mathcal{C}_x\mathcal{Z}^{\dagger}{\mathcal{C}^{-1}_{\boldsymbol{u}}}\mathcal{Z}+\mathrm{I})^{-1}$ and not