1. Introduction

Among its many uses, argumentation is often pursued with the aim of providing a rational response to disagreement. “En parlant on se comprend” (“by talking, we come to understand each other”), as the saying goes.Footnote 1 By means of rational exchange, we might understand each other better, overcome our differences, and converge in our views, fostering peaceful coexistence and promoting cooperation. That is the rosy picture.Footnote 2 However, this kind of convergence seldom occurs in argumentative reality. Instead, rather peculiar situations typically arise. Sometimes participants remain steadfast in their initial position, even while recognizing that the reasons being offered to them are relevant to the issue at hand. Even when no new evidence is being offered, some participants intensify their views. If this happens to antagonists in an exchange, their initial positions become more extreme, widening the gap that existed before the conversation took place.

It is a platitude that, contrary to what the rosy picture suggests, argumentation does not often lead to the rational resolution of disagreement. This fact is widely recognized, to the point of being the subject of an encyclopedic entry: “The usual result of arguing […] is that everyone remains more attached to their convictions than before”Footnote 3 (Diderot and D’Alembert 1765: 196). In arguments prompted by disagreement, some beliefs are rendered impervious to change in the light of evidence, and views are radicalized in the absence of new evidence for them. Worse still, in many cases argumentation seems to be the driving force behind these peculiar effects on people’s thinking. Although argumentation has all kinds of effects (e.g., emotional, social, political, and so forth), here I focus exclusively on its epistemic effects: the impact of argumentation on the beliefs or degrees of confidence (credences) of participants in the exchange. My aim in this paper is to provide the broad outline of a purely epistemic account of how argumentation can typically produce epistemic effects like the ones described above. For the kind of understanding that this account aims to provide, it is not enough to hint at some indefinite flaw or deficiency. Rather, the specific conditions under which these effects are to be expected should be identified. Insofar as these results are not only oddities but also undesirable, the account should offer some advice on interventions to avoid them; this, however, might not always translate into personal advice for improving one’s argumentation.

Against the prevailing view in contemporary argumentation theory, I deploy tools from logic and formal epistemology, contending that they provide descriptive and normative resources to account for a wide range of argumentative effects. I start by clarifying my use of the terms “argumentation,” “rationality,” and “disagreement.” Next, I outline a simple model of argumentative phenomena and use it to illustrate the epistemic effects of argumentation in “ideal” situations. Then, I discuss how some distinctive epistemic effects of argumentation in the face of disagreement are prone to come about. I conclude with some remarks about the kind of understanding we get from models like the one outlined in this paper, as well as their potential use for improving the quality of argumentative discourse.

2. Argumentation, rationality, and disagreement

Since these notions play a central role in a model that aims to account for the epistemic effects of argumentation in response to disagreement, in this section I clarify my use of “argumentation,” when its epistemic effects are deemed to be “rational,” and what counts as a “disagreement.”

Different perspectives on the study of argumentation focus on non-overlapping phenomena, amenable to different kinds of evaluation. Roughly, for rhetorical perspectives argumentation involves strategic attempts to persuade an audience, e.g., ways of “influencing others’ opinions and expectations without damaging cooperative bonds” (Bernache Maldonado 2025: 130). In dialectical perspectives, participants must perform certain roles in several stages for argumentative exchanges to occur (Eemeren 2018: 34–36). Here, I embrace a logical perspective. From this standpoint, argumentation requires arguments: inferentially structured sets of claims. Typically, communicative exchanges containing them involve people making statements that purport to contribute evidence to the topic under discussion. The assessment of these episodes involves argument evaluation,Footnote 4 which considers the qualities of the constituent claims as well as those of their inferential structure. On a positive evaluation, epistemic “goods” or “assets” can be associated with statements (e.g., truth, knowledge, justification, among others) and/or with their inferential link (e.g., truth-preservation, coherence, confirmation, understanding, among others).Footnote 5

By way of illustration, consider a sound deductive argument. From this logical perspective, the positive assessment of such an argument relies on the quality of its constituent statements (i.e., its premises are true, perhaps known to be true) and of their evidential link to its conclusion (i.e., its structure is valid, or truth-preserving). Thus, a sound deductive argument can provide knowledge or understanding of its conclusion, by competently deducing its truth from that of the premises. Its statements and its structure are jointly “truth-conducive” to the highest possible degree. Although this might be used as a template for evaluation from a logical perspective, the approach does not hold that only sound deductive arguments are good arguments. In a broader sense, “truth-conduciveness” can be generalized to uncertain deductions and non-deductive arguments. Thus, the features of arguments that are relevant to their evaluation from this logical perspective concern “epistemic rationality.”

Let us start with a slightly restrictive and highly idealizedFootnote 6 characterization of epistemic rationality in a communicative exchange. An epistemically rational agent engaged in argumentation seeks to maximize her epistemic assets. Starting from a coherent epistemic situation, she incorporates the “evidence” produced during the exchange. This includes the content of what her interlocutors say, the very fact that they make a claim, and the information available or salient in the conversational context. Two constraints govern this process of incorporating evidence.Footnote 7 First, the agent’s degree of confidence in statements that she does not recognize as logical truths should be responsive to (some) evidence. Second, new information should be incorporated systematically, seeking to preserve (synchronic) coherence in beliefs and degrees of confidence, and (diachronically) updating them in ways that are truth-conducive given adequate evidence. This characterization is highly idealized, since it abstracts away from a constellation of factors that do matter in people’s lives and are often present in argument. Furthermore, it introduces an unrealistic degree of precision, one we do not ordinarily use to describe the chaotic real world.

Since argumentative exchanges in the face of disagreement are the focus of our attention, before presenting a simple model of their epistemic effects, I offer a more precise characterization of “disagreement.” When we talk about disagreements, their subject matter is presented as claims or statements, which could be the premises or conclusions of arguments. Consider a statement p, e.g., the claim that God exists. Three doxastic attitudes toward the truth of p are usually distinguished: (1) believing that p is true (theism); (2) believing that p is false (atheism); and (3) suspending judgment concerning the truth of p (agnosticism). Thus, disagreement can be thought of as:

Doxastic disagreement (D-disagreement). People D-disagree about p if and only if they hold different doxastic attitudes toward p. (Frances and Matheson 2018: §1)

Under this characterization, an “argumentative resolution” of a D-disagreement requires that at least one of the parties change their initial doxastic attitude toward p as a result of argument, so that both end up holding the same attitude. However, even if there are changes in doxastic attitude, a D-disagreement can be “argumentatively stagnated” if the participants end up with different doxastic attitudes.

Instead of speaking about doxastic attitudes toward p, we can talk about a person’s degree of confidence, or “credence,” toward p. Returning to our previous example, someone could be highly confident in p’s truth, assigning p a credence close to 1 (theistic fundamentalism); she could be more confident in p’s truth than in its falsity, assigning p a credence above the threshold of 0.5 but quite distant from 1 (fallibilistic theism); she could be equally confident in p’s truth and in its falsity, with a credence of exactly 0.5; or she could be more confident in p’s falsity than in its truth, assigning p a credence below the threshold of 0.5 (fallibilistic atheism), perhaps even close to 0 (atheistic fundamentalism). This allows another characterization of disagreement:

Credal disagreement (C-disagreement). People C-disagree about p if and only if they hold different credencesFootnote 8 toward p. (Rowbottom 2018: §2)

For the “argumentative resolution” of a C-disagreement, both parties must converge on exactly the same credence toward p. For that to occur (or, more generally, for an argumentative “decrease” of C-disagreement), one or both parties must “shift” their degree of confidence toward p “in the direction” of the other party’s credence (i.e., they must “converge” with her on a value, or on a narrower interval than their starting point). C-disagreements can also be “argumentatively augmented” when, because of argument, one (or both) of the parties changes their degree of confidence toward p in the “opposite direction,” in a way that is not compensated by a change in the other party’s degree of confidence (i.e., the distance between their degrees of confidence increases, widening the gap). Even if there are changes in credence, a C-disagreement can be “argumentatively stagnated” if the distance between the degrees of confidence remains the same.
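To make these definitions concrete, here is a minimal Python sketch that classifies what an exchange did to a C-disagreement between two agents. It assumes, beyond what the text says, that the “distance” between credences is their absolute difference and that changes smaller than a small tolerance `tol` (a hypothetical parameter) count as no change. It looks only at the size of the gap, not at which party moved.

```python
def c_disagreement_change(before, after, tol=1e-9):
    """Classify the effect of an exchange on a C-disagreement about p.

    before, after: (credence of agent 1, credence of agent 2) toward p.
    Assumption: the 'distance' between credences is their absolute difference.
    """
    gap_before = abs(before[0] - before[1])
    gap_after = abs(after[0] - after[1])
    if gap_before <= tol:
        return "no initial C-disagreement"
    if gap_after <= tol:
        return "resolved"      # both now hold the same credence
    if gap_after < gap_before - tol:
        return "decreased"
    if gap_after > gap_before + tol:
        return "augmented"
    return "stagnated"         # credences may have moved, but the gap did not


print(c_disagreement_change((0.9, 0.2), (0.8, 0.1)))  # stagnated
```

The last line shows the case the text singles out: both credences changed, yet the distance between them stayed the same.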

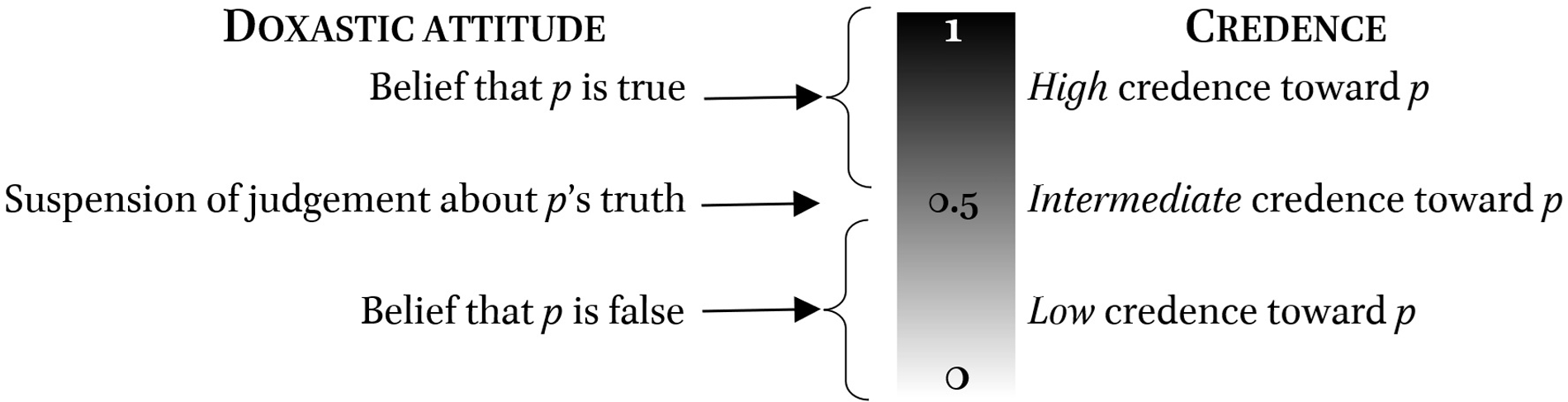

The distinction between D-disagreement and C-disagreement might appear unnecessary, and perhaps redundant.Footnote 9 By identifying doxastic attitudes with (intervals of) credences, we could dispense with one of the characterizations of disagreement just outlined, by means of a mapping such as the one in Figure 1.

Figure 1. A possible mapping of doxastic attitudes to (intervals of) credences.

There are, however, several theoretical objections to identifying beliefs with (intervals of) credence.Footnote 10 For instance, retaining the distinction between credence and belief might be crucial to account for some apparent exceptions to plausible rational constraints on the logic of belief (Titelbaum 2019: 2–3; 2022: 8–9). To avoid the Lottery Paradox, even extremely low credences toward p should not be identified with belief in the falsity of p. Consider a fair lottery with 10,000 tickets. If, for each ticket i, a 0.0001 credence in the statement “Ticket i will win the lottery” is not identified with the belief that ticket i will not win, there is no inconsistency in believing that at least one ticket will win the lottery. Analogously, to eschew the Preface Paradox, extremely high credences toward p should not be identified with belief in p’s truth. An author who has carefully revised the contents of her book might believe each claim included therein. Assigning a very high credence to the claim “At least one statement in my book is false” (i.e., acknowledging the possibility of error in her Preface) is not inconsistent with belief (and high credence) in every single claim.
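The arithmetic behind both paradoxes can be checked in a few lines. The sketch below assumes a hypothetical threshold rule for belief (believe a claim if and only if one’s credence in it reaches `threshold`) and assumes that belief is closed under conjunction. For the Preface case it further assumes, purely for illustration, that the author’s claims are probabilistically independent; the figures 0.99 and 200 are made up.

```python
from fractions import Fraction


def lottery_beliefs_inconsistent(n_tickets, threshold):
    """Does a hypothetical threshold rule for belief yield an inconsistent belief set?

    Rule (assumed): believe a claim iff credence in it >= threshold.
    Belief is also assumed to be closed under conjunction.
    """
    cr_ticket_loses = 1 - Fraction(1, n_tickets)  # same for every ticket i
    cr_some_ticket_wins = Fraction(1)             # a fair lottery is sure to have a winner
    believes_each_loses = cr_ticket_loses >= threshold
    believes_some_wins = cr_some_ticket_wins >= threshold
    # Conjoining every "ticket i loses" contradicts "some ticket wins".
    return believes_each_loses and believes_some_wins


def preface_credence_in_error(cr_each_claim, n_claims):
    """Credence that at least one of n (assumed independent) claims is false."""
    return 1 - cr_each_claim ** n_claims


print(lottery_beliefs_inconsistent(10_000, Fraction(95, 100)))  # True
print(round(preface_credence_in_error(0.99, 200), 3))           # 0.866
```

With 10,000 tickets, each “ticket i loses” has credence 0.9999, so any threshold up to that value licenses believing every such claim alongside “some ticket wins”. In the Preface case, 200 claims each held at 0.99 already leave a credence of about 0.87 that at least one of them is false.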

In addition to these normative advantages of preserving the distinction between credence and belief, it might be descriptively useful to distinguish the two kinds of disagreement. Two agents with the same doxastic attitude toward p do not D-disagree about p. They might, however, assign different credences to p (e.g., one might be much more confident of p’s truth than the other); thus, they C-disagree about p. This distinction is compatible with mapping doxastic attitudes to (intervals of) credences, but it does not require it. Conversely, there could also be cases where two agents D-disagree without any C-disagreement. For that to occur, agents with exactly the same credence toward p would have to hold different doxastic attitudes toward p. This possibility is not preserved by a mapping such as the one presented in Figure 1. Such cases might involve agents who adjust their degrees of confidence to the same value while retaining a prior commitment to their beliefs. This possibility will be explored in Section 4. In the meantime, both doxastic and credal disagreements will be treated as (potentially) genuine cases of disagreement.
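A mapping like the one in Figure 1 can be sketched as a function from credences to attitudes. The cut-offs below (suspension between 0.4 and 0.6) are hypothetical, since the figure’s exact intervals are not reproduced here. Whatever cut-offs are chosen, the attitude is a function of the credence, so equal credences always yield the same attitude. C-disagreement without D-disagreement is representable; D-disagreement without C-disagreement is not.

```python
def attitude(credence, low=0.4, high=0.6):
    """Hypothetical Figure-1-style map from a credence in p to a doxastic attitude.

    The cut-offs `low` and `high` are illustrative, not the figure's actual values.
    """
    if credence > high:
        return "believe p"
    if credence < low:
        return "believe not-p"
    return "suspend judgment"


def disagreements(cr_a, cr_b):
    """(D-disagree, C-disagree) when each attitude is read off the credence."""
    d_disagree = attitude(cr_a) != attitude(cr_b)
    c_disagree = cr_a != cr_b
    return d_disagree, c_disagree


print(disagreements(0.95, 0.7))  # (False, True): C- without D-disagreement
print(disagreements(0.9, 0.2))   # (True, True)
```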

We can now ask: What are the epistemic effects of arguing in the face of disagreement? To answer this question, I will sketch a simple probabilistic model.

3. A simple model of argumentative epistemic effects

To explore the epistemic effects of argument, in this section I outline a simplified modelFootnote 11 of some epistemic features of the participants in an argumentative exchange. Before attempting to account for peculiar cases such as those mentioned at the outset, it will be useful to illustrate how this model represents the epistemic effects of argumentation in “ideal” situations.

Our model assumes a finite set of epistemically rational agents {a, b, c, …, o}, representing the individuals taking part in the argumentative exchange. There is also a set of atomic propositions, denoted by letters p, q, r, …, u (with or without subscripts), which is closed under truth-functional operations. These are used to represent claims that are made within the conversational exchange, but they might also represent the information available to the participants at the outset or during the exchange. For each agent α, there is a probability function, Prα(Φ), which assigns real numbers between 0 and 1 to each (simple or complex) proposition Φ. These are credences reflecting α’s degree of confidence in Φ: they can be high (close to 1), low (close to 0), or intermediate. In addition to credences, an agent α might also have (qualitative) beliefs toward propositions, Bα(Φ).

In this model, epistemic rationality imposes (synchronic) consistency constraints. At any time t, the contents of α’s beliefs should not include or entail contradictions. Although each agent might assign different values to the same propositions, the consistency of their credence functions is equally constrained by the mathematical properties of the probability calculus. Additionally, all epistemically rational agents (diachronically) update their credences toward Φ by Conditionalization.Footnote 12 Assuming that Ψ represents everything the agent learns in the interval between a time $t_1$ and a later time $t_2$,

$$\mathrm{Pr}^{t_2}_{\alpha}(\Phi) = \mathrm{Pr}^{t_1}_{\alpha}(\Phi \mid \Psi), \qquad \text{provided that } \mathrm{Pr}^{t_1}_{\alpha}(\Psi) > 0;$$

when nothing is learned in the interval, Ψ is considered to be a tautology. This reflects the agent’s “balance of evidence”: the epistemic effect of the agent rationally assimilating information relevant for her prior credences. But epistemic rationality does not only demand deductive and probabilistic (synchronic) consistency, as well as systematic (diachronic) evidential updating. It also requires the choice of beliefs, considered as courses of action (i.e., as “cognitive options”),Footnote 13 that maximize expected epistemic utility. In this model, epistemically rational agents seek two epistemic goals: (1) to gather truths and (2) to avoid falsities. An agent’s beliefs considered as courses of action have an associated epistemic utility, u(Bα(Φ)), which depends on the truth and informativeness of the believed claim. When true propositions are more informative, they provide a greater utility for the agent who believes them. Some courses of action might also have an associated additional quasi-epistemic benefit, β, which may add to the epistemic utility of a course of action when the believed proposition turns out to be true.
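Conditionalization can be illustrated over a finite set of possible worlds. In this minimal sketch, which is an assumption of the example rather than part of the model as stated, propositions are sets of worlds and a credence function assigns exact fractions to the four worlds fixed by two atoms p and q. Learning Ψ = q between $t_1$ and $t_2$ replaces Prα(·) with Prα(· | q). The prior values are made up for illustration.

```python
from fractions import Fraction

# Worlds fix the truth values of two atomic propositions, p and q (hypothetical example).
WORLDS = ["p&q", "p&~q", "~p&q", "~p&~q"]
P = {"p&q", "p&~q"}   # worlds where p is true
Q = {"p&q", "~p&q"}   # worlds where q is true
TAUTOLOGY = set(WORLDS)


def pr(credence, prop):
    """Probability of a proposition, modelled as the set of worlds where it is true."""
    return sum(credence[w] for w in prop)


def is_coherent(credence):
    """Synchronic constraint: non-negative values summing to 1 over the worlds."""
    return all(c >= 0 for c in credence.values()) and sum(credence.values()) == 1


def conditionalize(credence, evidence):
    """Credence at t2 = credence at t1 conditional on everything learned (evidence)."""
    total = pr(credence, evidence)
    if total == 0:
        raise ValueError("Conditionalization requires Pr(evidence) > 0")
    return {w: (c / total if w in evidence else Fraction(0))
            for w, c in credence.items()}


prior = {"p&q": Fraction(3, 10), "p&~q": Fraction(2, 10),
         "~p&q": Fraction(1, 10), "~p&~q": Fraction(4, 10)}
posterior = conditionalize(prior, Q)
print(pr(prior, P), pr(posterior, P))  # 1/2 3/4
```

Conditionalizing on the tautology (all four worlds) returns the prior unchanged, matching the convention that Ψ is a tautology when nothing is learned.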


For our purposes, this model aims to perform two tasks concerning the epistemic effects of argumentation in the face of disagreement:

Explanation task: If the effects follow a recurring pattern, the model should explain why they do occur.

Optimization task: If the effects are undesirable, the model should provide guidance on how to avoid them.

Before examining the peculiar situations described at the outset, it is worth considering how this model would represent the epistemic effects of argumentation in “ideal” cases. Under some additional assumptions, Robert Aumann obtained the following result:

Aumann’s Theorem. If two agents have the same prior credences (a “common prior”), and their posterior credences toward p, obtained by conditionalizing on their evidence, are “common knowledge,” then these posteriors are equal, even if they are based on different information.Footnote 14
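Aumann’s theorem takes posteriors that are already common knowledge as given. One standard way to watch agreement emerge is the dialogue studied by Geanakoplos and Polemarchakis (1982): agents with a common prior publicly announce their posteriors, each announcement lets everyone rule out the states at which it would have been different, and the process repeats until no announcement is informative. The sketch below swaps in that dialogue. The finite state space, the uniform prior, the simultaneous announcements, and the two information partitions are illustrative assumptions, not part of the text.

```python
from fractions import Fraction


def prob(prior, states):
    """Probability of a set of states under the common prior."""
    return sum(prior[w] for w in states)


def posterior(prior, event, info):
    """Conditionalize the common prior on an information set: Pr(event | info)."""
    return prob(prior, event & info) / prob(prior, info)


def dialogue(prior, event, partitions, true_state, max_rounds=100):
    """Agents publicly announce their posteriors for `event` until nothing new is learned.

    partitions: one information partition (a list of frozensets) per agent.
    After each round, every agent discards the states at which some agent
    would have announced a different posterior.
    Returns each agent's final posterior at `true_state`.
    """
    states = range(len(prior))
    # info[i][w]: the states agent i cannot rule out when the true state is w
    info = [{w: next(c for c in part if w in c) for w in states}
            for part in partitions]
    for _ in range(max_rounds):
        announced = [{w: posterior(prior, event, agent[w]) for w in states}
                     for agent in info]
        refined = []
        for agent in info:
            new = {}
            for w in states:
                cell = agent[w]
                for ann in announced:
                    cell = cell & {v for v in states if ann[v] == ann[w]}
                new[w] = cell
            refined.append(new)
        if refined == info:  # announcements no longer tell anyone anything
            break
        info = refined
    return [posterior(prior, event, agent[true_state]) for agent in info]


# Illustrative example: four equally likely states; the event is {0, 3}.
prior = [Fraction(1, 4)] * 4
event = frozenset({0, 3})
alpha_partition = [frozenset({0, 1}), frozenset({2, 3})]
epsilon_partition = [frozenset({0, 1, 2}), frozenset({3})]

print(posterior(prior, event, alpha_partition[0]),
      posterior(prior, event, epsilon_partition[0]))  # 1/2 1/3
print(dialogue(prior, event, [alpha_partition, epsilon_partition], 0))
# [Fraction(1, 2), Fraction(1, 2)]
```

At the true state the two agents start out C-disagreeing (1/2 versus 1/3). Exchanging nothing but their posteriors eventually makes those posteriors common knowledge, at which point they coincide, as the theorem requires.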

Aumann points out that, “once one has the appropriate framework, [this result] is mathematically trivial. Intuitively, though, it is not quite obvious” (Aumann 1976: 1236). Adapted to our terminology, the theorem describes situations where two agents do not C-disagree about p prior to an exchange and share their evidential assessments concerning p. It concludes that the epistemic effect of this exchange cannot be a C-disagreement about p under conditions of “full disclosure.”Footnote 15 Applied to our topic, Aumann’s theorem suggests that argumentation itself does not generate C-disagreement when those involved start out in agreement and share their rational assessments of the evidence. This result is in line with the rosy picture’s normative intuitions about the epistemic effects of argumentation. However, it would be hard to find situations that fit such a description. One reason is that agents often assign different credences to the disputed claim: their starting point is a C-disagreement. The model seems to offer some additional encouraging prospects for the argumentative resolution of such disagreements, in light of various convergence theorems (e.g., Gaifman and Snir 1982). As Igor Douven explains,

What these theorems say, at bottom, is that the difference between prior [credences] do not mean all that much, as these priors will get “washed out” by the evidence, that is to say, as we go, the influence of the [credences] we once started out with will gradually diminish, and our [credences] will come to be increasingly determined by the evidence we receive. (Douven 2011: 259)
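The “washing out” of priors can be seen in a toy conjugate case: a Beta-Bernoulli model, not the Gaifman and Snir theorem itself. Assume, hypothetically, that both agents observe the same run of trials with a 70% success rate, and that each agent’s credence that the next trial succeeds is the posterior mean of her Beta prior. One agent starts near 0.91 and the other near 0.09.

```python
from fractions import Fraction


def posterior_mean(a, b, successes, trials):
    """Credence that the next trial succeeds, after a Beta(a, b) prior meets the data."""
    return Fraction(a + successes, a + b + trials)


def credence_gap(trials, rate=Fraction(7, 10)):
    """Both agents see the same record: a hypothetical 70% success rate."""
    successes = int(rate * trials)
    optimist = posterior_mean(20, 2, successes, trials)   # prior mean 10/11
    pessimist = posterior_mean(2, 20, successes, trials)  # prior mean 1/11
    return optimist - pessimist


for n in (0, 10, 100, 1000):
    print(n, credence_gap(n))
# 0 9/11
# 10 9/16
# 100 9/61
# 1000 9/511
```

In this particular setup the gap equals 18/(22 + n), so it shrinks steadily as shared evidence accumulates, whatever the observed success rate.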

Even if they start from a pronounced C-disagreement, by updating from shared evidence rational agents will gradually converge in assigning the same value (or interval) to their credences toward a proposition.Footnote 16 Since this convergence responds directly to evidence, it seems to contribute to the epistemic goals of gathering truths and avoiding falsities. These features of the model provide an optimistic forecast for the rational argumentative resolution of disagreement. For our current purposes, however, they pose a challenge. The robustness of these results (they are theorems!) suggests that the peculiar phenomena described at the outset of this paper are incompatible with the model. For convenience, I state them succinctly:

Steadfastness. While acknowledging the relevance of evidence presented in the argumentative exchange, a participant does not change her mind.

Escalation. With no new supporting evidence (beyond the fact of disagreement itself), a participant intensifies her degree of confidence in her view.

Polarization. With no new supporting evidence (beyond the fact of disagreement itself), participants intensify their degrees of confidence in opposite directions.
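Escalation and Polarization can be stated operationally in terms of credences. The sketch below assumes, hypothetically, that an agent’s “view” is the side of 0.5 on which her initial credence lies, and that “intensifying” means moving further toward the extreme on that side. It only inspects credences before and after an exchange, so the “no new supporting evidence” clause has to be checked separately. Steadfastness concerns belief rather than credence and is therefore left out.

```python
def intensified(before, after):
    """True if the credence moved further toward the extreme the agent leaned to.

    Assumption: the agent's 'view' is the side of 0.5 where her initial credence lies.
    """
    if before > 0.5:
        return after > before
    if before < 0.5:
        return after < before
    return False  # at exactly 0.5 there is no view to intensify


def classify_pattern(before, after):
    """before, after: (credence of agent 1, credence of agent 2) toward p."""
    moved = [intensified(b, a) for b, a in zip(before, after)]
    opposite_views = (before[0] - 0.5) * (before[1] - 0.5) < 0
    if all(moved) and opposite_views:
        return "polarization"
    if any(moved):
        return "escalation"
    return "neither"


print(classify_pattern((0.7, 0.3), (0.85, 0.15)))  # polarization
```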

These epistemic effects of argumentation diverge from the patterns suggested by the aforementioned theorems. In these scenarios, argumentation does not contribute to the rational resolution of disagreements; in some cases, it even aggravates them!

A popular suggestion is that the Explanation task for these patterns lies beyond the reach of simple models like the one sketched above. Insofar as these epistemic effects are not adequately represented by the behavior of epistemically rational agents, they must be attributable to some other facts about real flesh-and-blood human beings. Models dispense with our cognitive biases and abstract away from the emotional richness of our psychological lives; social ties and affiliations are omitted too; the plethora of rhetorical niceties that flood our conversations is also left out. These factors could play a crucial role in describing the epistemic effects of argumentation in the face of disagreement.Footnote 17 Besides, idealized epistemic models place too many and too severe demands on lazy, careless, and clumsy creatures, as many of us (and our interlocutors) are. Thus, the forces preventing the argumentative resolution of disagreement might derive from deviations from epistemic rationality.

If we must resort to these kinds of alternative explanations, our model cannot fulfill the tasks we assigned to it. In that scenario, explaining why argumentation has these effects and offering prescriptions for how to avoid them would be beyond the scope of epistemic rationality, as characterized by the model. It would also require paying attention to features of argumentation that are not considered by the logical perspective. However, in the following sections I will show that these apparently irrational epistemic effects can be melted down and poured into the behavior molded for epistemically rational agents. The rigid cast resulting from this process will show that “the irrational is not merely the non-rational, which lies outside the ambit of the rational; irrationality is a failure within the house of reason” (Davidson 1982: 169). To achieve this, I will rely heavily on previous work.

4. Rational steadfastness in the face of disagreement

Lara Buchak describes situations in which one is prone “to adopt a conviction and to maintain that conviction when counterevidence arises […] even if one’s credences would not make it rational to adopt that belief anew” (Buchak 2021: 210). Some beliefs a person holds in such circumstances do not seem to respond sensibly to her balance of evidence. This seems incompatible with our model: either this person is not behaving as one would expect of an epistemically rational agent, or these epistemic effects are due to further non-epistemic factors excluded from the model. Such situations are not unusual: they are typical, especially when it comes to controversial claims about important issues.Footnote 18 Buchak suggests that these situations involve “faith,” understood as some kind of sustained resistance to counterevidence; but she also argues that such an attitude can be epistemically rational under certain conditions. By specifying those conditions within the model, we can test its ability to explain the epistemic effects of argumentation in the face of disagreement.

An agent α having “faith” toward p, in an epistemic situation q with possible additional evidence E, is subject to the following conditions:

- (i) α must believe that p and assign it an initially high (but less than 1) credence, conditional on q.
- (ii) α must commitFootnote 19 to take a risk on p, regardless of counterevidence E.
- (iii) α must follow through on her commitment even when receiving counterevidence E against p.

As we have seen, epistemically rational agents can have different initial beliefs and credences, so condition (i) seems compatible with the model. What seems problematic is (iii): How can such an agent believe that p while also acknowledging counterevidence that diminishes her credence toward p? This is a case of Steadfastness.

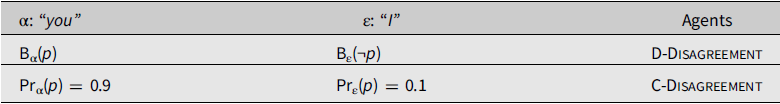

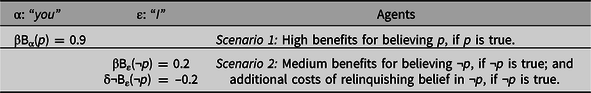

To illustrate, imagine you (represented by α) and I (represented by ε) are arguing about an issue (p) on which we hold different doxastic attitudes and toward which we hold different degrees of confidence: we are both in D-disagreement and in C-disagreement (see Table 1).

Table 1. Initial disagreements concerning p.

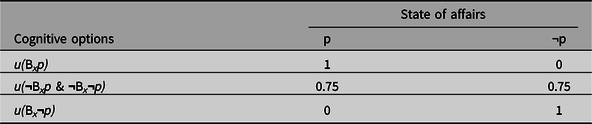

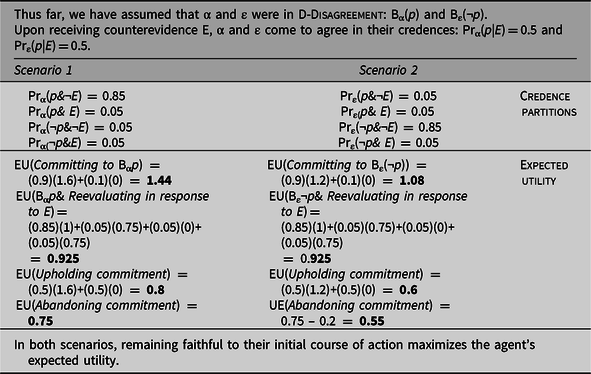

We argue. As a result of the exchange, you and I come to agree on the balance of evidence, converging on exactly the same value for our respective credences Prα(p) and Prε(p), thus resolving our C-disagreement. Nevertheless, “it might still be the case that each of us should remain committed to our initial belief, despite now sharing the same credence” (Buchak 2021: 211). The possibility of such a situation is incompatible with the existence of a general principle governing synchronic rational relations between beliefs and credences.Footnote 20 It is worth noting that the model we presented does not demand such a principle. Thus, it is open to the possibility of epistemically rational agents having “faith” toward certain claims. The explanatory work of the model consists in detailing under what conditions having “faith” would maximize expected epistemic utility for an agent, thus rendering these situations typical. It is here that the ideas of “commitment to take a risk” and “following through on a commitment” play a crucial role in Buchak’s explanation. Roughly, she identifies general sets of conditions under which, given an initial epistemic situation compatible with (i), committing to a belief is a course of action that maximizes expected epistemic utility compared to reevaluating that same belief after receiving counterevidence.

To continue with our previous example, we could make the following assumptions for our cognitive options toward p: for either of us, “believing p” has a utility of 1 if p is true and 0 if not; “believing ¬p” has a utility of 1 if p is false and 0 if not; “remaining agnostic toward p” has a utility of 0.75 regardless of p’s truth-value, since it avoids being mistaken but is less informative (see Table 2).

Table 2. Utility assigned to our cognitive options toward p.

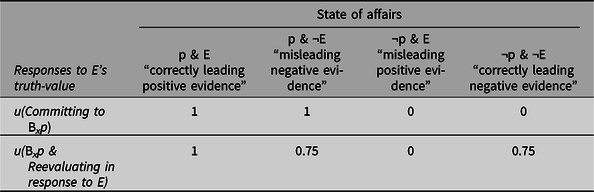
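With the Table 2 utilities, the expected epistemic utility of each cognitive option is a linear function of the agent’s credence in p. The sketch below computes it and picks the best option; the utilities are those stated in the text, while the function names are hypothetical. It implies that believing p is the best option only when credence in p exceeds 0.75, and believing ¬p only when it falls below 0.25.

```python
# Table 2 utilities: option -> (utility if p is true, utility if p is false)
UTILITY = {
    "believe p": (1.0, 0.0),
    "believe not-p": (0.0, 1.0),
    "suspend": (0.75, 0.75),
}


def expected_utility(option, cr_p):
    """Expected epistemic utility of a cognitive option, given credence cr_p in p."""
    u_true, u_false = UTILITY[option]
    return cr_p * u_true + (1 - cr_p) * u_false


def best_option(cr_p):
    """Option with the highest expected epistemic utility (ties go to the first listed)."""
    return max(UTILITY, key=lambda option: expected_utility(option, cr_p))


for cr in (0.9, 0.6, 0.1):
    print(cr, best_option(cr))
# 0.9 believe p
# 0.6 suspend
# 0.1 believe not-p
```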

Assuming that counterevidence E might either be misleading or correctly track the actual state of affairs, we can compare the utility of “Committing” to a course of action (e.g., “to believe p”) with that of “Reevaluating” our initial course of action after receiving counterevidence E (see Table 3).

Table 3. Utility of “Committing” and “Reevaluating,” upon receiving counterevidence E (Buchak 2021: 201).

As Buchak observes, committing might maximize expected epistemic utility compared to reevaluating after receiving counterevidence when there are quasi-epistemic benefits,Footnote 21 β, that go beyond the utility of believing something true at time t. For example, consider the scenarios presented in Table 4.

Such benefits are common in various circumstances: (a) when continuously believing the truth has higher epistemic value than intermittent patterns of belief (e.g., for gaining knowledge while sustaining interpersonal relationships); (b) when a claim is a high-level proposition and is deeply embedded in the web of reasoning (e.g., it is at the core of a paradigm or worldview); or (c) when a claim is at the base of a long-term intellectual project (e.g., in science, morality, religion, and so forth) that requires actions over time whose rationality depends on believing that claim. It seems that some of these conditions are clearly met in ordinary cases of Steadfastness.Footnote 22 Our example would provide the cases of rational Steadfastness represented in Table 5.Footnote 23

Table 4. Quasi-epistemic benefits of Committing.

Table 5. Examples of rational Steadfastness with D-disagreement, but without C-disagreement.
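A deliberately simplified comparison shows how β can make Committing beat Reevaluating. This is not Buchak’s decision model (Table 3, not reproduced here, compares the options before the evidence arrives). The sketch assumes, hypothetically, that after counterevidence the committed agent keeps believing p, that β is earned only if p is true, and that reevaluating means choosing the best Table 2 option at the new credence. Under these assumptions, `commitment_threshold` gives the post-evidence credence above which commitment still pays.

```python
def eu_reevaluate(cr_after):
    """Re-choose the best Table 2 option at the post-evidence credence in p."""
    return max(cr_after, 1 - cr_after, 0.75)  # believe p, believe not-p, suspend


def eu_commit(cr_after, beta):
    """Keep believing p; the bonus beta is earned only if p is true (assumption)."""
    return cr_after * (1 + beta)


def commitment_pays(cr_after, beta):
    """True if committing strictly beats reevaluating at this credence."""
    return eu_commit(cr_after, beta) > eu_reevaluate(cr_after)


def commitment_threshold(beta):
    """Credence above which committing beats reevaluating, for beta > 0."""
    beats_suspending = 0.75 / (1 + beta)   # c * (1 + beta) > 0.75
    beats_disbelieving = 1 / (2 + beta)    # c * (1 + beta) > 1 - c
    return max(beats_suspending, beats_disbelieving)


print(commitment_pays(0.6, 0.5), commitment_pays(0.6, 0.0))  # True False
print(commitment_threshold(0.5))                              # 0.5
```

At a credence of 0.6, an agent without β would suspend judgment, while β = 0.5 makes continued belief the better option. Once counterevidence pushes her credence below the threshold, the benefit no longer anchors the belief.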

Insofar as these conditions account for the pervasiveness of rational Steadfastness in many ordinary cases, the model might perform the Explanation task for them. Moreover, as Buchak notes,

The result here goes beyond the obvious point that if there are benefits to commitment, then it can be good to maintain a belief even if it wouldn’t have been good to believe it in the absence of these benefits. What the model contributes is showing which things, exactly, we ought to be weighing against each other, and how the magnitude of the new probability matters. (Buchak 2021: 206)

Although the model does not by itself settle whether Steadfastness is undesirable in a given case, it portrays situations in which Steadfastness would not be an undesirable outcome. Thus, the Optimization task should consider additional dimensions. If we manage to isolate the undesirable cases, what does the model tell us about how to avoid them? Roughly speaking, the model is compatible with an argumentative resolution of these cases of D-disagreement. Since faith does not constrain the balance of evidence, agents might converge on their credences, resolving their C-disagreement. Even if belief can be rationally maintained against counterevidence for some time, this does not occur indefinitely or in the face of any amount of counterevidence: the effect of benefits “anchoring” belief might be outweighed by the balance of evidence, especially over time.Footnote 24 Thus, commitment is eventually abandoned and the D-disagreement argumentatively resolved.

Some valuable clues can be gleaned from the model about what happens in such situations. To the extent that an agent’s beliefs interact with her balance of evidence, upholding a commitment along with certain credences will be an epistemically awkward position for the agent: she believes something that it “would not be rational to believe if [she] approached the question anew. […T]his tension is in fact felt when we respond to disagreement by maintaining our own beliefs, and so it is an advantage of the [model] that it can explain this tension” (Buchak 2021: 214). Facing Steadfastness argumentatively will foster this kind of epistemic tension, perhaps for considerable periods of time. Additionally, relinquishing faith will differ from other kinds of doxastic change: once credence crosses a certain threshold, the motivational effect of commitment fades away, and the agent experiences a sudden “doxastic openness to evidence.” Given the kinds of beliefs that tend to be objects of commitment, this change will have large-scale repercussions in the epistemic life of a person: it will be akin to an episode of religious “conversion.” Thus, according to the model, the argumentative resolution of disagreements in cases of Steadfastness might not always be desirable, and it is probably not easy.

5. Rational escalation and polarization

Let us now consider cases of Escalation and Polarization. Our description of these situations seems to involve a peculiar weighting of evidence in response to an argumentative exchange in the face of disagreement. Regardless of whether there is a D-disagreement, the net effect of argumentation in these cases seems to be an increase in the “distance” between the agents’ credences, thus aggravating a C-disagreement. Unlike Lara Buchak’s account of Steadfastness, this response to disagreement seems epistemically irrational mainly at the level of the balance of evidence. We have assumed that in these cases the only relevant information that emerges from the argumentative exchange is the fact of the disagreement itself. So, why should a participant modify her credence in the opposite direction of that of her interlocutor, as occurs in Escalation?Footnote 25 Why would both participants drift apart, as they do in Polarization? These phenomena seem at odds with the optimistic theorems of the model. As far as I know, there is no completely general explanation of these phenomena within the model. Some preliminary results, however, seem encouraging for the Explanation task.

Kenny Easwaran, Luke Fenton-Glynn, Christopher Hitchcock and Joel Velasco (2016) have investigated how an epistemically rational agent should respond to the discovery of the credence that other agents assign to a claim.Footnote 26 They aim to identify a computationally undemanding heuristic (one that is psychologically plausible) that emulates the epistemic effect of conditionalizing on the credences of other agents. In devising such a heuristic, they require that it allow agents to respond “synergistically” upon learning the prior credences of other agents, i.e., that the credence toward p that α arrives at after learning ε’s credence toward p may lie “outside of the interval” of their prior credences, $[\min_{x \in \{\alpha, \varepsilon\}} Pr_x(p), \max_{y \in \{\alpha, \varepsilon\}} Pr_y(p)]$. This property, which they call “synergy,” sometimes does result from the (computationally more complicated) procedure of conditionalizing on the credences of other agents together with assessments of their reliability (Easwaran, Fenton-Glynn, Hitchcock and Velasco 2016: 36–37). Thus, as they put it, “in some cases of disagreement, the credences of one’s peers are evidence against one’s view; here, they are evidence for it” (Easwaran, Fenton-Glynn, Hitchcock and Velasco 2016: 10). Though the cases they analyze do not exhaust the situations in which synergy occurs, they suggest that “such a response will be rational in any case in which, despite the fact that one’s credence in [p] differs from those of one’s peers, the different credences nevertheless represent a kind of agreement about whether the evidence favors [p] or [¬p]” (Easwaran, Fenton-Glynn, Hitchcock and Velasco 2016: 10).
In our terms, this is a case of Escalation: arguing about an initial C-disagreement results in (at least) one of the participants intensifying her degree of confidence (moving her credence to a value outside the range of the original disagreement). Although it seems peculiar under this description, in some cases it appears intuitively rational to reason synergistically. Consider the following situation:

A doctor wonders what dosage of a drug to give a patient. She is initially very confident (e.g., with credence 0.97) that she should inject 5 ml. She consults with one of her colleagues. She discovers that her colleague is also very confident (e.g., with credence 0.92) that the correct dose is 5 ml. As a result, she increases her credence (e.g., up to 0.98) that the correct dose is 5 ml.Footnote 27

In such cases, Escalation seems a rational response to the exchange. Roughly, this seems to be the case when, despite there being a C-disagreement among participants considered as reliable sources of information, there is no D-disagreement among them. However, this crude generalization is insufficient to account for many apparent cases of Escalation. A more careful examination of the model could provide better insight into the conditions that account for this phenomenon.
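How conditionalization can yield synergy in the doctor case can be sketched in Python under two assumptions that do not come from the text: both doctors start from a shared prior of 0.5, and their credences reflect conditionally independent private evidence. Under those assumptions, conditionalizing on both credences amounts to multiplying the agents' likelihood ratios in odds form. This is one simple independence-based rule, not necessarily the heuristic Easwaran and colleagues propose; the function names are illustrative.

```python
# Hypothetical sketch of synergy under conditionalization. Assumptions (not from
# the text): a shared prior of 0.5 and conditionally independent private evidence.

def pool_independent(credences, prior=0.5):
    """Credence in p after learning everyone's credence, treating each agent's
    odds divided by the prior odds as the likelihood ratio of her evidence."""
    prior_odds = prior / (1.0 - prior)
    odds = prior_odds
    for c in credences:
        odds *= (c / (1.0 - c)) / prior_odds
    return odds / (1.0 + odds)


def is_synergistic(own, other, pooled):
    """True iff the pooled credence lies outside [min(own, other), max(own, other)]."""
    return not (min(own, other) <= pooled <= max(own, other))


doctor, colleague = 0.97, 0.92
pooled = pool_independent([doctor, colleague])
print(round(pooled, 4), is_synergistic(doctor, colleague, pooled))  # 0.9973 True

# Contrast: the colleague's evidence favors not-p (credence below the prior).
skeptic = 0.2
pooled2 = pool_independent([doctor, skeptic])
print(round(pooled2, 4), is_synergistic(doctor, skeptic, pooled2))  # 0.8899 False
```

Under these assumptions the pooled credence (about 0.997) overshoots the illustrative 0.98; partial dependence between the doctors' evidence, or doubts about the colleague's reliability, would tend to pull it back toward 0.97. When the colleague's credence lies below the shared prior, so that the two disagree about which way the evidence points, the pooled value falls inside the original interval and there is no synergy.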

The most relevant results concerning Polarization in the model come from experiments with computer simulations. As Erik Olsson (2021) discusses in a literature review, computer simulations of Bayesian agent networks (i.e., networks whose agents satisfy the constraints of the model) offer a robust “argument for the rationality of polarization. Not only is polarization compatible with Bayesian updating; polarization is […] omnipresent in social networks governed by such updating” (Olsson 2021: 221–222). These results come from experiments on a social communication network represented by means of a simulation model called “Laputa,” written by Staffan Angere (Olsson 2011). The outcome of these experiments depends on feeding the model some arbitrary values (e.g., for the agents’ initial credences and for the functions by which they assign and update their estimates of their interlocutors’ trustworthiness). By running the experiment across many combinations of these parameter values (285 groups), Josefine Pallavicini, Bjørn Hallsson and Klemens Kappel (2021) concluded: “The very surprising result of this simulation is all of the groups polarized to some degree. In fact, most groups polarized to the maximum level. There were no conditions under which depolarization occurred” (Pallavicini, Hallsson and Kappel 2021: 22). There is no general explanation of why this happens for agents of the model.Footnote 28 An attractive suggestion, however, is that Polarization is caused by

differences in background beliefs and the effects those differences have, […since] those effects essentially mean, in the case of credence updating, that evidence coming from sources believed to be trustworthy is taken at face value, whereas evidence coming from sources believed to be biased is taken as “evidence to the contrary.” (Olsson 2021: 218)Footnote 29
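The mechanism described in this passage can be illustrated with a toy two-agent Python sketch. It is not the Laputa model: here each agent's trust in a single source is a fixed, assumed number (the probability that the source reports truly), whereas Laputa also updates trust over time. An agent who trusts the source (trust above 0.5) takes its report at face value; one who regards it as biased (trust below 0.5) treats the very same report as evidence to the contrary.

```python
# Toy sketch (not the Laputa model): two agents hear the same source assert p
# five times. Each agent's trust in the source is a fixed, assumed number.

def update_on_report(credence, trust):
    """Credence in p after the source asserts p, assuming
    P(assert p | p) = trust and P(assert p | not-p) = 1 - trust.
    With trust < 0.5 the assertion counts as evidence against p."""
    numerator = credence * trust
    return numerator / (numerator + (1.0 - credence) * (1.0 - trust))


agents = {"A": (0.6, 0.8),  # initial credence 0.6, regards source as trustworthy
          "B": (0.4, 0.3)}  # initial credence 0.4, regards source as biased
history = {name: [credence] for name, (credence, _) in agents.items()}

for _ in range(5):  # the source asserts p five times
    for name, (_, trust) in agents.items():
        history[name].append(update_on_report(history[name][-1], trust))

gaps = [a - b for a, b in zip(history["A"], history["B"])]
print([round(g, 2) for g in gaps])
```

Both agents apply Bayes' rule to identical reports, yet the gap between their credences widens at every step, from 0.2 to roughly 0.99: the pattern the text calls Polarization, produced here entirely by their different background assessments of the source.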

If we focus our attention on real situations that resemble Polarization, these kinds of conditions often seem to be fulfilled. According to Thi Nguyen (2020), in addition to epistemic bubbles (where you do not hear the other side), there are echo chambers: social structures that discredit all outsiders. Outsiders’ testimony is not just ignored but taken as evidence for the falsity of its content. In light of the robustness of the computational results, and the fact that “depolarization” does not occur, perhaps an argumentative resolution of this kind of disagreement is not possible, or it is extremely unlikely.

An outcome like this might not be so bleak. After all, sustained disagreement might be accompanied by other epistemic benefits for groups cultivating it, such as “the revelation of a complex of interrelated assumptions, values, inferential standards, and cognitive goals […] through critical interaction among researchers, [which is] disagreement under a more benign name” (Longino 2022: 179). Another moral that could be drawn from the model is that, even if disagreements that exhibit Polarization cannot be argumentatively resolved, they are susceptible to other kinds of epistemically rational interventions:

The route to undoing their influence is not through direct exposure to supposedly neutral facts and information; those sources have been preemptively undermined. It is to address the structures of discredit – to work to repair the broken trust between [members of such structures] and the outside social world. (Nguyen 2020: 159)

6. Final remarks

I have explored the resources of the logical perspective, equipped with probabilistic tools, for describing and assessing various epistemic effects of argumentation in response to disagreement. I used a simple model to illustrate how this approach allows us to identify very general sets of conditions under which an argumentative resolution of disagreements can be expected. Unsurprisingly, this seems possible under the constraints of epistemic rationality in “ideal” situations: when agents share initial credences and assessment of evidence, or when different initial credences are repeatedly updated on shared evidence. More controversially, drawing on several recent formal results, I suggested that the logical perspective can also describe sets of conditions under which argumentation does not contribute to the resolution of disagreements, or even aggravates them. Under these conditions, it might be epistemically rational (although sometimes undesirable) for disagreement to survive argumentation. Modeling these situations makes it possible to identify interventions to avoid these conditions; the simplest or most effective interventions might not always be argumentative.Footnote 30

The highly idealized model I sketched has several limitations. It leaves out much of what is important in argumentative exchanges, and many details would have to be added to apply it to real situations. Nevertheless, even this outline offers recognizable diagnoses of what seems to be happening in various argumentative exchanges in the face of disagreement. It identifies the factors that play a crucial role in these situations, and the normative constraints that seem to guide them. This is compatible with other factors (e.g., choice of words, appeal to emotions, tone of voice, virtuous behavior, and so forth) playing a significant role in producing such epistemic effects in argumentative exchanges. It is not essential, however, to take them into account to explain the possibility of such effects: they might occur even while agents remain epistemically rational. Requiring that “most” people – most of the time – be “good approximations to” epistemically rational agents should not strike one as a flaw of the model. This assumption makes it easier to deal with people; furthermore, it is explanatorily more fruitful than assuming that they are victims (or executioners) of rampant irrationality. However, the model assumes a degree of precision that misrepresents real epistemic capabilities and duties. At this point, it is important to remember that it is simply a model. It “can be thought of as an imaginary, simplified version of the part of the world being studied, one in which exact calculations are possible” (Gowers 2002: 4). Still, it can provide a very general understanding of why argumentation in the face of disagreement typically produces certain kinds of epistemic effects.

Acknowledgements

This paper is part of the project “Modelos epistémicos de argumentación científica,” funded by UAM-I. Thanks to Mario Gensollen, Nancy Nuñez, Fabián Bernache, Fernanda Clavel, José de Teresa, Yolanda Torres, Juan Carlos Sánchez, Alejandra Tovilla, Christian Omar Méndez, José Carlos Rojas, Luis Gustavo Guzmán, Pablo Rangel, Marcos Iván Ramos, and Joaquín Galindo for insightful conversations on different aspects of the topic of this paper. Earlier versions were presented at the V Coloquio Internacional de Argumentación y Retórica (Mexico 2022) and as an invited lecture at the Universidad Autónoma de Guerrero (Chilpancingo 2024); I thank the audiences at both events for their questions and comments. I am also grateful to an anonymous referee for extensive and incredibly helpful comments. The remaining mistakes and misconceptions are mine.