Policy Significance Statement

With Canada’s most recent legislation on AI and data, we had the opportunity to examine the internals of Canadian regulation and governance as the legislation was drafted, contested and ultimately scrapped. The origins of the AI and Data Act (AIDA) in a federal economic development department allowed us to critique AI policy from the perspective of shared prosperity and show that the groundwork for governments to accelerate economic inclusion was missing. We considered not just impacts on the local population but also impacts on workers’ rights outside Canada. Our recommendations for future regulation are: implementing accountability mechanisms for public and private sectors; acknowledging the problems in the dual agendas of regulating and promoting AI and data; enforcing rights for all workers involved in the production of AI; and enabling pathways for meaningful public participation in AI policy.

1. Introduction

Many countries view regulations as a governance mechanism not to constrain artificial intelligence (AI) development and deployment but to promote innovations in AI. By supporting innovation and economic development, government officials and others argue that this governance-by-regulation will ensure all citizens gain from the benefits of AI. This sentiment plays out as national governments draft proposals to regulate AI. For instance, the United Kingdom promoted a pro-innovation approach to AI regulation: ‘making responsible innovation easier and reducing regulatory uncertainty … [that] will encourage investment in AI and support its adoption throughout the economy, creating jobs and helping us to do them more efficiently’ (UK Office for Artificial Intelligence, 2023). The European Union’s AI Act promises to ‘ensure that AI systems in the EU are safe, transparent, ethical, unbiased and under human control’ (European Parliament, 2023). In the development of its executive order on AI, the United States (US) asserted that ‘AI—if properly managed—can contribute enormously to the prosperity, equality and security of all’ (U.S. White House, 2023). The US described responsible AI as having ‘the potential to help solve urgent challenges while making our world more prosperous, productive, innovative and secure’ (U.S. White House, 2023). In these various locales, regulations directly have tied technological advances to capital development, which presumably induces inclusive progress across the broadest range of individuals.

Proponents of AI promise exponential benefits, from the optimisation of the energy grid and medical and scientific breakthroughs to improvements in government service delivery and increased convenience of everyday life. We are sceptical that AI possesses such heightened power to generate positive impacts, as AI amplifies existing societal biases (e.g., Eubanks, 2017; Noble, 2018; Benjamin, 2019). AI systems are often transjurisdictional in their reach and therefore AI companies may be averse to jurisdictional regulation. The hyperbole surrounding AI also is novel because it crosses partisan divides. Like other countries, Canada has rushed to invest in AI (Brandusescu, 2021) while appearing to ignore calls to protect intellectual property (IP) or address potential environmental degradation.

Despite proposed benefits from advances in AI or other innovations, historically, prosperity from these advances has been shared widely only when ‘society’s approach to dividing the gains [was] pushed away from arrangements that primarily served a narrow elite’ (Johnson and Acemoglu, 2023: 6). The Canadian Senate argued that historically economic development plans failed to boost economic opportunities for marginalised communities, and that transitioning to shared prosperity requires recognising this unsustainable economic imbalance and rethinking how governments, businesses and organisations engage with citizens for broader access and equity (Senate of Canada, 2021). AI Now Institute’s Kak and Myers West (2024) caution against a future where, ‘the concentration of AI-related resources and expertise within the private sector makes it almost inevitable that all roads (and explicitly, profits and revenue) will lead back to industry’.

Canada’s proposed and now failed AI legislation offers a case study in the rhetorical collision of economic development and societal concerns of Canadians. We contend that AI innovation will not deliver broad-based economic development and inclusive benefits. What follows is a brief overview of the origins of Canada’s AI and Data Act (AIDA). We argue that several opportunities were missed during the drafting of AIDA. These missing elements led to extensive critiques, delays and the ultimate downfall of AIDA, when the Canadian Parliament was prorogued. (As of January 6, 2025, AIDA is no longer under review because Parliament is prorogued, which means that all draft bills have died.)

To remedy AIDA’s missed opportunities, we recommend the Canadian government and, by extension, other governments, invest in a future AI act that prioritises: (1) accountability mechanisms and tools for the public and private sectors; (2) robust workers’ rights in terms of data handling; and (3) meaningful public participation in all stages of legislation. These critiques and recommendations have implications for Canada and the world.

2. The origins of Canada’s AIDA

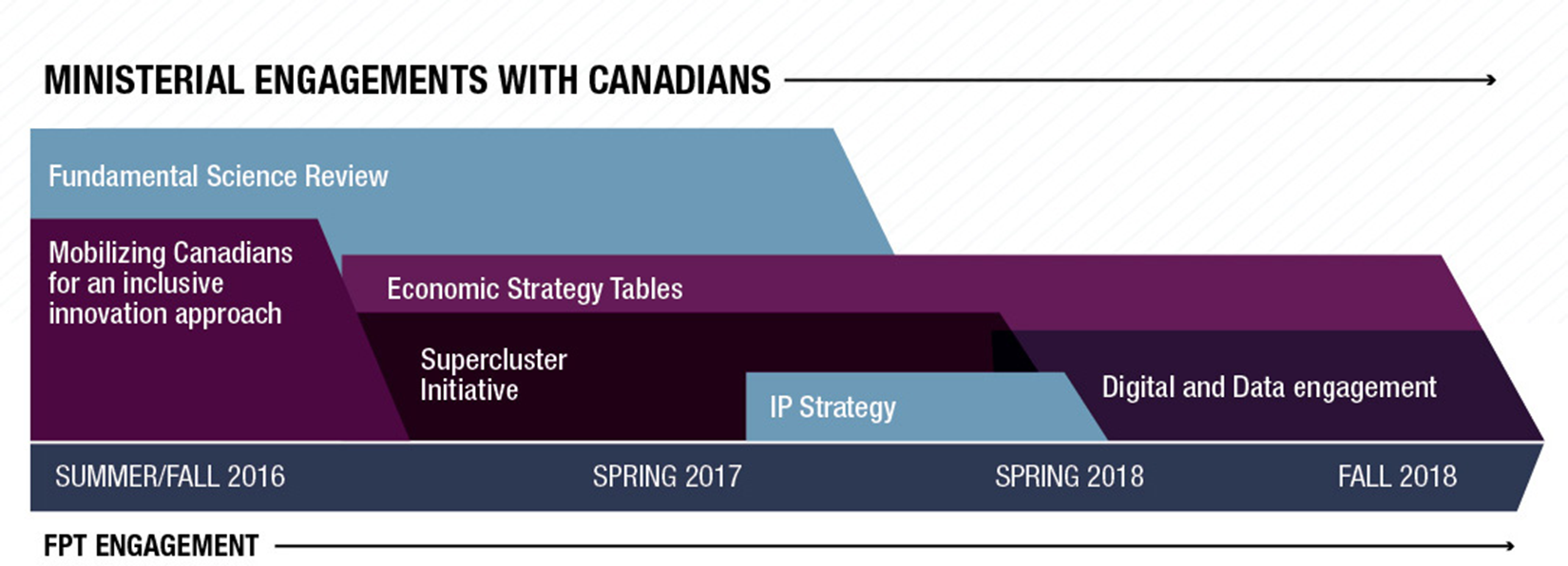

The Canadian governmental department called Innovation, Science and Economic Development Canada (ISED) was tasked with drafting a new digital and data strategy that evolved into the Digital Charter (Shade, 2019). (It is important to note that ISED was renamed from Industry Canada in 2015, with the goal of enhancing the country’s productivity and competitiveness within a global economy, thereby contributing to the economic and social welfare of Canadians.) From its inception in 2016, the Digital Charter was driven by ISED’s goals of economic development vis-à-vis innovation. In addition to promotion, the department formulates regulations and compliance mechanisms (Innovation Science and Economic Development Canada, 2019a). The Digital Charter is considered an aspirational document, which borrows from and must comply with the Charter of Rights and Freedoms (Department of Justice Canada, 1982). Overall, the Digital Charter presumes that this ‘series of commitments [will] build… trust in the digital and data economy’ (Scassa, 2023). Consequently, the Digital Charter included a broad range of entities like the Canadian Statistics Advisory Council as well as initiatives like Protecting Democracy, Christchurch Call to Action, and Computers for Schools (Figure 1).

Figure 1. ISED’s ministerial engagements with Canadians for the Digital Charter.

The Digital Charter also has roots in calls to modernise Canada’s consumer and personal privacy laws in response to big data and technologies like AI. An example is in the 2017 statement on ‘the need for a modern privacy and data protection regime, the value of data trusts and the need for compatibility with the EU’s General Data Protection Regulation [GDPR]’ (Innovation Science and Economic Development Canada, 2019b). Like regulators in many countries, ISED looked to the EU GDPR as the gold standard regulation for data protection and privacy. A related initiative was the call in the Digital Charter to revise the protection of IP. In 2018, ISED launched a new strategy to ‘help Canadian entrepreneurs better understand and protect their IP, and get better access to shared IP’ (Innovation Science and Economic Development Canada, 2019b). Thus data protection, (consumer) privacy and more broadly the realm of the digital became fundamental to economic development in Canada and to ISED, where ‘the data-driven economy represents limitless opportunities to improve the lives of Canadians—from producing faster diagnoses to making farming more efficient’ (Innovation Science and Economic Development Canada, 2019c).

Between June and September 2018, the Digital Charter moved from an initial draft to public consultations, which were structured on ISED’s vision to ‘make Canada a nation of innovators’ (Innovation Science and Economic Development Canada, 2019b). ISED’s Minister and the six Digital Innovation Leaders he selected conducted 30 public roundtable discussions with ‘business leaders, innovators and entrepreneurs, academia, women, youth, Indigenous peoples, provincial and territorial governments and all Canadians’ (Innovation Science and Economic Development Canada, 2019b). A total of 580 participants attended a four-month consultation process, which ISED distilled into three categories: skills and talent; unleashing innovation; and privacy and trust. Of note, ‘rapid acceleration of data being created, and its use as a commodity means Canada must re-evaluate the [marketplace] frameworks’ (Innovation Science and Economic Development Canada, 2019b). The overall sentiment was that Canada could fall behind other nations in digital innovation. It was imperative that Canada become a risk-taker to close the productivity gap with emerging technologies and ‘leverage them as a competitive advantage’ (Ibid). Regulations were paramount and urgent but those regulations should be championed through market-led competition, data-driven innovation and the digital economy.

ISED consultations on the Digital Charter occurred 4 years before the inclusion of AI in any federal bill. Bill C-27, An Act to enact the Consumer Privacy Protection Act, the Personal Information and Data Protection Tribunal Act and the Artificial Intelligence and Data Act and to make consequential and related amendments to other Acts, was introduced in June 2022. The name was shortened to the Digital Charter Implementation Act, 2022 (Department of Justice Canada, 2022). The bill’s inclusion of AI and data, formalised in AIDA, came as a surprise to Canada’s AI researcher and practitioner community. Since then, Bill C-27 came under significant critique, with many arguing that AIDA should be severed or removed entirely from the bill (Clement, 2023a). To address certain critiques, the Minister of ISED unexpectedly introduced a series of oral amendments to Bill C-27 during the initial meeting before Members of Parliament. AIDA went through multiple meetings as a result of numerous critiques. The Bill fell when Parliament was prorogued. In the next section, we discuss our pressing issues with AIDA, which will persist in future AI regulation, including the future of the Digital Charter.

3. The problems with AIDA

3.1 AIDA relied on public trust in a digital and data-driven economy

The preamble of Bill C-27 states that ‘trust in the digital and data-driven economy is key to ensuring its growth and fostering a more inclusive and prosperous Canada’ (Parliament of Canada, 2022). However, there is no guarantee that this trust will result in shared prosperity. Research has found that regions experiencing data poverty, data deserts and data divides fail to benefit from technological innovations that foster data growth and access (e.g., Leidig and Teeuw, 2015). Data growth can just as easily equal mass data surveillance, which adds opportunities to monetise data. Data-growth-as-surveillance ‘socially sorts people’ (Surveillance Study Centre, 2022) and is valuable for advertising and data brokers (Zuboff, 2019; Lamdan, 2022) but hardly generates income for individuals who are classified by industry and government. This asymmetry in the data economy between the public, who is both contributor and user, and industry and government enables distrust. Considerable research has identified a public distrust of AI, tracking government and companies’ collection of intimate ‘online personal data traces and biometric and body data’ for surveillance purposes (Kalluri et al., 2023) and the racial bias in facial recognition technology used in predictive policing (Lum and Isaac, 2016; Buolamwini and Gebru, 2018).

Polls have shown that Canadians are highly distrustful of AI, more so than people in other countries (Edelman Trust Barometer, 2024). In Canada, 54 percent reject AI innovation, compared with the 17 percent who embrace it. Segments of the population have legitimate reasons to distrust the impacts of AI. Marginalised and low-income people are likely to be subject to the negatives of AI: job losses due to automation, enhanced surveillance, amplified discrimination and increased digital divides. These concerns do not prevent governments from leveraging public trust as a justification for pursuing AI innovation (Sieber et al., 2024a: 7).

Trust and safety have become central to AI, marked by the emergence of government-funded AI safety institutes worldwide. Confident in Bill C-27’s passage, ISED announced the Canadian AI Safety Institute in November 2024 to further AI’s responsible development (Innovation Science and Economic Development Canada, 2024). Safety emphasises technical risks like system performance over societal harms such as inequality, privacy violations or environmental impact. Similarly, trust is often reduced to public acceptance that safety will be inclusive (Sieber et al., 2024a). This framing risks legitimising technical robustness without adequately addressing broader societal safeguards.

3.2 ISED tried to both regulate and promote AI and data

It has long been recognised that government departments and agencies mandated to both promote and regulate face irreconcilable conflicts. We see this in ISED, which is mandated to regulate innovations, for example through allocating government funding, granting licences and setting standards. ISED’s mandate also is to ‘make Canadian industry more productive and competitive in the global economy’ (Innovation Science and Economic Development Canada, 2019a), which places it squarely in the role of promotion. These dual roles and responsibilities are often found to be incompatible and inevitably favour promotion over the accountability of AI development and deployment.

We argue that promotion is facilitated by a sense of urgency in technology adoption and deployment; hence regulation may be implemented in haste. AI is often framed as an arms race for development and talent (Taddeo and Floridi, 2021). This is especially important for mid-tier economies like Canada’s, struggling to find a place in the geopolitics of a fourth industrial revolution (Walker and Alonso, 2016). ISED invoked an ‘agile’ and hasty approach to implementing the Digital Charter (Scassa, 2023). In responding to ISED’s approach, Wylie (2023) argues that Canadian society must be safeguarded against a ‘move fast and break how we make laws in democracy’ regulatory attitude that fast-tracks a type of economic growth that invariably concentrates wealth.

Nuclear regulatory agencies serve as the key case study of the failure of departments and agencies with dual roles of promotion and regulation (Walker and Wellock, 2010). Cha (2024) draws parallels between nuclear power regulation and safety regulations for AI, noting how the International Atomic Energy Agency recommends independence in assessing safety, setting standards and ensuring compliance. The analogy to nuclear power is further important because of its transjurisdictional impact. Nuclear power regulation contains safeguards for the hardware, software and data components that policymakers could adopt (U.S. Nuclear Regulatory Commission, 2024: 17), although AI firms and labs have ‘pushed back’ against policymakers to delay or weaken such AI regulation (Khlaaf, 2023). We worry that these dual roles advance current regulatory capture around AI (Abdalla and Abdalla, 2021; Whittaker, 2021; Wei et al., 2024). In particular, Wei et al. (2024: 1539) found that the capture led ‘to a lack of AI regulation, weak regulation or regulation that over-emphasise[d] certain policy goals over others’.

The problem is further underlined in the AI regulatory responsibilities drafted by ISED, which were defined as the domain of a new AI and Data Commissioner. The envisioned commissioner would have been appointed by the Minister of ISED to support the Minister in overseeing the functions of AIDA. Furthermore, the Commissioner would have, at the discretion of the Minister, been granted the authority, responsibility and functions required to enforce AIDA. In essence, the Commissioner’s capacity for independent oversight was contingent upon the Minister’s discretion, resulting in diminished autonomous enforcement powers under AIDA (Tessono et al., 2022; Witzel, 2022). OpenMedia (2023) assembled experts who noted the deficiencies in oversight and called for greater independence, which would obviate the dual roles. This arrangement consolidated a significant level of authority within a single department (Ifill, 2022), for example, to ‘intervene if necessary to ensure that AI systems are safe and non-discriminatory’ (Parliament of Canada, 2022) and thus would be subject to ISED’s conflicting goals of promotion and regulation.

3.3 Public consultation was insufficient for AIDA

Researchers and practitioners have extensively documented the insufficiency of public consultation on AIDA. Before the tabling of AIDA in June 2022, no public consultations were conducted (Tessono et al., 2022; Attard-Frost, 2023). Since then, ISED hosted over 300 invite-only meetings on Bill C-27, where only nine of the meetings were held with members of civil society (Clement, 2023b). A lack of transparency has denied the public the ability to scrutinise the ‘who’ and ‘how’ of AIDA’s construction. Public consultation is important to increase trust and lower scepticism in AI. Equally important, democratic governance demands that the government heeds concerns about the impacts of AI on the economy and society.

With its increasing ubiquity, generative AI (GenAI) has the potential to accelerate the concentration of power in Big Tech and the AI industry, manifesting in a seamless dominance over numerous sectors (Guo, 2024). The vast majority of GenAI models are not open; they are owned by private sector firms (Widder et al., 2024) where a company can quickly constrict access or disable the millions of apps built atop the models. Because of energy and computing demands, producers of AI, especially those of GenAI models, have become de facto monopolies (Whittaker, 2021). These producers will not be based in Canada, and the gains will benefit neither Canadian firms nor Canadian employees but will be concentrated within a few American firms (Standing Committee on Industry and Technology Canada, 2024). This is another reason why Canadian public consultation on AI is paramount.

The Canadian Senate report (2021) noted a historic lack of shared prosperity enjoyed by rural and remote communities. Engaging a broad range of the public means confronting unequal harms caused by AI, which are skewed by race, gender and geography (Benjamin, 2019). Indeed, experts are increasingly unable to anticipate potential harms due to the sheer volume of accumulated intimate data about individuals; consumers could be sold products before even expressing a desire to purchase them (Chaudhary and Penn, 2024).

3.4 Workers’ rights in Canada and worldwide were excluded in AIDA

According to Canada’s Labour Market Information Council, ‘Canada risks ceding an important piece of its sovereignty if it does not control the technology used to gather and analyse essential workplace data’ (Bergamini, 2023). Canadian workers’ rights were not addressed in the original draft or the amendments of AIDA (Attard-Frost, 2023). Researchers have extensively documented the human cost of AI systems on workers, whether in Canada or worldwide (Arsht and Etcovitch, 2018; Gray and Suri, 2019; Roberts, 2019). One shortcoming of AI legislation in addressing societal harms is the failure to account for extra-jurisdictional impacts, despite AI’s significant externalities. In low- and middle-income countries (LMICs), content moderation work for Meta (Facebook), OpenAI and TikTok has been found to traumatise workers (The Bureau of Investigative Journalism, 2022; Perrigo, 2023a), in one case leading to a content moderator committing suicide after being refused a transfer (Parks, 2019). Warehouse workers for Amazon have been intensely surveilled by the company’s ‘time off task’ feature where minute by minute the managers could monitor the duration employees spent in the restroom during a specific period (Gurley, 2022). Uber has partaken in algorithmic wage discrimination, in which wages are ‘calculated with ever-changing formulas using granular data on location, individual behaviour, demand, supply and other factors’ (Dubal, 2023: 1935). Instead of sharing in the prosperity promised by AI systems, AI has contributed to dehumanising workers (Williams et al., 2022).

Canada is hardly unique in failing to address workers’ rights; the US has failed to centre labour in AI regulations, despite fear of job displacement due to AI deployment (Kak and Myers West, 2024). More importantly, AIDA’s response to an AI system that violates workers’ rights delegates worker protection to the developer and provides minimal oversight, ‘to provide guidance or make recommendations regarding corrective action’ (Office of the Minister of Innovation Science and Industry Canada, 2023). ISED’s words created the illusion of responsiveness while avoiding accountability.

The number of gig workers has rapidly increased and, with or without AI, it remains challenging to enact sufficient labour protections for those workers (De Stefano, 2016; Mateescu, 2024). Any wording in AIDA covering employer-employee relations failed to include either gig workers like freelance copywriters, artists and software developers in Canada or, for example, customer service agents in the Philippines (Deck, 2023). Worker protection is paramount since firms, which are predominantly price sensitive, already use gig workers for less expensive labour through off-shoring or, in Canada’s case, near-shoring. (Canada is frequently a site of near-shoring for the US. Canadian creative workers are often go-tos for Hollywood because they offer excellent and less expensive alternatives [e.g., in visual effects].) Consequently, gig workers are situated in the most precarious labour positions.

Not only may workers lose jobs but also their creative works (their IP) are being harvested to train GenAI systems without apparent legislative repercussions. IP strategy has been a weak point of government regulation in Canada (Brandusescu et al., 2021) with no mention of copyright in the original draft of AIDA (Attard-Frost, 2023) or its amendments. Canada’s long-standing use of regulations to protect ‘CanCon’ (Canadian creative content) (Mejaski, 2011) contrasts with the challenges posed by GenAI’s parasitic use of IP. The disregard for IP evinced in AIDA undermines Canada’s historic efforts, such as Bill C-18 (Online News Act), which led to Google and Meta blocking Canadian news from their platforms. The revised national IP strategy, led by ISED since 2018, focuses on education and guidelines, not IP protection (Innovation Science and Economic Development Canada, 2023), even though protecting IP is part of the department’s mandate. Relaxing or ignoring the protections of individuals’ IP rights protects the firms developing GenAI.

4. Recommendations for a future AI act

4.1 Accountability mechanisms and tools for the public and private sectors

We argue that AIDA necessitated a redraft led by departments and agencies outside of ISED, several of which already have established accountability measures (Treasury Board of Canada Secretariat, 2019). Likewise, ombuds-type monitoring of compliance with AI legislation requires a separate government body from the one charged with regulation, free of undue influence by the public and private sectors (OpenMedia, 2023). Mechanisms should be developed to address conflicts of interest amongst those involved in the process. As we have learned from nuclear regulatory bodies, the supervisory authority should not be involved in both commercial and regulatory aspects of AI systems (Witzel, 2022).

Accountability tools can take several forms. We recommend third-party audits of AI systems (Costanza-Chock et al., 2022), which offer an independent, arm’s-length assessment of an AI system. The auditor is given access to the internals of the AI system to stress-test the system with alternate data. The goal is an impartial evaluation of compliance, performance, quality or adherence to specific standards, regulations or requirements. These audits can reveal harms and guide meaningful accountability (and regulations) for the government and protection of human rights (Tessono et al., 2022; Tessono and Solomun, 2022). To finance independent AI audits, we propose that government establish an AI trust into which companies transfer a portion of their profits. Financial bonds would guarantee that the company can compensate for harms, similar to environmental bonds, for example, which ensure the remediation of tailings from mining (Aghakazemjourabbaf and Insley, 2021).

Tracking the role of the private sector vis-a-vis AI legislation supports public sector values of transparency and accountability. Johnson and Acemoglu (2023) argue for transparency of agreements brokered among lobbyists, politicians and companies since exorbitant sums are invested in lobbying in high-income countries. The government should reveal instances of corporate lobbying, so the public can identify potential conflicts of interest between the government and companies regarding AI. Corporate lobbying practices were exempt in Canada’s AIDA (Ifill, 2022). However, Canada has a lobbying registry system that could include a range of new activities, such as ‘polling, monitoring and attending committees, offering strategic advice and hosting events’ as well as players like consultants who play an outsized role in AI services and are active actors in lobbying on AI (Beretta, 2020: 138).

Reducing Big Tech’s monopolistic power also is key for the redistribution of AI’s economic benefits. Accountability approaches that champion the increase of government subsidies and financial redress mechanisms like tax reform in AI regulations also can prove economically beneficial to a broader segment of the population. Government can impose taxes on AI and tech companies, beyond Big Tech. Because of AI’s ability to damage IP, governments can demand technical fixes like removing IP-protected data from training models. Another innovative regulatory approach considers using the economic power of the GenAI foundation model makers to transform certain AI companies and their models into public utilities (Vipra and Korinek, 2023). Here the costs would be heavily controlled with equal access and transparency guaranteed.

4.2 Robust workers’ rights in terms of data handling

One unique aspect of AI is that most AI systems rely on massive amounts of data to train AI models before systems can be used. If developers do not wish the models to learn from toxic images, audio and text, content needs to be cleaned (‘moderated’) of toxicity. Shared prosperity and a sustainable AI economy demand that workers’ rights be integrated. These rights rely on bolstering the voice of workers in the development and the use of AI systems. Canada can set an example worldwide to improve data worker conditions, especially in LMICs.

In Kenya, data workers sued Meta, the parent company of Facebook, for which they worked through Sama (Perrigo, 2022). This lawsuit is one of the first against Big Tech outside the West (Sambuli, 2022). Kenyan data workers also are unionising for substantial worker protections like better pay and working conditions, including mental health support (Perrigo, 2023a) and launched a second lawsuit against Big Tech companies, this time Meta, OpenAI and TikTok (Perrigo, 2023b). A Western government should recognise the negative externalities amassed in LMICs and prevent AI and data analytics companies from operating, in this case in Canada, if they partake in human rights abuses worldwide (Brandusescu, 2021). Additionally, an AI act should bolster the ability of workers to engage in class actions. The strike demands of American workers from the Writers Guild of America (WGA) and the actors’ union focused heavily on the negative impacts of AI. In the end, the WGA prevented production companies from deciding when they could use and not use AI (Foroohar, 2023).

Nearly everyone contributes data that make AI systems work. The public can create a new type of union called a data union (Johnson and Acemoglu, 2023). Within the data union, data stewards would simultaneously protect the rights of workers and citizens. Workers’ Councils on AI offer another promising avenue toward worker control over AI use (McQuillan, 2022). An AI act can encourage data unions and workers’ councils as options and reduce any barriers to their formation.

4.3 Meaningful public participation in all stages of legislation

To ensure shared prosperity now and not just in an indeterminate future, an AI act must include a right to public participation in the choices to develop and use AI systems (McCrory, 2024). Even though a dominant narrative of shared prosperity prioritises the customer experience through productivity gains (Ben-Ishai et al., 2024), this will not adequately substitute for the loss of a job, a wrongful arrest, or another harm caused by AI. Government should not reduce the public to consumers’ responsiveness to a market economy but instead remember its responsibility to protect its citizenry and ensure distributive prosperity. Government can do so by applying policy protections within an AI act that go well beyond an individual’s privacy or consumer protections.

Numerous types of meaningful participation in AI have been ‘field-tested’, from which government can choose, among them citizens’ juries, permanent mini-publics and citizens’ assemblies (Balaram et al., 2018; Ada Lovelace Institute, 2021; Data Justice Lab, 2021; Sieber et al., 2024a, 2024b). Simultaneously, meaningful participation in AI must accommodate durable issues in participation, such as who is able to participate and how. Digital data tends to favour dominant and privileged populations, not refugees, migrants or stateless people. For us, the public represents impacted individuals and groups as well as the general public. Therefore, we extend participation beyond those who are directly impacted by an AI system, and beyond tech executives, to numerous publics (Raji, 2023). A right to participation should occur as early as possible in the development stage of AI systems (e.g., participatory design) (Mathewson, 2023).

In Canada, AI governance and data policy spaces have reduced meaningful participation to consultations like multistakeholder forums. Their agendas are often shaped by money; meaningful participation is resource-intensive and can exclude those with limited funds and time (Sambuli, 2021). Government must equalise the significant resource differences among stakeholders in their participation (Sieber et al., 2024a, 2024b), for instance by compensating members of citizens’ assemblies. Despite the delays implied by broad participation, the breadth provides the richest, most nuanced solutions that articulate current harms and anticipate new ones. It is also cost-effective (European AI and Society Fund, 2023).

Because of AI’s ubiquity in communication technologies, we should ensure that older forms of participation are protected as well as improved, to allow a range of voices to express assent and dissent. Nurturing a culture of meaningful participation means having the choice to reject the use of GenAI to summarise activities, as this use further trains generative models on participants’ content. Meaningful participation requires that participants be able to exert significant influence on policies and products, including public influence on decisions regarding the banning, sunsetting and decommissioning of AI systems, such as autonomous weapons or facial recognition.

5. Concluding remarks

AIDA was delayed because of consistent criticism from civil society, businesses and academia, even as its passage was strongly supported by the Liberal Party. After its launch, multiple stakeholders continued to call for AIDA to be withdrawn entirely from Bill C-27 (Canadian Civil Liberties Association, 2024). During the revision of this article, Bill C-27 and 25 other bills effectively died with the prorogation of Parliament, making the future of AI regulation uncertain (Osman and Reevely, 2025), even though the other political parties and industry support the individual privacy and consumer protection parts of the Bill (Mazereeuw, 2025).

We note calls for self-regulation of AI; as others have argued, the private sector should not be relied upon to rein in AI (Ferretti, 2022; Wheeler, 2023; McCrory, 2024). Governments have a role in regulating AI to ensure that accountability is not subordinate to market incentives, which could include worker exploitation. AI legislation also requires a bottom-up approach, in which data can be leveraged through unions. These and other inclusive policy instruments should be deliberated within a transparent and democratic process.

Participating publics need not be technical experts; they bring their own lived experience and expertise to the AI regulatory discourse. Nor does the public need to be persuaded of the promised benefits of AI or compelled to trust AI as a prerequisite to participation. The government has a role in regulating AI for its potentially harmful effects on society and achieving shared prosperity for all.

Data availability statement

All resources used are included in the references. Where they are available online, a link is provided.

Acknowledgments

The authors are grateful for reviewers’ comments in helping refine the commentary.

Author contribution

Both authors have contributed to the conceptualisation, data curation, formal analysis, methodology, project administration, visualisation, writing—original draft, writing—review and editing and approved the final submitted draft.

Funding statement

This research was supported by a Canada Graduate Scholar Doctoral program grant from the Social Sciences and Humanities Research Council of Canada (767-2021-0252). The funder had no role in study design, data collection and analysis, the decision to publish or preparation of the manuscript.

Competing interests

The authors declare none.
