1 Introduction

A male peacock mantis shrimp resides happily in his burrow. He has recently molted, leaving his shell soft and vulnerable. As he waits, another male wanders onto his territory and approaches. The new male would like a burrow of his own. Both possess raptorial claws powerful enough to smash through the glass of an aquarium. If they fight for the territory, the temporarily squishy burrow owner will be seriously hurt. Neither, though, can directly observe whether the other has molted recently. Both mantis shrimp raise their appendages to display brightly colored “meral spots”, intended to signal their aggression and strength. The intruder is impressed and backs off to seek territory elsewhere.

Alex is looking to hire a new data analyst for his company. Annaleigh wants the job and so is keen to impress Alex. She includes every accomplishment she can think of on her resume. Since she went to a prestigious college, she makes sure her educational background is front and center, where Alex will see it first.

A group of vampire bats need to eat nightly to maintain their strength. This is not always easy, however. Sometimes a bat will hunt all night but fail to find a meal. For this reason, most bats in the group have established relationships for reciprocal food sharing. Bats who manage to feed will regurgitate blood for partners who did not.

Mitzi needs a kidney transplant but is too old to go on the normal donor list. Her close friend wants to donate but is incompatible as a donor for Mitzi. They join the National Kidney Registry and become part of the longest-ever kidney donation chain. This involves thirty-four donors who pay forward kidneys to unrelated recipients so that their loved ones receive donations from others in the chain.Footnote 1

We have just seen four examples of strategic scenarios. By strategic, I mean situations where (1) multiple actors are involved and (2) each actor is affected by what the others do. Two of the scenarios just described – the ones involving mantis shrimp and vampire bats – occurred between non-human animals. The other two – with Alex, Annaleigh, Mitzi, and her friend – occurred in the human social scene. Notice that strategic scenarios are ubiquitous in both realms. Whenever predators hunt prey, potential mates choose partners, social animals care for each other, mutualists exchange reciprocal goods or services, parents feed their young, or rivals battle over territory, these animals are engaged in strategic scenarios. Whenever humans do any of these things, or when they apply for jobs, work together to overthrow a dangerous authoritarian leader, bargain over the price of beans, plan a military attack, divide labor in a household, or row a boat, they are likewise in strategic scenarios.

This ubiquity explains the success of the branch of mathematics called game theory. Game theory was first developed to explore strategic scenarios among humans specifically.Footnote 2 Before long, though, it became clear that this framework could be applied to the biological world as well. There have been some differences in how game theory has typically been used in the human realm versus the biological one. Standard game theoretic analyses start with a game. I will define games more precisely in Section 2, but for now it is enough to know that they are simplified representations of strategic scenarios. Game theoretic analyses typically proceed by making certain assumptions about behavior, most commonly that agents act in their own best interests and that they do this rationally.

Strong assumptions of rationality are not usually appropriate in the biological realm. When deciding whether to emit signals to quora of their peers, bacteria do not spend much time weighing the pros and cons of signaling or not signaling. They do not try to guess what other bacteria are thinking about and make a rational decision based on these calculations. But they do engage in strategic behavior, in the sense outlined above, and often very effectively.

There is another framework, closely related to game theory, designed to model just this sort of case. Evolutionary game theory also starts with games but focuses less on rationality.Footnote 3 Instead, this branch of theorizing attempts to explain strategic behavior in the light of evolution. Typical work of this sort applies what are called dynamics to populations playing games. Dynamics are rules for how strategic behavior will change over time. In particular, dynamics often represent evolution by natural selection. These models ask, in a group of actors engaged in strategic interactions, which sorts of behaviors will improve fitness? Which will evolve?

This is an Element about games in the philosophy of biology. The main goal here is to survey the most important literature using game theory and evolutionary game theory to shed light on questions in this field. Philosophy of biology is a subfield in philosophy of science. Philosophers of science do a range of things. Some philosophy of science asks questions like, How do scientists create knowledge? What are theories? And how do we shape an ideal science? Other philosophers of science do work that is continuous with the theoretical sciences, though often with a more philosophical bent. This Element will focus on work in this second vein.

In surveying this literature, then, it will not be appropriate to draw hard disciplinary boundaries. Some work by biologists and economists has greatly contributed to debates in philosophy of biology. Some work by philosophers of biology has greatly contributed to debates in the behavioral sciences. Instead, I will focus on areas of work that have been especially prominent in philosophy of biology and where the greatest contributions have been made by philosophers.

As I have been hinting, it will also not be appropriate to draw clear boundaries between biology and the social sciences in surveying this work. This is in part because game theory and evolutionary game theory have been adopted throughout the behavioral sciences – human and biological. Frameworks and results have been passed back and forth between disciplines. Work on the same model may tell us both about the behaviors of job seekers with college degrees and the behaviors of superb birds of paradise seeking mates, meaning that one theorist may contribute to the human and biological sciences at the same time.

There is something special that philosophers of biology have tended to contribute to this literature. Those trained in philosophy of science tend to think about science from a meta-perspective. As a result, many philosophers of science have both used formal tools and critiqued them at the same time. This can be a powerful combination. It is difficult to effectively critique scientific practice without being deeply immersed in that practice, but scientists are not always trained to turn a skeptical eye on the tools they use. This puts philosophers of biology who actually build behavioral models into a special position. As I will argue, this is a position that any modeler should ultimately assume – one of using modeling tools and continually assessing the tools themselves. Throughout this Element we will see how philosophers who adopted this sort of skeptical eye have helped improve the methodological practices of game theory and evolutionary game theory.

So, what are the particular debates and areas of work that will be surveyed here? I will focus on two branches of literature. I begin this Element with a short introduction to game theory and evolutionary game theory. After that, I turn to the first area of work, which uses signaling games to explore questions related to communication, meaning, language, and reference. There are three sections in this part of the Element. The first, Section 3, starts with the common interest signaling game described by David Lewis. This section shows how philosophers of science have greatly expanded theoretical knowledge of this model, while simultaneously using it to explore questions related to human and animal signaling. In Section 4, I turn to a variation on this model – the conflict of interest signaling game. This game models scenarios where actors communicate but do not always seek the same ends. As we will see, traditional approaches to conflict of interest signaling, which appeal to signal costs, have been criticized as biologically unrealistic and methodologically unsound. Philosophers of biology have helped to rework this framework, making clearer how costs can and cannot play a role in supporting conflict of interest signaling. The last section on signaling, Section 5, turns to deeper philosophical debates. I look at work using signaling games to consider what sort of information exists in biological signals. Do these signals include semantic content? Of what sort? Can they be deceptive?

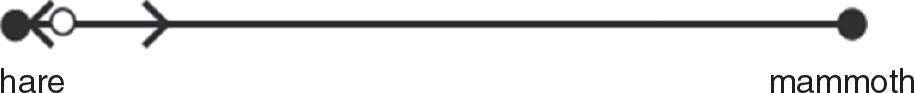

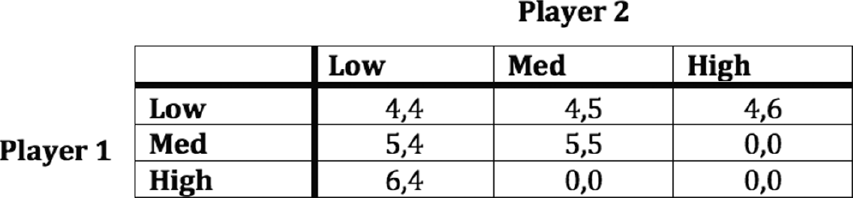

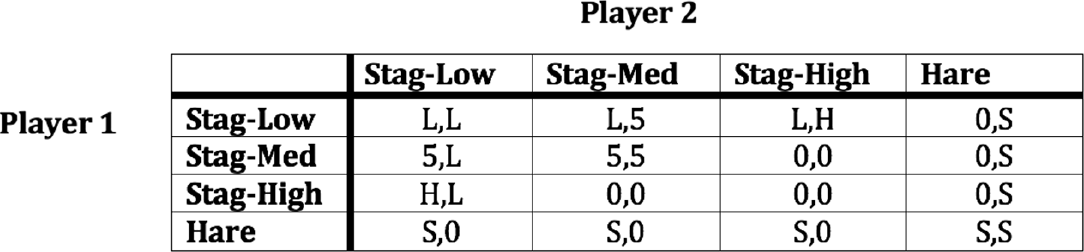

The second part of the Element addresses a topic that has been very widely studied in game theory and evolutionary game theory – prosociality. By prosociality, I mean strategic behavior that generally contributes to the successful functioning of social groups. Section 6 looks at the evolution of altruism in the prisoner’s dilemma game. This topic has been explored in biology, economics, philosophy, and all the rest of the social sciences, so this section is even more interdisciplinary than the rest of the Element. Because this game has been so widely studied, and the literature so often surveyed, I will keep this treatment very brief. Section 7 turns to two models that have gotten quite a lot of attention in philosophy of biology: the stag hunt and the Nash demand game. The stag hunt represents situations where cooperation is risky but mutually beneficial. The Nash demand game can represent bargaining and division of resources more generally. As we will see, these games have been used to elucidate the emergence of human conventions and norms governing things like the social contract, fairness, and unfairness.

By way of concluding the Element, I’ll dig a bit deeper into a topic mentioned above: methodology. In particular, a number of philosophers have made significant methodological contributions to evolutionary game theory that stand free of particular problems and applications. The epilogue, Section 8, briefly discusses this literature.

Readers should note that the order and focus of this Element are slightly idiosyncratic. A more traditional overview might start with the earliest work in evolutionary game theory – looking at the hawk-dove game in biology – and proceed to the enormous literature on cooperation and altruism that developed after this. The literature on signaling games would come later, reflecting the fact that it is relatively recent, and would take up a less significant chunk of the Element. My goal is to give a more thorough overview of work that has received less attention rather than to revisit well-worn territory. In addition, as mentioned, I focus in this Element on the areas of the literature that have been most heavily developed in philosophy.

This Element is very short. For this reason, it does not provide a deep understanding of any one topic but overviews many. Interested readers should, of course, use the references in this Element to dig deeper. Furthermore, the brevity of the Element means there is no space to even briefly touch on a number of related literatures. Most notably, I ignore important work in biology and philosophy of biology on other sorts of evolutionary theory/modeling and work in economics on strategic behavior in the human realm.

So, in the interest of keeping it snappy, on to Section 2, and the introduction to game theory and evolutionary game theory.

2 Games and Dynamics

What does a strategic scenario involve? Let’s use an example to flesh this out. Every Friday, Alice and Sharla like to have coffee at Peets. Upon arriving slightly late, though, Sharla notices it is closed and Alice is not there. Alice has likely chosen another coffee shop – either the nearby Starbucks or the Dunkin’ Donuts. Which should Sharla try?Footnote 4 This sort of situation is often referred to as a coordination problem. Two actors would like to coordinate their action, in this case by choosing the same coffee shop. They care quite a lot about going to the same place, and less about which place it is.

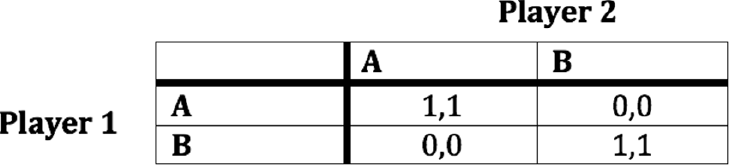

How can we formalize this scenario into a game? We do this by specifying four things. First, we need to specify the players, or who is involved. In this case, there are two players, Alice and Sharla. If we like, we can call them player 1 and player 2. Second, we need to specify what are called strategies. Strategies capture the behavioral choices that players can make. In this case, each player has two choices, to go to Starbucks or to go to Dunkin’ Donuts. Let’s call these strategies a and b. Third, we need to specify what each player gets for different combinations of strategies, or what her payoffs are.

This is a bit trickier. In reality, the payoff to each player is the joy of meeting a friend should they choose the same strategy and unhappiness over missing each other if they fail. But to formalize this into a game, we need to express these payoffs mathematically. What we do is choose somewhat arbitrary numbers meant to capture the utility each player experiences for each possible outcome. Utility is a concept that tracks joy, pleasure, preference, or whatever it is that players act to obtain.Footnote 5 Let us say that when Sharla and Alice meet, they get payoffs of 1, and when they do not, they get lower payoffs of 0.

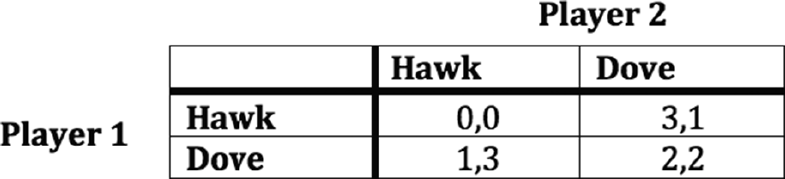

We can now specify a game representing the interaction between Alice and Sharla. Figure 2.1 shows what is called a payoff table for this game. Rows represent Alice’s choices, and columns represent Sharla’s. Each entry shows their payoffs for a combination of choices, with Alice’s (player 1’s) payoff first. As specified, when they both pick a or both pick b, they get 1. When they miscoordinate, they get 0. Game theorists call games like this – ones that represent coordination problems – coordination games. This game, in particular, we might call a pure coordination game. The only thing that matters to the actors from a payoff perspective is whether they coordinate.

Figure 2.1 A payoff table of a simple coordination game. There are two players, each of whom chooses a or b. Payoffs are listed with player 1 first.

There is one last aspect of a game that often needs to be specified, and that is information. Information characterizes what each player knows about the strategic situation – does she know the structure of the game? Does she know anything about the other player? In evolutionary models the information aspect of games is often downplayed, though. This is because information in this sense is most relevant to rational calculations of game theoretic behavior, not to how natural selection will operate.

So now we understand how to build a basic game to represent a strategic scenario. The next question is, how do we analyze this game? How do we use this model to gain knowledge about real-world strategic scenarios? In classic game theory, as mentioned in the introduction, it is standard to assume that each actor attempts to get the most payoff possible given the structure of the game and what the player knows. This assumption allows researchers to derive predictions about which strategies a player will choose, or might choose, and also to explain observed behavior in strategic scenarios.

More specifically, these predictions and explanations are derived using different solution concepts, the most important being the Nash equilibrium concept. A Nash equilibrium is a set of strategies where no player can change strategies and get a higher payoff. There are two Nash equilibria in the coordination game – both players choose a or both choose b.Footnote 6 Although this is far from the only solution concept in use by game theorists, it is most used by philosophers of biology, who tend to focus on evolutionary models. As we will see, Nash equilibria have predictive power when it comes to evolution, as well as to rational choice–based analyses.Footnote 7
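As a minimal sketch of the Nash equilibrium check just described, the Python below encodes the payoff table from Figure 2.1 and tests each pair of pure strategies for profitable unilateral deviations. The names `PAYOFFS`, `is_pure_nash`, and `pure_nash_equilibria` are illustrative choices, not anything from the literature, and the search covers pure strategies only, so it will not report equilibria in which players randomize.

```python
from itertools import product

# Payoff table from Figure 2.1.
# PAYOFFS[(row, col)] = (player 1's payoff, player 2's payoff).
STRATEGIES = ("a", "b")
PAYOFFS = {
    ("a", "a"): (1, 1),
    ("a", "b"): (0, 0),
    ("b", "a"): (0, 0),
    ("b", "b"): (1, 1),
}


def is_pure_nash(profile, payoffs=PAYOFFS, strategies=STRATEGIES):
    """Return True if neither player can gain by switching strategy alone."""
    s1, s2 = profile
    u1, u2 = payoffs[(s1, s2)]
    # Player 1 deviates while player 2's choice is held fixed.
    no_gain_1 = all(payoffs[(alt, s2)][0] <= u1 for alt in strategies)
    # Player 2 deviates while player 1's choice is held fixed.
    no_gain_2 = all(payoffs[(s1, alt)][1] <= u2 for alt in strategies)
    return no_gain_1 and no_gain_2


def pure_nash_equilibria(payoffs=PAYOFFS, strategies=STRATEGIES):
    """List every pure-strategy profile that passes the Nash check."""
    return [
        profile
        for profile in product(strategies, repeat=2)
        if is_pure_nash(profile, payoffs, strategies)
    ]


print(pure_nash_equilibria())  # [('a', 'a'), ('b', 'b')]
```

Swapping in a different payoff dictionary is enough to run the same check on other two-player games with the same strategy labels.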

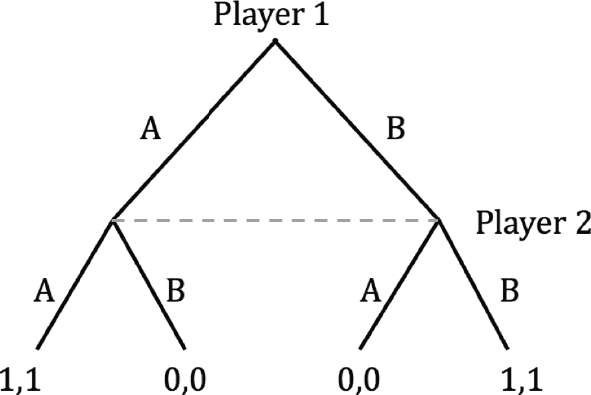

Notice that there is something in the original scenario with Sharla and Alice that is not captured in the game depicted in Figure 2.1. Remember that we supposed Sharla arrived at Peets late, to discover Alice had already made a choice. The payoff table above displays what is called the normal form coordination game. Normal form games do not capture the fact that strategic decisions happen over time. Sometimes one cannot appropriately model a strategic scenario without this time progression. In such cases, one can build a game in extensive form. Figure 2.2 shows this. Play starts at the top node. First Alice (player 1) chooses between Starbucks and Dunkin’ Donuts (a or b). Then Sharla (player 2) chooses. The dotted line specifies an information set for Sharla. Basically it means that she does not know which of those two nodes she is at, since she did not observe Alice’s choice. At the ends of the branches are the payoffs, again with player 1 listed first. There are ways to analyze extensive form games that are not possible in normal form games, but discussing these goes beyond the purview of this Element.

Figure 2.2 The extensive form of a simple coordination game. There are two players, each of whom chooses a or b. Player 1 chooses first.

Now let us turn to evolutionary analyses. In evolutionary game theoretic models, groups of individuals are playing games, and evolving or learning behavior over time. Dynamics represent rules for how evolution or learning occurs. The question becomes: what behaviors will emerge over the course of an evolutionary process?

One central approach developed in biology to answer this question involves identifying what are called evolutionarily stable strategies (ESSs) of games. Intuitively, an ESS is a strategy that, if played by an entire population, is stable against mutation or invasion of other strategies.Footnote 8 Thus ESSs predict which stable evolutionary outcomes might emerge in an evolving population. Despite being used for evolutionary analyses, though, the ESS concept is actually a static rather than an explicitly dynamical one. Without specifying a particular dynamic, one can identify ESSs of a game.

Here is how an ESS is defined. Suppose we have strategies a and b, and let u(a, b) refer to the payoff received for playing strategy a against strategy b. Strategy a is an ESS against strategy b whenever u(a, a) > u(b, a), or, if u(a, a) = u(b, a), whenever u(a, b) > u(b, b). When these conditions hold, if an a type mutates into a b type, we should expect this new b type to die off because it gets lower payoffs than the a types do. The strategies here are usually thought of as corresponding to fixed behavioral phenotypes because biological evolution is the usual target for ESS analysis. In the cultural realm, one can apply the ESS concept by thinking of mutation as corresponding to experimentation. The fit is arguably less good, but an ESS analysis can tell us what learned behaviors might die out because those who experiment with them will switch back to a more successful choice.

Let’s clarify with an example. Suppose we have a population playing the game in Figure 2.1 – they all choose which coffee shop to meet at. Is a (all going to Starbucks) an ESS? The first condition holds whenever the payoff one gets for playing a against a is higher than the payoff for playing b against a. This is true. So we know a is an ESS by the first condition. Using the same reasoning, we can see that b (all going to Dunkin’ Donuts) is an ESS too. Intuitively this should make sense. When we have a population of people going to one coffee shop (who all like to coordinate) no one wants to switch to the other.

The second condition comes into play whenever the payoff of a against a is the same as the payoff of b against a. This would capture a scenario where a new, invading strategy does just as well against the current one as the current one does against itself. We can see that a will still be stable, though, if a does better against b than b does against itself.
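The two ESS conditions can be checked mechanically. The sketch below is one way to do so in Python, assuming that only pure-strategy mutants are considered. The function name `is_ess` and the second payoff table `U_TIE` are made up for illustration. `U_TIE` is not a game discussed in this Element; it exists only to exercise the second condition.

```python
# u(x, y): payoff to an individual playing x against an opponent playing y,
# taken from the symmetric coordination game in Figure 2.1.
U_COORD = {("a", "a"): 1, ("a", "b"): 0, ("b", "a"): 0, ("b", "b"): 1}

# Hypothetical payoffs, invented for illustration, in which a mutant b does
# exactly as well against a as a does against itself.
U_TIE = {("a", "a"): 1, ("b", "a"): 1, ("a", "b"): 1, ("b", "b"): 0}


def is_ess(incumbent, payoff, strategies=("a", "b")):
    """Check the two ESS conditions against every other pure strategy."""
    for mutant in strategies:
        if mutant == incumbent:
            continue
        u_ii = payoff[(incumbent, incumbent)]
        u_mi = payoff[(mutant, incumbent)]
        if u_ii > u_mi:
            continue  # first condition: the mutant does strictly worse
        if u_ii == u_mi and payoff[(incumbent, mutant)] > payoff[(mutant, mutant)]:
            continue  # second condition: the tie is broken against the mutant
        return False
    return True


print(is_ess("a", U_COORD), is_ess("b", U_COORD))  # True True
print(is_ess("a", U_TIE), is_ess("b", U_TIE))      # True False
```

In the coordination game both strategies pass by the first condition alone. In the invented table, a passes only through the second condition, and b fails because a mutant a earns more against b than b earns against itself.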

As mentioned, Nash equilibria are important from an evolutionary standpoint. In particular, every ESS will be a Nash equilibrium (though the reverse is not true). So identifying Nash equilibria is the first step to finding ESSs.

As we will see in Section 8, philosophers of biology have sometimes been critical of the ESS concept. A central worry is that in many cases full dynamical analyses of evolutionary models reveal ESS analyses to be misleading. For example, some ESSs have very small basins of attraction. A basin of attraction for an evolutionary model specifies what proportion of population states will end up evolving to some equilibrium (more on this shortly). In this way, basins of attraction tell us something about how likely an equilibrium is to arise and just how stable it is to mutation and invasion. Another worry is that sometimes stable states evolve that are not ESSs, and are thus not predicted by ESS analyses.

We will return to these topics later. For now, let us discuss in more detail what a full dynamical analysis of an evolutionary game theoretic model might look like. First, one must make some assumptions about what sort of population is evolving. A typical assumption involves considering an uncountably infinite population. This may sound strange (there are no infinite populations of animals), but in many cases, infinite population models are good approximations to finite populations, and the math is easier. One can also consider finite populations of different sizes.

Second, one must specify the interactive structure of a population. The most common modeling assumption is that all individuals belong to a single population that freely mixes. This means that every individual meets every other individual with the same probability. This assumption, again, makes the math particularly easy, but it can be dropped. For instance, a modeler might need to look at models with multiple interacting populations. (This might capture the evolution of a mutualism between two species, for example.) Or it might be that individuals tend to choose partners with their own strategies. Or perhaps individuals are located in a spatial structure which increases their chances of meeting nearby actors.

Last, one must choose dynamics which, as mentioned, define how a population will change over time. The most widely used class of dynamics – payoff monotonic dynamics – makes variations on the following assumption: whatever strategies receive higher payoffs tend to become more prevalent, while strategies that receive lower payoffs tend to die out. In particular, the replicator dynamics are the most commonly used dynamics in evolutionary game theory.Footnote 9 They assume that the degree to which a strategy over- or underperforms the average determines the degree to which it grows or shrinks. We can see how such an assumption might track evolution by natural selection – strategies that improve fitness lead to increased reproduction of offspring who tend to have the same strategies. This model can also represent cultural change via imitation of successful strategies and has thus been widely employed to model cultural evolution.Footnote 10 The assumption underlying this interpretation is that group members imitate strategies proportional to the success of these strategies. In this Element, we will mostly discuss work using the replicator dynamics, and other dynamics will be introduced as necessary.

Note that once we switch to a dynamical analysis, payoffs in the model no longer track utility, but instead track whatever it is that the dynamics correspond to. If we are looking at a biological interpretation, payoffs track fitness. If we are looking at cultural imitation, payoffs track whatever it is that causes imitation – perhaps material success.

Note also that evolutionary game theoretic dynamics do not usually explicitly model sexual reproduction. Instead, these models usually make what is called the “phenotypic gambit” by assuming asexually reproducing individuals whose offspring are direct behavioral clones. Again, this simplifies the math, and again, many such models are good enough approximations to provide information about real, sexually reproducing systems.

To get a better handle on all this, let us consider a population playing the coordination game. Assume it is an infinite population and that every individual freely mixes. Assume further that it updates via the replicator dynamics.

The standard replicator dynamics are deterministic, meaning that given a starting state of a population, they completely determine how it will evolve.Footnote 11 This determinism means that we can fully analyze this model by figuring out what each possible population state will evolve to. We can start this analysis by looking at the equation specified for this model and figuring out what the rest points of the system are.Footnote 12 These are the population states where evolution stops, so they are the candidates for endpoints of the evolutionary process. In particular we can ask, which of these rest points are ones that other states evolve to? Isolating these will tell us which stable outcomes to expect. Note that these population rest points are themselves often referred to as equilibria, since these are balanced points where no change occurs. These are equilibria of the evolutionary model, not of the underlying game. This said, Nash equilibria – and thus ESSs – are always rest points for the replicator dynamics.Footnote 13

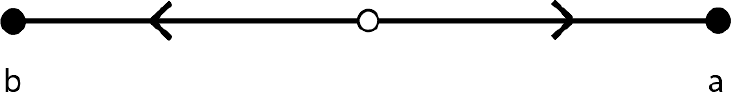

In this case, there are three equilibria, which are the states where everyone plays a, everyone plays b, and where there are exactly 50 percent of each. This third equilibrium is not a stable one, though, in the sense that a population perturbed away from it even a bit will evolve toward one of the other two equilibria. This means that we have a prediction as to what will evolve – either a population where everyone plays a or everyone plays b. Notice that these are the two ESSs.
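To see where these three rest points come from, it may help to write the model out. The derivation below is a sketch that assumes the standard continuous-time, single-population replicator equation, with x standing for the proportion of the population playing a. The symbols f_a, f_b, and the bar notation for average payoff are notational choices made here.

```latex
\begin{align*}
\dot{x} &= x\,\bigl(f_a(x) - \bar{f}(x)\bigr), \\
f_a(x) &= x, \qquad f_b(x) = 1 - x, \qquad
\bar{f}(x) = x\,f_a(x) + (1 - x)\,f_b(x) = x^2 + (1 - x)^2, \\
\dot{x} &= x\,\bigl(x - x^2 - (1 - x)^2\bigr) = x\,(1 - x)\,(2x - 1).
\end{align*}
```

The right-hand side is zero exactly at x = 0, x = 1/2, and x = 1, which are the three rest points. Its sign changes from negative to positive at x = 1/2, so populations on either side of the midpoint move away from it.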

We can say more than this. As mentioned, we can also specify just which states will evolve to which of these two equilibria. For this model, the replicator equation tells us that whenever more than one-half of individuals play a, the a types will become more prevalent. Whenever less than one-half play a, the b types become more prevalent. This makes intuitive sense – those playing the less prevalent strategy coordinate less often, do worse, and become even less prevalent. We can summarize these results in a phase diagram, or a visual representation of each population state and where it evolves.
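A rough numerical illustration of this behavior is sketched below. It approximates the continuous-time replicator dynamics for the coordination game with small Euler steps, which is a simplification chosen here for brevity. The function names, the step size `dt`, and the number of steps are arbitrary illustrative choices.

```python
def replicator_step(x, dt=0.01):
    """One Euler step of the replicator dynamics; x is the share playing a."""
    fitness_a = x * 1 + (1 - x) * 0  # a earns 1 against a, 0 against b
    fitness_b = x * 0 + (1 - x) * 1  # b earns 0 against a, 1 against b
    average = x * fitness_a + (1 - x) * fitness_b
    # a grows in proportion to how far it outperforms the population average.
    return x + dt * x * (fitness_a - average)


def evolve(x0, steps=5000, dt=0.01):
    """Follow the population from a starting share x0 of a types."""
    x = x0
    for _ in range(steps):
        x = replicator_step(x, dt)
    return x


for start in (0.2, 0.4, 0.5, 0.6, 0.8):
    print(f"start at {start:.1f} -> ends near {evolve(start):.3f}")
```

Starting shares above one-half should end close to 1 (everyone plays a), shares below one-half should end close to 0 (everyone plays b), and a population starting at exactly one-half stays put.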

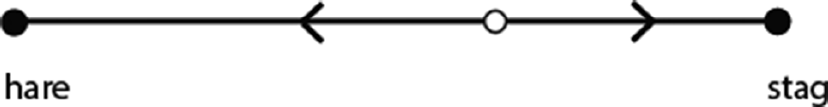

Figure 2.3 shows this. The line displays proportions of strategies from 0 percent a (or all b) to 100 percent a. The two endpoints are the two stable equilibria. The open dot in the middle represents the unstable equilibrium. Arrows show the direction of population change, toward these two equilibria. The diagram also illustrates the basins of attraction for each equilibrium, or the set of starting points that will end up at that equilibrium. The points to the right of the unstable rest point are the basin of attraction for the a equilibrium, and the points to the left for the b. In this case, the two basins of attraction have equal size, but, as we will see, in many models they differ. The size of a basin of attraction is often taken to give information about the likelihood that an equilibrium evolves.

Figure 2.3 The phase diagram for a population evolving via the replicator dynamics to play a simple coordination game.

This is a very simple evolutionary model. Models with more strategies and more complicated population structures will be harder to analyze. A host of methods can be employed to learn about these evolutionary systems. For example, one might run simulations of the replicator dynamics for thousands of randomly generated population states. Doing so generates an approximation of the sizes of the various basins of attraction for a model. One might also use analytic methods to prove various things about the evolutionary system – for example, that all population states will evolve to some particular rest point – even if one cannot generate a complete dynamical picture.
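The simulation approach mentioned here can be sketched in a few lines of Python. The code below draws random starting population states, runs an Euler approximation of the replicator dynamics from each, and records which strategy ends up most prevalent. Several choices are assumptions made for illustration: the uniform sampling of starting states, the step size and run length, the rule of classifying an outcome by the most common strategy at the end of the run, and the second payoff matrix `UNEQUAL`, which is a made-up game and not one from the text.

```python
import random


def replicator_step(state, payoff, dt=0.05):
    """One Euler step of the replicator dynamics for any number of strategies."""
    n = len(state)
    fitness = [sum(payoff[i][j] * state[j] for j in range(n)) for i in range(n)]
    average = sum(state[i] * fitness[i] for i in range(n))
    new_state = [state[i] + dt * state[i] * (fitness[i] - average) for i in range(n)]
    total = sum(new_state)
    return [share / total for share in new_state]  # guard against rounding drift


def random_state(n, rng):
    """Draw a population state uniformly from the set of possible proportions."""
    draws = [rng.expovariate(1.0) for _ in range(n)]
    total = sum(draws)
    return [d / total for d in draws]


def estimate_basins(payoff, samples=1000, steps=400, seed=0):
    """Estimate the share of starting states that lead to each strategy."""
    rng = random.Random(seed)
    n = len(payoff)
    counts = [0] * n
    for _sample in range(samples):
        state = random_state(n, rng)
        for _step in range(steps):
            state = replicator_step(state, payoff)
        counts[state.index(max(state))] += 1  # classify by most common strategy
    return [count / samples for count in counts]


COORDINATION = [[1, 0], [0, 1]]  # the game in Figure 2.1
UNEQUAL = [[2, 0], [0, 1]]       # hypothetical: coordinating on a pays more

print(estimate_basins(COORDINATION))
print(estimate_basins(UNEQUAL))
```

For the coordination game the two estimates should each come out close to one-half, matching Figure 2.3. For the invented game, the unstable rest point sits at one-third a, so roughly two-thirds of starting states should end at the a equilibrium.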

At this point, we have surveyed some general concepts in game theory and evolutionary game theory. We have also seen an example of how one might analyze a simple game theoretic and evolutionary game theoretic model. This, of course, is a completely rudimentary introduction, but I hope it will suffice for readers who would like to situate the rest of this Element. Those who are interested in learning more can look at Weibull (1997) or Gintis (2000). As we will see, philosophers of biology have made diverse uses of these frameworks, contributing to diverse literatures. Let us now turn to the first of these literatures – that on signaling.

3 Common Interest Signaling

Baby chicks have a call that they issue when lost or separated from their mother. This induces the mother chicken to rush over and reclaim the chick. This call is an example of a signal, an arbitrary sign, symbol, or other observable, that plays a role in communication between organisms. By arbitrary, I simply mean that the signal could play its role even if it was something else. For the chickens, many different calls could have done just as well. We see such arbitrary signals throughout the human social world, and across the rest of the animal kingdom (and even outside it). Humans communicate with each other using language, both spoken and written, and a bestiary of other signals, including hand gestures, signage, facial expressions, etc. Many other species engage in verbal signaling. A famous example comes from vervet monkeys who have a host of evolved alarm calls (Seyfarth et al., 1980b). Other animals, like wolves, signal with stereotyped gestures and body positions (Zimen, 1981). Some animals send chemical signals, including pheromones. In addition, for many animals, actual features of their physiology serve as signals. A classic example is the tail of the peacock, though we will focus more on this sort of case in Section 4, when we talk about conflict of interest signaling.

There is a reason for the ubiquity of signaling. It can be incredibly beneficial from a payoff standpoint for organisms to communicate about the world around them, and about themselves. At the most basic biological level, we would not be here without genetic signaling. As Skyrms (2010b) puts it, “signals transmit information, and it is the flow of information that makes all life possible” (32).

Of course, the actual details of what a signal is, who is communicating, and for what purpose vary across these examples. What unifies them is that in each case organisms derived enough benefit from communicating that they evolved, or culturally evolved, or learned signaling conventions to facilitate this communication. As will become clear, we can unify these diverse scenarios under one model, which helps us understand all sorts of things about them. The goal of this section will be to introduce this framework and describe some ways philosophers have used it to inform questions in philosophy, biology, and the social sciences.

I will start by describing the common interest signaling game and briefly discussing its history in philosophical discourse. After that, I’ll describe how philosophers have been instrumental in developing our theoretical understanding of the evolution of signaling using this model. I will then move on to describe some of the diverse ways that the signaling model has been applied, including to human language, animal communication, and communication within the body.

3.1 The Lewis Signaling Game

Quine ([1936] 1976) famously argued that linguistic conventions could not emerge naturally. The idea is that one cannot assign meaning to a term without using an established language. In Convention, David Lewis first employs game theory to chip away at this argument (Lewis, 1969). In particular, he introduces a highly simplified game to show how terms might attain conventional meaning in a communication context. Philosophers have often called this the Lewis signaling game, though it is also referred to as the common interest signaling game, or just the signaling game. As we will see, there is actually a large family of games that go under this heading, but let us start with the basics.

Suppose that a mother and a daughter regularly go on outings. Sometimes it is rainy, in which case they like to walk in the rain. If it is sunny, they have a picnic outside. To figure out whether to bring umbrellas or sandwiches, the daughter calls her mother ahead of time to see what the weather is like. The mother reports that it is either “sunny” or “rainy,” and the daughter makes her choice by bringing either umbrellas or a picnic.

We might represent a scenario like this with the following game. There are two possible states of the world – call them state 1 and state 2 – which represent the weather being sunny or rainy. A player called the sender (the mother) is able to observe these states and, contingent on that observation, sends one of two signals (“sunny” or “rainy” – call them signal a and signal b). A player called the receiver, the daughter, cannot observe the world directly. Instead, she receives whatever message is sent and can take one of two actions (act 1 and act 2) – bringing umbrellas or a picnic. Act 1 is appropriate in state 1 and act 2 in state 2. If the receiver takes the appropriate action, both players get a payoff of 1 (just like the mother and daughter get a payoff for a picnic in the sun and umbrellas in the rain), otherwise they get nothing. In this game, the two players want the same thing – for the action taken by the receiver to match the state observed by the sender. (This is why the game is a common interest signaling game.) The only way to achieve this coordination dependably is through the transmitted signal.
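The payoff structure just described can be written compactly. The notation below is introduced here only for illustration and is not taken from the original presentation: $p_i$ is the probability of state $i$, $\sigma(m \mid i)$ is the probability that the sender sends signal $m$ in state $i$, and $\rho(j \mid m)$ is the probability that the receiver takes act $j$ after signal $m$.

$$
u(i, j) =
\begin{cases}
1 & \text{if } i = j \\
0 & \text{otherwise}
\end{cases}
\qquad
\pi(\sigma, \rho) = \sum_{i} p_i \sum_{m} \sigma(m \mid i)\, \rho(i \mid m)
$$

Here $u(i, j)$ is the common payoff when act $j$ is taken in state $i$, and $\pi(\sigma, \rho)$ is the expected payoff both players receive, which is simply the probability that the act matches the state.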

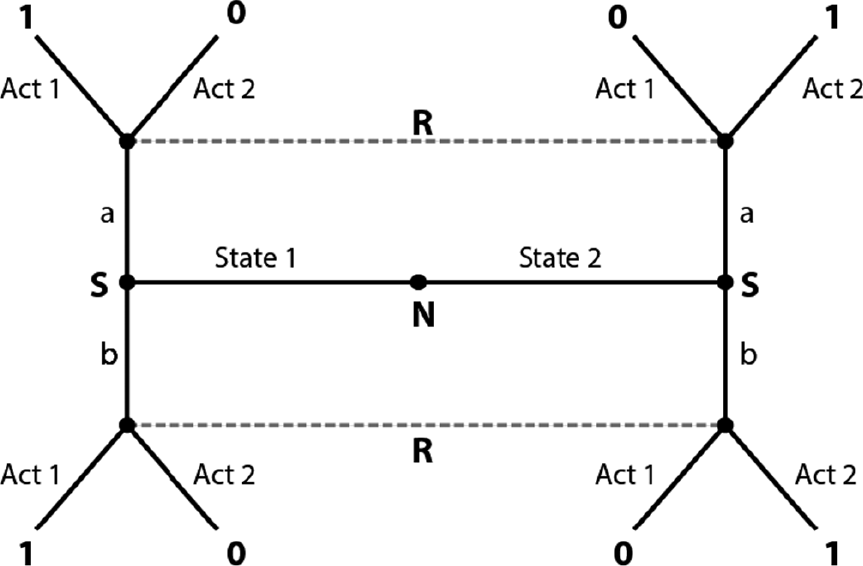

Figure 3.1 shows the extensive form of this game. This game tree should be read starting with the central node. Remember from the last section that play of the game can go down any of the forking paths depending on what each agent chooses to do. In order to capture the fact that one of two states obtains independent of the players’ choices, we introduce a dummy player called Nature. Nature (N) first chooses state 1 or state 2. Then the sender (S) chooses signal a or b. The receiver (R) has only two information sets since they do not know which state obtains. These, remember, are the nodes connected by dotted lines. The receiver, upon finding themselves at one of these sets, chooses act 1 or 2. The payoffs are listed at the terminal branches. Since payoffs are identical for both actors, we list only one number.

Figure 3.1 The extensive form representation of a Lewis signaling game with two states, two signals (a and b), and two acts.

Lewis observed that there are two special sorts of Nash equilibria of this game often called signaling systems. These are the sets of strategies where the sender and receiver always manage to coordinate with the help of their signals. Either signal a is always sent in state 1, and signal b in state 2, and the receiver always uses these to choose the right act, or else the signals are flipped. Figure 3.2 shows these two signaling systems. Arrows map states to signals and signals to acts. Notice that the left arrows in each diagram represent the sender’s strategy, and the right ones the receiver’s.

Figure 3.2 The two signaling systems of the Lewis signaling game. In the first, signal a represents state 1 and causes act 1, while signal b attaches to state 2 and act 2. In the second system, the meanings of the two signals are reversed.
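Because the game is so small, the claim that exactly two strategy pairs achieve perfect coordination can be checked by brute force. The following is a minimal sketch, assuming equiprobable states and pure strategies only; the names `expected_payoff`, `sender_strategies`, and so on are illustrative choices, not part of Lewis's presentation.

```python
from itertools import product

STATES = (1, 2)
SIGNALS = ("a", "b")
ACTS = (1, 2)

def expected_payoff(sender, receiver, state_probs=(0.5, 0.5)):
    """Expected common payoff: 1 when the act matches the state, else 0."""
    total = 0.0
    for state, prob in zip(STATES, state_probs):
        act = receiver[sender[state]]
        total += prob * (1.0 if act == state else 0.0)
    return total

# A pure sender strategy maps each state to a signal; a pure receiver
# strategy maps each signal to an act. There are 4 of each, so 16 pairs.
sender_strategies = [dict(zip(STATES, c)) for c in product(SIGNALS, repeat=2)]
receiver_strategies = [dict(zip(SIGNALS, c)) for c in product(ACTS, repeat=2)]

# Keep only the pairs that coordinate in every state.
signaling_systems = [
    (s, r)
    for s in sender_strategies
    for r in receiver_strategies
    if expected_payoff(s, r) == 1.0
]

for s, r in signaling_systems:
    print("sender:", s, "receiver:", r)
```

Every other pair either pools the two states under one signal or decodes at least one signal incorrectly, so it coordinates at most half the time under these assumptions.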

What Lewis argued is that at each of these equilibria, players engage in a linguistic convention – a useful, dependable social pattern which might have been otherwise. (In Section 7 we will say a bit more about this concept.) For Lewis, these conventions might arise through a process of precedent setting or as a result of natural salience. If so, this could explain how conventional meaning might emerge naturally in human groups.

But one might worry that while Lewis gestures toward a process by which signals can obtain conventional meaning, he does not do much to fill in how such a process might go. Furthermore, we have seen that conventional meaning has emerged throughout the natural world. Can Lewis’s analysis address vervet monkeys? We do not suppose that their alarm signals were developed through a process of precedent setting between members of the group. Furthermore, Lewis requires that his players have full common knowledge of the game and expectations about the play of their partner for an equilibrium to count as a convention. These conditions will not apply to the animal world (and sometimes not to the human one either). Let us now turn to explicitly evolutionary models of this game.

3.2 The Evolution of Common Interest Signaling

Skyrms (1996) uses evolutionary game theory to strengthen Lewis’s response to Quine. He starts by observing that in the simple signaling game described above, the only ESSs are the two signaling systems (Wärneryd, 1993).Footnote 14 This is because each signaling system achieves perfect coordination, and any other strategy will sometimes fail to coordinate with those playing the signaling system. In addition, no other strategy has this property – each may be invaded by the signaling system strategies (Wärneryd, 1993; Skyrms, 1996).Footnote 15 In an evolutionary model, then, we have some reason to think the signaling systems will evolve.

As Skyrms points out, true skeptics might not be moved by this ESS analysis. In particular, they might worry that there is no reason to think that either one signaling system or the other will take over a population. If so, groups might fail to evolve conventional meaning. To counter this worry, he analyzes what happens when a single, infinite population evolves to play the signaling game under the replicator dynamics. We observed in Section 2 that these dynamics can represent both cultural evolution and natural selection. If we see meaning emerge in this model, then, we have good reason to think that simple signals can emerge in the natural world as well as the social one. Using computer simulation tools, Skyrms finds that one of the signaling systems always takes over the population. Subsequently, proof-based methods have been used to show this will always happen (Skyrms and Pemantle, 2000; Huttegger, 2007a).Footnote 16 As Skyrms concludes for this model, “the emergence of meaning is a moral certainty” (93).
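To give a feel for this kind of simulation, here is a minimal sketch of a single population playing the 2 × 2 × 2 game. It is not Skyrms's own code. It assumes equiprobable states, that each individual carries both a sender rule and a receiver rule and occupies each role half the time, and a discrete-time version of the replicator dynamics; the starting point, seed, and number of generations are arbitrary illustrative choices.

```python
import random
from itertools import product

# Each strategy pairs a sender rule (state -> signal) with a receiver rule
# (signal -> act); rules are tuples indexed by state or signal (0 or 1).
sender_rules = list(product(range(2), repeat=2))
receiver_rules = list(product(range(2), repeat=2))
strategies = list(product(sender_rules, receiver_rules))  # 16 strategies

def coordination(sender, receiver):
    """Probability that the act matches the state (equiprobable states)."""
    return sum(receiver[sender[state]] == state for state in range(2)) / 2

# Payoff to row strategy against column strategy, averaging over roles.
PAYOFF = [[0.5 * coordination(si, rj) + 0.5 * coordination(sj, ri)
           for (sj, rj) in strategies]
          for (si, ri) in strategies]

def fitnesses(x):
    """Expected payoff of each strategy against population mix x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in PAYOFF]

def mean_payoff(x):
    return sum(xi * fi for xi, fi in zip(x, fitnesses(x)))

def replicator(x, generations):
    """Discrete-time replicator step: x_i' = x_i * f_i / (mean fitness)."""
    for _ in range(generations):
        f = fitnesses(x)
        mean = sum(xi * fi for xi, fi in zip(x, f))
        x = [xi * fi / mean for xi, fi in zip(x, f)]
    return x

rng = random.Random(0)
raw = [rng.random() for _ in strategies]
x0 = [w / sum(raw) for w in raw]          # random interior starting mix
x_final = replicator(x0, 2000)

print("mean payoff at start:", round(mean_payoff(x0), 3))
print("mean payoff at end:  ", round(mean_payoff(x_final), 3))
print("most common strategy:", strategies[x_final.index(max(x_final))])
```

If the run behaves as the analytic results lead one to expect, the population ends up dominated by one of the two signaling systems and the mean payoff approaches 1. Which system wins depends on the starting mix.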

As we will see in a minute, this statement must be taken with a grain of salt. But this “moral certainty” nonetheless has explanatory power. Signaling is ubiquitous across the biological and human social realms, and now we see part of the reason why. Despite Quine’s worries, signaling is easy to evolve. It takes very little to generate conventional meaning from nothing.

This is not the end of the story, though. Subsequently, philosophers and others have developed a deep understanding of common interest signaling, deriving further results about this very simplest signaling model and a host of variants. In addition, the signaling game has been co-opted in numerous ways to address particular research questions. Let us first look at what else we have learned about the signaling game, and then move on to some applications. Much of the literature I will now describe is discussed in more detail in Skyrms (2010b).Footnote 17

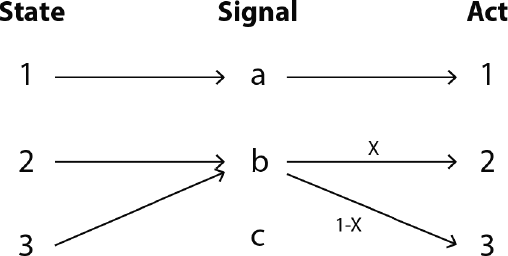

The simple game we have been discussing is sometimes called the 2 × 2 × 2 game, for two states, two signals, and two possible actions. But we can consider N × N × N games with arbitrary numbers of states, signals, and actions. When N > 2, these models have a new set of equilibria called partial pooling equilibria. The sender sends the same message in multiple states, and upon receipt of this partially pooled message the receiver mixes between the actions that might work. Figure 3.3 shows what this might look like for the 3 × 3 × 3 game. After receipt of signal b, the receiver does act 2 with probability x and act 3 with probability 1 − x. These are mixed strategy equilibria, where the actors mix over behaviors.Footnote 18 The only ESSs of these games are the signaling systems, not these partial pooling equilibria. These equilibria, however, do have basins of attraction under the replicator dynamics (Nowak and Krakauer, 1999; Huttegger, 2007a; Pawlowitsch et al., 2008). And for larger games, simulations show an increasing likelihood that a system of partial communication will emerge instead of perfect signaling (Barrett, 2009). In other words, the moral certainty of signaling is disrupted when even a bit of complexity is added to the model.

Figure 3.3 A partial pooling equilibrium in a 3 × 3 × 3 signaling game. The sender uses b in both states 2 and 3. Upon receipt of b, the receiver mixes between acts 2 and 3.
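A short calculation shows why such an equilibrium counts as only partial communication. As an illustrative assumption, suppose the three states are equiprobable and that state 1 is always met with act 1, while states 2 and 3 both produce signal b, answered with act 2 with probability $x$ and act 3 with probability $1 - x$. The expected payoff is then

$$
\pi = \tfrac{1}{3}\cdot 1 + \tfrac{1}{3}\cdot x + \tfrac{1}{3}\cdot (1 - x) = \tfrac{2}{3},
$$

whatever the value of $x$. Under these assumptions the actors coordinate two times in three, better than they would with no information transfer but short of the payoff of 1 achieved in a signaling system.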

Another, even smaller change can disrupt this certainty. Consider the 2 × 2 × 2 model where one state is more probable than the other. The more common one state is, the smaller the benefits of signaling. (When one state occurs 90 percent of the time, for example, the receiver can be very successful by simply taking the action that is best in that state.) As a result, babbling equilibria, where no information is transferred because sender and receiver always do the same thing, evolve under the replicator dynamics with greater likelihood the more likely one state is (Huttegger, 2007a; Skyrms, 2010b).
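The arithmetic behind this point is simple. Writing $p \geq \tfrac{1}{2}$ for the probability of the more common state (a symbol introduced here for illustration), a receiver who ignores signals and always takes the act suited to that state earns $p$, while a signaling system earns 1. The benefit of signaling over babbling is therefore

$$
1 - p, \qquad \text{e.g. } 1 - 0.9 = 0.1 \text{ when } p = 0.9, \quad \text{versus } 1 - 0.5 = 0.5 \text{ when } p = 0.5.
$$

As $p$ grows, the payoff difference that drives a population away from babbling shrinks accordingly.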

There is a methodological lesson here. Early analyses of ESSs in common interest signaling games, as in Wärneryd (1993), indicated that perfect signaling is expected to evolve. But, as we have just noted, more complete dynamical analyses show the ESS analysis insufficient – it fails to capture the relevance and stability of babbling equilibria and partial pooling equilibria.

There is also a philosophical lesson. The fact that these small changes lead to situations where perfect signaling does not emerge seems to press against the power of these models to help explain the ubiquity of real-world signaling. But we should not be too hasty. Philosophers have looked at changes to these more complex models that increase the probability of signaling. These include the addition of drift and mutation to the models (Huttegger, 2007a; Hofbauer and Huttegger, 2008), correlation between strategies – where signalers tend to meet those of their own type (Skyrms and Pemantle, 2000; Huttegger and Zollman, 2011a; Skyrms, 2010b), and finite populations (Pawlowitsch, 2007; Huttegger et al., 2010). Furthermore, even in cases where evolved communication is not perfect, quite a lot of information may be transferred (Barrett, 2009). In other words, our simple signaling models retain their power to help explain the ubiquity of real-world signaling, even if in many cases perfect signaling does not emerge.

Let’s look at some more variants that extend our understanding of signaling. Sometimes more than two agents communicate at once. Bacterial signaling is usually between multiple senders and multiple receivers, for instance. And vervet monkeys send alarm calls to all nearby group members. Barrett (2007) and Skyrms (2009) consider a model with two senders, one receiver, and four states of the world, where senders each observe different partitions of the states. (That is, one sender can distinguish states 1–2 from states 3–4, and the other can distinguish odd and even states.) These authors show how signaling systems can emerge that perfectly convey information to the receiver.Footnote 19 Skyrms (2009, 2010b) considers further variations, such as models where one sender communicates with two receivers who must take complementary roles, where a receiver listens to multiple senders who are only partially reliable, where actors transmit information in signaling “chains,” and dialogue models where the receiver tells the sender what information they seek and the sender responds. In each case, the ease with which signaling can emerge is confirmed.
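The two-sender case rewards a closer look, since neither sender alone can identify the state. The sketch below does not model the evolutionary process. It only illustrates one endpoint such a process could reach, in which the pair of signals jointly pins down the state. The signal labels (`"low"`, `"high"`, `"odd"`, `"even"`) and function names are hypothetical.

```python
STATES = (1, 2, 3, 4)

def sender_one(state):
    """Can only tell states 1-2 apart from states 3-4."""
    return "low" if state in (1, 2) else "high"

def sender_two(state):
    """Can only tell odd states apart from even states."""
    return "odd" if state % 2 == 1 else "even"

# The receiver's rule: a lookup from the pair of signals to the matching act.
decode = {(sender_one(s), sender_two(s)): s for s in STATES}

for s in STATES:
    pair = (sender_one(s), sender_two(s))
    print(f"state {s}: signals {pair} -> act {decode[pair]}")
```

Each sender transmits one bit, and because the two partitions cut across one another, the two bits together distinguish all four states.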

Some creatures may be limited in the number of signals they can send. Others (like humans) may be able to easily generate a surfeit of terms. In an evolutionary setting, games with too many signals often evolve to outcomes where one state is represented by multiple terms or “synonyms”. In these states, actors coordinate just as well as they would with fewer signals. This is because each signal has one meaning, even if a state may be represented by multiple signals (Pawlowitsch et al., 2008; Skyrms, 2010b).

Without enough signals, actors cannot perfectly coordinate their action with the world. This is because they do not have the expressive power to represent each state individually. For this reason, Skyrms (2010b) describes this sort of situation as an “information bottleneck.”Footnote 20 At the optimal equilibria of such models, actors may either ignore certain states or else categorize multiple states under the same signal. Donaldson et al. (2007) point out that in some cases actors have payoff reasons for choosing some ways of categorizing over others, in which case these ways may be more likely to evolve. For example, while the ideal response to predatory wolves and dogs might be slightly different for a group of monkeys, it still might make sense to use one alarm call for these highly similar predators if monkeys are limited in their number of signals. We will come back to this theme in Section 3.3.
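The bottleneck can be quantified in the simplest case. Assume, for illustration, $N$ equiprobable states, $M < N$ signals, and the basic payoff structure in which only the one matching act succeeds in each state. A receiver who conditions on $M$ signals can take at most $M$ distinct acts, each of which is correct in exactly one state, so the expected payoff satisfies

$$
\pi \leq \frac{M}{N}, \qquad \text{e.g. } \pi \leq \tfrac{2}{3} \text{ for } N = 3,\ M = 2.
$$

This bound applies only under the all-or-nothing payoffs assumed here. When acts can be partially successful across similar states, as in the predator example above, grouping states under one signal can do better than simply ignoring some of them.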

One thing actors without enough signals might like to do is to invent new ones – this should help them improve coordination. To add signals, though, involves a change to the structure of the game itself. Skyrms (2010b) uses a different sort of model – one that explicitly represents learning – to explore this possibility. Such models have provided an alternative paradigm to explore the evolution of signaling, one that has often created synergy with evolutionary models. Let us now turn to this paradigm.

3.2.1 Learning, Simulation, and the Emergence of Signaling

The results discussed thus far are from models that assume infinite populations, and change via either natural selection or cultural imitation. There is another set of models that have been widely used by philosophers to investigate signaling that instead represent two agents – a sender and a receiver – who learn to signal over time. It should be clear why such a model is a particularly good one to respond to skeptical worries about conventional meaning. They show that not only can such meaning evolve over time, it can emerge on very short timescales between agents with simple learning rules.

This framework assumes two agents play the signaling game round after round and that over time, they learn via reinforcement learning. This is a type of learning that uses past successes and failures to weight the probabilities that actors use different strategies in future play. One widely used reinforcement learning rule was introduced by Roth and Erev (1995).Footnote 21 The general idea is that actors increase the likelihood that they take a certain action proportional to the success of that action in the past.
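The general idea can be sketched with an urn-style simulation for the 2 × 2 × 2 game. This is a minimal illustration of basic reinforcement in this spirit, not a faithful implementation of any particular published rule: it assumes equiprobable states, initial weights of 1 for every option, and a reward of 1 added to the options just used whenever the act matches the state. The function name `roth_erev` and its parameters are illustrative.

```python
import random

def roth_erev(rounds, seed=0):
    """Simulate a sender and receiver learning by simple reinforcement."""
    rng = random.Random(seed)
    # sender_urns[state][signal] and receiver_urns[signal][act] hold the
    # accumulated weights; every option starts with weight 1.
    sender_urns = [[1.0, 1.0], [1.0, 1.0]]
    receiver_urns = [[1.0, 1.0], [1.0, 1.0]]
    successes = 0
    for _ in range(rounds):
        state = rng.randrange(2)  # Nature picks a state at random
        # Each agent chooses with probability proportional to past success.
        signal = rng.choices((0, 1), weights=sender_urns[state])[0]
        act = rng.choices((0, 1), weights=receiver_urns[signal])[0]
        if act == state:
            # Reinforce exactly the choices that led to the success.
            sender_urns[state][signal] += 1.0
            receiver_urns[signal][act] += 1.0
            successes += 1
    return sender_urns, receiver_urns, successes

sender, receiver, wins = roth_erev(5000)
print("success rate:", wins / 5000)
print("sender weights:", sender)
print("receiver weights:", receiver)
```

Early on, choices are close to random, but each success makes the same state-signal and signal-act pairings more likely to recur, so the weights tend to concentrate on one of the two signaling systems.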

Argiento et al. (2009) prove that actors in the 2 × 2 × 2 reinforcement learning model always learn signaling systems. In other words, the lesson about moral certainty transfers to the learning case. There is something especially nice about this result, because the learning dynamics are so simple. Any critter capable of performing successful actions with increasing likelihood will be able to learn to signal. Once again, models make clear that meaning is easy to get.

Many of the other results from evolutionary models transfer to these learning models too. Barrett (2009) and Argiento et al. (2009), for example, show that in N × N × N games the emergence of partial pooling equilibria becomes increasingly likely as N increases. Argiento et al. (2009) confirm that babbling equilibria emerge in games where states are not equiprobable, that synonyms arise and persist in games with too many signals, and that categories typically appear in games with too few.

One thing the reinforcement learning framework does is to provide a robustness check to the evolutionary models described. Robustness of a result is checked by altering various aspects of a model and seeing whether the results still hold up.Footnote 22 The idea is usually to show that the causal dependencies identified in a model are not highly context-specific. In this case, we see that the emergence of signaling is robust to changes in the population size and changes in the underlying evolutionary process. Furthermore, a host of variations on reinforcement learning yield similar outcomes (Barrett, 2006; Barrett and Zollman, 2009; Skyrms, 2010b; Huttegger and Zollman, 2011b), as do investigations of other sorts of learning rules (Skyrms, 2010b, 2012; Huttegger et al., 2014) and other types of signaling scenarios (Alexander, 2014).Footnote 23

In addition to showing robustness, reinforcement learning models lend themselves to explorations of new possibilities that would be difficult to tackle in infinite population models. As noted, Skyrms (2010b) develops a simple model where actors invent new signals.Footnote 24 One interesting result of this model is that the invention feature seems to prevent the emergence of suboptimal signaling. Instead, actors who start with no meaning and no signals develop highly efficient patterns of behavior. Barrett and Zollman (2009) use the reinforcement framework to incorporate forgetting into evolution and to show that forgetting can actually improve the success of signal learning. So, in sum, we see that this learning framework extends our understanding of signaling both by opening up new possibilities for exploration and undergirding previous discoveries.Footnote 25, Footnote 26

3.3 Applying the Model

We have now seen that a great deal of theoretical progress has been made understanding the Lewis signaling game and the evolution of simple, common interest signaling. Let’s turn to how philosophers of biology have applied the model to deepen our understanding of specific phenomena in the biological world. We will not be able to discuss all the literature that might be relevant. Rather, the goal is to see some examples of what the common interest signaling game can do.

3.3.1 Animal Communication

As Skyrms (1996) points out, birds do it, bees do it, even monkeys in the trees do it – we have noted that signaling is rampant in the animal kingdom. Evolutionary results from signaling games help explain this ubiquity, but can they provide insights into particular cases?

Skyrms considers vervet alarm calls. In this case, signaling is costly – an alarm draws attention right at the moment a dangerous predator is nearby. This means that we should not expect signaling to emerge in the basic Lewis game. But the framework also elucidates what is missing – in many cases, vervets are signaling to their own kin, meaning that altruistic signalers are also creating benefits for other altruistic signalers.Footnote 27 As he shows, if there is even a small amount of correlation between strategies so that the benefits of signaling fall on signalers, communication can emerge.

Donaldson et al. (2007) ask why many animal alarm calls refer to a level of urgency perceived by the speaker, rather than to clear external world referents (such as hawks or snakes). They consider signaling games with fewer signals than states, where there are payoff reasons why some states might be categorized together. As they show, directing successful action, rather than referring to distinct states, is the primary driver of categorization in these games. Thus alarm calls are doing the fitness-enhancing thing by directly referring to the action that needs to be performed.

Barrett (2013a) and Barrett and Skyrms (2017) consider experimental evidence showing that scrub jays and pinyon jays infer transitive relationships based on learned stimuli. (For example, if they learn that blue > red and red > yellow, they are able to infer that blue > yellow.) They use reinforcement learning simulations to consider (1) how organisms might evolve a tendency to linearly order stimuli in the first place and (2) how they might apply this tendency to novel scenarios. The signaling model illuminates how this sort of tendency might emerge and how it might later help organize information from the world. In other words, it gives a how-possibly story about the emergence of transitive inference in animals like jays. (More in a minute on how signaling models can be used to represent cognition.)

3.3.2 Human Language

Signaling games provide a useful framework for exploring human language. There are many features of language that go far beyond the behavior that emerges in highly simplified game theoretic models, but nonetheless, progress can be made by starting with these simple representations.Footnote 28

Payoff Structure, Vagueness, and Ambiguity

Jäger (2007) extends an idea we saw in Donaldson et al. (2007) – that, in many cases, there are payoff reasons why actors categorize together states of the world for the purposes of signaling. He develops a framework, the sim-max game, to explore this idea in greater detail.Footnote 29 Sim-max games are common interest signaling games where (1) actors encounter many states that bear similarity relations to each other, (2) actors have fewer signals than states, and, as a result, (3) actors categorize groups of states together for the purposes of communication. Similarity, in these models, is captured by payoffs. States are typically arrayed in a space – a line or a plane, say – where closer states are more similar in that the same actions are successful for them. Remember the mother and daughter signaling about the weather? In a sim-max game, the states might range from bright sun to a hurricane. Umbrellas would be successful for multiple states in the rainy range and a picnic for multiple states in the sunny range.
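A toy version of a sim-max game shows how similarity payoffs shape categories. The sketch below is only an illustration under stated assumptions: ten states on a line, two signals, acts identified with points on the same line, and a payoff that falls off linearly with the distance between state and act. None of these specifics come from the models cited above. Rather than simulating evolution, it searches all sender strategies for the one that does best when the receiver responds optimally.

```python
from itertools import product

N_STATES = 10              # states 0..9 on a line (hypothetical size)
MAX_DIST = N_STATES - 1

def payoff(state, act):
    """Similarity payoff: highest when act equals state, lower with distance."""
    return MAX_DIST - abs(state - act)

def best_response_total(category):
    """Total payoff if the receiver takes the single best act for a category."""
    return max(sum(payoff(s, act) for s in category) for act in range(N_STATES))

best_total, best_categories = -1, None
# Enumerate sender strategies with two signals. State 0 is fixed to signal 0
# so that merely swapping signal labels is not counted as a new strategy.
for rest in product((0, 1), repeat=N_STATES - 1):
    assignment = (0,) + rest
    categories = [
        frozenset(s for s in range(N_STATES) if assignment[s] == signal)
        for signal in (0, 1)
    ]
    total = sum(best_response_total(c) for c in categories if c)
    if total > best_total:
        best_total, best_categories = total, categories

print("best categories:", [sorted(c) for c in best_categories])
print("total payoff:", best_total, "out of", N_STATES * MAX_DIST)
```

Under these assumptions the best language splits the line into two contiguous blocks, each answered with an act near its middle. The categories are driven by which states can be served by the same act, which is the sense in which similarity is captured by payoffs.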

Several authors have used the model to discuss the emergence of vague terms – those with borderline cases, such as “bald” or “red.” Vague terms provide an evolutionary puzzle. They are ubiquitous, but they are never efficient in the sense that linguistic categories with clear borderlines will always be better at transferring information (Lipman, 2009). Franke et al. (2010) use a sim-max model to show how boundedly rational agents – those who are not able to calculate perfect responses to their partners – might develop vague terms.Footnote 30 O’Connor (2014a) takes a different tack by showing how vagueness in the model, while not itself fitness enhancing, might be the necessary side effect of a learning strategy that is beneficial: generalizing learned lessons to similar, novel scenarios.

O’Connor (2015) also uses the model to address the evolution of ambiguity – the use of one term to apply to multiple states of the world. She proves that whenever it costs actors something to develop and remember signals, optimal languages in sim-max games will involve ambiguity, even though communication would always be improved by adding more signals.Footnote 31 In both of these cases, evolutionary models of signaling help shed light on linguistic traits that are otherwise puzzling.

Compositionality

One of the most important features of human language is compositionality – the fact that words can be composed to create novel meanings. For example, we all can understand the phrase “neon pink dog,” even if we have never encountered this particular string of words.

Barrett (2007) provides a first model, where two agents send signals to a receiver who chooses actions based on the compositions of these signals.Footnote 32 As he shows, meaning and successful action evolve easily in this model. However, Franke (2014, 2016) points out that actors in Barrett’s model do not creatively combine meanings but rather independently develop actions for each combination of signals. He presents a model with a sim-max character, where actors generalize their learning to similar states and signals. As he demonstrates, extremely simple learners in this model can creatively use composed signals to guide action. Steinert-Threlkeld (2016) also provides support for this claim by showing how simple reinforcement learners can learn to compose a negation symbol with other basic symbols. In doing so, they outperform agents who learn only non-compositional signaling strategies. Barrett et al. (2018) develop a more complex model where two senders communicate about two cross-cutting aspects of real-world states, but an “executive sender” also determines whether one or both of the possible signals are communicated. The receiver may then respond with an action appropriate for any of the combined states or just one aspect of the world. In all, the signaling game here provides a framework that helps researchers explore (1) what true compositionality entails, (2) what sorts of benefits it might provide to signalers, and (3) how it might start to emerge in human language.Footnote 33

3.3.3 Signaling in the Body

Within each of our bodies neurons fire, hormones travel through the blood stream, DNA carries genetic information, and our perceptual systems mediate action with respect to inputs from the outside world. Each of these is an example of a process where information of some sort is transferred. Each might be represented and understood via the signaling game. There is an issue, though. When it comes to signaling within the body, certain features of the signaling model may not be present. Can we still apply the Lewisean framework?

Godfrey-Smith (2014) points out that there are many systems throughout the biological world that have partial matches to the signaling game model. He discusses, in particular, the match with two sorts of memory systems – neuronal memories and genetic memory. In the case of neuronal memory, there are clear states experienced by an organism, and a clear moment in which these states are written into synaptic patterns as a signal. However, there is arguably no clear receiver since synaptic weights directly influence future behavior.Footnote 34 Instead, we should think of these as write-activate systems, which have a partial match to the Lewis model. In the case of genetic signaling, various authors have argued that genes present a clear case of information transfer and signaling between generations (Shea, 2007, 2013; Bergstrom and Rosvall, 2011). Godfrey-Smith argues that this is also only a partial match, since there is no clear sender of a genetic signal. He dubs this an evolve-read system. More generally, we can think about many cases as having these sorts of partial matches to the full Lewis model.

In this vein, O’Connor (2014b) uses the sim-max game to model the evolution of perceptual categories. Every animal mediates behavior via perception. In this way, perceptual systems are signals from an animal to itself about the state of the world around them. In O’Connor’s models, a state is a state of the external world, the action is whatever an organism does in response to that state, and the signal consists in mediating perceptual states within the body. As noted, sim-max games tend to evolve so that whatever states may be responded to similarly are categorized together. O’Connor argues that for this reason, we should expect categories to evolve to enhance fitness, not necessarily to track external similarity of stimuli.

Calcott (2014) shows how signaling games can be used to represent gene regulatory networks: systems that start with environmental inputs (such as the presence of a chemical) and use chemical signals to turn genes on and off that help the organism cope with the detected state. Variations of the model involving multiple senders and a receiver who can integrate this information can help explain how gene regulatory networks perform simple computations. Furthermore, the signaling structure induces a highly evolvable system – a new environment might be mapped to a signal to induce a pre-evolved action appropriate for it, for example. In a related project, Planer (2014) uses signaling games to argue that a typical metaphor in biology – that genes execute something like a computer code – is misleading. He draws out disanalogies between genetic expression and the execution of an algorithm. He then argues that genes are better thought of as the senders and receivers of transcription factors.

What we see from these examples is that even when there is not an entirely explicit signaling structure, the signaling game framework as developed by philosophers of biology can be extremely useful. It can help answer questions such as, Do our perceptual categories track the world? How can gene regulatory networks flexibly evolve? And, are genes really like computer programs?

As we have seen in this section, the common interest signaling game first entered philosophy through attempts to answer skeptical worries about linguistic convention. But the model has become a central tool in philosophy of biology, used to elucidate a range of topics. In addition, philosophers have helped develop an extended, robust understanding of the dynamics of signaling evolution. In the next section we will see what happens when we drop a key assumption from the Lewis model: that the interests of signalers always coincide.

4 Conflict of Interest Signaling

The Lewis games of the last section modeled signaling interactions where agents shared interests. Sender and receiver, in every case, agreed about what action should be performed in what state of the world. But what if sender and receiver are not on the same page? Remember the peacock mantis shrimps from the introduction, who would both like to reside in the same cozy burrow, and who use the colors on their meral appendages as signals to help determine who will get to do so? These actors have a clear conflict of interest – each prefers a state where he is the resident of the burrow, and the other is not. There might be some temptation to think, then, that successful communication should not be possible between these shrimp. Why should they transfer information to an opponent? And alternatively, why would they trust an opponent to transfer dependable information when their interests do not line up? Clearly, though, the mantis shrimp do manage to signal, as do many other organisms who have some conflict of interest – predators and prey, potential mates, etc.

We will begin this section with some of the most important insights in the study of conflict of interest signaling. In particular, both biologists and economists have used signaling models to show how signal costs can maintain information transfer when there are partial conflicts of interest. This insight is sometimes known as the costly signaling hypothesis. There are issues with this hypothesis, though. First, empirical studies have not always found the right sorts of costs in real-world species. Second, on the theoretical side, because such costs directly reduce fitness, they seem to pose a problem for evolution.

Philosophers of biology have contributed to these theoretical critiques. But they have also explored ways to circumvent these worries and revamp the costly signaling hypothesis. This section will discuss several ways in which philosophers have done this: (1) by looking at hybrid equilibria – ones with low signal costs and partial information transfer, (2) by showing how communication that is even partially indexical (or inherently trustworthy) can lower signal costs, and (3) by showing that some previous modeling work has overestimated the costs necessary for signaling with kin.

At the end of the section, we will turn to a philosophical consideration in the background of this entire section: what is conflict of interest in a signaling scenario, and how might we measure it? As will become clear, attempts to answer this question press on a previous maxim: that when there is complete conflict of interest, signaling is impossible.

4.1 Costs and Conflict of Interest

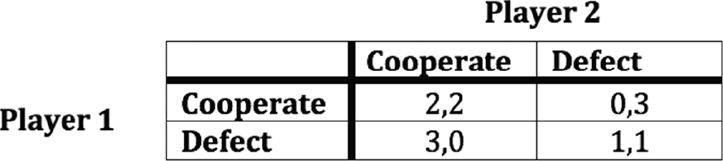

The first thing to observe is that even the mantis shrimp do not have completely opposing interests. In particular, neither of them wants to fight, meaning that instead, they have a partial conflict of interest. The most classic case of partial conflict of interest signaling in biology comes from mate selection. In peacocks, for example, the peahen shares interests with high quality males because they would both like to mate. But while low-quality males would like to mate with her, she would not choose them. High-quality males could send a signal of their quality to facilitate mating, but low-quality males would be expected to immediately co-opt it. Analogous situations crop up in human society. The classic case is one of a business trying to hire. They share interests with high-quality candidates but have a conflict with low-quality ones. How can the business trust signals of quality it receives, given that low-quality candidates want to be hired?

Both biologists and economists figured out a solution to the signaling problem just introduced. In biology, Amotz Zahavi introduced what is called the handicap principle, which was later modeled using signaling games by Alan Grafen (Zahavi, 1975; Grafen, 1990). The insight is that communication can remain honest in partial conflict of interest cases if signals are costly in such a way that high-quality individuals are able to bear the cost, while low-quality individuals are not. In an earlier model, economist Michael Spence showed the same thing for the job market case described above (Spence, 1978). If a college degree is too costly for low-quality candidates to earn, it can be a trustworthy signal of candidate quality. To be precise, in these models, signaling equilibria exist where high-quality candidates/mates signal, and low-quality ones do not because of these costs.

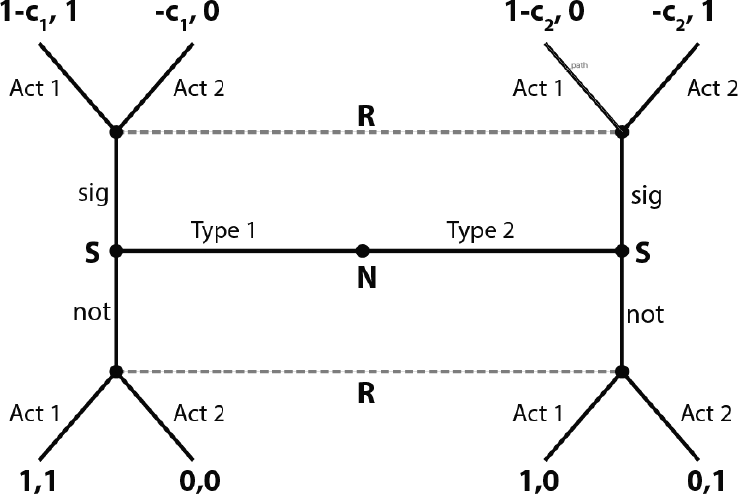

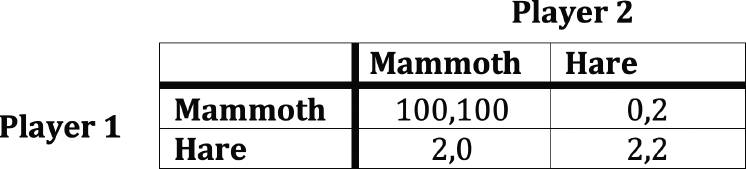

For the purposes of this Element, I will describe a very simple version of a partial conflict of interest signaling game (borrowed from Zollman et al. (2012)). Figure 4.1 shows the extensive form. Nature plays first and selects either a high-type (type 1) or low-type (type 2) sender. Then the sender decides to signal or not. Upon observing whether a signal was sent, the receiver chooses action 1 (mate, or hire) or action 2 (do not mate, do not hire). The sender’s payoffs are listed first – they get a 1 whenever the receiver chooses action 1, minus a cost, c, if they signaled. We assume that c1 < c2, or high-type senders pay less to signal. Receivers get 1 for matching action 1 to a high-type sender or action 2 to a low-type sender. This corresponds, for example, to a peahen mating with a healthy peacock or rejecting an unhealthy one.

Figure 4.1 The extensive form representation of a partial conflict of interest signaling game.

Consider the strategy pairing where high types send, low types do not, and receivers only take action 1 in response to a signal. This is not an equilibrium when costs are zero. This is because if a receiver is taking action 1 in response to the signal, low types will switch to send the signal. Once both high and low types send, there is no longer any information in the signal, and receivers should always take whatever action is best for the more common type.

Suppose instead that c1 < 1 < c2. High types gain a benefit of 1 – c1 from signaling if receivers are then willing to choose action 1 as a result. Low types cannot benefit from signaling, since the cost of the signal is more than the benefit from being chosen as a mate/colleague. Thus there is a costly signaling equilibrium where the signal carries perfect information about the type of the sender, and receivers and high types get a payoff as a result. Grafen (1990) showed that equilibria of this type are ESSs of costly signaling models.
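The reasoning of the last two paragraphs can be checked mechanically. What follows is a minimal sketch in Python, assuming the payoffs of the simple game described above; the function names are illustrative and not drawn from any of the cited models. It fixes the receiver's strategy (action 1 after a signal, action 2 otherwise) and asks whether either sender type would gain by deviating. The receiver's own strategy needs no separate check, because at this profile the signal reveals the sender's type perfectly.

```python
def sender_payoff(signaled, action, cost):
    """Sender gets 1 if the receiver takes action 1, minus the cost if they signaled."""
    benefit = 1.0 if action == 1 else 0.0
    return benefit - (cost if signaled else 0.0)


def is_costly_signaling_equilibrium(c1, c2):
    """Check the profile: high types signal, low types stay silent,
    and the receiver takes action 1 only in response to a signal.

    c1 is the high type's signal cost and c2 the low type's.
    """
    # Receiver's fixed response: action 1 after a signal, action 2 after silence.
    response = {True: 1, False: 2}

    # High types must not prefer silence to signaling.
    high_stays = (sender_payoff(True, response[True], c1)
                  >= sender_payoff(False, response[False], c1))
    # Low types must not prefer signaling to silence.
    low_stays = (sender_payoff(False, response[False], c2)
                 >= sender_payoff(True, response[True], c2))
    return high_stays and low_stays


if __name__ == "__main__":
    print(is_costly_signaling_equilibrium(0.0, 0.0))  # free signals
    print(is_costly_signaling_equilibrium(0.5, 1.5))  # c1 < 1 < c2
```

With free signals the check fails because low types gain by copying the signal. With c1 < 1 < c2 neither type gains by deviating, which is the condition stated above.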

It has been argued that costly signaling equilibria can help explain a variety of cases of real-world signaling. The original application for biology, as noted, is to the sexual selection of costly ornamentation. The peacock’s tail, for example, imposes a cost that only healthy, high-quality peacocks can bear. Contests, such as those between mantis shrimp, are another potential application (Grafen, 1990). If it is costly to develop expensively bright meral spots, this may be a reliable indicator of strength and fitness. Another potential application regards signaling between predator and prey. Behaviors like stotting, where, upon noticing a cheetah, gazelles spring into the air to display their strength, may be costly signals of quality.Footnote 35 And as we will see in Section 4.4, similar models have been applied to chick begging.

There are a few issues, though. In many species, when the actual costs paid by supposedly costly signalers are measured, they come up short. They do not seem to be enough to sustain honest signaling (Caro et al., 1995; Silk et al., 2000; Grose, 2011; Zollman et al., 2012; Zollman, 2013). This has led to widespread criticism of the handicap theory.

There are theoretical issues with the theory as well, which arise because costs are generally bad for evolution. Bergstrom and Lachmann (1997) point out that some costly signaling equilibria are worse, from a payoff standpoint, than not signaling at all. And once one does a full dynamical analysis, it becomes clear that the existence of costly signaling equilibria is insufficient to explain the presence of real-world partial conflict of interest signaling. This is because costly signaling equilibria tend to be unlikely to evolve due to the fitness costs that senders must pay to signal. These equilibria have small basins of attraction under the replicator dynamics (Wagner, 2009; Huttegger and Zollman, 2010; Zollman et al., 2012). Is the costly signaling hypothesis bankrupt? Or does it nonetheless help explain the various phenomena it has been linked to? Let us now turn to further theoretical work that might help circumvent these worries.

4.2 The Hybrid Equilibrium

By the early 1990s, economists had identified another type of equilibrium of costly signaling models (Fudenberg and Tirole, 1991; Gibbons, 1992). In a hybrid equilibrium, costs for signaling are relatively small. But even small costs, as it turns out, are enough to sustain signals that are partially informative.

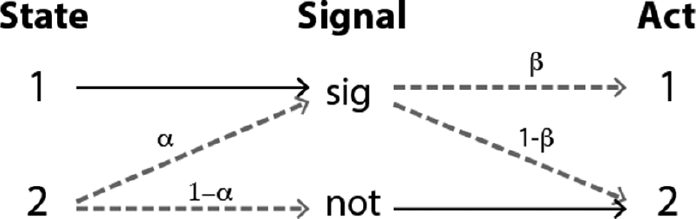

Let’s use the game in Figure 4.1 to fill in the details. Suppose the cost for low types, c2, is constrained such that 0 < c2 < 1, and the cost for high types is less than this, c1 < c2. Under these conditions, there is a hybrid equilibrium where (1) high types always signal, (2) low types signal with some probability α, (3) the receiver always chooses action 2 (i.e., do not mate) if they do not receive the signal, and (4) the receiver chooses action 1 (i.e., mate) with probability β upon receipt of the signal. Figure 4.2 shows this pattern.

Figure 4.2 The hybrid signaling equilibrium.

The values for α and β that sustain this equilibrium depend on the details of the costs and the prevalence of high types in the population. If x is the proportion of high types, α = x/(1 – x) and β = c2 (Zollman et al., 2012). These values are such that low types expect the same payoffs for sending and not sending (and thus are willing to do both with positive probability), and receivers expect the same payoffs from taking either action given the signal (and thus are willing to mix between them). Unlike the costly signaling equilibrium, only partial information is transferred at the hybrid equilibrium. Upon receipt of the signal, the receiver cannot be totally sure which state obtains. But she is more certain of the correct state than she was before observing the signal and can shape her behavior accordingly.
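To see why these particular values hold the equilibrium together, it can help to compute the relevant expected payoffs directly. The following Python sketch does so for the simple game above. The function names and dictionary keys are hypothetical, and the restriction x < 1/2 is an assumption added here: it is what makes α = x/(1 – x) a probability strictly below 1.

```python
def hybrid_equilibrium(x, c1, c2):
    """Return (alpha, beta) from the formulas quoted in the text.

    x: proportion of high types; c1, c2: signal costs for high and low types.
    """
    if not (0 < c2 < 1) or not (c1 < c2):
        raise ValueError("requires 0 < c2 < 1 and c1 < c2")
    if not (0 < x < 0.5):
        # Assumption of this sketch: alpha is a probability below 1 only if x < 1/2.
        raise ValueError("requires 0 < x < 1/2")
    alpha = x / (1 - x)  # probability that a low type signals
    beta = c2            # probability that the receiver takes action 1 after a signal
    return alpha, beta


def expected_payoffs(x, c1, c2):
    """Expected payoffs at the hybrid equilibrium for each relevant choice."""
    alpha, beta = hybrid_equilibrium(x, c1, c2)
    # Receiver's posterior that the sender is a high type, given a signal.
    p_high = x / (x + (1 - x) * alpha)
    return {
        "low_signal": beta - c2,         # chosen with probability beta, pays c2
        "low_silent": 0.0,               # silence is always met with action 2
        "high_signal": beta - c1,        # positive because c1 < c2
        "high_silent": 0.0,
        "receiver_action1": p_high,      # action 1 pays 1 against a high type
        "receiver_action2": 1 - p_high,  # action 2 pays 1 against a low type
        "posterior_high": p_high,
    }


if __name__ == "__main__":
    for name, value in expected_payoffs(x=0.25, c1=0.1, c2=0.4).items():
        print(f"{name}: {value:.3f}")
```

In this example the prior probability of a high type is 0.25, while the posterior after a signal is one half (up to floating-point rounding). The signal therefore carries some information without settling the matter, which is the sense in which information transfer is only partial.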

In some ways, the hybrid equilibrium seems inferior to the costly signaling one – in the latter perfect information is transferred. In the former, high types and receivers are only somewhat able to act on their shared interests. From an evolutionary perspective, though, the hybrid equilibrium proves very significant. Because signaling costs are low, hybrid equilibria are often easier to evolve than costly signaling equilibria in costly signaling models (Wagner, 2013b; Huttegger and Zollman, 2010; Zollman et al., 2012; Kane and Zollman, 2015; Huttegger and Zollman, 2016). This might help explain how partial conflict of interest communication is sustained throughout the biological world. Small costs help maintain information transfer, without creating such an evolutionary burden that communication is not worthwhile. Furthermore, these low costs seem more in line with empirical findings in many cases.Footnote 36

4.3 When Honesty Met Costs: The Pygmalion Game

Hybrid equilibria can evolve and guarantee partial information transfer. There is another solution, though, to the problem posed by the costs of signaling. Philosophers of biology have argued that, in fact, the very setup of the costly signaling game builds into it an unrealistic assumption.

Aside from signal cost, there is another way to guarantee information transfer in partial conflict of interest scenarios. This is for high types to have a feature or signal that is unavailable to low types, or unfakeable. Suppose humans were able to innately read the quality of job market candidates, for instance. There would be no need for expensive college degrees to act as costly signals, because it would be obvious which candidates to hire. A feature that correlates with type in this way is sometimes called an index rather than a signal (Searcy and Nowicki, 2005).Footnote 37

Huttegger et al. (2015) point out that while biologists have traditionally thought of costly signaling and indexical signaling as alternative explanations for communication in partial conflict of interest cases, this distinction is a spurious one. They introduce a costly signaling model called the pygmalion game where, when low types attempt to signal, they do not always succeed. With respect to stotting, this would correspond to fast gazelles having a higher probability of jumping high enough to impress a cheetah than slow ones. In other words, the signal is partially indexical. As they point out, at the extremes, their model reduces to either a traditional costly signaling model – if low and high types are both always able to signal perfectly – or to an index model where only high types are able to send the signal at all.

Between these extremes is an entire regime where costs and honesty trade off. As they show, the harder a signal is to fake, the lower the costs necessary to sustain a costly signaling equilibrium. The easier the signal is to fake, the higher the costs. In these intermediate cases where signal costs are not too high, the signaling equilibria often have large basins of attraction. So if signals are even a bit hard to fake, the costs necessary to sustain full, communicative signaling might not be very high, easing the empirical and theoretical worries described earlier in the section.Footnote 38

4.4 Altruism, Kin Selection, and the Sir Philip Sydney Game

As mentioned earlier, the costly signaling hypothesis may also explain elements of chick begging. Maynard-Smith (1991) introduced the Sir Philip Sydney game to capture this case.Footnote 39 In this model, chicks are needy or healthy with some probability. They may pay a cost to signal (cheep), or else stay silent. A donor, assumed to be a parent, may decide whether to pay a cost to give food conditional on receipt of the signal. If chicks do not receive a donation, their payoff (chance of survival) decreases by some factor that is larger for needy chicks. Notice that this model is analogous to the partial conflict of interest models described thus far, but with a notable difference – healthy and needy chicks do not pay differential costs to signal but instead receive differential benefits.